>> Darko Kirovski: Hello, everyone. It's a huge pleasure and honor for me to welcome Ton Kalker to Microsoft Research. He's a strategic technologist at Hewlett-Packard and an IEEE fellow. He'll talk about semantic coding. Ton, please.

>> Ton Kalker: Thank you. I had a short conversation with Darko this morning. Let me say this is an intellectual exercise. This is not so much anything you can really apply to some important problem yet, or maybe never. Who knows.

It's work that I've done with my colleague, Frans Willems, at the Technical University in Eindhoven. Actually, it started a long time ago, around 2004, and we kept kind of building on the theory. Again, every time as an intellectual exercise. Two years ago, we essentially summarized everything in one single paper.

And so what I want to get out of this talk is that we take another look at Shannon's theory.

And again, I don't -- I'd like to be a Shannon type of person. But again, this refers to an earlier discussion I had with Darko. That's not going to happen. Anyway, based on this theory, at least.

So if you look at Shannon's approach to communication, he said whenever you want to do communication, you really don't care about the semantics of symbols. The only thing you care about is statistics. Statistics is essentially what determines how you do compression, compaction, transmission, whatever. So in this case, we actually want to assign some meaning to the symbols. For some reason, and I'm not going to specify why, suppose you have some reason to attach a meaning to your symbols. What can you do? What do you lose or what do you gain? Doing information theory while attaching meaning to your symbols is what is called semantic coding.

And you can derive a few results from the theory. If they're really relevant or not depends on your perspective. If you think that watermarking is important, you can derive a few theorems. If you think it's not important, well, that's where it stands.

This is already said, it's irrelevant.

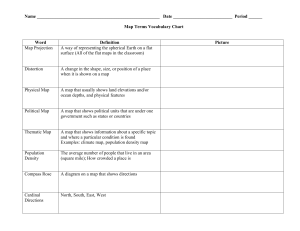

And just take a look at this picture. This is the classical picture. If you do a freshman course in information theory, this is the kind of picture that you would see. We are assuming that all of our symbols are IID. You have a source X, you generate a bunch of symbols from the source X, and you want to compress that source. So you have some kind of encoding mechanism, you get out another string of symbols, Ys, which are not necessarily IID, of course. And what you require is that, given the sequence of Ys, you are able to reconstruct your original sequence X. That's essentially compaction.

Of course, the question then is what is the condition under which you can do this. And Shannon answered that question. He said, well, you can do this if essentially the entropy that you have in Y is larger than the entropy you have in X. So it essentially says you shouldn't lose information.

And the thing is that if you have IID symbols, this whole condition can be brought down to a single letter condition. The original problem here, you work with arbitrary sequences.

The question is when can you do this. Turns out the condition when can you do it boils down to a single letter condition. Simply stating that the entropy of Y is larger than the entropy of X.
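The single letter condition described above can be checked numerically. The following is only a minimal sketch, assuming a small hypothetical source distribution that is not from the talk; the function names `entropy` and `compaction_possible` are illustrative.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def compaction_possible(p_x, p_y):
    """Single letter check: the code symbols Y must carry at least H(X) bits."""
    return entropy(p_x) <= entropy(p_y)


# Hypothetical four-symbol source (assumption for illustration); H(X) = 1.75 bits.
p_x = [0.5, 0.25, 0.125, 0.125]

print(entropy(p_x))
print(compaction_possible(p_x, [0.25] * 4))  # uniform Y over 4 symbols, 2 bits
print(compaction_possible(p_x, [0.5, 0.5]))  # uniform Y over 2 symbols, 1 bit
```

A code alphabet whose symbols carry two bits each passes the check, while a binary alphabet with one bit per symbol does not.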

Of course, we didn't say anything about the symbols X having any type of meaning. They're just an arbitrary alphabet.

Now, the first example of what you could mean by having semantics associated with it would be this case. So we have the same setup: symbols X, somehow being encoded in symbols Y. You want to be able to reconstruct X from Y. And now there's a condition. Suppose for some reason we want to compare X and Y. In some sense, Y should resemble X. We're going to use a distortion condition. We're going to say let's assume that we want to do this, but at the same time we want to make sure that X and Y are distortion constrained. So the symbols in X and the symbols in Y should be close together.

If you have this additional condition, what can you do? Well, there are a few things to look at, of course. You still have the condition on the error rates. Those should be low. Of course, you want to do perfect reconstruction. You can look at the encoding distortion, so you simply take the sequence X, you take the sequence Y, and you look at, say, the average distortion you have per symbol. And you can also look, of course, at the expected distortion, given the statistics on the symbols X and Y.

Now, it turns out that with this side condition, the theorem that you get out is very similar to the original Shannon theorem. It turns out it's a single letter condition. You can do this, the semantic compaction, under the same condition as you had before: you should have sufficient information in Y. So the single letter condition on Y is still that the entropy of Y is larger than the entropy of X. That doesn't really change. But you have another single letter condition, which is that the expected distortion on the symbol level is lower than the given constraint on your encoding distortion, that is, if you look at the strings as a whole.

So essentially when you say, well, I can find the conditions for this setup, again looking at single letter distortions. So somehow, if I put this on top of it, it doesn't make it too much -- too complicated. I can still boil it down to single letter conditions.
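The two single letter conditions for semantic compaction can be evaluated for a candidate test channel. This is a sketch under stated assumptions: a binary source, Hamming distortion, and the example numbers are all hypothetical, and `semantic_compaction_ok` is an illustrative name, not something from the paper.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def semantic_compaction_ok(p_x, test_channel, distortion, max_distortion):
    """Check H(X) <= H(Y) and E[d(X, Y)] <= D for test_channel[x][y] = P(Y=y | X=x)."""
    n_x, n_y = len(p_x), len(test_channel[0])
    p_y = [sum(p_x[x] * test_channel[x][y] for x in range(n_x)) for y in range(n_y)]
    expected_d = sum(
        p_x[x] * test_channel[x][y] * distortion(x, y)
        for x in range(n_x)
        for y in range(n_y)
    )
    return entropy(p_x) <= entropy(p_y) and expected_d <= max_distortion


def hamming(x, y):
    """Distortion 0 if the symbols agree, 1 otherwise."""
    return 0 if x == y else 1


# Hypothetical binary source and test channel (assumptions for illustration).
p_x = [0.9, 0.1]
channel = [[0.9, 0.1], [0.0, 1.0]]  # a 0 is flipped to 1 with probability 0.1

print(semantic_compaction_ok(p_x, channel, hamming, 0.10))
print(semantic_compaction_ok(p_x, channel, hamming, 0.05))
```

With this channel the expected distortion is about 0.09, so the check passes for a distortion budget of 0.10 and fails for 0.05, even though the entropy condition holds in both cases.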

This proof is actually not too complicated. It's quite easy to do if you sit down and work the details out. Your proof would work with typical sequences, and it turns out that this condition, essentially, follows automatically from the standard proof of the classical theory in the Shannon sense.

But for example, what would it mean in a very simple example? If I have Lenna, and I assume that every pixel in Lenna is independent, then you know that per pixel, the entropy is something like 6.9, 7, whatever it is. So it's less than seven bits. And if you assume that your alphabet Y is also a set of pixels, but only, say, the even pixel values, then you could actually compute whether, for a given distortion, it would be possible to compress Lenna using only the even pixel values.

And you would only do a single letter computation. And for example, you can show that I can do this for Lenna from eight pixels to seven pixels -- sorry, from eight bits to seven bits with a distortion. [inaudible] distortion is 7.3. This is nothing amazing. It's just quantization, put in a slightly different context.

>>: Ton, do you mind if I ask you a question?

>> Ton Kalker: No.

>>: It would be fair to say, when you have the original results, the entropy result [inaudible]. In your case, there's probably [inaudible] that you need to add to the entropy because of the distortion.

>> Ton Kalker: Actually, if you go -- this is the typical result. If N goes to infinity, it's a tight bound as well. There's no [inaudible] left.

>>: There's no penalty?

>> Ton Kalker: There's no penalty whatsoever.

>>: That's interesting.

>> Ton Kalker: Actually, if you look at -- and the proof in this case is extremely, almost extremely trivial. Because in the typical proof, which uses typical sequences, you simply measure how the distortion behaves for typical sequences and you see that the epsilon goes to zero.

So the important point to remember is it's a single letter condition. That's the important point.

Although it's a very simple result, actually you can use this, in case you're interested in reversible watermarking, to prove a very fundamental theorem about reversible watermarking. I'm assuming most of you know what watermarking is. I'm assuming most of you know what reversible watermarking is. I'm going to repeat it anyway.

Again, we have a setup where we have a symbol string X. We now have an encoder, and the encoder takes an additional piece of input, which is a message. The message and the string X, the source X, are combined into a new single string, Y, which is your watermarked sequence Y. And what you should be able to do is both extract the message, which is typically the case in watermarking. But in reversible watermarking, you also require that you're able to reconstruct the original signal.

If you look at the literature for reversible watermarking, it actually abounds with all kinds of ad hoc techniques. People rarely look at what you can actually do. What is the capacity limit? What can you achieve given a certain distortion? How many bits can I embed at what given distortion?

So with the setup that we have done before, it's actually quite easy. So the first thing to realize is, well, this, because it seems like we have to reconstruct what goes in, and there's a distortion constraint. So it already looks very similar to the result we had before.

So the only thing that's actually missing is that, well, this is a sequence. This is a single message system. What you have to realize, these messages can always be represented as sequences of sub-messages.

So without losing any generality, we can actually say, well, this "W" can be represented as a sequence of sub-messages.

And by then, we're very close to the situation we already had before, because now I can simply merge these two into a single new alphabet with pairs XW, like this, and then we have exactly the same setup that we had before for compaction, semantic compaction.

Actually, it makes sense. You see we actually have an obvious case where we have a semantic constraint. Because in reversible watermarking, there's always the constraint that the X, which is the original source, and the Y, which is the watermarked source, need to be close together. This is a perceptual constraint on the X and Y. Of course, this distortion constraint doesn't really pay attention to W, so it only pays attention to the second component of your input alphabet.

But so this would be a typical setup. Now, we can -- yes?

>>: What's the practical application of reversible watermarking?

>> Ton Kalker: Well, it's actually being applied for music archives. So if you have high quality music archives and you want to attach metadata to the music files, you always want to be able to restore the original files so the metadata are independently formatted in the data.

>>: What's strange, why don't they just attach metadata to headers?

>> Ton Kalker: You could. The fact is that the systems that deal with headers are more fragile than the way that you actually embed metadata in the content itself, because this header data kind of changes over time, systems change over time, and this is very persistent.

For the same reason, it's also used in medical applications, because they really don't trust the metadata in headers. You're right, if they would have a good, consistent system, do it in headers and don't fiddle with the content itself. I agree. As long as that doesn't happen, that's actually applied in this way. There's no penalty. So you can always restore in this case the original.

So you have the setup. Now, what would the condition be? Well, the condition is that this would be the condition. The entropy of the input source should be smaller than the entropy of the output source. You know the W and X are independent because it's an independent message. This splits the two components. It actually tells you the entropy of W should be smaller than the difference of the entropy of Y and X.
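Written out, the chain of steps just described looks as follows. The notation is an assumption chosen to match the spoken description (message W, source X, watermarked output Y); the slide itself is not part of the transcript.

```latex
\begin{align*}
H(W, X) &\le H(Y)
  && \text{(compaction condition for the merged input alphabet)} \\
H(W) + H(X) &\le H(Y)
  && \text{($W$ and $X$ are independent)} \\
H(W) &\le H(Y) - H(X)
\end{align*}
```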

So the condition is that the maximal number of bits you can store for reversible watermarking into a source X, given the distortion D, is given by this expression. And again, referring to -- there is no epsilon. It's a tight bound. And the maximum, of course, is over all test channels, such that the expected distortion is lower than the constraint D that you're given.
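Reading the expression as the maximum of H(Y) - H(X) over test channels that meet the expected distortion constraint, it can be approximated by brute force for a toy case. The sketch below assumes a binary source with Hamming distortion and a coarse grid over test channels; these choices and the function names are illustrative assumptions, not the method from the talk.

```python
import math


def h2(q):
    """Binary entropy in bits."""
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -q * math.log2(q) - (1 - q) * math.log2(1 - q)


def reversible_capacity_binary(p, max_distortion, steps=200):
    """Grid-search max of H(Y) - H(X) for X ~ Bernoulli(p) under Hamming distortion."""
    best = 0.0
    for i in range(steps + 1):
        a = i / steps  # a = P(Y=1 | X=0)
        for j in range(steps + 1):
            b = j / steps  # b = P(Y=0 | X=1)
            expected_d = (1 - p) * a + p * b
            if expected_d > max_distortion:
                continue  # test channel violates the distortion constraint
            q = p * (1 - b) + (1 - p) * a  # q = P(Y=1)
            best = max(best, h2(q) - h2(p))
    return best


# Hypothetical numbers: a sparse binary source and a 10% distortion budget.
rate = reversible_capacity_binary(p=0.1, max_distortion=0.1)
print(rate)  # embeddable bits per source symbol, up to grid resolution
```

Because the search only visits grid points, the returned value is a lower estimate of the maximum; a finer grid tightens it.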

And although that is an extremely simple result, if you actually look at the literature on reversible watermarking, people hardly realize that this is the case. There are a lot of ad hoc techniques, and people actually don't know how far they are away from what's theoretically possible.

Let's look at another semantic coding scheme. In this case, we're going to consider transmission. It's very close to your classical setup. So forget about this part for now.

You have a source. You do some encoding. Then you go through a channel. And at the end of the channel, you'd like to reconstruct the source. That's the classical setup.

The semantic setup is, well, now also assume that you have a distortion constraint on X and Y. So the symbols that go into the channel actually have a meaning.

The question will be, what can you prove? Well, we know without the constraint what the condition would be. You know that for what goes into the channel, the rate should be lower than the capacity of the channel. If you formulate it in a semantic way, and you have the usual notions of distortions on the strings and the usual expected distortion, it turns out that these are the conditions. Again, these are single letter conditions. This is the classical Shannon condition, which essentially tells you the entropy of X should be lower than the mutual information between Y and Z. That's what you can push through maximally.

And then again, there's a single side condition. And again, it's a single letter condition which essentially tells you that the expected distortion should be lower than the imposed distortion. Yeah?
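For a concrete feel of the two transmission conditions, here is a small numerical sketch. It assumes a binary source, a hypothetical test channel from X to Y, a binary symmetric channel from Y to Z, and Hamming distortion; none of these numbers come from the talk, and the function names are illustrative.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mutual_information(p_in, channel):
    """I(in; out) = H(out) - H(out | in) for channel[i][o] = P(out=o | in=i)."""
    n_in, n_out = len(p_in), len(channel[0])
    p_out = [sum(p_in[i] * channel[i][o] for i in range(n_in)) for o in range(n_out)]
    h_out_given_in = sum(p_in[i] * entropy(channel[i]) for i in range(n_in))
    return entropy(p_out) - h_out_given_in


def semantic_transmission_ok(p_x, test_channel, channel, distortion, max_distortion):
    """Check H(X) <= I(Y; Z) and E[d(X, Y)] <= D."""
    n_x, n_y = len(p_x), len(test_channel[0])
    p_y = [sum(p_x[x] * test_channel[x][y] for x in range(n_x)) for y in range(n_y)]
    expected_d = sum(
        p_x[x] * test_channel[x][y] * distortion(x, y)
        for x in range(n_x)
        for y in range(n_y)
    )
    return entropy(p_x) <= mutual_information(p_y, channel) and expected_d <= max_distortion


def bsc(eps):
    """Binary symmetric channel with crossover probability eps."""
    return [[1 - eps, eps], [eps, 1 - eps]]


def hamming(x, y):
    return 0 if x == y else 1


p_x = [0.9, 0.1]                        # hypothetical source
test_channel = [[0.9, 0.1], [0.0, 1.0]]  # hypothetical map from X to channel input Y

print(semantic_transmission_ok(p_x, test_channel, bsc(0.01), hamming, 0.1))
print(semantic_transmission_ok(p_x, test_channel, bsc(0.10), hamming, 0.1))
```

The distortion condition holds in both calls; only the noisier channel fails, because I(Y;Z) drops below H(X).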

>>: When you say semantic transmission, I expected to see that you distinguish between Lenna and [inaudible].

>> Ton Kalker: Sorry?

>>: What is the semantics component here?

>> Ton Kalker: The semantics component is that the Y symbols now have a meaning. They have a meaning in that they can be compared to the input. So if you look at the data that goes into your channel, they are not arbitrary bits anymore. So suppose this were an image, then what goes into the channel would essentially be another image, and you could inspect that image visually and it would still look like Lenna.

>>: [Inaudible].

>> Ton Kalker: It's some distortion measure. We don't specify what the distortion measure is, but there's some distortion measure. For some reason or another, you want to compare X and Y.

>>: Can you give us an example?

>> Ton Kalker: I gave the example if you want to say transmit Lenna through a channel, you could say, well, eight bits Lenna goes through. What you send through the channel is a seven bits Lenna, for example.

>>: Close enough to Lenna. What if X is Lenna and Y is an orange?

>> Ton Kalker: If Y is?

>>: Orange, fruit.

>> Ton Kalker: I'm not saying what the application is. Suppose you simply require, for whatever reason, that X and Y are comparable in some sense. So Y is an alphabet with a distortion measure that compares to X. I'm not saying why you would do it. I'm saying suppose you would do it. And the only example that I can give right now is the Lenna example, where Lenna is, say, eight bits originally, and the Y symbol that goes into the channel would be Lenna with seven bits per pixel. Then you have a case where X and Y are comparable.

There's a natural notion of how they differ. I'm still not answering your question, I can tell.

>>: In my mind, it is still too close to Shannon. I expect to see something --

>> Ton Kalker: I'm not saying that it's not close to Shannon. The only difference is when Shannon does this, he doesn't look at this situation. He says okay, X goes in. I don't care about Y. There's no condition on distortion whatsoever. The only thing that I'm concerned with is can I get it through the channel and can I reconstruct?

>>: Is this correct? I mean, is this [inaudible]. I mean, with all the source encoders, you definitely want to compare Y and X. Therefore, in the typical Shannon setup, you also have a rate distortion function in which you definitely want to compare the distortion between Y and X.

>> Ton Kalker: Rate distortion typically refers to the case where you don't do a perfect reconstruction of what comes out at the end. That's the typical rate distortion setup. Some sequence X goes in, you do some transmission, you retrieve some symbols at the end, and you want what comes out at the end to be comparable to the input within a certain distortion constraint. That's rate distortion theory.

>>: [inaudible] X, Y, some sort of distortion, right?

>> Ton Kalker: Yeah.

>>: How is that distortion different from -- what is the semantics portion of it?

>> Ton Kalker: As I said before, and probably I didn't clearly -- didn't say it clearly enough. The semantic portion is that it's expressed by this distortion. The symbols that go into the channel, which typically, in a classical Shannon setup, are just arbitrary bits, with no meaning whatsoever.

>>: Is that true?

>> Ton Kalker: Sorry?

>>: Is that true? Is that statement true?

>> Ton Kalker: Yes --

>>: [inaudible] the distribution, which is meaningless to me and another distribution which is very meaningful for me, like tomorrow there will be a war in Georgia, if they have the same distribution for [inaudible] the same, the semantics.

>>: [Inaudible].

>>: Even if they are the same distributions, because they have different semantics, we can do something new.

>> Ton Kalker: Well, maybe then I disappointed your expectations.

>>: You're saying less than that.

>> Ton Kalker: I'm saying much less than that. I'm only saying that the symbols that you typically use, and that go into a channel, or that represent your compacted signal, typically are arbitrary bits with no meaning whatsoever.

The point I'm trying to get across is that if you actually put a condition on those symbols, which are typically arbitrary, you can still essentially do Shannon type of theory, and everything still reduces down to a single letter condition if you have these types of conditions on your encoding mechanisms.

>>: So maybe another way is what he's saying is now from the encoding bits, I can actually reconstruct an approximation of a signal without decoding?

>> Ton Kalker: Without decoding, yes.

>>: And in general Shannon doesn't have that property. The bits tell you nothing. You would have to decode to tell the signal. He's reconstructing an approximation of the signal without decoding.

>> Ton Kalker: And the reconstruction is in the sense of a distortion.

>>: Right, in the sense of a distortion.

>> Ton Kalker: Yes.

>>: Which is different from R-D, right, is what I'm asking, because R-D is distortion between the decoded one --

>> Ton Kalker: Yeah, so you actually have to go through a full decoding cycle and the rate distortion theory will tell you for a given rate what kind of distortion you can decode. I think you said it very well, yeah.

>>: Here there's no distortion between [inaudible].

>> Ton Kalker: No. So this assumption is X and X bar are really the same. So if you do a full decoding, you can restore the original. If you don't do a full decoding, with only, say, through inspection and use your distortion, then you already can do, say, a partial decoding in that sense.

And the amazing thing is, again, I repeat myself, is that this setup, essentially all of the classical Shannon results remain the same, plus an additional single letter condition. So in this --

>>: Quite interesting, because it's contrary to [inaudible].

>> Ton Kalker: There is not -- the penalty is in a very easy condition. It's a single letter condition. Because there's a penalty. You can't do whatever you want, because you do have to satisfy in this case, this condition. So you cannot do everything, but it's easily measurable.

So again, it's the classical result, you cannot push through more than what is [inaudible] information. You have the single letter condition on the distortion.

Again, another example would be where you do semantic compression. That would be a system like this. Now we have two types of distortions. So this refers more to your rate distortion case. Again, we do coding. There's an interpretation of your compressed -- your encoded symbols in terms of distortion, and now we don't require that you restore the original, but you get, after full decoding cycle, you get an approximation of the original. So there are now two types of distortion. It's the encoding distortion, which is the semantic part, and there is the decoding distortion, which is your classical rate distortion part.

So the question is, in this particular case, what do you get?

Again, we have the usual notions of distortions. Distortions on the strings. Expected distortions. So a semantic part and a rate distortion part. And this would be the classical theorem of Shannon. I phrase it a little bit differently than you would probably normally see. But if you think a little bit carefully, this would be the classical rate distortion result that Shannon has presented.

It simply says that the entropy of Y should be larger than the mutual information between X and the pair (Y, V). I'm pretty sure this is not the way you typically look at rate distortion, but that's what it is.

And these distortions, so the rate distortion part is actually encoded in this part. Because this mutual information takes it into account. Now if you do the semantic version of this, you have an additional distortion constraint, which is the semantic part. You essentially get the same result. Again, you get the Shannon type result, which is this guy, plus the semantic part.

So again, it comes down to a single letter side condition; and again, there's no penalty.

There's no action whatsoever.

So essentially what we have done, I've shown you three types of theorems. Compaction, transmission and compression. Actually, these theorems are all related. So compaction is you do encoding, you want to restore. Transmission, you want to push through a channel. And this is with rate distortion. Actually, they're all related to each other.

If I do transmission, but I assume that the channel is trivial, then essentially I have compaction. So if I take this theorem and I simply replace Z with Y, which makes the channel trivial, then I get the compaction result. And if I take the compression result, but then I require that I do full restoration so there's no additional distortion when there's an output, so the V should essentially be replaced by X, I want to restore X instead of V, and you then insert the substitution into the theorem, you get this result.
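The two substitutions can be written out as follows. The notation is an assumption based on the spoken conditions (X source, Y encoded symbols, Z channel output, V approximate reconstruction); the distortion side conditions are left out because the substitutions do not change the encoding distortion condition.

```latex
\begin{align*}
\text{Transmission:}\quad & H(X) \le I(Y;Z)
  \;\xrightarrow{\;Z = Y\;}\;
  H(X) \le I(Y;Y) = H(Y) \\
\text{Compression:}\quad & I(X;Y,V) \le H(Y)
  \;\xrightarrow{\;V = X\;}\;
  I(X;Y,X) = H(X) \le H(Y)
\end{align*}
```

Both substitutions land on the compaction condition, the entropy of Y being at least the entropy of X.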

So essentially, all of these three results are related to each other. For these results we had, up to now, if you look at the references, three different types of proofs.

But this diagram already suggests that maybe there's something more. So is there maybe a more general theorem from which everything can be derived in one step? So can we come up with some kind of theorem that if we do proper substitutions, you get this one, you get this one, and, of course, you also get this one.

And the answer to that question is yes, there is a more general type of theorem, and that's a theorem you typically don't see in information theory. It says okay, there's actually something, the uber theorem, that proves all of these cases.

And this is the, say, the general semantic coding theorem, which says: suppose I have a source X, and I encode that source X into a symbol set U, and then I go through a channel, and then I try to reconstruct, and what I want is not to reconstruct X, but to reconstruct the representation of X in this U space.

What are the conditions under which I can do that? This is the theorem. If I have this special set-up, where I first take X, I represent it in the U space, and then I go through a channel and then I reconstruct the representation in U space, I can do that if this condition holds. And if you are familiar with Gelfand-Pinsker, and you think of U as your auxiliary variable, this is very much like the Gelfand-Pinsker theorem, but now you have a chosen variable instead of one where you have to maximize over U.

And the proof of this theorem is not that hard at all. The left-hand side actually says that the space U should be rich enough to allow mapping from X into the U space, and the right-hand side essentially says that U should be rich enough to allow reconstruction from Z. These are essentially the two conditions. And actually this proof very much follows the Gelfand-Pinsker proof. So this setup can be done.
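The slide with the condition is not part of the transcript. One plausible reading of the spoken description, stated here as an assumption rather than as the theorem from the paper, is a comparison of two mutual informations, with the Gelfand-Pinsker capacity formula shown next to it for comparison (there S is the channel state, Y the channel output, and the maximum runs over the auxiliary variable U and the channel input).

```latex
\begin{align*}
\text{Assumed condition:}\quad & I(X;U) \le I(U;Z) \\
\text{Gelfand-Pinsker capacity:}\quad & C = \max_{p(u,x \mid s)} \bigl[\, I(U;Y) - I(U;S) \,\bigr]
\end{align*}
```

Whether the inequality in the assumed condition is strict is not recoverable from the recording.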

People are staring right now. Yes?

>>: Is there an additional condition about recovering X or --

>> Ton Kalker: No, there's only a condition on recovering the represented X. So you only recover that --

>>: In that case, it's hard to see why the statistics or the probability distribution of X matters at all. I mean, if I just cut the diagram, the first two blocks out --

>> Ton Kalker: The thing is, you don't get the full U. So you're not the first one to ask this question. So the point is if you cut this part out, then if you do, say, what you typically would do, then you would reconstruct more of U. That would be the condition.

But I don't want to reconstruct all of U. I only want to reconstruct the part of U which represents X.

>>: If I had generated a U that did not come from this map, then I don't care --

>> Ton Kalker: Then I don't care about it. So I only care about reconstructing what is inserted in the U space.

>>: It's the subset of the U space you care about.

>> Ton Kalker: Yes.

>>: The ones that came from --

>> Ton Kalker: Came from X, precisely.

>>: Got it.

>> Ton Kalker: That's why we have this bar. This represents how X is represented by U.

It's actually represented by this part and the reconstruction parts. So given Z, I need to go back to this particular portion of U, which is actually represented by this part.

>>: Gotcha.

>>: Mind if I ask you a general question? Are you familiar with the information bottleneck algorithm?

>> Ton Kalker: No I'm not.

>>: It's from machine learning. It's actually very similar. In fact, it's almost identical, except it's phrased in machine learning terms. The information theory perspective is you're doing clustering. And each of your inputs has a distribution, and you want to encode it to some lower bit encoding. So take like English, you have the unigram distribution of words. You want to encode all of English in eight bits as an extreme problem.

You can think of just doing normal Shannon encoding over the unigram distribution, but then there's no guarantee that two symbols, two words which are similar in meaning will get mapped to the same symbol. So what they say is, well, each of these words has a set of features, like the counts of the words that come to the left and right, which characterize the meaning, and then they say they want to come up with the best encoding in the normal Shannon sense of the word, with the constraint that the distortion between each word and each cluster symbol have maximized the mutual information with respect to the side features.

So their distortion function is the mutual information that the reduced symbol at the original word [inaudible] mutual information. I'm just wondering if you knew about that.

>> Ton Kalker: I don't know about it, no.

>>: It's actually really similar. They have a general -- they don't call it distortion. They don't phrase it in all the same ways, but basically the same idea.

>> Ton Kalker: Okay. Well, that's new to me. I didn't know. So I have to read. So I would be interested in pointers to the literature.

>>: Yeah, I can tell you. It's only a handful of papers on it.

>> Ton Kalker: We came from a completely different perspective. So if I take a step back right now, we have now this general, say, reconstructing representation theorem, which is this theorem. The question now is can we actually rederive -- because that's what I wanted to set out -- can we now derive at least these two theorems from this reconstructed representation theorem?

Let's look at this example. This is your compaction. This is the first example that I did. So you compact and you want to reconstruct. How does this follow from the last theorem that I did? So this is the -- we have source, representation, channel, reconstruction.

So how does this map into this and the other way around? Well, if you have this, I have a natural mapping of X -- well, this mapping already exists so this is simply identity and, of course, I have this part. So I can take identity, plus this encoding scheme and put this in this box. So I have a representation in this way.

Then what would be my channel? I'm only interested in, given a pair, X and an encoding of X, the second component, so my channel would be really simple: given a pair, I would only keep the second component. And what would I reconstruct? Well, I need to reconstruct these pairs. So how would I reconstruct? So given Z, that is, given Y, I will simply reconstruct Y, because that's what I got. See, this part here is simply this part, and it's simply [inaudible] so that would be an XX reconstruction. And for the first part, I would use my decoding algorithm, which I have already. If I do the decoding, I will get there.

So this is essentially a perfect setup. From compaction, I can make a reconstructed representation equivalent by just taking these parts and putting them in at the right places. And what I can do then is, of course, well, what would your condition be? I simply repeat the theorem that I had before, with these substitutions, and voila, the compaction theorem follows by just doing the simple substitutions.

So this is essentially what you do. These are the conditions. These would be the derived condition, and it's not too hard to prove that all of these mutual informations and representations boil down to this would simply be the entropy of X, this would be the entropy of Y, and this is the condition.
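The reduction to entropies can be checked numerically. The sketch below assumes the representation is the pair U = (X, Y) and the channel keeps only the second component, Z = Y, as described above; the joint distribution is a hypothetical example and the helper names are illustrative.

```python
import math
from collections import defaultdict


def entropy(dist):
    """Shannon entropy in bits of a distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def marginal(joint, index):
    """Marginal of a joint distribution {(a, b): p} on component `index`."""
    out = defaultdict(float)
    for pair, p in joint.items():
        out[pair[index]] += p
    return out


def mutual_information(joint):
    """I(A; B) = H(A) + H(B) - H(A, B) for joint[(a, b)] = P(a, b)."""
    return entropy(marginal(joint, 0)) + entropy(marginal(joint, 1)) - entropy(joint)


# Hypothetical joint distribution of a source symbol X and its encoded symbol Y.
p_xy = {(0, 0): 0.4, (0, 1): 0.1, (1, 1): 0.2, (1, 2): 0.3}

# Representation U = (X, Y); trivial channel that outputs Z = Y.
joint_x_u = {(x, (x, y)): p for (x, y), p in p_xy.items()}
joint_u_z = {((x, y), y): p for (x, y), p in p_xy.items()}

i_xu = mutual_information(joint_x_u)  # collapses to H(X)
i_uz = mutual_information(joint_u_z)  # collapses to H(Y)
h_x = entropy(marginal(p_xy, 0))
h_y = entropy(marginal(p_xy, 1))

print(i_xu, h_x)
print(i_uz, h_y)
```

The two printed pairs agree up to rounding, which is the substitution step: a condition between the two mutual informations becomes a condition between H(X) and H(Y).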

So again, just, you take this general theorem, and you get this.

I'm not going to do the other ones, but there are similar tricks for the other two.

So this theorem, with the proper substitutions, will actually allow you to reconstruct these two theorems.

So instead of worrying about all of these three separate cases, just prove this and use those simple tricks. And this theory has many more applications, so this is the kind of trick we came up with. Chronologically, we did these kinds of things, say, over a number of years, every now and then. And this is the thing that we proved two years ago, which kind of combined everything together. So this is the ring that holds everything together, the Tolkien approach.

As the last part of the presentation, I'm going to look at one more example that actually doesn't fit in the previous set of theorems, which is a very similar setup as we had before.

We do an encoding, we go through a channel, and we try to reconstruct.

But now there's also the condition that -- sorry, this should be an M. So instead of -- let me change that on the fly right now. So instead of requiring that you reconstruct a full sequence, we're now going to require only reconstruct part of the original sequence.

And if you're familiar with the literature, then you know that's actually a very hard problem. Reconstructing part of the state or an input sequence, many people have worked on that. Many people found that an extremely difficult thing to actually prove any results on. Cover, Gray [phonetic], a number of people worked on it, with only partial results.

What we kind of proved is that in this setup, with the semantic condition, if you represent part of the input signal just by a regular function, not by a general channel, but an actual function, then you can actually prove a theorem for reconstructing part of the state. If you replace this by a channel, we don't have any results yet.

>>: Is it a specific part or arbitrary part?

>> Ton Kalker: Arbitrary part. Just any function will do. And in this particular case, you need an auxiliary random variable U to actually state that result: an auxiliary random variable U with a particular channel and a particular condition that will allow you to do reconstruction. So even if you leave out, say, the semantic part, it would already be interesting in itself. But with the semantic part, it still holds. This is pretty recent. This is what we presented in Vancouver this year.

And again, this is a nice result if you are, for example, interested in watermarking with correlated data. A known problem is this: I have, say, an image, and I have a second image that is correlated with the first image, and I want to embed the correlated second image into the first image. But if the images are correlated, it feels like, okay, I only have to do part of the work, because part of the information of the second image is already in the first image. So how can I exploit that correlation? This theorem actually allows you to exploit that correlation.

Naively, if you think about it, in this case, I have a first signal and a second signal. The second signal is correlated. The first way you could do this is to simply ignore the fact that your two signals are correlated. What you could do is compress the second signal, W, and simply embed that compressed signal into X.

If you do that, it's clearly suboptimal, because you don't exploit the fact that X and W are correlated. You throw that away. You simply compress W on its own. You don't use that correlation.

Well, another thing you could do is, okay, let's use the fact that X and W are correlated. So what you can do is compress W conditioned on X, so you have less entropy. So you should be able to do a better job. And this compressed bit stream is going to be embedded in X. But if you do that, X will be modified into a watermarked signal. Therefore, when you want to do the decoding, you don't have the original available, so you cannot do reconstruction. So this trick doesn't work either.

Another trick would be, again, you look at the conditional entropy. You compress, but now you do a reversible embedding, because if you do reversible embedding, you can reconstruct X. And if you have X again, you can retrieve this part. But if you do reversible embedding, that's also clearly suboptimal, because now you'll also have to spend bits on reconstructing X, and you're not interested in X. You're only interested in W.

So all of these three methods are suboptimal. And again, if you look at the literature, this is what people do. And nobody actually came up with something which -- what can you optimally do? If I have correlated data, how can I do watermarking of the correlated data of one signal into another?
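The gap between the first two naive schemes can be made concrete with a toy calculation. The sketch below is an illustration only: the joint distribution (a uniform binary X and a W that differs from X with probability 0.1) and all function names are assumptions, not taken from the talk. It compares the bits per symbol needed to compress W alone, H(W), with the bits needed when X is known, H(W|X).

```python
import math
from collections import defaultdict


def entropy(dist):
    """Shannon entropy in bits of a distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def marginal(joint, axis):
    """Marginalize a joint distribution {(x, w): p} onto one coordinate."""
    out = defaultdict(float)
    for pair, p in joint.items():
        out[pair[axis]] += p
    return dict(out)


def conditional_entropy_w_given_x(joint):
    """H(W|X) = H(X, W) - H(X), by the chain rule."""
    return entropy(joint) - entropy(marginal(joint, 0))


# Hypothetical toy source: X is a fair bit, W equals X except with probability 0.1.
flip = 0.1
joint = {
    (x, w): 0.5 * (flip if x != w else 1 - flip)
    for x in (0, 1)
    for w in (0, 1)
}

h_w = entropy(marginal(joint, 1))                  # cost of compressing W alone
h_w_given_x = conditional_entropy_w_given_x(joint) # cost if X were available

print(f"H(W)   = {h_w:.3f} bits per symbol")
print(f"H(W|X) = {h_w_given_x:.3f} bits per symbol")
```

In this toy case conditioning on X cuts the cost from 1 bit to roughly 0.47 bits per symbol. That is the saving the second scheme hopes for but cannot realize, because the decoder only sees the watermarked signal and not X itself.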

And if you use the theorem that we did before, then you can actually do this much better if you use, again, an auxiliary variable U with an associated coding scheme, if this condition holds.

And it turned out that this result was actually already known in a different form in the literature. It was a result by Young Soon [phonetic] in 2006, where they essentially proved the same result, and this was their condition.

But if you look at this proof, it essentially takes a thesis to do this proof. It was the thesis by Young to prove this result. We essentially proved the same result in four pages using very classical methods. Moreover, we have a somewhat stronger result. It is stronger in how you are actually allowed to do the distortions. So in that sense, this theorem's nice.

There are still open questions. So, for example, if you look at the specific case, you want to know how well this system behaves. So you want to say something about the embedding capacity of such a system, and it turns out that it's a very hard question to ask, because you don't even know what to ask.

So you could say well, this system behaves well because you embed entropy of W bits.

That's kind of fake, because part of W is already in X. It's already in the correlated image. So you don't really want to look at this.

You could say, well, I could look at the difference between the conditional entropy and the actual number of bits I embedded, so the mutual information. But that doesn't really help either, because when you do this, you already introduce a distortion also. You don't take the embedding distortion into account. So it doesn't work either.

Or you could look at this case, where you look at the image itself, X itself, when there's no distortion, and you could look at the entropy of W when there is distortion, when you embed, and look at the difference. This is how you could measure capacity. The problem with this is, although it's probably a better definition, there's no way we can actually make any useful statement about it. We have no way to say what the bound on this number is. So we have no result on this. And so this is still work in progress to see how we can actually define capacity for such a system.

And again, I repeat my executive summary. What we've done is we've looked at coding in the classical Shannon sense and added a semantic component to it, and you can argue about what the applications are. But then as Rico said, what you want to do, you want to do reconstruction but actually looking, actually do a full decoding. And if you apply these results, you can actually make some statements about watermarking systems. For example, you can make an easy statement about reversible watermarking, about what the capacity is, and it actually gives you a very efficient coding scheme.

If you want to do correlated watermarking, you can actually come up with a good correlated watermarking system with an associated coding scheme.

That's my presentation. Thank you.

[applause]

>>: Thank you, Ton. Any questions? I think we have quite a few questions.

>>: I want to ask you, when you get down to reversible watermarking, do you believe that this is a framework that can essentially create a good benchmarking tool? Well, not a benchmarking tool, but essentially be used as a quantifier of how good a specific ad hoc technique is.

>> Ton Kalker: Yes.

>>: It's a relatively easy scheme --

>> Ton Kalker: Yeah. It's not like general robust watermarking, which is much more complicated. This is a very clearly defined objective. This is what you can maximally do. We know the coding scheme. There's no auxiliary variable or anything. It's just straightforward coding. So you know theoretically what you need to do to get there. So if you have a practical system, you can easily compare, now, how far that practical system is away from --

>>: Has anyone looked into what these systems are with respect to --

>> Ton Kalker: Yeah, so there's a report by one of my students, this guy.

>>: Oh, I see.

>> Ton Kalker: And we actually did those comparisons, and you will actually find that all of the systems are far below capacity.

>>: I see.

>> Ton Kalker: It's mostly because all of these existing systems are quite ad hoc. They don't really take into account information theoretic principles at all. They simply deal with bits and location maps. I don't know if you're familiar with the literature, but it's very ad hoc. And essentially what this says is that you don't need to do that. You can just try to find a good code, and it will work.

>>: Okay.

>>: So I was thinking about a possible generalization of this, but I'm not sure how to formalize it. It would be that rather than the encoded signal literally being close to the original signal, you would have two decoding functions: one that is more efficient and produces an approximation of the original signal, and one that is more computationally intensive but produces the original signal. And it seems like that could be useful in practice.

>> Ton Kalker: Could be, yeah, yeah. We didn't think about it that way, but that's another way to look at it.

>>: [inaudible].

>>: Be able to get a result without any penalty, right, because you put an even stronger constraint than --

>> Ton Kalker: Yeah, so you would --

>>: [inaudible].

>>: You're not forcing the signal to approximate the input. You're saying there's some other simpler, potentially, decoding function that gets an approximation of the input, which is a little more relaxed than --

>> Ton Kalker: Yeah, so if you want to do this, I guess you would start with a simple compaction scheme and see if you can actually do that with the simple compaction scheme where you have two encoders. That's probably the easiest one to prove.

>>: I'm just not sure what kind of [inaudible] you would put on --

>>: That's a good point. [inaudible].

>>: Ton, may I ask you, one, if you could bring up the slide where you put the two distortions, the distortion from input to output and from the input to the symbol? Right.

So if we think in terms of classical reconstruction theory, what I'm looking at is the entropy of Y [inaudible] the distortion between X and P. And if you allow the distortion, the allowable distortion between X and Y, to move to infinity, I don't care, it could be anything. The bound, the rate distortion, is the traditional one.

>> Ton Kalker: It's the traditional one, yes.

>>: As you start putting that constraint, then the curve --

>> Ton Kalker: Will shift, yes.

>>: Do you have calculations for the typical distribution, how much it shifts?

>> Ton Kalker: We have calculations for the binary case [inaudible].

>>: One of those references will have --

>> Ton Kalker: Yeah, yeah.

>>: Okay.

>> Ton Kalker: And that's just pure laziness, because binary case is just easy to do.

>>: I see. But maybe with things like [inaudible] sources and stuff --

>> Ton Kalker: Yeah.

>>: It might be possible to do. Or maybe like doing watermarking. [inaudible] on the signal, binary on the message or something like that.

>> Ton Kalker: Yeah, yeah.

>>: Okay.

>> Ton Kalker: So historically, we started out with watermarking, as you might guess, where, of course, you have distortion constraints or you do some kind of watermarking and encoding technique, but there's a natural distortion constraint on the signal that you're encoding. This is how it all started out.

>>: If you don't mind staying on that, when you computed the dual distortion plus rate functions, when you did in the binary examples you looked into, did those functions, did anything strange happen with them, or the results kind of something [inaudible].

>> Ton Kalker: Yeah, well, for example, for the reversible watermarking stuff, I can actually bring up a presentation. Actually, something pretty strange did happen. I have to get -- let me get another presentation. Let me see.

Let's see which one. No, that's not the one. This one. So, for example, with the classical techniques, what everybody does is this: if you measure, say, the distortion for reversible watermarking and the rate that you get for reversible watermarking, typically people work on this line. It's a linear relationship between distortion and rate. This is for the binary case, by the way. If you use clever coding techniques, then actually, this happens. So if you use symmetrical test channels, this is what you get.

And these are actual coding examples, actual codes.

If you actually allow your channels to go asymmetric, you get these kinds of results that the distortion, the rate essentially stays constant up to a point and then falls off.

So these results, which are based on this theory, are completely different from what you currently see in the literature, because in the literature, all of these are around these curves, so it actually shows you that you can do considerably better by using -- I don't know if --

>>: I'm not following something.

>> Ton Kalker: Okay.

>>: This curve is going up. So shouldn't you be going down?

>> Ton Kalker: No. The distortion increases. If your distortion for reversible increases, then your rate goes up.

>>: Less of a constraint?

>> Ton Kalker: Less of a constraint, but --

>>: I'm too wired with the normal coding --

>> Ton Kalker: Yeah, yeah, yeah. So distortion goes up, less constraint. You can embed more bits. If you use --

>>: Embedding bits, that's not total [inaudible].

>> Ton Kalker: Sorry?

>>: This is embedding bits. I was thinking of the Y rates of the channel [inaudible].

>> Ton Kalker: Typically, people do time sharing approaches.

>>: This is nonlinear to [inaudible] to understand [inaudible].

>> Ton Kalker: So, for example, this is the embedding rate in the case of the optimal channel that you would use for embedding. And again, this is a very simple example. Suppose you have a binary sequence, you use Hamming distance, and you want to do reversible watermarking on the binary sequence. Using these simple formulas that I've presented, what it will actually tell you is how to do optimal reversible watermarking for this case. Suppose your zero symbol occurs more often than the one symbol. So it's more zero [inaudible].

The optimal way to do reversible watermarking in this case is actually leave the one symbol alone. Everything that's a 1 should stay a 1, because I have less of them. The only symbols that should change are the zero symbols.

And this is then the rate distortion function that you get. It's constant for high distortions, and then it falls off rapidly for lower distortions. If you find these channels too difficult to work with because these channels are very difficult to find proper codes for, then you would say, okay, let's only work with symmetrical channels. Then you get this result, which is still better than time sharing. And this would be expression for rate distortion.

And for this guy, we actually have codes, because these are symmetrical channels and we know how to do coding for symmetrical channels. But you lose efficiency, because now suddenly, we've also got to [inaudible] ones, which we know we shouldn't in the optimal case. And this, then, is what it looks like.

So you see strange, somewhat counterintuitive results. One of the counterintuitive results is that this region is actually constant. There's just a limit, and then you can actually increase the distortion, but there's nothing more that you can gain in terms of rate.

>>: Is that limit just intrinsic on [inaudible].

>> Ton Kalker: Yeah, yeah.

>>: That makes sense because you can't embed any more than that, right?

>> Ton Kalker: Yes. But the fact that there was this limit, that this limit is here and not there --

>>: Oh, I see, because you reached that limit.

>> Ton Kalker: You reach that limit, because in the Hamming case, your limit, of course, is 0.5. Every bit flips arbitrarily, but you reached the limit in this case at 0.4 for this setting. That is surprising.
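The three curves described in this exchange can be reproduced numerically under some assumptions that are not spelled out in the transcript. The sketch below assumes that the reversible embedding rate at average Hamming distortion D is the largest achievable H(Y) - H(X), that the source is binary with P(X=1) = p < 0.5, and that the time-sharing baseline compresses a fraction of the sequence and fills the freed space with message bits, giving the line 2D(1 - h(p)). The function names and the choice p = 0.1 (one value consistent with the plateau starting at 0.4 mentioned above) are hypothetical.

```python
import math


def h2(p):
    """Binary entropy function in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def rate_z_channel(p, d):
    """Asymmetric test channel: ones are left alone, only zeros are flipped.

    Flipping zeros at average distortion d moves P(Y=1) from p to p + d.
    H(Y) cannot exceed 1 bit, so the rate is constant once p + d reaches 1/2.
    Assumes p < 0.5.
    """
    return h2(min(p + d, 0.5)) - h2(p)


def rate_symmetric(p, d):
    """Symmetric test channel: every symbol is flipped with probability d."""
    d = min(d, 0.5)
    q = p * (1 - d) + (1 - p) * d  # P(Y=1) after a binary symmetric channel
    return h2(q) - h2(p)


def rate_time_sharing(p, d):
    """Assumed baseline: a linear trade-off between distortion and rate."""
    return min(2 * d, 1.0) * (1 - h2(p))


if __name__ == "__main__":
    p = 0.1  # hypothetical source bias
    for d in (0.05, 0.1, 0.2, 0.3, 0.4, 0.45, 0.5):
        print(
            f"D={d:.2f}  z={rate_z_channel(p, d):.3f}  "
            f"sym={rate_symmetric(p, d):.3f}  ts={rate_time_sharing(p, d):.3f}"
        )
```

Under these assumptions the asymmetric curve flattens at D = 0.5 - p, while the symmetric curve keeps rising until D = 0.5 and the time-sharing line stays below both at intermediate distortions.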

>>: The interesting way to phrase that is say I can have [inaudible] distortion, and achieve exactly the maximum [inaudible].

>> Ton Kalker: Yeah, yeah. You don't need infinite distortion; you can always achieve that with a finite one.

>>: Or the maximums.

>> Ton Kalker: Yes, so in the [inaudible] case, they'll say you have to go up to a certain point and it doesn't matter anymore. I'm sure there's something similar out there as well.

Again, those channels would probably be equally difficult as in the simple binary case. A Z-channel is just a very nasty channel. Of course, if you look at symmetrical channels, you don't have that property. They just keep on rising.

So in that sense, yeah, it's -- you can actually get results out that are different from what you expect. Okay?

>>: Ton, thank you so much for doing this talk. Thank you to the audience.