Unified Expectation Maximization Rajhans Samdani Joint work with Ming-Wei Chang (

advertisement

Unified Expectation Maximization

Rajhans Samdani

NAACL 2012,

Montreal

Joint work with

Ming-Wei Chang (Microsoft Research)

and Dan Roth

University of Illinois at Urbana-Champaign

Page 1

Weakly Supervised Learning in NLP

Labeled data is scarce and difficult to obtain

A lot of work on learning with a small amount of labeled data

Expectation Maximization (EM) algorithm is the de facto

standard

More recently: significant work on injecting weak supervision

or domain knowledge via constraints into EM

Constraint-driven Learning (CoDL; Chang et al, 07)

Posterior regularization (PR; Ganchev et al, 10)

Page 2

Weakly Supervised Learning: EM and …?

Several variants of EM exist in the literature: Hard EM

Variants of constrained EM: CoDL and PR

Which version to use: EM (PR) vs hard EM (CoDL)?????

Or is there something better out there?

OUR CONTRIBUTION: a unified framework for EM algorithms,

Unified EM (UEM)

Includes existing EM algorithms

Pick the most suitable EM algorithm in a simple, adaptive, and

principled way

Adapting to data, initialization, and constraints

Page 3

Outline

Background: Expectation Maximization (EM)

EM with constraints

Unified Expectation Maximization (UEM)

Optimization Algorithm for the E-step

Experiments

Page 4

Predicting Structures in NLP

Predict the output or dependent variable y from the space of

allowed outputs Y given input variable x using parameters or

weight vector w

E.g.

predict POS tags given a sentence,

predict word alignments given sentences in two different languages,

predict the entity-relation structure from a document

Prediction expressed as

y* = argmaxy 2 Y P (y | x; w)

Page 5

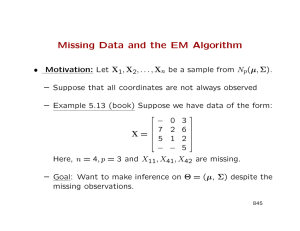

Learning Using EM: a Quick Primer

Given unlabeled data: x, estimate w; hidden: y

for t = 1 … T do

E:step: estimate a posterior distribution, q, over y:

qt(y) = argmin

q(y) , Pt) (y|x;wt) )

qt(y)q KL(

= P (y|x;w

(Neal and Hinton, 99)

Posterior

distribution

Conditional

distribution of

y given w

M:step: estimate the parameters w w.r.t. q:

wt+1 = argmaxw Eq log P (x, y; w)

Page 6

Other Version of EM: Hard EM

Standard EM

E-step:

Not

clear

argmin

KL(q

q

t(y),P

(y|x;wt))

Hard EM

E-step:

*= argmax

which yversion

To

q(y) = ±yy=y*

P(y|x,w)

use!!!

M-step:

M-step:

argmaxw Eq log P (x, y; w)

argmaxw Eq log P (x, y; w)

Page 7

Constrained EM

Domain knowledge-based constraints can help a lot by guiding

unsupervised learning

Constraint-driven Learning (Chang et al, 07),

Posterior Regularization (Ganchev et al, 10),

Generalized Expectation Criterion (Mann & McCallum, 08),

Learning from Measurements (Liang et al, 09)

Constraints are imposed on y (a structured object, {y1,y2…yn})

to specify/restrict the set of allowed structures Y

Page 8

Entity-Relation Prediction: Type Constraints

Per

Loc

Dole ’s wife, Elizabeth , is a resident of N.C.

E1

E2

R12

E3

R23

lives-in

Predict entity types: Per, Loc, Org, etc.

Predict relation types: lives-in, org-based-in, works-for, etc.

Entity-relation type constraints

Page 9

Bilingual Word Alignment: Agreement Constraints

Align words from

sentences in EN with

sentences in FR

Agreement constraints:

alignment from EN-FR

should agree with the

alignment from FR-EN

(Ganchev et al, 10)

Picture: courtesy Lacoste-Julien et al

10

Structured Prediction Constraints Representation

Assume a set of linear constraints:

Y = {y : Uy · b}

A universal representation (Roth and Yih, 07)

Can be relaxed into expectation constraints on posterior

probabilities:

Eq[Uy] · b

Focus on introducing constraints during the E-step

Page 11

Two Versions of Constrained EM

Posterior Regularization

(Ganchev et al, 10)

E-step:

argminNot

(y),P

q KL(qtclear

(y|x;wt))

Eq[Uy] · b

Constraint driven-learning

(Chang et al, 07)

E-step:

= argmaxy P(y|x,w)

whichy*version

To

Uy · b

use!!!

M-step:

argmaxw Eq log P (x, y; w)

M-step:

argmaxw Eq log P (x, y; w)

Page 12

So how do we learn…?

EM (PR) vs hard EM (CODL)

Unclear which version of EM to use (Spitkovsky et al, 10)

This is the initial point of our research

We present a family of EM algorithms which includes

these EM algorithms (and infinitely many new EM

algorithms): Unified Expectation Maximization (UEM)

UEM lets us pick the best EM algorithm in a principled

way

Page 13

Outline

Notation and Expectation Maximization (EM)

Unified Expectation Maximization

Motivation

Formulation and mathematical intuition

Optimization Algorithm for the E-step

Experiments

Page 14

Motivation: Unified Expectation Maximization (UEM)

EM (PR) and hard EM (CODL) differ mostly in the entropy of

the posterior distribution

EM

Hard EM

UEM tunes the entropy of the posterior distribution q and is

parameterized by a single parameter °

Page 15

Unified EM (UEM)

EM (PR) minimizes the KL-Divergence KL(q , P (y|x;w))

KL(q , p) = y q(y) log q(y) – q(y) log p(y)

UEM changes the E-step of standard EM and minimizes a

modified KL divergence KL(q , P (y|x;w); °) where

KL(q , p; °) = y ° q(y) log q(y) – q(y) log p(y)

Changes the entropy of the

posterior

Different ° values ! different EM algorithms

Page 16

Effect of Changing °

KL(q , p; °) = y ° q(y) log q(y) – q(y) log p(y)

q with ° = 1

q with ° = 1

Original

Distribution p

q with ° = 0

q with ° = -1

Page 17

Unifying Existing EM Algorithms

KL(q , p; °) = y ° q(y) log q(y) – q(y) log p(y)

Changing ° values results in different existing EM algorithms

Deterministic

Annealing (Smith and

No

Constraints

With

Constraints

-1

CODL

Hard EM

EM

0

1

°

Eisner, 04; Hofmann, 99)

1

PR

Page 18

Range of °

KL(q , p; °) = y ° q(y) log q(y) – q(y) log p(y)

We focus on tuning ° in the range [0,1]

Hard EM

No

Constraints

With

Constraints

0

LP

approx to

CODL

(New)

Infinitely many

new EM algorithms

°

EM

1

PR

Page 19

Tuning ° in practice

° essentially tunes the entropy of the posterior to better

adapt to data, initialization, constraints, etc.

We tune ° using a small amount of development data over

the range

0 .1 .2 .3

……

1

UEM for arbitrary ° in our range is very easy to implement:

existing EM/PR/hard EM/CODL codes can be easily extended

to implement UEM

Page 20

Outline

Setting up the problem

Unified Expectation Maximization

Solving the constrained E-step

Lagrange dual-based based algorithm

Unification of existing algorithms

Experiments

Page 21

The Constrained E-step

°-Parameterized

KL divergence

Domain knowledge-based linear

constraints

Standard probability

simplex constraints

For ° ¸ 0 ) convex

Page 22

Solving the Constrained E-step for q(y)

1

2

3

Iterate until

convergence

Introduce dual variables ¸ for

each constraint

Sub-gradient ascent on dual

vars with O ¸ / Eq[Uy] – b

Compute q for given ¸

For °>0, compute

With ° !0, unconstrained MAP

inference:

Page 23

Some Properties of our E-step Optimization

We use a dual projected sub-gradient ascent algorithm

(Bertsekas, 99)

Includes inequality constraints

For special instances where two (or more) “easy” problems

are connected via constraints, reduces to dual decomposition

For ° > 0: convex dual decomposition over individual models

(e.g. HMMs) connected via dual variables

° = 1: dual decomposition in posterior regularization

(Ganchev et al, 08)

For °

= 0: Lagrange relaxation/dual decomposition for hard

ILP inference (Koo et al, 10; Rush et al, 11)

Page 24

Outline

Setting up the problem

Introduction to Unified Expectation Maximization

Lagrange dual-based optimization Algorithm for the E-step

Experiments

POS tagging

Entity-Relation Extraction

Word Alignment

Page 25

Experiments: exploring the role of °

Test if tuning ° helps improve the performance over baselines

Study the relation between the quality of initialization and °

(or “hardness” of inference)

Compare against:

Posterior Regularization (PR) corresponds to ° = 1.0

Constraint-driven Learning (CODL) corresponds to ° = -1

Page 26

Unsupervised POS Tagging

Model as first order HMM

Try varying qualities of initialization:

Uniform initialization: initialize with equal probability for all states

Supervised initialization: initialize with parameters trained on varying

amounts of labeled data

Test the “conventional wisdom” that hard EM does well with

good initialization and EM does better with a weak

initialization

Page 27

Unsupervised POS tagging: Different EM instantiations

Relative performance to EM (Gamma=1)

0.9

Hard EM

Initialization with

40-80 examples

0.7

uniform posterior initializer

5 labeled examples initializer

10 labeled examples initializer

20 labeled examples initializer

40 labeled examples initializer

80 labeled examples initializer

0.8

0.6 0.5 0.4

Gamma

Performance relative to EM

0.05

0

-0.05

-0.1

-0.15

1.0

EM

Initialization with

20 examples

Initialization with

10 examples

0.3

Initialization with

5 examples

0.2

Uniform

Initialization

0.1

0.0

°

Page 28

Experiments: Entity-Relation Extraction

Dole ’s wife, Elizabeth , is a resident of N.C.

E1

E2

R12

R23

Extract entity types (e.g. Loc, Org, Per) and relation types

(e.g. Lives-in, Org-based-in, Killed) between pairs of

entities

Add constraints:

E3

Type constraints between entity and relations

Expected count constraints to regularize the counts of ‘None’ relation

Semi-supervised learning with a small amount of labeled data

Page 29

Result on Relations

0.48

0.46

No semi-sup

Macro-f1 scores

0.44

0.42

CODL

0.4

PR

0.38

UEM

0.36

0.34

0.32

0.3

5%

10%

% of labeled data

20%

Page 30

Experiments: Word Alignment

Word alignment from a language S to language T

We try En-Fr and En-Es pairs

We use an HMM-based model with agreement constraints for

word alignment

PR with agreement constraints known to give HUGE

improvements over HMM (Ganchev et al’08; Graca et al’08)

Use our efficient algorithm to decomposes the E-step into

individual HMMs

Page 31

Word Alignment: EN-FR with 10k Unlabeled Data

Alignment Error Rate

25

20

15

EM

PR

CODL

10

UEM

5

0

EN-FR

FR-EN

Page 32

Word Alignment: EN-FR

Alignment Error Rate

25

20

15

EM

PR

CODL

10

UEM

5

0

10k

50k

100k

Page 33

Word Alignment: FR-EN

Alignment Error Rate

25

20

15

EM

PR

CODL

10

UEM

5

0

10k

50k

100k

Page 34

Word Alignment: EN-ES

40

Alignment Error Rate

35

30

EM

PR

25

CODL

UEM

20

15

10

10k

50k

100k

Page 35

Word Alignment: ES-EN

Alignment Error Rate

35

30

25

EM

PR

CODL

20

UEM

15

10

10k

50k

100k

Page 36

Experiments Summary

In different settings, different baselines work better

Entity-Relation extraction: CODL does better than PR

Word Alignment: PR does better than CODL

Unsupervised POS tagging: depends on the initialization

UEM allows us to choose the best algorithm in all of these

cases

Best version of EM: a new version with 0 < ° < 1

Page 37

Unified EM: Summary

UEM generalizes existing variations of EM/constrained EM

UEM provides new EM algorithms parameterized by a single

parameter °

Efficient dual projected subgradient ascent technique to

incorporate constraints into UEM

The best ° corresponds to neither EM (PR) nor hard EM

(CODL) and found through the UEM framework

Tuning ° adaptively changes the entropy of the posterior

UEM is easy to implement: add a few lines of code to existing

EM codes

Questions?

Page 38