General-Purpose Computation on Graphics Hardware David Luebke University of Virginia

advertisement

General-Purpose Computation on

Graphics Hardware

David Luebke

University of Virginia

Course Introduction

• The GPU on commodity video cards has evolved

into an extremely flexible and powerful processor

– Programmability

– Precision

– Power

• We are interested in how to harness that power for

general-purpose computation

Motivation:

Computational Power

• GPUs are fast…

–

–

–

–

3 GHz Pentium4 theoretical: 6 GFLOPS, 5.96 GB/sec peak

GeForceFX 5900 observed: 20 GFLOPS, 25.3 GB/sec peak

GeForce 6800 Ultra observed: 53 GFLOPS, 35.2 GB/sec peak

GeForce 8800 GTX: estimated at 520 GFLOPS, 86.4 GB/sec

peak (reference here)

• That’s almost 100 times faster than a 3 Ghz Pentium4!

• GPUs are getting faster, faster

– CPUs: annual growth 1.5× decade growth 60×

– GPUs: annual growth > 2.0× decade growth > 1000

Courtesy Kurt Akeley,

Ian Buck & Tim Purcell, GPU Gems (see course notes)

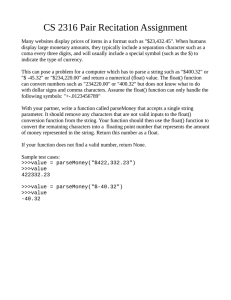

Motivation:

Computational Power

GPU

CPU

Courtesy Naga Govindaraju

Motivation:

Computational Power

GPU

multiplies per second

GFLOPS

NVIDIA NV30, 35, 40

ATI R300, 360, 420

Pentium 4

July 01

Jan 02

July 02

Jan 03

July 03

Jan 04

Courtesy Ian Buck

An Aside:

Computational Power

• Why are GPUs getting faster so fast?

– Arithmetic intensity: the specialized nature of GPUs makes it

easier to use additional transistors for computation not cache

– Economics: multi-billion dollar video game market is a

pressure cooker that drives innovation

Motivation:

Flexible and precise

• Modern GPUs are deeply programmable

– Programmable pixel, vertex, video engines

– Solidifying high-level language support

• Modern GPUs support high precision

– 32 bit floating point throughout the pipeline

– High enough for many (not all) applications

Motivation:

The Potential of GPGPU

• The power and flexibility of GPUs makes them an

attractive platform for general-purpose computation

• Example applications range from in-game physics

simulation to conventional computational science

• Goal: make the inexpensive power of the GPU

available to developers as a sort of computational

coprocessor

The Problem:

Difficult To Use

• GPUs designed for and driven by video games

– Programming model is unusual & tied to computer graphics

– Programming environment is tightly constrained

• Underlying architectures are:

– Inherently parallel

– Rapidly evolving (even in basic feature set!)

– Largely secret

• Can’t simply “port” code written for the CPU!

GPU Fundamentals:

The Graphics Pipeline

Graphics State

Application

Transform

Vertices

(3D)

Rasterizer

Xformed,

Lit

Vertices

(2D)

CPU

Shade

Fragments

(pre-pixels)

GPU

• A simplified graphics pipeline

– Note that pipe widths vary

– Many caches, FIFOs, and so on not shown

Final

pixels

(Color, Depth)

Video

Memory

(Textures)

Render-to-texture

GPU Fundamentals:

The Modern Graphics Pipeline

Graphics State

Application

Vertices

(3D)

Vertex

Vertex

Processor

Processor

Rasterizer

Xformed,

Lit

Vertices

(2D)

CPU

• Programmable vertex

processor!

Fragments

(pre-pixels)

GPU

Pixel

Fragment

Processor

Processor

Final

pixels

(Color, Depth)

Video

Memory

(Textures)

Render-to-texture

• Programmable pixel

processor!

GPU Pipeline:

Transform

• Vertex Processor (multiple operate in parallel)

– Transform from “world space” to “image space”

– Compute per-vertex lighting

GPU Pipeline:

Rasterizer

• Rasterizer

– Convert geometric rep. (vertex) to image rep. (fragment)

• Fragment = image fragment

– Pixel + associated data: color, depth, stencil, etc.

– Interpolate per-vertex quantities across pixels

GPU Pipeline: Shade

• Fragment Processors (multiple in parallel)

– Compute a color for each pixel

– Optionally read colors from textures (images)

Importance of Data Parallelism

• GPU: Each vertex / fragment is independent

– Temporary registers are zeroed

– No static data

– No read-modify-write buffers

• Data parallel processing

– Best for ALU-heavy architectures: GPUs

• Multiple vertex & pixel pipelines

– Hide memory latency (with more computation)

Courtesy of Ian Buck

Arithmetic Intensity

• Lots of ops per word transferred

• GPGPU demands high arithmetic intensity for peak

performance

– Ex: solving systems of linear equations

– Physically-based simulation on lattices

– All-pairs shortest paths

Courtesy of Pat Hanrahan

Data Streams & Kernels

• Streams

– Collection of records requiring similar computation

• Vertex positions, Voxels, FEM cells, etc.

– Provide data parallelism

• Kernels

– Functions applied to each element in stream

• Transforms, PDE, …

– No dependencies between stream elements

• Encourage high arithmetic intensity

Courtesy of Ian Buck

Example: Simulation Grid

• Common GPGPU computation style

– Textures represent computational grids = streams

• Many computations map to grids

–

–

–

–

Matrix algebra

Image & Volume processing

Physical simulation

Global Illumination

• ray tracing, photon mapping,

radiosity

• Non-grid streams can be

mapped to grids

Stream Computation

• Grid Simulation algorithm

– Made up of steps

– Each step updates entire grid

– Must complete before next step can begin

• Grid is a stream, steps are kernels

– Kernel applied to each stream element

Scatter vs. Gather

• Grid communication

– Grid cells share information

Computational Resources Inventory

• Programmable parallel processors

– Vertex & Fragment pipelines

• Rasterizer

– Mostly useful for interpolating addresses (texture coordinates)

and per-vertex constants

• Texture unit

– Read-only memory interface

• Render to texture

– Write-only memory interface

Vertex Processor

• Fully programmable (SIMD / MIMD)

• Processes 4-vectors (RGBA / XYZW)

• Capable of scatter but not gather

– Can change the location of current vertex

– Cannot read info from other vertices

– Can only read a small constant memory

• Future hardware enables gather!

– Vertex textures

Fragment Processor

• Fully programmable (SIMD)

• Processes 4-vectors (RGBA / XYZW)

• Random access memory read (textures)

• Capable of gather but not scatter

– No random access memory writes

– Output address fixed to a specific pixel

• Typically more useful than vertex processor

– More fragment pipelines than vertex pipelines

– RAM read

– Direct output

CPU-GPU Analogies

• CPU programming is familiar

– GPU programming is graphics-centric

• Analogies can aid understanding

CPU-GPU Analogies

CPU

GPU

Stream / Data Array =

Memory Read

=

Texture

Texture Sample

CPU-GPU Analogies

CPU

GPU

Loop body / kernel / algorithm step = Fragment Program

Feedback

• Each algorithm step depend on the

results of previous steps

• Each time step depends on the

results of the previous time step

CPU-GPU Analogies

CPU

GPU

.

.

.

Grid[i][j]= x;

.

.

.

Array Write

=

Render to Texture

GPU Simulation Overview

• Analogies lead to implementation

– Algorithm steps are fragment programs

• Computational “kernels”

– Current state variables stored in textures

– Feedback via render to texture

• One question: how do we invoke

computation?

Invoking Computation

• Must invoke computation at each pixel

– Just draw geometry!

– Most common GPGPU invocation is a full-screen quad

Typical “Grid” Computation

• Initialize “view” (so that pixels:texels::1:1)

glMatrixMode(GL_MODELVIEW);

glLoadIdentity();

glMatrixMode(GL_PROJECTION);

glLoadIdentity();

glOrtho(0, 1, 0, 1, 0, 1);

glViewport(0, 0, outTexResX, outTexResY);

• For each algorithm step:

– Activate render-to-texture

– Setup input textures, fragment program

– Draw a full-screen quad (1x1)

Branching Techniques

• Fragment program branches can be expensive

– No true fragment branching on NV3X & R3X0

• Better to move decisions up the pipeline

–

–

–

–

–

Replace with math

Occlusion Query

Domain decomposition

Z-cull

Pre-computation

Branching with OQ

• Use it for iteration termination

Do

{ // outer loop on CPU

BeginOcclusionQuery

{

// Render with fragment program that

// discards fragments that satisfy

// termination criteria

} EndQuery

} While query returns > 0

• Can be used for subdivision techniques

– Demo later

Domain Decomposition

• Avoid branches where outcome is fixed

– One region is always true, another false

– Separate FPs for each region, no branches

• Example:

boundaries

Z-Cull

• In early pass, modify depth buffer

– Write depth=0 for pixels that should not be modified by later

passes

– Write depth=1 for rest

• Subsequent passes

– Enable depth test (GL_LESS)

– Draw full-screen quad at z=0.5

– Only pixels with previous depth=1 will be processed

• Can also use early stencil test

• Not available on NV3X

– Depth replace disables ZCull

Pre-computation

• Pre-compute anything that will not change every

iteration!

• Example: arbitrary boundaries

– When user draws boundaries, compute texture containing

boundary info for cells

– Reuse that texture until boundaries modified

– Combine with Z-cull for higher performance!

High-level Shading Languages

• Cg, HLSL, & GLSlang

– Cg:

• http://www.nvidia.com/cg

– HLSL:

• http://msdn.microsoft.com/library/default.asp?url=/library/enus/directx9_c/directx/graphics/reference/highlevellanguageshad

ers.asp

– GLSlang:

• http://www.3dlabs.com/support/developer/ogl2/whitepapers/inde

x.html

float3 cPlastic = Cd * (cAmbi + cDiff)

+ Cs*cSpec

….

MULR R0.xyz, R0.xxx, R4.xyxx;

MOVR R5.xyz, -R0.xyzz;

DP3R R3.x, R0.xyzz, R3.xyzz;

…

GPGPU Languages

• Why do we want them?

– Make programming GPUs easier!

• Don’t need to know OpenGL, DirectX, or ATI/NV extensions

• Simplify common operations

• Focus on the algorithm, not on the implementation

• Sh

– University of Waterloo

– http://libsh.sourceforge.net

– http://www.cgl.uwaterloo.ca

• Brook

– Stanford University

– http://brook.sourceforge.net

– http://graphics.stanford.edu

Brook: general purpose streaming

language

• stream programming model

– enforce data parallel computing

• streams

– encourage arithmetic intensity

• kernels

• C with stream extensions

• GPU = streaming coprocessor

system outline

.br

Brook source files

brcc

source to source compiler

brt

Brook run-time library

Brook language

streams

• streams

– collection of records requiring similar computation

• particle positions, voxels, FEM cell, …

float3 positions<200>;

float3 velocityfield<100,100,100>;

– similar to arrays, but…

• index operations disallowed: position[i]

• read/write stream operators

streamRead (positions, p_ptr);

streamWrite (velocityfield, v_ptr);

– encourage data parallelism

Brook language

kernels

• kernels

– functions applied to streams

• similar to for_all construct

kernel void foo (float a<>, float b<>,

out float result<>) {

result = a + b;

}

float a<100>;

float b<100>;

float c<100>;

foo(a,b,c);

for (i=0; i<100; i++)

c[i] = a[i]+b[i];

Brook language

kernels

• kernels

– functions applied to streams

• similar to for_all construct

kernel void foo (float a<>, float b<>,

out float result<>) {

result = a + b;

}

– no dependencies between stream elements

– encourage high arithmetic intensity

Brook language

kernels

• kernels arguments

–

–

–

–

input/output streams

constant parameters

gather streams

iterator streams

kernel void foo (float a<>, float b<>,

float t, float array[],

iter float n<>,

out float result<>) {

result = array[a] + t*b + n;

}

float a<100>;

float b<100>;

float c<100>;

float array<25>

iter float n<100> = iter(0, 10);

foo(a,b,3.2f,array,n,c);

Brook language

kernels

• ray triangle intersection

kernel void krnIntersectTriangle(Ray ray<>, Triangle tris[],

RayState oldraystate<>,

GridTrilist trilist[],

out Hit candidatehit<>) {

float idx, det, inv_det;

float3 edge1, edge2, pvec, tvec, qvec;

if(oldraystate.state.y > 0) {

idx = trilist[oldraystate.state.w].trinum;

edge1 = tris[idx].v1 - tris[idx].v0;

edge2 = tris[idx].v2 - tris[idx].v0;

pvec = cross(ray.d, edge2);

det = dot(edge1, pvec);

inv_det = 1.0f/det;

tvec = ray.o - tris[idx].v0;

candidatehit.data.y = dot( tvec, pvec ) * inv_det;

qvec = cross( tvec, edge1 );

candidatehit.data.z = dot( ray.d, qvec ) * inv_det;

candidatehit.data.x = dot( edge2, qvec ) * inv_det;

candidatehit.data.w = idx;

} else {

candidatehit.data = float4(0,0,0,-1);

}

}

Brook language

reductions

• reductions

– compute single value from a stream

reduce void sum (float a<>,

reduce float r<>)

r += a;

}

float a<100>;

float r;

sum(a,r);

r = a[0];

for (int i=1; i<100; i++)

r += a[i];

Brook language

reductions

• reductions

– associative operations only

(a+b)+c = a+(b+c)

• sum, multiply, max, min, OR, AND, XOR

• matrix multiply

Brook language

reductions

• multi-dimension reductions

– stream “shape” differences resolved by reduce function

reduce void sum (float a<>,

reduce float r<>)

r += a;

}

float a<20>;

float r<5>;

sum(a,r);

for (int i=0; i<5; i++)

r[i] = a[i*4];

for (int j=1; j<4; j++)

r[i] += a[i*4 + j];

Brook language

stream repeat & stride

• kernel arguments of different shape

– resolved by repeat and stride

kernel void foo (float a<>, float b<>,

out float result<>);

float a<20>;

float b<5>;

float c<10>;

foo(a,b,c);

foo(a[0],

foo(a[2],

foo(a[4],

foo(a[6],

foo(a[8],

foo(a[10],

foo(a[12],

foo(a[14],

foo(a[16],

foo(a[18],

b[0],

b[0],

b[1],

b[1],

b[2],

b[2],

b[3],

b[3],

b[4],

b[4],

c[0])

c[1])

c[2])

c[3])

c[4])

c[5])

c[6])

c[7])

c[8])

c[9])

Brook language

matrix vector multiply

kernel void mul (float a<>, float b<>,

out float result<>)

{ result = a*b; }

reduce void sum (float a<>,

reduce float result<>)

{ result += a; }

float

float

float

float

matrix<20,10>;

vector<1, 10>;

tempmv<20,10>;

result<20, 1>;

mul(matrix,vector,tempmv);

sum(tempmv,result);

M

V

V

V

=

T

Brook language

matrix vector multiply

kernel void mul (float a<>, float b<>,

out float result<>)

{ result = a*b; }

reduce void sum (float a<>,

reduce float result<>)

{ result += a; }

float

float

float

float

matrix<20,10>;

vector<1, 10>;

tempmv<20,10>;

result<20, 1>;

mul(matrix,vector,tempmv);

sum(tempmv,result);

T

sum

R

Running Brook

• Compiling .br files

Brook CG Compiler

Version: 0.2 Built: Apr 24 2004, 18:11:59

brcc [-hvndktyAN] [-o prefix] [-w workspace] [-p shader ] foo.br

-h

help (print this message)

-v

verbose (print intermediate generated code)

-n

no codegen (just parse and reemit the input)

-d

debug (print cTool internal state)

-k

keep generated fragment program (in foo.cg)

-t

disable kernel call type checking

-y

emit code for ATI 4-output hardware

-A

enable address virtualization (experimental)

-N

deny support for kernels calling other kernels

-o prefix

prefix prepended to all output files

-w workspace workspace size (16 - 2048, default 1024)

-p shader

cpu / ps20 / fp30 / cpumt (can specify multiple)

Running Brook

• BRT_RUNTIME selects platform

CPU Backend:

CPU Multithreaded Backend:

NVIDIA NV30 Backend:

OpenGL ARB Backend:

DirectX9 Backend:

BRT_RUNTIME = cpu

BRT_RUNTIME = cpumt

BRT_RUNTIME = nv30

BRT_RUNTIME = arb

BRT_RUNTIME = dx9

Runtime

• accessing stream data for graphics aps

– Brook runtime api available in c++ code

– autogenerated .hpp files for brook code

brook::initialize( "dx9", (void*)device );

// Create streams

fluidStream0 = stream::create<float4>( kFluidSize, kFluidSize );

normalStream = stream::create<float3>( kFluidSize, kFluidSize );

// Get a handle to the texture being used by

// the normal stream as a backing store

normalTexture = (IDirect3DTexture9*)

normalStream->getIndexedFieldRenderData(0);

// Call the simulation kernel

simulationKernel( fluidStream0, fluidStream0, controlConstant,

fluidStream1 );

Applications

• Includes lots of sample

applications

–

–

–

–

Ray-tracer

FFT

Image segmentation

Linear algebra

Brook performance

2-3x faster than CPU implementation

ATI Radeon 9800 XT

NVIDIA GeForce 6800

compared against 3GHz P4:

• Intel Math Library

• FFTW

• Custom cached-blocked

segment C code

GPUs still lose against SSE

cache friendly code.

Super-optimizations

• ATLAS

• FFTW

Brook for GPUs

• Release v0.3 available on Sourceforge

• Project Page

– http://graphics.stanford.edu/projects/brook

• Source

– http://www.sourceforge.net/projects/brook

• Over 4K downloads!

• Brook for GPUs: Stream Computing on Graphics Hardware

– Ian Buck, Tim Foley, Daniel Horn, Jeremy Sugerman, Kayvon

Fatahalian, Mike Houston, Pat Hanrahan

Fly-fishing fly images from The English Fly Fishing Shop

GPGPU Examples

• Fluids

• Reaction/Diffusion

• Multigrid

• Tone mapping