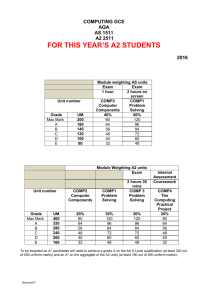

Robust PCA in Stata

Vincenzo Verardi, FUNDP (Namur) and ULB (Brussels), Belgium


E-mail: vverardi@fundp.ac.be. FNRS Associate Researcher.

Outline: Introduction; Robust Covariance Matrix; Robust PCA; Application; Conclusion.

PCA transforms a set of correlated variables into a smaller set of uncorrelated variables, the principal components (PCs). For p random variables $X_1, \dots, X_p$, the goal of PCA is to construct a new set of p axes in the directions of greatest variability.

[Figure: scatter plot of X2 against X1]

Hence, for the first principal component, the goal is to find a linear combination

$$Y = \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_p X_p = \alpha' X$$

such that the variance of Y, $\operatorname{Var}(\alpha' X) = \alpha' \Sigma \alpha$, is maximal under the normalization $\alpha' \alpha = 1$ (without it, the variance could be made arbitrarily large by rescaling α). The direction of α is given by the eigenvector corresponding to the largest eigenvalue of the matrix Σ.

The second vector, orthogonal to the first, is the one with the second-highest variance; it is the eigenvector associated with the second-largest eigenvalue. And so on.

The new variables (PCs) have a variance equal to their corresponding eigenvalue:

$$\operatorname{Var}(Y_i) = \lambda_i, \qquad i = 1, \dots, p.$$

The relative variance explained by each PC is therefore $\lambda_i / \sum_{j=1}^{p} \lambda_j$.

How many PCs should be considered?
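Before turning to that question, the variance-maximization argument can be checked numerically. The following is a minimal Python sketch (standard library only, rather than Stata; the helper names `matvec` and `leading_pc` are hypothetical) that finds the first PC by power iteration, which converges to the eigenvector of the largest eigenvalue when that eigenvalue is strictly dominant. The input is assumed to be the population correlation matrix C (off-diagonal entries .7, .6 and .5) used to simulate data in the illustration that follows.

```python
import math

def matvec(m, v):
    """Product of a square matrix (list of rows) and a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def leading_pc(sigma, iters=1000, tol=1e-12):
    """Power iteration for a symmetric positive semi-definite matrix.

    Returns (lambda_1, alpha_1): the largest eigenvalue and its unit-norm eigenvector,
    i.e. the alpha maximizing alpha' Sigma alpha subject to alpha' alpha = 1.
    """
    p = len(sigma)
    v = [1.0 / math.sqrt(p)] * p                 # unit-norm starting vector
    for _ in range(iters):
        w = matvec(sigma, v)
        norm = math.sqrt(sum(x * x for x in w))
        w = [x / norm for x in w]                # renormalize at every step
        done = max(abs(a - b) for a, b in zip(w, v)) < tol
        v = w
        if done:
            break
    lam = sum(a * b for a, b in zip(v, matvec(sigma, v)))   # Rayleigh quotient = Var(alpha' X)
    return lam, v

# Assumed input: the population correlation matrix C of the simulated example.
C = [[1.0, 0.7, 0.6],
     [0.7, 1.0, 0.5],
     [0.6, 0.5, 1.0]]

lam1, alpha1 = leading_pc(C)
trace = sum(C[i][i] for i in range(len(C)))      # equals the sum of all eigenvalues (here p = 3)
print("lambda_1 =", round(lam1, 4))
print("alpha_1  =", [round(a, 4) for a in alpha1])
print("share    =", round(lam1 / trace, 4))     # lambda_1 / sum_j lambda_j
```

The loadings should be close to the Comp1 eigenvector that `pca` reports for the simulated sample (0.6021, 0.5815, 0.5471); that output differs slightly because it comes from 1,000 random draws rather than from C itself.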
Two rules of thumb are common:

- keep a sufficient number of PCs for the cumulative explained variance to reach at least 60-70% of the total;
- Kaiser criterion: keep the PCs with eigenvalues greater than 1.

PCA is based on the classical covariance matrix, which is sensitive to outliers. Illustration (C is a 3×3 correlation matrix defined beforehand, for instance with `matrix C = (1, .7, .6 \ .7, 1, .5 \ .6, .5, 1)`):

    . set obs 1000
    . drawnorm x1-x3, corr(C)
    . matrix list C

               c1   c2   c3
        r1      1
        r2     .7    1
        r3     .6   .5    1

    . corr x1 x2 x3
    (obs=1000)

                     x1       x2       x3
        x1       1.0000
        x2       0.7097   1.0000
        x3       0.6162   0.5216   1.0000

    . replace x1=100 in 1/100
    (100 real changes made)

    . corr x1 x2 x3
    (obs=1000)

                     x1       x2       x3
        x1       1.0000
        x2       0.0005   1.0000
        x3      -0.0148   0.5216   1.0000

Setting x1 to 100 for only 10% of the observations wipes out its correlations with x2 and x3 (from 0.71 and 0.62 to about zero), while the correlation between x2 and x3 (0.52) is unaffected.

This drawback can easily be overcome by basing the PCA on a robust estimate of the covariance (correlation) matrix. A well-suited method is the Minimum Covariance Determinant (MCD) estimator, which considers all subsets containing h% of the observations (generally 50%) and estimates Σ and μ on the subset whose covariance matrix has the smallest determinant.
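For a toy dataset the MCD definition can be applied literally. The following is a minimal Python sketch (standard library only, two variables, rather than Stata); the ten points are hypothetical, with the last two planted as outliers, and the helper names are made up for this illustration. It enumerates every subset containing 50% of the observations and keeps the one whose covariance matrix has the smallest determinant:

```python
from itertools import combinations
from math import comb

def mean_cov(pts):
    """Mean vector and covariance (divisor n - 1) of 2-D points; covariance stored as (sxx, sxy, syy)."""
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in pts) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in pts) / (n - 1)
    return (mx, my), (sxx, sxy, syy)

def det(s):
    """Determinant of the 2x2 covariance [[sxx, sxy], [sxy, syy]]."""
    sxx, sxy, syy = s
    return sxx * syy - sxy * sxy

def exact_mcd(pts, h):
    """Exhaustive MCD: examine every h-subset, keep the one with the smallest covariance determinant."""
    best = min(combinations(range(len(pts)), h),
               key=lambda idx: det(mean_cov([pts[i] for i in idx])[1]))
    mu, s = mean_cov([pts[i] for i in best])
    return best, mu, s

data = [(0, 0), (1, 1), (2, 2.1), (3, 2.9), (4, 4.2),
        (5, 5), (2, 1.8), (3, 3.1),
        (0, 10), (10, 0)]                      # last two points: planted outliers
h = len(data) // 2                              # 50% of the observations
subset, mu_mcd, s_mcd = exact_mcd(data, h)

print("h-subsets examined:", comb(len(data), h))   # 252
print("MCD subset (indices):", subset)
print("det, all points:", round(det(mean_cov(data)[1]), 4))
print("det, MCD subset:", round(det(s_mcd), 4))
```

Exhaustive enumeration is only practical for tiny samples: here it examines C(10, 5) = 252 subsets, and the count explodes as the sample grows.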
Intuition: the generalized variance proposed by Wilks (1932) is a one-dimensional measure of multidimensional scatter, defined as $GV = \det(\Sigma)$. In the 2×2 case the underlying idea is easy to see:

$$\Sigma = \begin{pmatrix} \sigma_x^2 & \sigma_{xy} \\ \sigma_{xy} & \sigma_y^2 \end{pmatrix}, \qquad \det(\Sigma) = \sigma_x^2 \sigma_y^2 - \sigma_{xy}^2,$$

where $\sigma_x^2 \sigma_y^2$ is the raw bivariate spread and $\sigma_{xy}^2$ is the spread due to covariation.

Remember that MCD considers all subsets containing 50% of the observations. However, if N = 200, the number of subsets to consider would be

$$\binom{200}{100} \approx 9.0549 \times 10^{58}.$$

Solution: use subsampling algorithms. The implemented algorithm is that of Rousseeuw and Van Driessen (1999):

1. p-subsets;
2. concentration (sorting distances);
3. estimation of the robust $\Sigma_{MCD}$;
4. estimation of the robust PCA.

Step 1 (p-subsets): consider a number of subsets containing p + 1 points (where p is the number of variables), large enough that, with high probability, at least one of the subsets contains no outliers.
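The two quantitative statements above, the 2×2 generalized variance and the subset count for N = 200, can be checked in a few lines. A minimal Python sketch (standard library only; the function name is hypothetical):

```python
import math

def generalized_variance_2x2(var_x, var_y, cov_xy):
    """Wilks' generalized variance det(Sigma) for a 2x2 covariance matrix:
    raw bivariate spread var_x * var_y minus the spread due to covariation cov_xy**2."""
    return var_x * var_y - cov_xy ** 2

# Same marginal variances, increasing covariation: the generalized variance shrinks.
for cov in (0.0, 0.5, 0.9):
    print(cov, generalized_variance_2x2(1.0, 1.0, cov))

# Number of 50% subsets an exhaustive MCD search would need for N = 200.
print(f"{math.comb(200, 100):.4e}")   # → 9.0549e+58
```

A strongly correlated cloud therefore has a small determinant even when each variable has unit variance, which is why the subset with the smallest determinant is the most concentrated one.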
Calculate the covariance matrix on each subset and keep the one with the smallest determinant, then do some fine-tuning to get closer to the global solution.

If a fraction a of the dataset is corrupted by outliers, the minimal number N of random p-subsets needed to have a probability $\Pr$ of drawing at least one clean subset can easily be derived from

$$\Pr = 1 - \left(1 - (1 - a)^p\right)^N,$$

whose terms read as follows:

- $1 - a$ is the probability that one random point in the dataset is not an outlier;
- $(1 - a)^p$ is the probability that none of the p random points in a p-subset is an outlier;
- $1 - (1 - a)^p$ is the probability that at least one of the p random points in a p-subset is an outlier;
- $\left(1 - (1 - a)^p\right)^N$ is the probability that each of the N subsets contains at least one outlier, i.e. that all the considered subsets are corrupt;
- $1 - \left(1 - (1 - a)^p\right)^N$ is the probability that there is at least one clean p-subset among the N considered.

Rearranging, we have

$$N^* = \frac{\log(1 - \Pr)}{\log\left(1 - (1 - a)^p\right)},$$

rounded up to the next integer.

The preliminary p-subset step yields preliminary estimates Σ* and μ*. Concentration then proceeds as follows. Calculate the Mahalanobis distances of all individuals using Σ* and μ*, where

$$MD(x_i) = (x_i - \mu)' \, \Sigma^{-1} (x_i - \mu);$$

for Gaussian data, MD is distributed as $\chi^2_p$. Sort the individuals according to their Mahalanobis distances, re-estimate Σ* and μ* using the 50% of observations with the smallest distances, and repeat this step until convergence.

In Stata, Hadi's method (`hadimvo`) is available to estimate a robust covariance matrix. Unfortunately, it is not very robust, for a simple reason: it relies on a non-robust preliminary estimate of the covariance matrix. The procedure is: (1) compute a variant of MD centred on the median MED, $MD(x_i) = (x_i - MED)' \, \Sigma^{-1} (x_i - MED)$; (2) sort the individuals according to MD and use the subset formed by the first p + 1 points to re-estimate μ and Σ; (3) compute MD and sort the data; (4)
check whether the first point outside the subset is an outlier. If it is not, add this point to the subset and repeat steps 3 and 4; otherwise, stop.

The following simulation compares the robust distances from `mcd` (option `outlier`, which creates the variable `Robust_distance`) with the distances from `hadimvo`. Reference lines are drawn at the local macro b, the square root of the 95% quantile of a χ² distribution with 5 degrees of freedom:

    clear
    set obs 1000
    local b=sqrt(invchi2(5,0.95))
    drawnorm x1-x5 e
    replace x1=invnorm(uniform())+5 in 1/100
    mcd x*, outlier
    gen RD=Robust_distance
    hadimvo x*, gen(a b) p(0.5)
    scatter RD b, xline(`b') yline(`b')

[Figure: Fast-MCD robust distance (vertical axis) against Hadi distance (p=.5) (horizontal axis), with reference lines at the cutoff b]

With the same correlation matrix C (1; .7, 1; .6, .5, 1) and no contamination, classical PCA gives:

    . drawnorm x1-x3, corr(C)
    . pca x1-x3

    Principal components/correlation          Number of obs    =     1000
                                              Number of comp.  =        3
                                              Trace            =        3
        Rotation: (unrotated = principal)     Rho              =   1.0000

        Component   Eigenvalue   Difference   Proportion   Cumulative
        Comp1          2.26233      1.79061       0.7541       0.7541
        Comp2          .471721      .205771       0.1572       0.9114
        Comp3           .26595            .       0.0886       1.0000

    Principal components (eigenvectors)

        Variable      Comp1     Comp2     Comp3   Unexplained
        x1           0.6021   -0.2227   -0.7667             0
        x2           0.5815   -0.5358    0.6123             0
        x3           0.5471    0.8145    0.1931             0
The same analysis after contaminating x1:

    . replace x1=100 in 1/100
    (100 real changes made)
    . pca x1-x3

    Principal components/correlation          Number of obs    =     1000
                                              Number of comp.  =        3
                                              Trace            =        3
        Rotation: (unrotated = principal)     Rho              =   1.0000

        Component   Eigenvalue   Difference   Proportion   Cumulative
        Comp1          1.51219      .511435       0.5041       0.5041
        Comp2          1.00075      .513695       0.3336       0.8376
        Comp3          .487058            .       0.1624       1.0000

    Principal components (eigenvectors)

        Variable      Comp1     Comp2     Comp3   Unexplained
        x1          -0.0261    0.9986    0.0463             0
        x2           0.7064    0.0512   -0.7059             0
        x3           0.7073   -0.0143    0.7068             0

The outliers distort the analysis: the first eigenvalue drops from 2.26 to 1.51 (50% instead of 75% of the variance), the first component becomes essentially x2 + x3, and x1 is isolated in a component of its own.
Robust PCA on the same contaminated data, based on the MCD estimate of the covariance matrix:

    . mcd x*
    The number of subsamples to check is 20
    . pcamat covRMCD, n(1000)

    Principal components/correlation          Number of obs    =     1000
                                              Number of comp.  =        3
                                              Trace            =        3
        Rotation: (unrotated = principal)     Rho              =   1.0000

        Component   Eigenvalue   Difference   Proportion   Cumulative
        Comp1          2.24708      1.77368       0.7490       0.7490
        Comp2          .473402      .193882       0.1578       0.9068
        Comp3           .27952            .       0.0932       1.0000

    Principal components (eigenvectors)

        Variable      Comp1     Comp2     Comp3   Unexplained
        x1           0.6045   -0.0883   -0.7917             0
        x2           0.5701   -0.6462    0.5074             0
        x3           0.5564    0.7581    0.3402             0

The results are close to those obtained on the uncontaminated data: the first eigenvalue is 2.25 (75% of the variance) and the loadings are similar.

QUESTION: Can a single indicator accurately sum up research excellence?
GOAL: Determine the underlying factors measured by the variables used in the Shanghai ranking, by means of principal component analysis. The variables are:

- Alumni: alumni who won the Nobel Prize or the Fields Medal;
- Award: current faculty who are Nobel laureates or Fields Medal winners;
- HiCi: highly cited researchers;
- N&S: articles published in Nature and Science;
- PUB: articles indexed in the Science Citation Index-Expanded and the Social Science Citation Index.

Classical PCA on the 150 observations gives:

    . pca scoreonalumni scoreonaward scoreonhici scoreonns scoreonpub

    Principal components/correlation          Number of obs    =      150
                                              Number of comp.  =        5
                                              Trace            =        5
        Rotation: (unrotated = principal)     Rho              =   1.0000

        Component   Eigenvalue   Difference   Proportion   Cumulative
        Comp1          3.40526      2.53266       0.6811       0.6811
        Comp2          .872601      .458157       0.1745       0.8556
        Comp3          .414444      .225411       0.0829       0.9385
        Comp4          .189033     .0703686       0.0378       0.9763
        Comp5          .118665            .       0.0237       1.0000

    Principal components (eigenvectors)

        Variable        Comp1     Comp2     Comp3     Comp4     Comp5   Unexplained
        scoreonalu~i   0.4244   -0.4816    0.5697   -0.5129   -0.0155             0
        scoreonaward   0.4405   -0.5202   -0.1339    0.6991    0.1696             0
        scoreonhici    0.4829    0.2651   -0.4261   -0.3417    0.6310             0
        scoreonns      0.5008    0.1280   -0.3848   -0.1104   -0.7567             0
        scoreonpub     0.3767    0.6409    0.5726    0.3453    0.0161             0
The first component accounts for 68% of the inertia (total variance) and is given by

$$\Phi_1 = 0.42\,\text{Al} + 0.44\,\text{Aw} + 0.48\,\text{HiCi} + 0.50\,\text{NS} + 0.38\,\text{PUB}.$$

| Variable | Corr(Φ1, Xi) |
|---|---|
| Alumni | 0.78 |
| Awards | 0.81 |
| HiCi | 0.89 |
| N&S | 0.92 |
| PUB | 0.70 |
| Total score | 0.99 |

Classical PCA therefore points to a single dominant factor, almost perfectly correlated with the total score.
Robust PCA on the same variables, based on the raw MCD covariance matrix:

    . mcd scoreonalumni scoreonaward scoreonhici scoreonns scoreonpub, raw
    The number of subsamples to check is 20
    . pcamat covMCD, n(150) corr

    Principal components/correlation          Number of obs    =      150
                                              Number of comp.  =        5
                                              Trace            =        5
        Rotation: (unrotated = principal)     Rho              =   1.0000

        Component   Eigenvalue   Difference   Proportion   Cumulative
        Comp1          1.96803      .507974       0.3936       0.3936
        Comp2          1.46006      .624132       0.2920       0.6856
        Comp3          .835928      .426794       0.1672       0.8528
        Comp4          .409133     .0822867       0.0818       0.9346
        Comp5          .326847            .       0.0654       1.0000

    Principal components (eigenvectors)

        Variable        Comp1     Comp2     Comp3     Comp4     Comp5   Unexplained
        scoreonalu~i  -0.4437    0.4991    0.2350    0.6946   -0.1277             0
        scoreonaward  -0.5128    0.4375   -0.0544   -0.5293    0.5123             0
        scoreonhici    0.5322    0.3220   -0.3983    0.3494    0.5765             0
        scoreonns      0.3178    0.6537   -0.1712   -0.3163   -0.5851             0
        scoreonpub     0.3948    0.1690    0.8682   -0.1233    0.2158             0
Two underlying factors are uncovered: Φ1 explains 39% of the inertia and Φ2 explains 29%.

Correlations of Φ1 and Φ2 with Alumni, Awards, HiCi, N&S, PUB and the total score: -0.05, -0.01, 0.74, 0.63, 0.72, 0.99, 0.78, 0.83, 0.88, 0.95, 0.63, 0.47.

In conclusion, classical PCA can be heavily distorted by the presence of outliers.
A robustified version of PCA can be obtained either by basing it on a robust covariance matrix or by removing the multivariate outliers identified through a robust identification method.
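Both routes rest on the MCD machinery described earlier. The following minimal Python sketch (standard library only, two variables, hypothetical simulated data; it is not the code behind Stata's `mcd` or `hadimvo`) chains the pieces. It draws random 3-point subsets (p + 1 with p = 2) in a number taken from the N* formula, with the exponent set to the number of points per subset and an arbitrary 10x safety margin; runs concentration steps until the 50% subset stops changing; and keeps the subset with the smallest determinant. For the second route it rescales the raw MCD covariance so that the median squared distance matches the χ²(2) median, an assumed consistency step not shown in the slides, and drops observations beyond the χ²(2) 97.5% quantile before recomputing the classical estimates.

```python
import math
import random

def mean_cov(pts):
    """Mean and covariance (divisor n - 1) of 2-D points; covariance stored as (sxx, sxy, syy)."""
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in pts) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in pts) / (n - 1)
    return (mx, my), (sxx, sxy, syy)

def det(s):
    sxx, sxy, syy = s
    return sxx * syy - sxy * sxy

def corr(s):
    sxx, sxy, syy = s
    return sxy / math.sqrt(sxx * syy)

def sq_dist(pt, mu, s):
    """Squared Mahalanobis distance (x - mu)' S^-1 (x - mu) in two dimensions."""
    sxx, sxy, syy = s
    dx, dy = pt[0] - mu[0], pt[1] - mu[1]
    return (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det(s)

def n_subsets(pr, a, k):
    """N* = log(1 - Pr) / log(1 - (1 - a)^k), rounded up; k = points drawn per subset."""
    return math.ceil(math.log(1 - pr) / math.log(1 - (1 - a) ** k))

def c_steps(pts, mu, s, h, max_iter=100):
    """Concentration: keep the h points closest to (mu, s), re-estimate, repeat until stable."""
    idx = None
    for _ in range(max_iter):
        order = sorted(range(len(pts)), key=lambda i: sq_dist(pts[i], mu, s))
        new_idx = sorted(order[:h])
        if new_idx == idx:                       # same h-subset twice: converged
            break
        idx = new_idx
        mu, s = mean_cov([pts[i] for i in idx])
    return idx, mu, s

def mcd_sketch(pts, n_trials, seed=0):
    """Random (p + 1)-subsets (3 points, p = 2), C-steps, keep the smallest determinant."""
    rng = random.Random(seed)
    h = len(pts) // 2                              # 50% of the observations
    best = None
    for _ in range(n_trials):
        mu, s = mean_cov(rng.sample(pts, 3))
        if det(s) <= 1e-12:                        # skip (near-)collinear starting subsets
            continue
        idx, mu, s = c_steps(pts, mu, s, h)
        if best is None or det(s) < det(best[2]):
            best = (idx, mu, s)
    return best

# Hypothetical data: 160 clean points (correlation 0.8) plus 40 clustered outliers (a = 20%).
rng = random.Random(1)
data = []
for _ in range(160):
    x = rng.gauss(0, 1)
    data.append((x, 0.8 * x + 0.6 * rng.gauss(0, 1)))
data += [(rng.gauss(5, 0.3), rng.gauss(-5, 0.3)) for _ in range(40)]

n_star = n_subsets(pr=0.99, a=0.2, k=3)                    # 7
idx, mu_r, s_r = mcd_sketch(data, n_trials=10 * n_star)     # 10x margin: an arbitrary choice

# Route 1: s_r is a robust covariance; its eigenvectors would give robust PCs.
# Route 2: rescale s_r so the median squared distance equals the chi2(2) median 2*ln(2)
# (assumed consistency step), then drop points beyond the chi2(2) 97.5% quantile,
# -2*ln(0.025) (closed form valid for 2 degrees of freedom).
med = sorted(sq_dist(pt, mu_r, s_r) for pt in data)[len(data) // 2]
s_cons = tuple(v * med / (2 * math.log(2)) for v in s_r)
kept = [pt for pt in data if sq_dist(pt, mu_r, s_cons) <= -2 * math.log(0.025)]

print("classical corr, all points:", round(corr(mean_cov(data)[1]), 3))
print("raw MCD corr:              ", round(corr(s_r), 3))
print("classical corr, kept points:", round(corr(mean_cov(kept)[1]), 3), "n =", len(kept))
```

Route one corresponds to running `pcamat` on the MCD matrix, as in the Stata examples; route two runs classical PCA on `kept`. With this simulated data both robust correlations should be close to the 0.8 used to generate the clean points, whereas the classical correlation on all 200 points is negative.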