

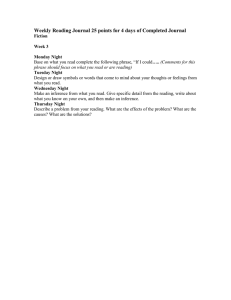

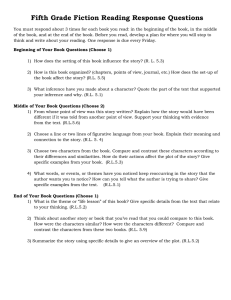

>> Pedro Domingos: We'll start with the motivation. My experience working in machine learning over the last ten years or so, beyond doing basic classification and things like that, is that at the end of the day the hardest part of learning is doing inference. Inference shows up as a subroutine, almost all the time, when you're trying to do powerful kinds of learning. It appears when you want to learn undirected graphical models, like Markov networks; when you want to learn discriminative graphical models, whether they're directed or undirected. It shows up, for example, in EM, when you're trying to learn with incomplete data or latent variables. It shows up in Bayesian learning, because in Bayesian learning, learning is inference. It shows up very much in deep learning, and it shows up most prominently for me in statistical relational learning. When you're trying to model data that is not IID, you actually have the complexity of probabilistic inference combined with the complexity of logical inference.

So this is a big problem, particularly when what we want to do is go beyond the kind of very simple models that people used to build in the past, to building large joint models for domains like natural language and vision, where you want to model the whole pipeline, not just one stage, and do joint inference between all of them. Social networks and activity recognition are other examples, and so is computational biology: we want to model whole metabolic pathways, whole systems, whole cells, not just individual genes or proteins. So inference is a problem: when you're trying to do this on large models, at some point it basically just becomes impossible. Because inference in, for example, graphical models is a #P-complete problem. It's as hard as counting the number of solutions of a SAT formula. So it's actually harder than NP-complete. And not only that, but when we do learning with these things, typically we don't just have to solve the inference problem once.
We have to solve it successively, for example, at each step of gradient descent. So it becomes very, very tough. Now, of course, when inference is intractable what you do is approximate inference. And there are many good approximate methods like MCMC, belief propagation, variational approximations and whatnot. However, those things have a lot of problems. You need to really know how to work with them. But one that's particularly relevant to us is that approximate inference tends to interact badly with parameter optimization. Parameter optimization often uses things like line searches and second-order methods like conjugate gradient and quasi-Newton, and they can go haywire, and often do, when the function that is being optimized is not known exactly, which is exactly what happens when you're doing learning with approximate inference.

The biggest problem, however, is the following. Suppose you had actually learned a very, very accurate model using unlimited computational resources. At performance time, even if the model is accurate, because it's intractable, it effectively becomes inaccurate. Because now you do approximate inference, and you're limited by the errors of the inference, which are often by far the dominant thing. You have bazillions of modes in your space, and you're only going to sample one or two, or your mean field is going to converge to one of them, for example. So this is not a very happy state of affairs. We need to overcome this problem if we really want to do the kind of powerful machine learning that the world needs.

So what can we do? Well, one very attractive solution, at least [inaudible], is just to learn tractable models. If we only learn tractable models, then the inference problem is easy. The problem, however, is that the kinds of tractable models that we've had in the past were insufficiently expressive for most of the applications that we want to do.
There are some useful restricted classes, but for the most part this is not really a solution. The take-home message I would like you to remember from this talk, however, is that the class of tractable models is actually much more expressive than you might think. There's a whole series of tractable models that people have discovered in the last several years, and progress continues. And I would say that at this point we can say fairly confidently that these models are expressive enough for a lot of challenging real-world applications. So what I would like to do here, as time allows, is go through some of these, starting from the simplest to the most advanced. Thin junction trees I will mention to start with, and then large mixture models, arithmetic circuits, feature trees, sum-product networks and tractable Markov logic, which I may not have time to get to, but that's okay.

So the idea in thin junction trees is the following. First of all, as a reminder, a junction tree is the basic structure you use to do inference in a graphical model. If you have a Markov network, you obtain it by triangulating the network, which is making sure that every cycle of length greater than three has a chord. And then the tree width of the network is the size of the largest clique. And generally people think of inference as being exponential in the tree width. And this is the problem. Remember, even if the model doesn't look like it's going to have high tree width, once you do the triangulation, it often will. If your model has low tree width, you're in good shape. One thing you can imagine doing is just learning low tree width models, and then you're good. The problem with learning low tree width models is that typically you can only learn very low tree width models, because both the learning and the inference are exponential in the tree width. So people like Carlos, for example, have worked on this.
But you wind up only learning models of tree width maybe two or three, which is really not enough. For example, if you're doing image processing and it's an image of a thousand by a thousand, the tree width is already a thousand. So this is a very far cry from what we need. However, there are many interesting ideas in this literature that we can pick up and use in more powerful things, which we will do.

Here's something that you can do that's very simple, and you should remember this before we go on to more sophisticated things, which is just to learn a very large mixture model. The beauty of a mixture model is that inference is linear in the size of the model. It's very fast. You just have to go through the mixture components. And if we're just trying to do accurate probability estimation then we don't have to restrict the number of components. We only have to make sure that we don't overfit. So we tried doing this. And we actually found, to our pleasant surprise, when you compare this with Bayesian network structure learning using the state of the art, the WinMine system that was developed here by people like Max Chickering and David Heckerman and some people in this room, that, first of all, the likelihood that you obtain is comparable, which is surprising, because it's a more restrictive class. But we get better query answers than when you use WinMine with Gibbs sampling, which is what a lot of people typically do: Gibbs sampling next to WinMine. But, most importantly, the inference is way faster. It takes a fraction of a second. It's reliable; you don't have to fiddle with parameters and try different things. There's only one way to do it. The problem with this, of course, is the curse of dimensionality. As with all clustering and similarity-based methods, if you're in low dimension, say 10, this is probably fine.
But if you have hundreds or thousands, tens of thousands, or millions of variables, this doesn't work. What's missing is factorization. This is what graphical models do for you: they factorize into smaller pieces, and those pieces are learnable. What can we do? This is where the next key idea comes in. And this is something that people like Rina Dechter and Adnan Darwiche and others have worked on over the years. It's this very important notion that in fact inference is not in general exponential in tree width. The tree width is just an upper bound. It's a worst case. You could have a model with a very large tree width that's still tractable because it might have things like context-specific independence, where two variables are independent given one value of a variable but not given other values. Or determinism: determinism means there are parts of the space that have zero probability, which is very bad for things like MCMC and BP and variational, but actually is really good. It means there's less of the space that you need to sum over, so we should be able to exploit that.

So if the cost of inference is not exponential in tree width, what is it? Well, if you think about it, inference is just doing sums and products. That's all that's going on. It's a bunch of sums and products. So the cost of inference is really just the total number of sums and products that you do. So if you imagine organizing your computation, as we'll see in an illustration shortly, as a DAG where the nodes are sums and products and the leaves are the inputs, the variables and the parameters, then the cost of inference is just the size of that circuit. It's the number of edges in that circuit. So let's look at this in these terms. First of all, what is an arithmetic circuit? An arithmetic circuit is a way to represent the joint distribution of a set of variables as follows. So here's a table of X1, X2 and the probability of each of its states.
What I'll do is I'll introduce indicators for the variables. So the indicator X1 is 1 when X1 is true, the indicator not X1 is 1 when it's false, and so forth. And I have products. This is a DAG. And then I have sums. And at the top I have a sum, and the sum nodes have weighted children, right? So, for example, here .4 is the weight of this child, which is a product of X1 and X2. What happens if you're in this state and you set the indicators in the appropriate way, meaning, for example, X1 to 1 and X2 to 1 and the others to 0, is that this picks out only this product and the result is .4. Okay? And the same for the other states. So basically all the information in here is also represented in here.

But more importantly, if you now want to marginalize, let's say I want to compute the marginal probability that X1 is equal to 1, then what I want to do is sum out X2, meaning I need to sum this and this. I can do that by setting the indicators accordingly: both indicators for X2 get set to 1, because I want to include both types of terms. So think of these indicators not as whether the variable is true or false, but whether you want to include the corresponding terms in your computation. So if I'm summing out a variable I want to include all of its terms, so I set both of these guys to one. Since I want the probability that X1 is one, this indicator is 1 and this one is 0. With those values, what I have is these two products being different from 0, and I get this sum, .4 plus .2, which is the answer. So far so good. But in general the size of the circuit gets to be exponential in the number of variables, so we haven't gained anything. Where we gain something is that if we have determinism, some of this won't be there. If we have context-specific independence, the parts that are repeated, because this is a graph, need appear only once.
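
[Editor's illustration] The indicator trick described above can be sketched in a few lines of Python. This is a minimal sketch, not the speaker's code: the .4 and .2 entries are the ones mentioned in the talk, while the other two table entries (0.1 and 0.3) and all function and variable names are assumptions made so that the example runs.

```python
# Joint table over two binary variables (X1, X2).
# 0.4 and 0.2 come from the talk; 0.1 and 0.3 are assumed so the table sums to 1.
JOINT = {
    (1, 1): 0.4,
    (1, 0): 0.2,
    (0, 1): 0.1,  # assumed value
    (0, 0): 0.3,  # assumed value
}


def evaluate_circuit(x1, not_x1, x2, not_x2):
    """Evaluate the flat circuit: a weighted sum of four products of indicators."""
    products = {
        (1, 1): x1 * x2,
        (1, 0): x1 * not_x2,
        (0, 1): not_x1 * x2,
        (0, 0): not_x1 * not_x2,
    }
    return sum(JOINT[state] * products[state] for state in JOINT)


# A complete state: X1 true, X2 true. Only one product survives.
p_state = evaluate_circuit(x1=1, not_x1=0, x2=1, not_x2=0)

# Marginal P(X1 = 1): both X2 indicators are set to 1, which sums X2 out.
p_x1 = evaluate_circuit(x1=1, not_x1=0, x2=1, not_x2=1)

# Partition function: every indicator is 1, so every term is included.
z = evaluate_circuit(1, 1, 1, 1)

print(p_state, p_x1, z)
```

With these settings the three calls give .4, approximately .6 (that is, .4 plus .2) and approximately 1. The circuit here has one product per state, so it is exponential in the number of variables, which is exactly the limitation the talk goes on to address.
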
So in general we might have a situation where the tree width is large but the size of the circuit that computes all the marginals is still polynomial in the number of variables. And what people like Adnan Darwiche and others did was compile graphical models into arithmetic circuits, the same way you can compile them into a junction tree. You can think of an arithmetic circuit as a sparse junction tree, and do inference on those. But what we would like to do is actually to learn from scratch arithmetic circuits that we know are of bounded size. And one way to do that is we can just use a standard graphical model learner, say, like WinMine, but instead of the score being log likelihood minus the number of parameters, we let the score be the log likelihood minus the circuit size. So instead of regularizing using the model size, we regularize using the cost of inference. And as far as overfitting goes this does the same job, does equally well, but it has a very important consequence: in the first case I could have a model where inference is completely hellish; in this case I'm guaranteed that the inference is always manageable. So we tried this. It works quite well. Again, comparable in likelihood to Bayesian networks, and this was on challenging problems like predicting actions in e-commerce click logs and visits to websites and whatnot. So high-dimensional problems. And the inference, again, compared to doing something like MCMC, is amazing. It takes a fraction of a second and there's nothing to do. When you look at the circuits that we've learned, they do have large tree width.

But this is just a starting point. Let me actually skip over this part and go straight to the next one. Now we can ask ourselves the question: why are we just using a standard Bayesian network learning algorithm, except with a different objective function? What we'd like to do is learn the most general class of tractable models that we can, right? So what is that general class?
And this is the question that we addressed in this paper that we published in UAI last summer. It actually won the best paper award. And we developed this representation that is a generalization of arithmetic circuits called sum-product networks. And the answer to this question of what are the most general conditions under which a DAG of sums and products correctly represents all the marginals of a distribution is two words: completeness and consistency. So let me tell you what those are, and then how we learn sum-product networks.

A sum-product network is just a DAG of sums and products with the variables and parameters at the leaves. As long as these parameters are not negative, you can take any sum-product network and have it represent a normalized distribution: we just say the probability of a state is proportional to the value of the sum-product network for that state. And then you normalize by dividing by the partition function, which is the sum of this over all states. But that's where you have a problem, because the partition function takes exponential time to compute. In general, to compute the probability of some evidence E, I have to compute the value of the network for every state that's compatible with the evidence and add those all up. And this in general will take exponential time, and that's the whole problem. What we would like to be able to do is, instead of this exponential sum, just do one linear-time evaluation of the sum-product network. And then the exponential computation has been replaced by a linear one. The million-dollar question is: when will these two be the same? When can I do this one-pass evaluation of the network and get the same result that I would get from the exponential sum? So we define this as validity. We say an SPN is valid if the linear-time computation is equal to explicitly computing the probability, for every possible evidence that I might have.
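
[Editor's illustration] The validity definition above compares two computations, and that comparison can be written down directly. The following is a minimal sketch under stated assumptions: the tuple encoding of nodes, the helper names and the small example network (a mixture of two product distributions over X1 and X2, with made-up weights) are all hypothetical and not taken from the talk or the paper.

```python
from itertools import product as cartesian

VARIABLES = ["X1", "X2"]

# Hypothetical node encoding, for illustration only:
#   ("leaf", var, value)              indicator that var == value
#   ("sum", [(weight, child), ...])   weighted sum of children
#   ("prod", [child, ...])            product of children


def bernoulli(var, p):
    """A sum node over the two indicators of one binary variable."""
    return ("sum", [(p, ("leaf", var, 1)), (1.0 - p, ("leaf", var, 0))])


# Assumed example network: a two-component mixture of product distributions.
SPN = ("sum", [
    (0.6, ("prod", [bernoulli("X1", 0.9), bernoulli("X2", 0.2)])),
    (0.4, ("prod", [bernoulli("X1", 0.1), bernoulli("X2", 0.7)])),
])


def evaluate(node, indicators):
    """One bottom-up pass over the network."""
    kind = node[0]
    if kind == "leaf":
        return indicators[(node[1], node[2])]
    if kind == "sum":
        return sum(w * evaluate(child, indicators) for w, child in node[1])
    result = 1.0
    for child in node[1]:
        result *= evaluate(child, indicators)
    return result


def indicators_for(evidence):
    """Observed variables get a one-hot setting; unobserved ones get 1 for both values."""
    return {
        (var, value): 1.0 if evidence.get(var, value) == value else 0.0
        for var in VARIABLES
        for value in (0, 1)
    }


def one_pass(evidence):
    """The single evaluation with indicators set from the evidence."""
    return evaluate(SPN, indicators_for(evidence))


def brute_force(evidence):
    """The exponential sum over every complete state compatible with the evidence."""
    total = 0.0
    for values in cartesian((0, 1), repeat=len(VARIABLES)):
        state = dict(zip(VARIABLES, values))
        if all(state[var] == val for var, val in evidence.items()):
            total += evaluate(SPN, indicators_for(state))
    return total


def all_evidence():
    """Every partial assignment: each variable is unobserved (None), 0 or 1."""
    for values in cartesian((None, 0, 1), repeat=len(VARIABLES)):
        yield {var: val for var, val in zip(VARIABLES, values) if val is not None}


# Validity in the sense of the talk: the two computations agree for all evidence.
is_valid = all(abs(one_pass(e) - brute_force(e)) < 1e-12 for e in all_evidence())
print(is_valid, one_pass({}), one_pass({"X1": 1}))
```

The brute-force check is only feasible for toy networks like this one; the point of the conditions discussed next is that validity can be guaranteed from the structure alone, without ever running the exponential sum.
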
So an SPN being valid basically means I can compute all my marginals in linear time, including the partition function. The partition function is when you want to include every term, so you set all the indicators to one. So now the question is: when is an SPN valid? An SPN is valid if it's complete and consistent. And completeness and consistency are actually two very simple conditions that can be very easily checked, and more importantly they can be very easily imposed. I can say I'm going to have a network architecture that is complete and consistent, and then as soon as I have that I know that my inference is always going to be tractable and correct and I can just learn whatever parameters I want.

So what are they? Completeness is a condition on the sum nodes. It says that under a sum node, the children, or the child subtrees, must be over the same variables. A sum node is really a little mixture model over a subspace. What this condition says is that I have to have the same space on every side of the sum, in every component. So, for example, here's an incomplete SPN. It's incomplete because it has X1 on this side and X2 on that side and the two are different. It's easy to see that if a network is incomplete, then what happens is that my evaluation underestimates the real partition function or the real marginal. Consistency is a condition on the product nodes. And it says that I can't have a variable in the subtree on one side and then the negation of that variable in the subtree on the other side. The two sides have to be consistent. If I have X1 on one side I can't have not X1 on the other. If I do, then what happens is the linear evaluation is going to overestimate the marginals. If I'm both complete and consistent, then these two things will be equal and I get the right answer. So how do we learn SPNs? It's a lot like backprop.
In fact, SPNs are [inaudible] for backprop because it's a DAG of sums and products, so propagating derivatives of the likelihood from the output back to the input is very straightforward. However, there's a problem, which is the problem that people in deep learning run into all the time: if you try to do this by gradient descent, there's this gradient diffusion problem where the signal disappears as you go down the layers. It becomes sparser and sparser until we don't know how to learn.

However, we found a very nice way to get around that problem, which is to do the following. We do online learning. So we present our examples to the network one at a time and update the parameters. And we do hard EM. So we do online EM, but it's hard EM, meaning that at each sum node, which you can think of as a mixture model, instead of fractionally assigning the example to the different components of the mixture model, I pick the most likely child and I send the whole example, or the whole chunk of the example, down that child. As a result of which I don't have a gradient diffusion problem anymore. There's always a unit-size increment going down the network no matter how deep it is. As a result of which we can learn networks of this type with, as far as we know, arbitrary numbers of layers. Even in deep learning people have these very ad hoc ways to try to learn them that don't go beyond a few layers. We usually learn networks with tens of layers.

How does this work? Each sum node maintains a count for each child. For each example we see, we find the most probable assignment of that example to sum children using the current weights. We increment the count for each chosen child, like "you've won this example", and then we renormalize, and those are the new weights. And then we just repeat this until convergence. So it's actually a very straightforward learning algorithm. But it's very powerful.
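
[Editor's illustration] The counting procedure just described can be sketched as follows. This is a minimal sketch and not the authors' implementation: the class names, the initial count of 1 per child, the tie-breaking rule (first child wins), the use of a max in the upward pass, the tiny network and the toy data are all assumptions made for illustration. Only a single pass over the data is shown, not a loop to convergence.

```python
class Leaf:
    """Indicator leaf: 1 if the example assigns `val` to `var`, else 0."""

    def __init__(self, var, val):
        self.var, self.val = var, val

    def max_value(self, example):
        return 1.0 if example[self.var] == self.val else 0.0

    def update(self, example):
        pass  # leaves have no parameters


class Product:
    def __init__(self, children):
        self.children = children

    def max_value(self, example):
        result = 1.0
        for child in self.children:
            result *= child.max_value(example)
        return result

    def update(self, example):
        # A product passes the example down to all of its children.
        for child in self.children:
            child.update(example)


class Sum:
    def __init__(self, children, initial_count=1.0):
        self.children = children
        # One count per child; the initial count is an assumed smoothing choice.
        self.counts = [initial_count] * len(children)

    def weights(self):
        total = sum(self.counts)
        return [count / total for count in self.counts]

    def scores(self, example):
        return [w * child.max_value(example)
                for w, child in zip(self.weights(), self.children)]

    def max_value(self, example):
        # Hard assignment: the sum is replaced by a max over children.
        return max(self.scores(example))

    def update(self, example):
        scores = self.scores(example)
        winner = scores.index(max(scores))  # ties go to the first child
        self.counts[winner] += 1.0          # unit increment, whatever the depth
        self.children[winner].update(example)


def bernoulli(var):
    return Sum([Leaf(var, 1), Leaf(var, 0)])


# Assumed toy network: a root mixture of two product components.
comp_a = Product([bernoulli("X1"), bernoulli("X2")])
comp_b = Product([bernoulli("X1"), bernoulli("X2")])
root = Sum([comp_a, comp_b])

# Assumed toy data: two clusters, (1, 1) and (0, 0), presented one at a time.
data = [{"X1": 1, "X2": 1}, {"X1": 0, "X2": 0}] * 20
for example in data:
    root.update(example)

print(root.weights(), comp_a.children[0].weights(), comp_b.children[0].weights())
```

On this toy data the first component ends up specializing in the (1, 1) cluster and the second in the (0, 0) cluster. The sketch recomputes child values at every level, which is wasteful; a practical version would cache the upward pass.
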
In particular, you can think of this as a new kind of deep architecture, one that is actually very well founded and doesn't suffer from the big problem in deep learning, which is intractable inference and the difficulties in learning that it causes in turn. And we've applied SPNs to a number of things, in particular to some very challenging problems in vision where we did a lot better than anything that went before. In particular, we did this for image completion, where we were able to complete images that no one had really been able to complete before. Also, we have a paper coming up in NIPS where we do discriminative training using similar principles and beat the state of the art on a number of image classification benchmarks.

So before I finish let me just mention one more thing, which is: what do these sums and products mean? And can we extend these things, which so far have been at the propositional level, to the relational level, where we have non-IID data? This is what we've done in tractable Markov logic. The basic idea of tractable Markov logic is that the sums really represent a splitting of a class into subclasses, and the products really represent features. So there's a meaning. A product decomposes an object into subparts, and the sum decomposes parts into subclasses. And again I can't go into the details of this here, but what this means is that if you take a language like tractable Markov logic, and this is a powerful language, you can talk about objects, classes, relations, hierarchies and so on, as long as you use this language we can guarantee that the inference will always be tractable. As for the details, we have a paper that just came out in AAAI. And we have some software coming out using this. If this is not enough, what we suggest is doing variational inference, using these model classes as the approximate model class.
They're much more powerful than what people typically use, so you get correspondingly better approximations. And I will stop there. Thank you. [applause]

>>: Any questions? In the back.

>>: Yeah, the way you describe the hard EM, the back propagation, sounded deterministic. Did you try a stochastic routing rule where you would go down each subtree according to its relative probability?

>> Pedro Domingos: This is an excellent idea, right? Instead of taking the MAP choice, just sample in proportion to the probability. We've actually tried that recently. In fact, Rob over there just did this a couple of weeks ago. In the domains where we've tried this, it doesn't help that much. It doesn't hurt either. But we actually suspect that there will be many problems for which this is actually the best solution. When we just pick the most likely solution, there's this tendency for a winner-take-all process to happen. So, yeah, that's actually an excellent idea.

>>: Did you try to value the SPNs, the value of which you can compute all known nodes, and maybe the problem may be not all marginals, have to have some, direct path for a couple easy and the rest are inverse problems that harbor, okay, we can live with that.

>> Pedro Domingos: Absolutely. So what I talked about here was the generative training. But in the paper coming out in NIPS we have the discriminative training, where we get exactly this payoff. We don't care about everything being tractable, we only care about these variables being tractable. What this means is that we have a broader class of models. So you're right, yeah.

>>: Haven't read this one.

>> Pedro Domingos: It hasn't appeared yet.

>>: He's the chair.

>> Pedro Domingos: Well --

>>: There were like 1500 --

>> Pedro Domingos: [laughter]. Maybe you weren't the chair handling this paper.

>>: You want to point out your grad student doing the work?

>> Pedro Domingos: Rob, where is he? Also the stuff on tractable Markov logic was Austin, who is also here.
And the software system that I mentioned was Chloe, who is there. So we're here in force.

>>: Okay. Great. Any other questions? Pedro?

>>: The second you were talking about, the Markov [inaudible] have negation?

>> Pedro Domingos: One more time?

>>: Your circuit, Markov circuit, can you have negation in the middle somewhere?

>> Pedro Domingos: Negation, but negation is a logical operation. So actually let me make a higher-level remark here. Everything that I said about sums and products applies equally well to any semiring. It could be sum-product, it could be max-sum, it could be and/or, join/project. So you can learn tractable logical models in the same way. Not that we've done that here, but the same ideas apply. And then you could have negations at any level, but there's actually no gain in expressivity from having that. There's this form called negation normal form where you push all the negations to the bottom and it's equally expressive. So you could, but there's no necessary advantage to doing that.

>>: Okay. [applause] Well, let's thank the speaker. Okay?