Supporting Efficient Execution in Heterogeneous Distributed Computing Environments with Cactus and Globus

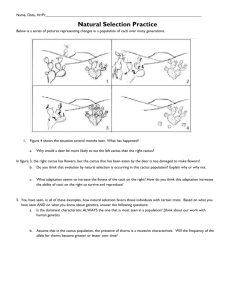

Gabrielle Allen, Thomas Dramlitsch, Ian Foster, Nick Karonis, Matei Ripeanu, Ed Seidel, Brian Toonen
Proceedings of Supercomputing 2001 (Winning Paper for Gordon Bell Prize - Special Category)
Presenter: Imran Patel

Outline
- Introduction
- Computational Grids
- Grid-enabled Cactus Toolkit
- Experimental Results
- Ghostzones and Compression
- Adaptive Strategies
- Conclusion

Introduction
- Widespread use of numerical simulation techniques has led to high demand for traditional high-performance computing resources (supercomputers).
- Low-end computers are becoming increasingly powerful and are connected by high-speed networks.
- “Computational Grids” aim to tie these scattered resources into an integrated infrastructure.
- Applications for the grid include large-scale simulations that need large amounts of resources to increase throughput.

Introduction: The Problem
- Heterogeneous and dynamically behaving resources make the development of grid-enabled applications extremely difficult.
- One approach is to develop computational frameworks that hide this complexity from the programmer.
- Cactus-G: a simulation framework that uses grid-aware components and a message-passing library (MPICH-G2).

Computational Grids
Computational Grids differ from other parallel computing environments:
- A grid may have nodes with different processor speeds, memory sizes, etc.
- Grids may have widely different network interconnects and topologies.
- Resource availability varies in a grid.
- Nodes in a grid may have different software configurations.

Computational Grids – Programming Techniques
To overcome these problems, some generic techniques have been devised:
- Irregular data distributions: use application, network, and node information.
- Grid-aware communication schedules: overlapping and grouping of messages, dedicated communication nodes.
- Redundant computation: extra computation in exchange for reduced communication.
- Protocol tuning: TCP tweaks, compression.

Cactus-G
- Cactus is a modular, parallel simulation environment used by scientists and engineers in fields such as numerical relativity, astrophysics, and climate modeling.
- The Cactus design consists of a core (the flesh) that connects to application modules (thorns).
- Thorns exist for services such as the Globus Toolkit, the PETSc library, visualization, etc.
- Cactus is highly portable and parallel because it uses abstraction APIs that are themselves implemented as thorns.
- MPICH-G2 exploits Globus services and provides faster communication and QoS.

Cactus-G: Architecture
- Application thorns need not be grid-aware.
- An example of a grid-aware Cactus thorn is PUGH, which provides MPI-based parallelism.
- The DUROC library handles process management.

Experimental Results: Setup
- An application written in Fortran for solving numerical relativity problems.
- 57 3-d variables, 780 flops per gridpoint per iteration.
- N x N x 6 x ghostzone_size x 8 variables need to be synchronized at each processor.
- A total of 1500 CPUs organized in a 5 x 12 x 25 3-d mesh.

Experimental Results: Setup
- 4 supercomputers at SDSC and NCSA:
  - One 1024-CPU IBM Power-SP (306 MFlop/s).
  - One 256-CPU and two 128-CPU SGI Origin2000 systems (168 MFlop/s).
- Intramachine: 200 MB/s
- Intermachine: 100 MB/s
- SDSC<->NCSA: 3 MB/s on a 622 Mb/s link

Communication Optimizations
- Communication/computation overlap: processors across WANs were given fewer grid points so that they could overlap their communication with computation.
- Compression: a Cactus thorn that exploits the regularity of the data, compressing it with the libz library.
- Ghostzones: larger ghostzones were used for more efficient communication at the expense of redundant computation.

Performance Metrics
Flop/s rate and efficiency are used as metrics.
- Total execution time (ttot): measured using MPI_Wtime().
- Expected computation time (tcomp): ideal time calculated from a single node.
- Flop count F: calculated using hardware counters; 780 flops per gridpoint per iteration.
- Flop/s rate = F * num_gridpts * num_iterations / ttot
- Efficiency E = tcomp / ttot

Performance Figures
- 4 supercomputers: 42 GFlop/s, 14% efficiency
- Compression + 10 ghostzones: 249 GFlop/s, 63.3% efficiency
- Smaller run on 120+1020=1140 processors: 292 GFlop/s, 88% efficiency

Ghostzones
- Increasing the ghostzone size can reduce latency overhead by transferring fewer messages with the same total amount of data.
- Increasing the ghostzone size beyond a certain point gives no further benefit and wastes memory.

Compression
- Used to increase throughput across WANs.
- Compression was found to be highly useful because the data is regular and smooth.
- Because the smoothness of the data changes over time, the effect of compression can change as well, so compression needs to be adaptive.

Adaptive Strategies: Compression
- It would be useful to predict optimal values of the ghostzone and compression parameters, but without relying on detailed network characteristics.
- For example: change the compression state based on the efficiency, averaged over N iterations.

Adaptive Ghostzone Sizes
- Adapting the ghostzone size is challenging: many ghostzone sizes are possible, memory must be re-allocated, changes ripple to other processors, and extra data must be fetched from neighbors.
- Start with a size of 1 and increase or decrease it in accordance with the efficiency.

Further Information
- Tom Goodale, et al. "The Cactus Framework and Toolkit: Design and Applications." Vector and Parallel Processing - VECPAR'2002.
- Thomas Dramlitsch, Gabrielle Allen, Edward Seidel. "Grid Aware Parallelizing Algorithms." Journal of Parallel and Distributed Computing.
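
Illustrative sketch (not from the talk)
The performance metrics and the adaptive compression idea from the slides can be written down in a few lines of Python. This is only a minimal sketch under stated assumptions: the names `flop_rate`, `efficiency`, and `AdaptiveCompression` are hypothetical, and the toggle rule (flip the compression state when the average efficiency over the last N iterations is lower than in the previous window) is one plausible reading of "change the compression state based on efficiency", not the authors' actual policy.

```python
FLOPS_PER_GRIDPOINT = 780  # F from the slides: flops per gridpoint per iteration


def flop_rate(num_gridpts, num_iterations, t_tot, flops_per_point=FLOPS_PER_GRIDPOINT):
    """Flop/s rate = F * num_gridpts * num_iterations / ttot."""
    return flops_per_point * num_gridpts * num_iterations / t_tot


def efficiency(t_comp, t_tot):
    """E = tcomp / ttot (ideal computation time over measured total time)."""
    return t_comp / t_tot


class AdaptiveCompression:
    """Hypothetical controller: flips compression on/off between windows.

    Efficiency is averaged over `window` iterations. If the finished window
    is worse than the previous one, the compression state is flipped.
    """

    def __init__(self, window=4, enabled=True):
        self.window = window
        self.enabled = enabled
        self._samples = []          # efficiencies seen in the current window
        self._previous_avg = None   # average efficiency of the last full window

    def record(self, t_comp, t_tot):
        """Record one iteration; return whether compression should be on."""
        self._samples.append(efficiency(t_comp, t_tot))
        if len(self._samples) < self.window:
            return self.enabled
        avg = sum(self._samples) / len(self._samples)
        self._samples.clear()
        if self._previous_avg is not None and avg < self._previous_avg:
            self.enabled = not self.enabled  # efficiency dropped: try the other state
        self._previous_avg = avg
        return self.enabled
```

In use, the application would call `record(t_comp, t_tot)` once per iteration and apply the returned state to the next WAN exchange. The controller deliberately needs only timing data that the application already measures, which matches the stated goal of not depending on detailed network characteristics.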