

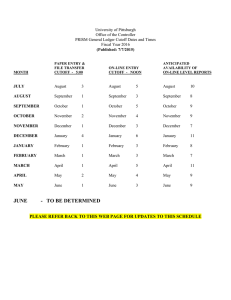

Selective cutoff reporting in studies of diagnostic test accuracy of depression screening tools: Comparing traditional meta-analysis to individual patient data meta-analysis

Brooke Levis, MSc, PhD Candidate
Jewish General Hospital and McGill University
Montreal, Quebec, Canada

Does Selective Reporting of Data-driven Cutoffs Exaggerate Accuracy? The Hockey Analogy

What is Screening?

- Purpose: to identify otherwise unrecognisable disease
- By sorting out apparently well persons who probably have a condition from those who probably do not
- Not diagnostic
- Positive tests require referral for diagnosis and, as appropriate, treatment
- A program, of which a test is one component

Illustration: This information was originally developed by the UK National Screening Committee/NHS Screening Programmes (www.screening.nhs.uk) and is used under the Open Government Licence v1.0

The Patient Health Questionnaire (PHQ-9) depression screening tool

- Scores range from 0 to 27
- Higher scores = more severe symptoms

Selective Reporting of Results Using Data-Driven Cutoffs

Extreme scenarios:

- Cutoff of ≥ 0: all subjects above cutoff, sensitivity = 100%
- Cutoff of ≥ 27: all subjects below cutoff, specificity = 100%

Does Selective Reporting of Data-driven Cutoffs Exaggerate Accuracy?

- Sensitivity increases from cutoff of 8 to cutoff of 11 (with complete data, raising the cutoff can only lower sensitivity or leave it unchanged)
- For standard cutoff of 10, missing 897 cases (13%)
- For cutoffs of 7-9 and 11, missing 52-58% of data
- Source: Manea et al., CMAJ, 2012

Questions

- Does selective cutoff reporting lead to exaggerated estimates of accuracy?
- Can we identify predictable patterns of selective cutoff reporting?
- Why does selective cutoff reporting appear to impact sensitivity, but not specificity?
- Does selective cutoff reporting transfer high heterogeneity in sensitivity due to small numbers of cases to heterogeneity in cutoff scores, but homogeneous accuracy estimates?
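The extreme scenarios in the slide on data-driven cutoffs can be sketched in a few lines of Python. This is only an illustration: the function name `sens_spec` and the ten scores below are made up for the example and do not come from any study in the talk. A score at or above the cutoff is treated as a positive screen.

```python
def sens_spec(scores, has_mdd, cutoff):
    """Return (sensitivity, specificity) when score >= cutoff is a positive screen."""
    pairs = list(zip(scores, has_mdd))
    tp = sum(1 for s, d in pairs if d and s >= cutoff)       # cases flagged
    fn = sum(1 for s, d in pairs if d and s < cutoff)        # cases missed
    tn = sum(1 for s, d in pairs if not d and s < cutoff)    # non-cases cleared
    fp = sum(1 for s, d in pairs if not d and s >= cutoff)   # non-cases flagged
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical PHQ-9 scores (0 to 27) and MDD status, invented for illustration.
scores = [2, 5, 9, 12, 16, 21, 3, 7, 11, 14]
has_mdd = [False, False, False, True, True, True, False, False, True, False]

for cutoff in (0, 10, 27):
    sens, spec = sens_spec(scores, has_mdd, cutoff)
    print(f"cutoff >= {cutoff:2d}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

With complete data, sensitivity can only fall or stay flat as the cutoff rises, which is why a rise in pooled sensitivity between cutoffs points to a change in which studies contributed data rather than to the test itself.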
Methods

Data source:

- Inclusion criteria:
  - Studies included in published traditional meta-analysis on the diagnostic accuracy of the PHQ-9 (Manea et al., CMAJ, 2012)
  - Unique patient sample
  - Published diagnostic accuracy for MDD for at least one PHQ-9 cutoff
- Data transfer:
  - Invited authors of the eligible studies to contribute their original patient data (de-identified)
  - Received data from 13 of 16 eligible datasets (80% of patients, 94% of MDD cases)

Methods

Data preparation:

- For each dataset, extracted PHQ-9 scores and MDD diagnostic status for each patient, and information pertaining to weighting

Statistical analyses (2 sets performed):

- Traditional meta-analysis: for each cutoff between 7 and 15, included data from the studies that reported accuracy results for the respective cutoff in the original publication
- IPD meta-analysis: for each cutoff between 7 and 15, included data from all studies

Comparison of data availability

| Cutoff | Published data (traditional MA): # of studies | # of patients | # MDD cases | All data (IPD MA): # of studies | # of patients | # MDD cases |
|---|---|---|---|---|---|---|
| 7 | 4 | 2094 | 550 | 13 | 4589 | 1037 |
| 8 | 4 | 2094 | 550 | 13 | 4589 | 1037 |
| 9 | 4 | 1579 | 309 | 13 | 4589 | 1037 |
| 10 | 11 | 3794 | 723 | 13 | 4589 | 1037 |
| 11 | 5 | 1253 | 216 | 13 | 4589 | 1037 |
| 12 | 6 | 1388 | 261 | 13 | 4589 | 1037 |
| 13 | 4 | 1073 | 186 | 13 | 4589 | 1037 |
| 14 | 3 | 977 | 150 | 13 | 4589 | 1037 |
| 15 | 4 | 1075 | 193 | 13 | 4589 | 1037 |

Methods

Model: Bivariate random-effects* meta-analysis models

- Models sensitivity and specificity at the same time
- Accounts for clustering by study
- Provides an overall pooled sensitivity and specificity for each cutoff, for the 2 sets of analyses
- Within each set of analyses, each cutoff requires its own model
- Estimates between-study heterogeneity

Note: the model accounts for correlation between sensitivity and specificity at each threshold, but not for correlation of parameters across thresholds.

*Random-effects model: sensitivity and specificity assumed to vary across primary studies

Comparison of Diagnostic Accuracy

| Cutoff | Published data (traditional MA): N studies | Sens | Spec | All data (IPD MA): N studies | Sens | Spec |
|---|---|---|---|---|---|---|
| 7 | 4 | 0.85 | 0.73 | 13 | 0.97 | 0.73 |
| 8 | 4 | 0.79 | 0.78 | 13 | 0.93 | 0.78 |
| 9 | 4 | 0.78 | 0.82 | 13 | 0.89 | 0.83 |
| 10 | 11 | 0.85 | 0.88 | 13 | 0.87 | 0.88 |
| 11 | 5 | 0.92 | 0.90 | 13 | 0.83 | 0.90 |
| 12 | 6 | 0.82 | 0.92 | 13 | 0.77 | 0.92 |
| 13 | 4 | 0.82 | 0.94 | 13 | 0.67 | 0.94 |
| 14 | 3 | 0.71 | 0.97 | 13 | 0.59 | 0.96 |
| 15 | 4 | 0.61 | 0.98 | 13 | 0.52 | 0.97 |

Comparison of ROC Curves

Publishing trends by study

Comparison of Sensitivity by Cutoff
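To make the gap between the two analyses easier to see, the short Python sketch below subtracts the IPD estimates from the published estimates in the Comparison of Diagnostic Accuracy table above. The numbers are copied from that table; the variable names are illustrative, and the code does nothing beyond the subtraction (it does not refit any meta-analysis model).

```python
# (cutoff, published sens, published spec, IPD sens, IPD spec), copied from the table.
rows = [
    (7, 0.85, 0.73, 0.97, 0.73),
    (8, 0.79, 0.78, 0.93, 0.78),
    (9, 0.78, 0.82, 0.89, 0.83),
    (10, 0.85, 0.88, 0.87, 0.88),
    (11, 0.92, 0.90, 0.83, 0.90),
    (12, 0.82, 0.92, 0.77, 0.92),
    (13, 0.82, 0.94, 0.67, 0.94),
    (14, 0.71, 0.97, 0.59, 0.96),
    (15, 0.61, 0.98, 0.52, 0.97),
]

# Published minus IPD, per cutoff.
sens_diff = {c: round(ps - isens, 2) for c, ps, _, isens, _ in rows}
spec_diff = {c: round(psp - ispec, 2) for c, _, psp, _, ispec in rows}

for c in sens_diff:
    print(f"cutoff {c:2d}: sens diff {sens_diff[c]:+.2f}, spec diff {spec_diff[c]:+.2f}")

# Largest absolute gap for each measure.
max_sens_gap = max(abs(d) for d in sens_diff.values())
max_spec_gap = max(abs(d) for d in spec_diff.values())
print("max |sens diff|:", max_sens_gap, " max |spec diff|:", max_spec_gap)
```

In these table values, published sensitivity is lower than the IPD estimate at cutoffs 7 to 10 and higher at cutoffs 11 to 15, while specificity never differs by more than 0.01.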
Why Sensitivity Changes with Moving Cutoffs, but Not Specificity

Heterogeneity

Summary

- Selective cutoff reporting in depression screening tool DTA studies may distort accuracy across cutoffs.
- It will lead to exaggerated estimates of accuracy.
- These distortions were relatively minor in the PHQ, but would likely be much larger for other measures where standard cutoffs are less consistently reported and more data-driven reporting seems to occur (e.g., HADS).
- IPD meta-analysis can address this and will allow subgroup-based accuracy evaluation.

Summary

STARD undergoing revision:

- Needs to require precision-based sample size calculation to avoid very small samples (particularly small numbers of cases) and unstable estimates
- Needs to require reporting of spectrum of cutoffs, which is easily done with online appendices

Acknowledgements

DEPRESSD Investigators: Brett Thombs, Andrea Benedetti, Roy Ziegelstein, Pim Cuijpers, Simon Gilbody, John Ioannidis, Scott Patten, Dean McMillan, Ian Shrier, Russell Steele, Lorie Kloda

Other Contributors: Alex Levis, Danielle Rice