

Hearing without Listening

Collaborators: Manas Pathak (CMU), Shantanu Rane (MERL), Paris Smaragdis (UIUC), Madhusudana Shashanka (UTC), and Jose Portelo (INESC)

Bhiksha Raj

Pittsburgh, PA 15213

21 April 2011

About Me

• Bhiksha Raj

• Assoc. Prof in LTI

• Areas:
  o Computational Audition
  o Speech recognition
• Was at Mitsubishi Electric Research prior to CMU
  o Led research effort on speech processing

2

A Voice Problem

Call center mans calls relating to various company services and products

• Call center records customer response
  o Thousands of hours of customer calls
  o Must be mined for improved response to market conditions and improved customer response
• Audio records carry confidential information
  o Social security number
  o Names, addresses
  o Cannot be revealed!!

3

Mining Audio

• Can be dealt with as a problem of keyword spotting
  o Must find words/phrases such as “problem”, “disk controller”
  o Data holder willing to provide list of terms to search for
• Must not discover anything that was not specified!
  o E.g. Social security number, names, addresses, other identifying information
• Trivial solution: Ship keyword spotter to data holder
  o Unsatisfactory
  o Does not prevent operator of spotter from having access to private information
• Work on encrypted data?

4

A more important problem

• ABC NEWS Oct 2008

• Inside Account of U.S. Eavesdropping on Americans

Despite pledges by President George W. Bush and American intelligence officials to the contrary, hundreds of US citizens overseas have been eavesdropped on as they called friends and family back home...

5

The Problem

• Security:
  o NSA must monitor call for public safety
    – Caller may be a known miscreant
    – Call may relate to planning subversive activity
• Privacy:
  o Terrible violation of individual privacy
• The gist of the problem:
  o NSA is possibly looking for key words or phrases
    – Did we hear “bomb the pentagon”??
  o Or if some key people are calling in
    – Was that Ayman al Zawahiri’s voice?
  o But must have access to all audio to do so
• Similar to the mining problem

6

Abstracting the problem

• Data holder willing to provide encrypted data
  o A locked box
• Mining entity willing to provide encrypted keywords
  o Sealed packages
• Must find if keywords occur in data
  o Find if contents of sealed packages are also present in the locked box
    – Without unsealing packages or opening the box!
• Data are spoken audio

7

Privacy Preserving Voice Processing

• Problems are examples of need for privacy preserving voice processing algorithms
• The majority of the world’s communication has historically been done by voice
• Voice is considered a very private mode of communication
  o Eavesdropping is a social no-no
  o Many places that allow recording of images in public disallow recording of voice!
• Yet little current research on how to maintain the privacy of voice ..

8

The History of Privacy Preserving Technologies for Voice

9

The History of Counter Espionage Techniques against Private Voice Communication

10

Voice Database Privacy: Not Covered

• Can “link” uploaders of video to Flickr, YouTube etc., by matching the audio recordings
  o “Matching the uploaders of videos across accounts”, Lei, Choi, Janin, Friedland, ICSI Berkeley
• More generally, can identify common “anonymous” speakers across databases
  o And, through their links to other identified subjects, can possibly identify them as well..
• Topic for another day

11

Automatic Speech Processing Technologies

• Lexical content comprehension
  o Recognition
    – Determining the sequence of words that was spoken
  o Keyterm spotting
    – Detecting if specified terms have occurred in a recording
• Biometrics and non-lexical content recognition
  o Identification
    – Identifying the speaker
  o Verification/Authentication
    – Confirming that a speaker is who he/she claims to be

12

Audio Problems involve Pattern Matching

• Biometrics:
  o Speaker verification / authentication:
    – Binary classification
      – Does the recording belong to speaker X or not
  o Speaker identification:
    – Multi-class classification
      – Who (if anyone) from a given known set is the speaker
• Recognition:
  o Key-term spotting:
    – Binary classification
      – Is the current segment of audio an instance of the keyword
  o Continuous speech recognition
    – Multi- or infinite-class classification

13

Simplistic View of Voice Privacy: Learning what is not intended

• User is willing to permit the system to have access to the intended result, but not anything else
• Recognition/spotting System allowed access to “text” content of message
  o But system should not be able to identify the speaker, his/her mood etc
• Biometric system allowed access to identity of speaker
  o But should not be able to learn the text content as well
    – E.g. discover a passphrase

14

Signal Processing Solutions to the simple problems

• Voice is produced by a source filter mechanism
  o Lungs are the source
  o Vocal tract is the filter
• Mathematical models allow us to separate the two
• The source contains much of the information about the speaker
• The filter contains information about the sounds

15

Eliminating Speaker Identity

• Modifying the Pitch
  o Lowering it makes the voice somewhat unrecognizable
  o Of course, one can undo this
• Voice morphing
  o Map the entire speech (source and filter) to a neutral third voice
  o Even so, the cadence gives identity away

16

Keep the voice, corrupt the content

• Much tougher
  o The “excitation” also actually carries spoken content
• Cannot destroy content without corrupting the excitation

17

Signal Processing is not the Solution

• Often no “acceptable to expose” data
  o Voice recognition:
    – Medical transcription. HIPAA rules
    – Court records
    – Police records
  o Keyword spotting: Signal processing inapplicable
    – Cannot modify only undesired portions of data
  o Biometrics:
    – Identification: Data holder may not know who is being identified (NSA)
    – Verification/Authentication: many problems

18

What follows

• A related problem:
  o Song matching
• Two voice matching problems
  o Keyword spotting
  o Voice authentication
    – Cryptographic approach
    – An alternative approach
• Issues and questions
• Caveat: Have squiggles (cannot be avoided)
  o Will be kept to a minimum

19

A related problem

• Alice has just found a short piece of music on the web
  o Possibly from an illegal site!
• She likes it. She would like to find out the name of the song

20

Alice and her song

• Bob has a large, organized catalogue of songs
• Simple solution:
  o Alice sends her song snippet to Bob
  o Bob matches it against his catalogue
  o Returns the ID of the song that has the best match to the snippet

21

What Bob does

[Figure: Bob's catalogue of songs S1, S2, S3, …, SM]

• Bob uses a simple correlation-based procedure
• Receives snippet W = w[0]..w[N]
• For each song S
  o At each lag t
    – Finds $\mathrm{Correlation}(W,S,t) = \sum_i w[i]\, s[t+i]$
  o Score(S) = maximum correlation: $\max_t \mathrm{Correlation}(W,S,t)$
• Returns song with largest correlation.
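The procedure above can be sketched in a few lines of Python. This is a minimal illustration on plain (unencrypted) integer sequences; the function names and the toy catalogue are assumptions made for the example, not part of the original slides.

```python
def correlation(w, s, t):
    """Corr(W, S, t) = sum_i w[i] * s[t + i]."""
    return sum(w[i] * s[t + i] for i in range(len(w)))


def score(w, s):
    """Score(S) = maximum of Corr(W, S, t) over all lags t that fit."""
    lags = range(len(s) - len(w) + 1)
    return max(correlation(w, s, t) for t in lags)


def best_match(w, catalogue):
    """Return the name of the song with the largest score."""
    return max(catalogue, key=lambda name: score(w, catalogue[name]))


# Hypothetical toy catalogue of integer "songs".
catalogue = {
    "S1": [1, 0, -1, 2, 0, 1, -2, 0],
    "S2": [0, 3, -2, 4, 1, -1, 0, 2],
    "S3": [2, 2, 0, -1, -1, 0, 1, 1],
}
snippet = [3, -2, 4]  # copied from S2 starting at lag 1

print(best_match(snippet, catalogue))
```

Note that the raw correlation is not normalized, so in this sketch a louder song can outscore a quieter exact match; a practical matcher would likely normalize the scores.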

22


27

Relationship to Speech Recognition

• Words are like Alice’s snippets.
• Word spotting: Find locations in a recording where patterns that match the word occur
  o Computing “match” score is analogous to finding the correlation with an example (or the best of many examples) of the word
• Continuous speech recognition: Repeated word spotting

28

Alice has a problem

• Her snippet may have been illegally downloaded

• She may go to jail if Bob sees it

o

Bob may be the DRM police..

29

Solving Alice’s Problem

• Alice could encrypt her snippet and send it to Bob
• Bob could work entirely on the encrypted data
  o And obtain an encrypted result to return to Alice!
• A job for SFE (secure function evaluation)
  o Except we won’t use a circuit

30

Correlation is the root of the problem

• The basic operation performed is a correlation
  o $\mathrm{Corr}(W,S,t) = \sum_i w[i]\, s[t+i]$
• This is actually a dot product:
  o $W = [w[0]\ w[1]\ \dots\ w[N]]^T$
  o $S_t = [s[t]\ s[t+1]\ \dots\ s[t+N]]^T$
  o $\mathrm{Corr}(W,S,t) = W \cdot S_t$
• Bob can compute Encrypt[Corr(W,S,t)] if Alice sends him Encrypt[W]
• Assumption: All data are integers
  o Encryption is performed over large enough finite fields to hold all the numbers

31

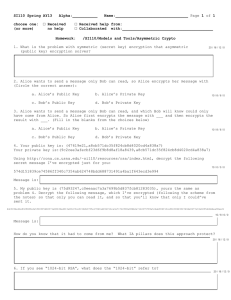

Paillier Encryption

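• Alice uses Paillier Encryption
  o Random primes $P$, $Q$
  o $N = PQ$
  o $G = N + 1$
  o $L = (P-1)(Q-1)$
  o $M = L^{-1} \bmod N$
  o Public key = $(N, G)$, Private key = $(L, M)$
  o Encrypt message $m$ from $\{0, \dots, N-1\}$ using a random $r$:
    – $\mathrm{Enc}[m] = G^m r^N \bmod N^2$
  o Decrypt: $\mathrm{Dec}[u] = \left( \dfrac{(u^L \bmod N^2) - 1}{N} \cdot M \right) \bmod N$
• Important property:
  o $\mathrm{Enc}[m]$ behaves like $G^m$ (the random factor $r^N$ is dropped here for readability)
  o $\mathrm{Enc}[m] \cdot \mathrm{Enc}[v] = G^m G^v = G^{m+v} = \mathrm{Enc}[m+v]$
  o $(\mathrm{Enc}[m])^v = \mathrm{Enc}[vm]$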
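A toy version of the key generation, encryption and decryption steps listed above, checking both homomorphic properties. This is only a sketch: the primes 1009 and 1013 are tiny illustrative values chosen here (real keys use primes of a thousand bits or more), the function names are assumptions, and `pow(x, -1, n)` needs Python 3.8 or later.

```python
import math
import random


def keygen(p=1009, q=1013):
    """Toy Paillier keys; p and q are small primes used only for illustration."""
    n = p * q
    g = n + 1
    lam = (p - 1) * (q - 1)      # L = (P-1)(Q-1)
    mu = pow(lam, -1, n)         # M = L^{-1} mod N
    return (n, g), (lam, mu)


def encrypt(pub, m):
    """Enc[m] = g^m * r^N mod N^2 for a random r coprime to N."""
    n, g = pub
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, u):
    """Dec[u] = ((u^L mod N^2) - 1) / N * M mod N."""
    n, _ = pub
    lam, mu = priv
    x = pow(u, lam, n * n)
    return ((x - 1) // n * mu) % n


pub, priv = keygen()
n2 = pub[0] ** 2
a, b = 123, 456

# Multiplying ciphertexts adds the plaintexts.
print(decrypt(pub, priv, (encrypt(pub, a) * encrypt(pub, b)) % n2))
# Raising a ciphertext to a power multiplies the plaintext.
print(decrypt(pub, priv, pow(encrypt(pub, a), 5, n2)))
```

Because each encryption draws a fresh random `r`, encrypting the same message twice gives different ciphertexts, yet both decrypt to the same value.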

32

Solving Alice’s Problem

• Alice generates public and private keys
• She encrypts her snippet using her public key and sends it to Bob:
  o Alice → Bob: Enc[W] = Enc[w[0]], Enc[w[1]], …, Enc[w[N]]
• To compute the dot product with any snippet s[t]..s[t+N]
  o Bob computes $\prod_i \mathrm{Enc}[w[i]]^{s[t+i]} = \mathrm{Enc}\left[\sum_i w[i]\, s[t+i]\right] = \mathrm{Enc}[\mathrm{Corr}(W,S,t)]$
• Bob can compute the encrypted correlations from Alice’s encrypted input without needing even the public key
• The above technique is the Secure Inner Product (SIP) protocol
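The exchange above can be sketched end to end with a toy Paillier implementation. The small primes, the function names and the signed decoding (values above N/2 are read as negative) are assumptions made for this illustration; Bob only performs arithmetic modulo N^2 on the ciphertexts he receives.

```python
import math
import random


def keygen(p=1009, q=1013):
    """Toy Paillier keys from small illustrative primes."""
    n = p * q
    lam = (p - 1) * (q - 1)
    return (n, n + 1), (lam, pow(lam, -1, n))


def encrypt(pub, m):
    n, g = pub
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(g, m % n, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, u):
    n, _ = pub
    lam, mu = priv
    m = ((pow(u, lam, n * n) - 1) // n * mu) % n
    return m - n if m > n // 2 else m   # map back to a signed integer


def encrypted_correlation(pub, enc_w, s, t):
    """Bob's side: prod_i Enc[w[i]]^s[t+i] = Enc[sum_i w[i] s[t+i]]."""
    n, _ = pub
    n2 = n * n
    acc = 1                              # 1 is a valid encryption of 0
    for i, c in enumerate(enc_w):
        acc = (acc * pow(c, s[t + i] % n, n2)) % n2
    return acc


# Alice: encrypt the snippet element by element.
pub, priv = keygen()
w = [3, -2, 4]
enc_w = [encrypt(pub, x) for x in w]

# Bob: correlate against his (plaintext) song without seeing w.
song = [0, 3, -2, 4, 1, -1, 0, 2]
enc_corr = encrypted_correlation(pub, enc_w, song, 1)

# Only Alice can read the result.
print(decrypt(pub, priv, enc_corr))
```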

33

Solving Alice’s Problem - 2

• Given the encrypted snippet Enc[W] from Alice
• Bob computes Enc[Corr(S,W,t)] for all S, and all t
  o i.e. he computes the correlation of Alice’s snippet with all of his songs at all lags
  o The correlations are encrypted, however
• Bob only has to find which Corr(S,W,t) is the highest
  o And send the metadata for the corresponding S to Alice
• Except, Bob cannot find $\arg\max_S \max_t \mathrm{Corr}(S,W,t)$
  o He only has encrypted correlation values

34

Bob Solves the Problem

• Bob ships the encrypted correlations back to Alice
  o She can decrypt them all, find the maximum value, and send the index back to Bob
  o Bob retrieves the song corresponding to the index
  o Problem – Bob effectively sends his catalogue over to Alice
• Problems for Bob
  o Alice may be a competitor pretending to be a customer
    – She is phishing to find out what Bob has in his catalogue
  o She can find out what songs Bob has by comparing the correlations against those from a catalog of her own
    – Even if Bob sends the correlations in permuted order

35

The SMAX protocol

• Alice sends her public key to Bob
• Bob adds a random number to each correlation
  o $\mathrm{Enc}[\mathrm{Corr}_{noisy}(W,S,t)] = \mathrm{Enc}[\mathrm{Corr}(W,S,t)] \cdot \mathrm{Enc}[r] = \mathrm{Enc}[\mathrm{Corr}(W,S,t) + r]$
  o A different random number corrupts each correlation
• Bob permutes the noisy correlations and sends the permuted correlations to Alice
  o Bob → Alice: $P(\mathrm{Enc}[\mathrm{Corr}_{noisy}(W,S,t)])$ for all S, t
    – P is a permutation
• Alice can decrypt them to get $P(\mathrm{Corr}_{noisy}(W,S,t))$
• Bob retains $P(-r)$, i.e. the permuted set of random numbers

36

The SMAX protocol

• Bob has $P(-r)$, Alice has $P(\mathrm{Corr}(W,S,t)+r)$
• Bob generates a public/private key pair, encrypts $P(-r)$, and sends it along with his public key to Alice
  o Bob → Alice: $\mathrm{Enc}_{Bob}[P(-r)]$
• Alice encrypts the noisy correlations she has and “adds” them to what she got from Bob:
  o $\mathrm{Enc}_{Bob}[P(\mathrm{Corr}(W,S,t)+r)] \cdot \mathrm{Enc}_{Bob}[P(-r)] = P\,\mathrm{Enc}_{Bob}[\mathrm{Corr}(W,S,t)]$
• Alice now has encrypted and permuted correlations.

37

The SMAX protocol

• Alice now has encrypted and permuted correlations: $P\,\mathrm{Enc}_{Bob}[\mathrm{Corr}(W,S,t)]$
• Alice adds a random number q:
  o $P\,\mathrm{Enc}_{Bob}[\mathrm{Corr}(W,S,t)] \cdot \mathrm{Enc}_{Bob}[q] = P\,\mathrm{Enc}_{Bob}[\mathrm{Corr}(W,S,t)+q]$
    – The same number q corrupts everything
• Alice permutes everything with her own permutation scheme and ships it all back to Bob:
  o Alice → Bob: $P_{Alice}\,P\,\mathrm{Enc}_{Bob}[\mathrm{Corr}(W,S,t)+q]$
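The blinding rounds up to this point can be simulated with two toy Paillier key pairs. The sketch below is an illustration under several assumptions: small primes, non-negative correlations, helper names invented here, and both parties run inside one function. The composed permutation is computed only to check the result and is not something either party would hold in the protocol.

```python
import math
import random


def keygen(p, q):
    n = p * q
    lam = (p - 1) * (q - 1)
    return (n, n + 1), (lam, pow(lam, -1, n))


def enc(pub, m):
    n, g = pub
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(g, m % n, n2) * pow(r, n, n2)) % n2


def dec(pub, priv, u):
    n, _ = pub
    lam, mu = priv
    return ((pow(u, lam, n * n) - 1) // n * mu) % n


def smax_rounds(corrs, seed=0):
    """Run the blinding rounds; return Bob's view, q, and the true argmax."""
    rng = random.Random(seed)
    a_pub, a_priv = keygen(1009, 1013)   # Alice's toy keys
    b_pub, b_priv = keygen(1019, 1021)   # Bob's toy keys
    a_n2, b_n2 = a_pub[0] ** 2, b_pub[0] ** 2

    # Bob holds Enc_Alice[Corr]; produced directly here for brevity.
    enc_corrs = [enc(a_pub, c) for c in corrs]

    # Bob: add a different r to each correlation, then permute.
    r = [rng.randrange(1, 1000) for _ in corrs]
    noisy = [(c * enc(a_pub, ri)) % a_n2 for c, ri in zip(enc_corrs, r)]
    perm_bob = list(range(len(corrs)))
    rng.shuffle(perm_bob)
    to_alice = [noisy[j] for j in perm_bob]
    neg_r = [enc(b_pub, -r[j]) for j in perm_bob]   # Enc_Bob[P(-r)]

    # Alice: decrypt, re-encrypt under Bob's key, cancel r, add q, permute.
    plain_noisy = [dec(a_pub, a_priv, u) for u in to_alice]
    q = rng.randrange(1, 1000)
    shifted = [(enc(b_pub, v) * nr * enc(b_pub, q)) % b_n2
               for v, nr in zip(plain_noisy, neg_r)]
    perm_alice = list(range(len(corrs)))
    rng.shuffle(perm_alice)
    to_bob = [shifted[j] for j in perm_alice]

    # Bob: decrypt and locate the largest value (in doubly permuted order).
    values = [dec(b_pub, b_priv, u) for u in to_bob]
    i_max = max(range(len(values)), key=values.__getitem__)

    # Checking only: map the permuted index back to the original order.
    true_index = perm_bob[perm_alice[i_max]]
    return values, q, true_index


values, q, true_index = smax_rounds([12, 29, 7, 10])
print(sorted(v - q for v in values), true_index)
```

Bob sees every correlation shifted by the same unknown q and in an order he cannot undo on his own, so the largest entry is still identifiable while the actual values and positions are hidden from him.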

38

The SMAX protocol

• Bob has: $P_{Alice}\,P\,\mathrm{Enc}_{Bob}[\mathrm{Corr}(W,S,t)+q]$
• Bob decrypts them and finds the index of the largest value, $I_{max}$
• Bob unpermutes $I_{max}$ and sends the result to Alice:
  o Bob → Alice: $P^{-1} I_{max}$
• Alice unpermutes it with her own permutation to get the true index of the song in Bob’s catalogue
  o $P_{Alice}^{-1} P^{-1} I_{max} = Idx$

Note: The above technique is insecure; in reality, the process is more involved

• Alice finally has the index in Bob’s catalogue for her song

39

Retrieving the Song

• Alice cannot simply send the index of the best song to Bob
  o It will tell Bob which song it is
    – The song may not be available for public download
    – i.e. Alice’s snippet is illegal

i.e.

40


41

Retrieving Alice’s song: OT

• Alice sends Enc[Idx] to Bob (and public key)
  o Alice → Bob: Enc[Idx]
• For each song $S_i$, Bob computes
  o $\mathrm{Enc}[M(S_i)] \cdot (\mathrm{Enc}[-i] \cdot \mathrm{Enc}[Idx])^{r_i} = \mathrm{Enc}[M(S_i) + r_i(Idx - i)]$
    – $M(S_i)$ is the metadata for $S_i$; $r_i$ is a random number chosen by Bob
  o Bob sends this to Alice for every song
  o Bob → Alice: $\mathrm{Enc}[M(S_i) + r_i(Idx - i)]$
• Alice only decrypts the Idx-th entry to get $M(S_{Idx})$
  o Decrypting the rest is pointless
    – Decrypting any other entry will give us $M(S_i) + r_i(Idx - i)$
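The masking step above can be tried out with a toy Paillier implementation. In this sketch the metadata are small integers (for example song IDs), the primes are tiny illustrative values, and all function names are assumptions introduced here.

```python
import math
import random


def keygen(p=1009, q=1013):
    n = p * q
    lam = (p - 1) * (q - 1)
    return (n, n + 1), (lam, pow(lam, -1, n))


def enc(pub, m):
    n, g = pub
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(g, m % n, n2) * pow(r, n, n2)) % n2


def dec(pub, priv, u):
    n, _ = pub
    lam, mu = priv
    return ((pow(u, lam, n * n) - 1) // n * mu) % n


def mask_catalogue(pub, enc_idx, metadata):
    """Bob's side: entry i becomes Enc[M(S_i) + r_i * (Idx - i)]."""
    n, _ = pub
    n2 = n * n
    masked = []
    for i, m in enumerate(metadata):
        diff = (enc(pub, -i) * enc_idx) % n2          # Enc[Idx - i]
        r_i = random.randrange(1, n)                  # fresh mask per song
        masked.append((enc(pub, m) * pow(diff, r_i, n2)) % n2)
    return masked


# Alice: encrypt the index she wants and send it with her public key.
pub, priv = keygen()
idx = 2
enc_idx = enc(pub, idx)

# Bob: mask every metadata entry without learning idx.
metadata = [5001, 5002, 5003, 5004]   # hypothetical integer song IDs
masked = mask_catalogue(pub, enc_idx, metadata)

# Alice: only the idx-th entry decrypts to real metadata.
print(dec(pub, priv, masked[idx]))
```

For every other entry the term r_i(Idx - i) is non-zero, so decryption yields the metadata shifted by an amount only Bob could remove.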

42

Unrealistic solution

• The proposed method is computationally unrealistic
  o Public key encryption is very slow
  o Repeated shipping of entire catalog
    – Can be simplified to one shipping of catalog
    – Still inefficient
• Illustrates a few primitives
  o SIP: Secure inner product; computing dot products of private data
  o SMAX: Finding the index of the largest entry without revealing entries
    – Also possible – SVAL: Finding the largest entry (and nothing else)
  o OT (oblivious transfer) as a mechanism for retrieving information
• Have ignored a few issues..

43

Security Picture

• Assumption: Honest but curious
• What do they gain by being malicious?
  o Bob: Manipulating numbers
    – Can prevent Alice from learning what she wants
    – Bob learns nothing of Alice’s snippet
  o Alice:
    – Can prevent herself from learning the identity of her song
• Key Assumption:
  o Sufficient if malicious activity does not provide immediate benefit to agent

44

Pattern matching for voice: Basics

[Figure: Feature Computation → Pattern Matching]

• The basic approach includes
  o A feature computation module
    – Derives features from the audio
  o A pattern matching module
    – Stores “models” for the various classes
• Assumption: Feature computation performed by entity holding voice data

45

Pattern matching in Audio: The basic tools

• Gaussian mixtures
  o Class distributions are generally modelled as Gaussian mixtures
  o “Score” computation is equivalent to computing log likelihoods
    – Rather than computing correlations
• Hidden Markov models
  o Time series models are usually Hidden Markov models
    – With Gaussian mixture distributions as state output densities
  o Conceptually not substantially more complex than Gaussian mixtures

46

Computing a log Gaussian

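• The Gaussian likelihood for a vector $X$, given a Gaussian with mean $\mu$ and covariance $\Theta$, is given by

$$G(X; \mu, \Theta) = \frac{1}{\sqrt{(2\pi)^D |\Theta|}} \exp\left(-0.5\,(X-\mu)^T \Theta^{-1} (X-\mu)\right)$$

• The log Gaussian is given by

$$\log G(X; \mu, \Theta) = -0.5\,(X-\mu)^T \Theta^{-1} (X-\mu) + C, \qquad C = -0.5 \log\left((2\pi)^D |\Theta|\right)$$

• With a little arithmetic manipulation, we can write

$$\log G(X; \mu, \Theta) = [X^T\ 1] \begin{bmatrix} -0.5\,\Theta^{-1} & \Theta^{-1}\mu \\ 0 & w^* \end{bmatrix} \begin{bmatrix} X \\ 1 \end{bmatrix} = [X^T\ 1]\, \tilde{W} \begin{bmatrix} X \\ 1 \end{bmatrix} = \tilde{X}^T \tilde{W} \tilde{X}$$

where $\tilde{X} = [X^T\ 1]^T$ and $w^* = C - 0.5\,\mu^T \Theta^{-1} \mu$ collects the terms that do not depend on $X$.

• Which can be further modified to

$$\log G(X; \mu, \Theta) = \bar{W}^T \bar{X}$$

  o $\bar{W}$ is a vector containing all the components of $\tilde{W}$
  o $\bar{X}$ is a vector containing all pairwise products $\tilde{X}_i \tilde{X}_j$
• In other words, the log Gaussian is an inner product
  o If Bob has parameters $\bar{W}$ and Alice has data $X$, they can compute the log Gaussian without exposing the data using SIP
  o By extension, can compute log Mixture Gaussians privately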
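The rewriting of the log Gaussian as an inner product can be checked numerically. The sketch below does so for a 2-dimensional Gaussian in plain floating point; the function names and test values are assumptions for the example. An encrypted version would additionally need the values scaled to integers, which is not shown.

```python
import math


def inverse_2x2(cov):
    (a, b), (c, d) = cov
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]], det


def log_gaussian(x, mean, cov):
    """Direct evaluation: -0.5 (x-mean)^T cov^{-1} (x-mean) + C."""
    inv, det = inverse_2x2(cov)
    dx = [x[0] - mean[0], x[1] - mean[1]]
    quad = sum(dx[i] * inv[i][j] * dx[j] for i in range(2) for j in range(2))
    return -0.5 * quad - 0.5 * math.log((2 * math.pi) ** 2 * det)


def weight_vector(mean, cov):
    """Bob's side: flatten the 3x3 parameter matrix into a 9-vector."""
    inv, det = inverse_2x2(cov)
    inv_mean = [sum(inv[i][j] * mean[j] for j in range(2)) for i in range(2)]
    const = (-0.5 * sum(mean[i] * inv_mean[i] for i in range(2))
             - 0.5 * math.log((2 * math.pi) ** 2 * det))
    matrix = [
        [-0.5 * inv[0][0], -0.5 * inv[0][1], inv_mean[0]],
        [-0.5 * inv[1][0], -0.5 * inv[1][1], inv_mean[1]],
        [0.0, 0.0, const],
    ]
    return [matrix[i][j] for i in range(3) for j in range(3)]


def feature_vector(x):
    """Alice's side: all pairwise products of the extended vector [x, 1]."""
    ext = [x[0], x[1], 1.0]
    return [ext[i] * ext[j] for i in range(3) for j in range(3)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


x = [0.3, -1.2]
mean = [1.0, 0.5]
cov = [[2.0, 0.3], [0.3, 1.0]]
print(log_gaussian(x, mean, cov), dot(weight_vector(mean, cov), feature_vector(x)))
```

The two printed numbers agree up to rounding, which is what lets a secure inner product stand in for the likelihood computation.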

48

Keyword Spotting

• Very similar to song matching

• The “snippet” is now the keyword

• A “Song” is now a recording

• Instead of finding a best match, we must find every recording in which a “correlation” score exceeds a threshold
• Complexity is comparable to song retrieval
  o Actually worse
    – We will use HMMs instead of sample sequences and correlations are replaced by best-state-sequence scores
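As a simplified stand-in for the HMM-based scoring mentioned above, the thresholding idea can be illustrated with plain sample correlations. The function name, the toy recording and the threshold are assumptions made for this sketch.

```python
def spot_keyword(keyword, recording, threshold):
    """Return every lag at which the correlation score exceeds the threshold."""
    hits = []
    for t in range(len(recording) - len(keyword) + 1):
        corr = sum(k * recording[t + i] for i, k in enumerate(keyword))
        if corr > threshold:
            hits.append(t)
    return hits


keyword = [3, -2, 4]
recording = [0, 3, -2, 4, 1, 3, -2, 4]   # the keyword occurs at lags 1 and 5

print(spot_keyword(keyword, recording, threshold=25))
```

Unlike song matching, every lag above the threshold is reported rather than only the best one, so the choice of threshold trades missed detections against false alarms.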

49

A Biometric Problem: Authentication

• Passwords and text-based forms of authentication are physically insecure
  o Passwords may be stolen, guessed etc.
• Biometric authentication is based on “non-stealable” signatures
  o Fingerprints
  o Iris
  o Voice
    – We will ignore the problem of voice mimicry..

50

Voice Authentication

• User identifies himself/herself
  o May also authenticate himself/herself via text-based authentication
• System issues a challenge
  o User often asked to say a chosen sentence
  o Alternately, User may say anything
• System compares voiceprint to stored model for user
  o And authenticates user if print matches.

51

Authentication

• Most common approach
  o Gaussian mixture distribution for User
    – Learned from enrollment data recorded by the user
  o Gaussian mixture distribution for “background”
    – Learned from a large number of speakers
  o Compare log likelihoods of “speaker” Gaussian mixture with “background” Gaussian mixture to authenticate
• Privately done:
  o Compute all log likelihoods on encrypted data E[X]
  o Engage in a protocol to determine which is higher
    – To authenticate the user without “seeing” the user’s data
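The non-private version of this comparison can be sketched as follows. The example uses scalar features and hand-picked mixture parameters purely for illustration; the function names, the threshold and all numbers are assumptions, and real systems use multi-dimensional features with trained models.

```python
import math


def log_gmm(x, weights, means, variances):
    """Log likelihood of a scalar x under a 1-D Gaussian mixture."""
    terms = [
        math.log(w) - 0.5 * math.log(2 * math.pi * v) - 0.5 * (x - m) ** 2 / v
        for w, m, v in zip(weights, means, variances)
    ]
    top = max(terms)                     # log-sum-exp for numerical stability
    return top + math.log(sum(math.exp(t - top) for t in terms))


def authenticate(frames, speaker, background, threshold=0.0):
    """Accept if the speaker model beats the background model."""
    speaker_ll = sum(log_gmm(x, *speaker) for x in frames)
    background_ll = sum(log_gmm(x, *background) for x in frames)
    return speaker_ll - background_ll > threshold


# Hypothetical models: (weights, means, variances).
speaker = ([0.5, 0.5], [1.0, 3.0], [0.25, 0.25])
background = ([0.5, 0.5], [-2.0, 6.0], [4.0, 4.0])

print(authenticate([1.1, 2.9, 0.8, 3.2], speaker, background))    # genuine-like
print(authenticate([-2.2, 6.1, -1.5, 5.8], speaker, background))  # imposter-like
```

In the private version the two summed log likelihoods would be computed on encrypted features and compared through a protocol rather than in the clear.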

52

Private Voice Authentication

• Protecting user’s voice is perhaps less critical
  o Actually opens up security issues not present in the “open” mechanism
    – OT can be manipulated and must be protected from malicious user
  o May be useful for surveillance-type scenarios
    – The NSA problem
• Bigger problem
  o The “authenticating” site may be phishing
    – Using the learned speaker models to synthesize fake recordings for the speaker
    – To spoof the speaker at other voice authentication sites

53

Private Voice Authentication

• Assumption:
  o User is using a client on a computer or a smartphone
    – Which can be used for encryption and decryption
• Protocol for modified learning procedure:
  o Learn the user model from encrypted recordings
    – The system finally obtains a set of encrypted models
    – Cannot decrypt the models
      – Unable to Phish
• Assumption 2:
  o Motivated user: will not provide fake data during enrollment

54

Authenticating with encrypted models

• Authentication procedure changes
  o System can no longer compute dot products on encrypted data
    – Since models are themselves encrypted
• User must perform some of the operations
  o Which will be seen without observing partial results
• Malicious user
  o Imposter who has stolen Alice’s smartphone
    – Has her encryption keys!
    – Can manipulate data sent by Alice during shared computation

55

Malicious User

• User intends to break into the system
  o Is happy if probability of false acceptance increases even just slightly
    – Improves his chance of breaking in
• System:
  o Is satisfied if manipulating partial results of computation results in false acceptance rate no greater than that obtained with random data

56

Protecting from Malicious User

• Modify likelihood computation
• Instead of computing
  o $L(X, W_{speaker})$, and
  o $L(X, W_{background})$
• Compute
  o $J(X, g(W_{speaker}, W_{background}))$
  o $J(X, h(W_{speaker}, W_{background}))$
• System “unravels” these to obtain $\mathrm{Enc}[L(X, W_{speaker})]$ and $\mathrm{Enc}[L(X, W_{background})]$
  o $P(L(X, W_{speaker}) > L(X, W_{background}))$ comparable to that for random $X$ if user manipulates intermediate results
• Finally engages in a millionaire protocol designed for malicious users to decide whether the user is authentic

57

Speaker Authentication

• Ignoring many issues w.r.t. malicious user

• Computation overhead large

• Signal processing techniques: Simple, but insecure

• Cryptographic methods: Secure, but complex

o

Is there a golden mean?

58

An Efficient Mechanism

• Find a method to convert voice recording to a sequence of symbols
• Sequence must be such that:
  o The sequence s(X) for any recording X must belong to a small set S of sequences with high probability if the speaker is the target speaker
  o The sequence must, with high probability, not belong to S if the speaker is not the target speaker
• Reduces to conventional password approach
  o S is the set of passwords for the speaker
    – Any of which can authenticate him

59

Converting speaker’s audio to symbol sequence

• Is as secure as conventional password system
  o Speaker’s client converts voice to symbol sequence
  o Symbol sequence shipped to system as password
  o Speaker’s “client” maintains no record of valid set of passwords
    – Cannot be hacked
  o System only stores encrypted passwords
    – Cannot voice-phish
• Can improve false alarm/false rejection by using multiple mappings..
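The server side of such a scheme can be sketched with the standard library. Here salted PBKDF2 hashing stands in for the "encrypted passwords" mentioned above, which is an assumption of this sketch, as are the function names, the fixed demo salt and the integer symbol sequences.

```python
import hashlib
import hmac


def digest(sequence, salt):
    """Hash a symbol sequence the way a password would be hashed."""
    data = ",".join(str(symbol) for symbol in sequence).encode("utf-8")
    return hashlib.pbkdf2_hmac("sha256", data, salt, 10_000)


def enroll(valid_sequences, salt):
    """System side: keep only the hashes of the speaker's valid set S."""
    return {digest(seq, salt) for seq in valid_sequences}


def verify(sequence, salt, stored):
    """Accept if the presented sequence hashes to any stored entry."""
    candidate = digest(sequence, salt)
    return any(hmac.compare_digest(candidate, d) for d in stored)


salt = b"demo-salt"   # a real system would use a random per-user salt
stored = enroll([(1, 4, 2, 2), (1, 4, 3, 2)], salt)

print(verify((1, 4, 3, 2), salt, stored))   # sequence from the valid set
print(verify((2, 4, 3, 2), salt, stored))   # sequence outside the set
```

Because matching is exact, the voice-to-symbol mapping on the client has to be stable enough that the target speaker lands in the small set S almost every time.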

60

Converting the Speaker’s voice to Symbol Sequences

• Technique 1:
  o A generic HMM that represents all speech
  o Most likely state sequences are “password” set for speaker
• Learn model parameters to be optimal for speaker

61

Converting the Speaker’s voice to Symbol Sequences

• Technique 2:
  o Locality sensitive Hashing
• Hashes are “passwords”
  o Hashing must be learned to have desired password properties
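For readers unfamiliar with locality sensitive hashing, one common textbook variant uses the signs of random projections. The sketch below shows that variant only; the slides call for a learned hash, which this does not implement, and the function names and parameters are assumptions.

```python
import random


def make_hyperplanes(dim, bits, seed=0):
    """Draw `bits` random hyperplanes in `dim` dimensions."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bits)]


def lsh_key(vector, hyperplanes):
    """One bit per hyperplane: which side of it the vector falls on."""
    bits = []
    for plane in hyperplanes:
        projection = sum(h * v for h, v in zip(plane, vector))
        bits.append("1" if projection >= 0 else "0")
    return "".join(bits)


planes = make_hyperplanes(dim=4, bits=16)
feature = [0.8, -0.1, 0.3, 1.2]      # hypothetical voice feature vector
nearby = [0.81, -0.1, 0.3, 1.19]     # slightly perturbed version

key_a = lsh_key(feature, planes)
key_b = lsh_key(nearby, planes)
print(key_a, key_b, sum(a != b for a, b in zip(key_a, key_b)))
```

Vectors separated by a small angle agree on most bits, so nearby features tend to produce the same key; a learned hash would aim to make a speaker's features collide on a small set of keys while keeping other speakers out.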

62

Other voice problems

• Problems currently being addressed:

• Keyword detection (for mining)

• Authentication

• Speaker identification

• Speech recognition is a much tougher problem
  o Infinite class classification
  o Requires privacy-preserving graph search

63

Work in progress

• Efficient / practical authentication schemes

• Efficient/practical computational schemes for voice processing
  o On the side: Investigations into privacy preserving classification schemes
  o Differential privacy / combining DP and SMC
• Better signal processing schemes
• Database privacy: Issues and solutions..

• QUESTIONS?

64