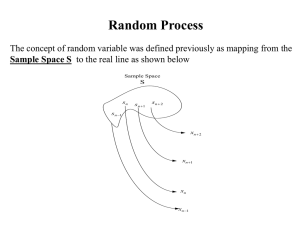

Random Process

The concept of a random variable was defined previously as a mapping from the sample space S to the real line.

[Figure: outcomes $s_{n-2}, s_{n-1}, s_n, s_{n+1}$ in the sample space S mapped to points $x_{n-2}, x_{n-1}, x_n, x_{n+1}$ on the real line]

The concept can be extended to include time, so that each outcome maps to a function of time rather than to a number. The result is a random process. The functions $x_{n-2}(t), x_{n-1}(t), x_n(t), x_{n+1}(t), \ldots$ are a few of the many realizations of the random process $X(t)$.

A random process also represents a random variable when time is fixed: $X(t_1)$ is a random variable.

Stationarity and Independence

The random process $X(t)$ can be classified as follows.

First-order stationary

A random process is first-order stationary if its first-order probability density function is unchanged by any shift of the time origin. If we let $X_{t_1}$ represent the value of the process at time $t_1$, then a first-order stationary process satisfies

$$f_X(x_{t_1}) = f_X(x_{t_1+\tau})$$

The physical significance of this equation is that the density function $f_X(x_{t_1})$ is completely independent of $t_1$ and therefore of any time shift $\tau$. For a first-order stationary process the mean is a constant, independent of any time shift.

Second-order stationary

A random process is second-order stationary if its second-order probability density function does not change when the same time shift is applied to both values. In other words, for values $X_{t_1}$ and $X_{t_2}$ the following holds for an arbitrary time shift $\tau$:

$$f_X(x_{t_1}, x_{t_2}) = f_X(x_{t_1+\tau}, x_{t_2+\tau})$$

From this equation we see that absolute time does not affect the density; it depends only on the time difference between the two variables. For a second-order stationary process, we need to look at the autocorrelation function (presented later) to see its most important property.
Since we have already stated that a second-order stationary process depends only on the time difference, all processes of this type have the following property:

$$R_{XX}(t, t+\tau) = E[X(t)X(t+\tau)] = R_{XX}(\tau)$$

Wide-Sense Stationary (WSS)

A process that satisfies the following two conditions is wide-sense stationary (WSS):

$$E[X(t)] = \bar{X} = \text{constant}$$

$$E[X(t)X(t+\tau)] = R_{XX}(\tau)$$

Second-order stationarity implies wide-sense stationarity. The converse is not true in general.

Time Average and Ergodicity

Ergodicity: an attribute of stochastic systems; generally, a system that tends in probability to a limiting form that is independent of the initial conditions.

The time average of a quantity is defined as

$$A[\,\cdot\,] = \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} [\,\cdot\,]\, dt$$

Here $A$ denotes the time average in a manner analogous to $E$ for the statistical average. The time average is taken over all time because, as applied to random processes, sample functions of processes are presumed to exist for all time.

Let $x(t)$ be a sample function of the random process $X(t)$, where the lowercase letter indicates a sample function (not a random function): $X(t)$ is the random process and $x(t)$ is one sample of it.

We define the mean value $\bar{x} = A[x(t)]$ and the time autocorrelation function $\mathcal{R}_{XX}(\tau)$ as follows:

$$\bar{x} = A[x(t)] = \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} x(t)\, dt$$

$$\mathcal{R}_{XX}(\tau) = A[x(t)x(t+\tau)] = \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} x(t)x(t+\tau)\, dt$$

For any one sample function (i.e., $x(t)$) of the random process $X(t)$, the last two integrals simply produce two numbers.
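As a rough numerical illustration of the two time averages, the sketch below approximates both integrals for a single sample function. The example process, a random-phase cosine $x(t) = A\cos(\omega_0 t + \theta)$, and all parameter values are assumptions chosen for illustration and do not come from the slides. The limit $T\to\infty$ is replaced by a finite window and the integral by a midpoint sum.

```python
import math
import random

def time_average(x, T, n=20000):
    """Approximate (1/2T) * integral of x(t) over [-T, T] with a midpoint sum."""
    dt = 2.0 * T / n
    total = 0.0
    for k in range(n):
        t = -T + (k + 0.5) * dt
        total += x(t)
    return total * dt / (2.0 * T)

def time_autocorrelation(x, tau, T, n=20000):
    """Approximate the time average of x(t) * x(t + tau) over [-T, T]."""
    return time_average(lambda t: x(t) * x(t + tau), T, n)

# Hypothetical sample function: one draw of the phase fixes one realization.
A = 2.0
w0 = 2.0 * math.pi                        # 1 Hz, so the period is 1 second
rng = random.Random(0)                    # fixed seed for repeatability
theta = rng.uniform(0.0, 2.0 * math.pi)

def x(t):
    return A * math.cos(w0 * t + theta)

T = 50.0                                  # finite window standing in for T -> infinity
x_bar = time_average(x, T)                # one number: the time-average mean
r_0 = time_autocorrelation(x, 0.0, T)     # one number: time autocorrelation at tau = 0
r_quarter = time_autocorrelation(x, 0.25, T)

print(f"x_bar = {x_bar:.6f}, R(0) = {r_0:.6f}, R(0.25) = {r_quarter:.6f}")
```

For this particular sample function the averages should come out close to 0 for the mean and close to $(A^2/2)\cos(\omega_0\tau)$ for the time autocorrelation, whatever phase was drawn.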
For a given sample function, the average $\bar{x}$ is one number and $\mathcal{R}_{XX}(\tau)$, at a specific value of $\tau$, is another. Since the sample function $x(t)$ is only one of the many sample functions of the random process $X(t)$, the average $\bar{x}$ and the time autocorrelation $\mathcal{R}_{XX}(\tau)$ are actually random variables. Taking the expected value of $\bar{x}$ and $\mathcal{R}_{XX}(\tau)$ for a wide-sense stationary process, we obtain

$$
\begin{aligned}
E[\bar{x}] = E\big[A[x(t)]\big] &= E\left[\lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} x(t)\, dt\right]
= \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} E[x(t)]\, dt \\
&= \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} \bar{X}\, dt
= \bar{X} \lim_{T\to\infty} (1) = \bar{X}
\end{aligned}
$$

$$
\begin{aligned}
E[\mathcal{R}_{XX}(\tau)] = E\big[A[x(t)x(t+\tau)]\big] &= E\left[\lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} x(t)x(t+\tau)\, dt\right] \\
&= \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} E[x(t)x(t+\tau)]\, dt
= \lim_{T\to\infty} \frac{1}{2T}\int_{-T}^{T} R_{XX}(\tau)\, dt = R_{XX}(\tau)
\end{aligned}
$$

Correlation Function

Autocorrelation Function and Its Properties

The autocorrelation function of a random process $X(t)$ is the correlation $E[X_1 X_2]$ of the two random variables $X_1 = X(t_1)$ and $X_2 = X(t_2)$ defined by the process at times $t_1$ and $t_2$:

$$R_{XX}(t_1, t_2) = E[X(t_1)X(t_2)]$$

Assuming a second-order stationary process,

$$R_{XX}(t, t+\tau) = E[X(t)X(t+\tau)] = R_{XX}(\tau)$$

Linear System with Random Input

In applications of random processes, the input-output relation through a linear system can be described as follows:

[Block diagram: $X(t)$ → linear system $h(t)$ → $Y(t) = X(t) * h(t)$]

Here $X(t)$ is a random process and $h(t)$, a deterministic function, is the impulse response of the linear system (a filter or any other linear system).

Now we can look at the input-output relation as follows.

The Time Domain

The output in the time domain is the convolution of the input random process $X(t)$ and the impulse response $h(t)$:

$$Y(t) = \int_{-\infty}^{\infty} X(\xi)h(t-\xi)\, d\xi = \int_{-\infty}^{\infty} h(\xi)X(t-\xi)\, d\xi$$

Question: Can you evaluate this convolution integral?

Answer: We cannot evaluate this convolution integral in general, because $X(t)$ is random and there is no mathematical expression for $X(t)$.
The Frequency Domain

The output in the frequency domain is the product of the Fourier transform of the input random process $X(t)$, $F_X(f)$, and the Fourier transform of the impulse response $h(t)$, $H(f)$:

[Block diagram: $F_X(f)$ → linear system $H(f)$ → $F_Y(f) = F_X(f)H(f)$]

$$F_X(f) = \int_{-\infty}^{\infty} X(t)\, e^{-j2\pi f t}\, dt$$

The Fourier transform of the input random process $X(t)$ is itself a random process.

$$H(f) = \int_{-\infty}^{\infty} h(t)\, e^{-j2\pi f t}\, dt$$

This is the Fourier transform of the deterministic impulse response.

$$F_Y(f) = F_X(f)H(f)$$

The Fourier transform of the output random process $Y(t)$ is also a random process.

Question: Can you evaluate the Fourier transform of the input random process $X(t)$, $F_X(f)$?

Answer: In general no, since $X(t)$ is random and has no mathematical expression.

Question: How, then, can we describe the behavior of the input random process and the output random process through a linear time-invariant system?

We defined previously the autocorrelation $R_{XX}(\tau)$ as

$$R_{XX}(\tau) = E[X(t)X(t+\tau)]$$

The autocorrelation tells us how the random process varies: whether it is a slowly varying process or a rapidly varying one.
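The remark about slow and fast variation can be made concrete with a small discrete-time sketch. Both example processes below are assumptions for illustration and are not taken from the slides: white Gaussian noise stands in for a rapidly varying process, and a first-order recursion stands in for a slowly varying one. The expectation is estimated by averaging along one long sample sequence, which additionally assumes the processes are ergodic.

```python
import random

def autocorr_estimate(x, k):
    """Estimate R_XX[k] = E[X[n] X[n+k]] by averaging over one long sample sequence."""
    n = len(x) - k
    return sum(x[i] * x[i + k] for i in range(n)) / n

rng = random.Random(1)   # fixed seed so the run is repeatable
N = 20000

# Rapidly varying process: independent zero-mean Gaussian samples (white noise).
fast = [rng.gauss(0.0, 1.0) for _ in range(N)]

# Slowly varying process: x[n] = a * x[n-1] + w[n] (hypothetical choice of model and a).
a = 0.95
slow = []
prev = 0.0
for _ in range(N):
    prev = a * prev + rng.gauss(0.0, 1.0)
    slow.append(prev)

lag = 5
# Normalize by R_XX[0] so that both processes are compared on the same scale.
rho_fast = autocorr_estimate(fast, lag) / autocorr_estimate(fast, 0)
rho_slow = autocorr_estimate(slow, lag) / autocorr_estimate(slow, 0)
print(f"normalized autocorrelation at lag {lag}: fast={rho_fast:.3f}, slow={rho_slow:.3f}")
```

The white-noise estimate should sit near zero, while the recursive process should stay strongly correlated at the same lag (in theory about $0.95^5 \approx 0.77$). A slowly decaying autocorrelation is the signature of a slowly varying process.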
Next we define another function that helps us examine the behavior of the random process. Let $S_{XX}(f)$ (or $S_{XX}(\omega)$) be the Fourier transform of $R_{XX}(\tau)$:

$$S_{XX}(f) = \int_{-\infty}^{\infty} R_{XX}(\tau)\, e^{-j2\pi f\tau}\, d\tau
\qquad
R_{XX}(\tau) = \int_{-\infty}^{\infty} S_{XX}(f)\, e^{j2\pi f\tau}\, df$$

or

$$S_{XX}(\omega) = \int_{-\infty}^{\infty} R_{XX}(\tau)\, e^{-j\omega\tau}\, d\tau
\qquad
R_{XX}(\tau) = \frac{1}{2\pi}\int_{-\infty}^{\infty} S_{XX}(\omega)\, e^{j\omega\tau}\, d\omega$$

Since

$$R_{XX}(0) = \int_{-\infty}^{\infty} S_{XX}(f)\, df = E[X(t)X(t)] = E[X^2(t)] = \text{average power},$$

$S_{XX}(f)$ is the power spectral density (PSD) of the random process $X(t)$.

Properties of PSD

(1) $S_{XX}(f) \ge 0$ for all $f$ (power is never negative).

(2) $S_{XX}(-f) = S_{XX}(f)$: the PSD is an even function ($X(t)$ real implies $R_{XX}(\tau)$ is real).

(3) $S_{XX}(f)$ is real, since $R_{XX}(\tau)$ is an even function.

(4) $R_{XX}(0) = \int_{-\infty}^{\infty} S_{XX}(f)\, df = E[X^2(t)]$: total power.

(5) $S_{XX}(0) = \int_{-\infty}^{\infty} R_{XX}(\tau)\, d\tau$: DC power (power at zero frequency).

Now let us look at the input-output linear system in the time domain and the frequency domain, assuming the random process $X(t)$ is WSS.

Time Domain

[Block diagram: $X(t)$ → linear system $h(t)$ → $Y(t) = X(t) * h(t)$. $X(t)$ and $Y(t)$ are random functions. $\bar{X}$, $R_{XX}(\tau)$, $\bar{Y} = \bar{X}H(0)$ and $R_{YY}(\tau)$ are non-random, deterministic functions.]

The mean of the output:

$$
E[Y(t)] = E\left[\int_{-\infty}^{\infty} h(\xi)X(t-\xi)\, d\xi\right]
= \int_{-\infty}^{\infty} h(\xi)\,E[X(t-\xi)]\, d\xi
= \bar{X}\int_{-\infty}^{\infty} h(\xi)\, d\xi = \bar{X}H(0) = \bar{Y} = \text{constant}
$$

The mean is constant, and the autocorrelation of the output is a function of $\tau$ only:

$$R_{YY}(\tau) = R_{XX}(\tau) * h(-\tau) * h(\tau)$$

The autocorrelation of the output:

$$
\begin{aligned}
R_{YY}(t, t+\tau) &= E[Y(t)Y(t+\tau)] \\
&= E\left[\int_{-\infty}^{\infty} h(\xi_1)X(t-\xi_1)\, d\xi_1 \int_{-\infty}^{\infty} h(\xi_2)X(t+\tau-\xi_2)\, d\xi_2\right] \\
&= \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} E[X(t-\xi_1)X(t+\tau-\xi_2)]\, h(\xi_1)h(\xi_2)\, d\xi_1\, d\xi_2 \\
&= \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} R_{XX}(\tau+\xi_1-\xi_2)\, h(\xi_1)h(\xi_2)\, d\xi_1\, d\xi_2 \\
&= R_{XX}(\tau) * h(-\tau) * h(\tau)
\end{aligned}
$$

Since the mean of $Y(t)$ is constant and its autocorrelation depends only on $\tau$, $Y(t)$ is WSS.
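The two output results, $\bar{Y} = \bar{X}H(0)$ and the double-integral form of $R_{YY}(\tau)$, can be checked numerically with a discrete-time sketch. Everything specific here is an assumption for illustration: the input is a constant mean plus white Gaussian noise, the impulse response `h` is a hypothetical four-tap filter, integrals become sums, and $H(0)$ becomes the sum of the taps. Expectations are estimated by averaging along one long sequence.

```python
import random

def filter_output(x, h):
    """Discrete convolution Y[n] = sum_i h[i] * X[n - i], keeping fully overlapped samples only."""
    m = len(h)
    return [sum(h[i] * x[n - i] for i in range(m)) for n in range(m - 1, len(x))]

def r_xx(m, mu, sigma2):
    """Assumed input autocorrelation: mean mu plus white noise of variance sigma2."""
    return mu * mu + (sigma2 if m == 0 else 0.0)

def r_yy_theory(k, h, mu, sigma2):
    """Double sum of R_XX[k + i - j] * h[i] * h[j], the discrete form of the double integral."""
    m = len(h)
    return sum(r_xx(k + i - j, mu, sigma2) * h[i] * h[j]
               for i in range(m) for j in range(m))

def corr_estimate(y, k):
    """Estimate E[Y[n] Y[n+k]] by averaging over one long sample sequence."""
    n = len(y) - k
    return sum(y[i] * y[i + k] for i in range(n)) / n

rng = random.Random(7)            # fixed seed so the run is repeatable
mu, sigma2 = 1.0, 1.0             # hypothetical input mean and noise variance
h = [0.5, 0.3, 0.2, 0.1]          # hypothetical impulse response
x = [mu + rng.gauss(0.0, sigma2 ** 0.5) for _ in range(50000)]
y = filter_output(x, h)

mean_y = sum(y) / len(y)
h0 = sum(h)                       # discrete counterpart of H(0)
print(f"mean of Y: estimated {mean_y:.3f}, predicted {mu * h0:.3f}")
for k in (0, 1, 2):
    print(f"R_YY[{k}]: estimated {corr_estimate(y, k):.3f}, "
          f"predicted {r_yy_theory(k, h, mu, sigma2):.3f}")
```

The estimated and predicted values should agree to within sampling error, and the prediction does not depend on the absolute index `n`, which mirrors the conclusion that the output of a linear time-invariant system driven by a WSS input is itself WSS.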