Chapter 13 The Behavioral/Social Learning Approach: Theory and Application

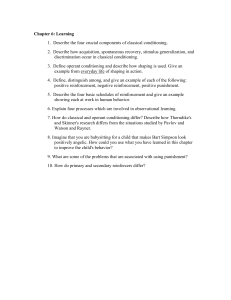

Behaviorism

John B. Watson (1878-1958)

Watson was a fighter and a builder. In college, he was unsociable and uninterested in his studies. He switched from philosophy to psychology at the University of Chicago, but preferred to study animals rather than humans. After joining the faculty at Johns Hopkins University in 1908, he developed and promoted his ideas about behaviorism. Behaviorism swept the field following the publication of his paper “Psychology as the Behaviorist Views It” in 1913. Watson’s flourishing academic career ended in disgrace in 1920, in the wake of his affair with his research associate, Rosalie Rayner.

Watson’s view of behaviorism

Watson argued that if psychology were to be a science, psychologists must stop examining mental states and study overt, observable behavior instead. Emotions, thoughts, experiences, values, reasoning, insight, and the unconscious would therefore be off-limits to behaviorists unless they could be defined in terms of observable behaviors. For example, Watson regarded thinking as a variant of verbal behavior that he called “subvocal speech.” According to Watson, personality could be described as “the end product of our habit systems.” Watson argued that he could take “a dozen healthy infants, well-formed” and condition them “to become any type of specialist” that he chose.

B.F. Skinner (1904-1990)

Skinner passed on joining his father’s law firm and studied English at Hamilton College with the intent of becoming a writer. After producing nothing of consequence in the two years following his graduation, he went to Harvard to study psychology. He became the new standard bearer for a view of behaviorism that he called radical behaviorism. In the 1940s, he published Walden Two, a novel about a utopian community based on conditioning principles.
To the end, he remained an adamant believer in the power of the environment, and conceded little to those who emphasized genetic determinants of behavior.

Skinner’s “radical behaviorism”

Skinner did not deny the existence of thoughts and inner experiences, but he argued that we often misattribute our actions to mental states when they should be attributed to our conditioning instead. His position maintained that we often don’t know the reason for many of our behaviors, although we may think we do. He therefore argued that our perception that we are free to act as we choose is, to a large extent, an illusion. Instead, we act in response to environmental contingencies. Among Skinner’s many contributions to the study of conditioning are the so-called “Skinner box” and the discovery of partial reinforcement schedules.

Basic Principles of Conditioning

Ivan Pavlov (1849-1936)

Ivan Pavlov was born in Ryazan, Russia. He began his higher education as a seminary student, but dropped out and enrolled at the University of St. Petersburg to study the natural sciences. He received his doctorate in 1879. In the 1890s, Pavlov was investigating the digestive process in dogs by externalizing a salivary gland so he could collect, measure, and analyze the saliva produced in response to food under different conditions. He noticed that the dogs tended to salivate before food was actually delivered to their mouths. He realized that this was more interesting than the chemistry of saliva, and changed the focus of his research, carrying out a long series of experiments in which he manipulated the stimuli occurring before the presentation of food. He thereby established the basic laws for the establishment and extinction of what he called “conditional reflexes”: reflex responses, like salivation, that occurred only conditional upon specific previous experiences of the animal.
Pavlov’s experimental setup

Classical (Pavlovian) conditioning (aka “signal learning”)

Classical conditioning begins with an existing stimulus-response (S-R) association. Understanding that there was an existing S-R association between the food (S) and the dog’s salivation (R), Pavlov quickly perceived that there might also be a learned or “conditioned” association between cues associated with feeding (S) and the dog’s salivation (R). Using the sound of either a bell or a tuning fork as his conditioned stimulus, Pavlov found that he could indeed “condition” the response of salivation to that sound.

Figure: Pavlov’s famous demonstration of classically conditioned salivation in a dog

Classical conditioning paradigm

– Food (unconditioned stimulus, UCS) → saliva (unconditioned response, UCR)
– Tuning fork (conditioned stimulus, CS) → saliva (conditioned response, CR)

Once the new S-R association is established, it can be used to condition yet another S-R association in a process called second-order conditioning. For example, once the dog is reliably salivating to the sound of the tuning fork, the tuning fork can be paired with a green light and soon the dog will salivate whenever the green light comes on.

Both first-order and second-order classical conditioning are subject to extinction. Another limitation of classical conditioning involves the length of the delay between the presentation of the CS and the presentation of the UCS (in general, one second is optimal).

Operant (instrumental) conditioning (aka “consequence learning”)

Operant conditioning concerns the effect certain kinds of consequences have on the frequency of behavior. A consequence that increases the frequency of a behavior is called a reinforcement. A consequence that decreases the frequency of a behavior is called a punishment.
Whether a consequence is reinforcing or punishing varies according to the person and the situation. There are two basic strategies for increasing the frequency of a behavior: positive reinforcement and negative reinforcement. There are two basic strategies for decreasing the frequency of a behavior: extinction and punishment.

Edward L. Thorndike (1874-1949)

Edward L. Thorndike graduated from Wesleyan University in 1895, and received his Ph.D. from Columbia University in 1898. He was appointed as an instructor in genetic psychology at Teachers College, Columbia University, in 1899, and served there until 1940. He devised methods to measure children’s intelligence and their ability to learn. He also conducted studies in animal psychology and the psychology of learning. His law of effect addressed the phenomenon we now call reinforcement. Thorndike’s books include Educational Psychology (1903), Mental and Social Measurements (1904), Animal Intelligence (1911), A Teacher’s Word Book (1921), Your City (1939), and Human Nature and the Social Order (1940).

The Skinner box
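
The four operant strategies named above can be written down as a small lookup, which makes the "increase versus decrease" distinction explicit. This is only an illustrative sketch: the names `Effect`, `PROCEDURES`, and `strategies_for` are hypothetical and do not come from the chapter.

```python
from enum import Enum
from typing import List


class Effect(Enum):
    """Direction in which a procedure changes the frequency of a behavior."""
    INCREASE = "increase"
    DECREASE = "decrease"


# Hypothetical lookup table: procedure -> (effect on behavior, how it is applied).
PROCEDURES = {
    "positive reinforcement": (Effect.INCREASE, "give a reward following the behavior"),
    "negative reinforcement": (Effect.INCREASE, "remove an aversive stimulus following the behavior"),
    "extinction": (Effect.DECREASE, "do not reward the behavior"),
    "punishment": (Effect.DECREASE, "give an aversive stimulus or take away a positive one"),
}


def strategies_for(goal: Effect) -> List[str]:
    """Return the procedure names (alphabetically) that move behavior toward the goal."""
    return sorted(name for name, (effect, _) in PROCEDURES.items() if effect is goal)


if __name__ == "__main__":
    for goal in Effect:
        print(goal.value, "->", ", ".join(strategies_for(goal)))
```

Note that negative reinforcement sits on the "increase" side: it strengthens a behavior by removing something unpleasant, so it is not a form of punishment.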
Operant conditioning procedures

| Procedure | Purpose | Application |
| --- | --- | --- |
| Positive reinforcement | Increase behavior | Give reward following behavior |
| Negative reinforcement | Increase behavior | Remove aversive stimulus following behavior |
| Extinction | Decrease behavior | Do not reward behavior |
| Punishment | Decrease behavior | Give aversive stimulus following behavior or take away positive stimulus |

Problems with the use of punishment

Punishment does not teach what behavior is appropriate. It only teaches what behavior is inappropriate. To be effective, punishment must be delivered immediately and consistently. Punishment can have the negative side effect of inhibiting not only the undesirable behavior but also desirable behavior that is associated with it. Punishment can result in the person who is punished coming to fear the person who administers the punishment. Punishment may also serve as a behavior that is later modeled by the person being punished. Punishment can create strong negative emotions that can interfere with learning the desired response. For all of these reasons, punishment should be used sparingly and only when other operant conditioning procedures either cannot be used or will not work.

Other important operant conditioning concepts

– Shaping: reinforcing successive approximations of the desired behavior until the complete response is well established
– Generalization: displaying the response to stimulus situations that resemble the one in which the original response was acquired
– Discrimination: selectively reinforcing the response to help ensure that it will only occur in the presence of the original stimulus and not ones that might resemble it

Social Learning Theory

Julian B. Rotter (1916- )

Julian Rotter first learned about psychology in the Avenue J Library in Brooklyn, where he spent much of his childhood and adolescence. Believing that he couldn’t earn a living as a psychologist, he majored in chemistry at Brooklyn College.
While still in college, he discovered that Alfred Adler was teaching at the Long Island School of Medicine and began associating with Adler and his colleagues. He then became a psychology major at the University of Iowa and received a Ph.D. in clinical psychology at Indiana University. After serving as a psychologist in the Army during World War II, he taught at the Ohio State University and later at the University of Connecticut.

Key concepts in Rotter’s Social Learning Theory: perceptions, expectancies, and values

Behavior potential (BP): the likelihood of a given behavior occurring in a particular situation

Expectancy: the perceived likelihood that a given behavior will result in a particular outcome
– Generalized expectancies: beliefs about how often our actions typically lead to reinforcements and punishments
– Locus of control: generalized perceptions about the degree to which one’s outcomes are determined by internal versus external factors

Reinforcement value: the degree to which we prefer one reinforcer over another

Rotter’s basic formula for predicting behavior

Behavior potential (BP) = Expectancy (E) × Reinforcement Value (RV)

If either the expectancy or the reinforcement value is zero, then the behavior potential will be zero.

Examples of calculating the behavior potentials in an insult situation

| Option | Possible outcome | Expectancy | Value | Behavior potential |
| --- | --- | --- | --- | --- |
| Ask for apology | Apology | High | High | High |
| Insult back | Laughter | Low | High | Average |
| Yell at insulter | Ugly scene | High | Low | Average |
| Leave the party | Feel foolish | Average | Low | Low |

Social-Cognitive Theory

Albert Bandura (1925- )

Albert Bandura was raised in Alberta, Canada and received his bachelor’s degree at the University of British Columbia. He completed his Ph.D. at the University of Iowa, where he was influenced by the prominent learning theorist Kenneth Spence. After a year of clinical internship in Wichita, he accepted a position at Stanford University. His career has been spent building bridges between traditional learning theory, cognitive personality theory, and clinical psychology.

Bandura’s reciprocal determinism model

Three components, each influencing the others:
– Behavior
– External factors (rewards, punishments)
– Internal factors (beliefs, thoughts, expectations)

Features of learning and cognition that are (relatively) unique to humans

– The use of symbols and other cognitive representational structures to “re-create” the outside world within our own minds
– The resulting abilities to imagine alternative courses of action and conduct mental simulations to “project” what their outcomes are likely to be
– The capacity for self-regulation through the application of self-reward and self-punishment, even in the face of strong external rewards and punishments
– The capacity for vicarious or observational learning

Observational learning

The distinction between learning and performance is important: not every behavior that is learned gets performed.

Much of our learning occurs vicariously, through our observation of other people’s actions and the consequences of those actions.

We are more likely to imitate a behavior we have seen other people display if the outcome of their behavior was a reward, rather than a punishment.

In a study by Bandura (1965), nursery school children were more likely to model the aggressive behaviors of an adult whom they observed in a film segment if they saw the model get rewarded, rather than punished, for his aggressive behavior.
Figure: Mean number of aggressive responses performed by boys and girls in three conditions: model rewarded, model punished, and no consequences (Bandura, 1965)

Diagram of Little Albert’s classical conditioning: A conditioned phobia

– Loud noise (unconditioned stimulus) → fear responses (unconditioned response)
– White rat (conditioned stimulus) → fear response (conditioned response)

Application: behavior modification

Classical conditioning applications
– Systematic desensitization (for example, snake phobia)
– Aversion therapy (for example, alcoholism)

Operant conditioning applications
– Changing behavior by changing contingencies
  • Reward
  • Punishment
  • Extinction through nonreinforcement
– Token economy
– Biofeedback

Application: self-efficacy therapy

The difference between an outcome expectancy and an efficacy expectancy.

Four sources of efficacy expectancies:
– Enactive mastery experiences
– Vicarious experiences
– Verbal persuasion (“coaching”)
– Physiological and affective states

Guided mastery as a step-by-step approach to achieve enactive mastery experiences

Problems that have been addressed through the application of self-efficacy beliefs include traumatic stress disorder, test anxiety, phobias, and bereavement.

Behavioral observation methods

Direct observation
– Direct observation in the field, in the laboratory, or in the clinic
– Analogue behavioral observation (for example, staging a dance for clients who are being treated for shyness)
– Role-play
– The importance of reliable observations

Self-monitoring
– The importance of consistent and objective self-observation
– The therapeutic value of self-observation

Observation by others (parents, teachers, nurses, etc.)

Strengths and criticisms of the behavioral / social learning approach

Strengths
– The approach has a solid foundation in empirical research with humans and with infrahuman species.
– It led to the development of useful therapeutic procedures involving behavior modification.
These intervention procedures, which are relatively quick, inexpensive, and easily administered, assess baseline levels of behavior and establish objective criteria for behavior change.
– The social-cognitive extensions of the behavioral approach have greatly expanded the range of phenomena that can be addressed.

Criticisms
– The approach gives inadequate attention to the role of heredity.
– Not all responses can be successfully conditioned (e.g., fear of food).
– Rewarding intrinsically motivated behaviors can sometimes reduce their frequency of occurrence.
– Reducing the problems of therapy patients to observable behaviors may fail to address the underlying problem in certain kinds of disorders.
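
Worked illustration: Rotter’s formula

As a worked illustration of Rotter’s basic formula from the Social Learning Theory section (BP = E × RV), the sketch below scores the four options from the insult example. The chapter gives only verbal ratings (low, average, high), so the numeric stand-ins in `RATING` are an assumption made purely for illustration, and the names `behavior_potential` and `rank_options` are hypothetical.

```python
# Assumed numeric stand-ins for the chapter's verbal ratings.
# These values are illustrative only; they are not part of Rotter's theory.
RATING = {"low": 0.1, "average": 0.5, "high": 0.9}

# Options from the insult example: (expectancy rating, reinforcement value rating).
OPTIONS = {
    "Ask for apology": ("high", "high"),
    "Insult back": ("low", "high"),
    "Yell at insulter": ("high", "low"),
    "Leave the party": ("average", "low"),
}


def behavior_potential(expectancy: float, reinforcement_value: float) -> float:
    """Rotter's basic formula: BP = E x RV."""
    return expectancy * reinforcement_value


def rank_options(options):
    """Score each option and return (name, BP) pairs, highest BP first."""
    scored = {
        name: behavior_potential(RATING[e], RATING[rv])
        for name, (e, rv) in options.items()
    }
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, bp in rank_options(OPTIONS):
        print(f"{name}: {bp:.2f}")
```

Under these assumed values, asking for an apology scores highest, insulting back and yelling tie in the middle, and leaving the party scores lowest. That order agrees with the High, Average, Average, Low labels in the chapter’s table. Because the formula is a product, a zero for either term gives a behavior potential of zero, however large the other term is.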