

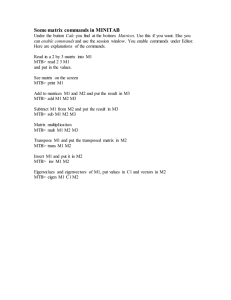

251y0511 2/14/05

ECO251 QBA1
FIRST HOUR EXAM
February 18, 2005

Name: _____KEY_____________
Student Number: _____________________
Class Hour: _____________________

Remember: neatness, or at least legibility, counts. In most non-multiple-choice questions an answer needs a calculation or short explanation to count.

Part I. (7 points) (Source: Prem S. Mann) The following numbers represent the price earnings ratio of 12 corporations.

7, 16, 18, 18, 22, 20, 20, 19, 31, 34, 38, 58

Compute the following:
a) The Median (1)
b) The Standard Deviation (3)
c) The 61st percentile (2)
d) The Coefficient of variation (1)

Solution: The numbers in order are 7, 16, 18, 18, 19, 20, 20, 22, 31, 34, 38, 58, so $n = 12$.

| | $x$ | $x^2$ |
|---|---|---|
| $x_1$ | 7 | 49 |
| $x_2$ | 16 | 256 |
| $x_3$ | 18 | 324 |
| $x_4$ | 18 | 324 |
| $x_5$ | 19 | 361 |
| $x_6$ | 20 | 400 |
| $x_7$ | 20 | 400 |
| $x_8$ | 22 | 484 |
| $x_9$ | 31 | 961 |
| $x_{10}$ | 34 | 1156 |
| $x_{11}$ | 38 | 1444 |
| $x_{12}$ | 58 | 3364 |
| Total | 301 | 9523 |

a) position $= p(n+1) = .5(13) = 6.5$. The middle numbers are the 6th and 7th numbers, which are both 20. $x_{.50} = \frac{x_6 + x_7}{2} = 20$.

b) $\bar{x} = \frac{\sum x}{n} = \frac{301}{12} = 25.0833$,

$$s^2 = \frac{\sum x^2 - n\bar{x}^2}{n-1} = \frac{9523 - 12(25.0833)^2}{12 - 1} = \frac{1972.9367}{11} = 179.3579.$$

So $s = \sqrt{179.3579} = 13.392$.

c) position $= p(n+1) = .61(13) = 7.93$. So $a = 7$ and $.b = 0.93$. $x_{1-p} = x_a + .b(x_{a+1} - x_a)$, so $x_{1-.61} = x_{.39} = x_7 + 0.93(x_8 - x_7) = 20 + 0.93(22 - 20) = 21.86$.

d) $C = \frac{s}{\bar{x}} = \frac{13.392}{25.0833} = 0.5339$ or 53.39%.

251x0511 2/16/05

How mean and variance were checked. The numbers were put into c1.

----- 2/15/2005 10:44:12 PM -----
Welcome to Minitab, press F1 for help.
MTB > let c2=c1*c1
MTB > sum c1
Sum of x
Sum of x = 301
MTB > sum c2
Sum of xsq
Sum of xsq = 9523
MTB > describe c1;
SUBC> mean;
SUBC> variance;
SUBC> stdev.

Descriptive Statistics: x

| Variable | Mean | StDev | Variance |
|---|---|---|---|
| x | 25.08 | 13.39 | 179.36 |

MTB > print c1 c2

Data Display

| Row | x | xsq |
|---|---|---|
| 1 | 7 | 49 |
| 2 | 16 | 256 |
| 3 | 18 | 324 |
| 4 | 18 | 324 |
| 5 | 19 | 361 |
| 6 | 20 | 400 |
| 7 | 20 | 400 |
| 8 | 22 | 484 |
| 9 | 31 | 961 |
| 10 | 34 | 1156 |
| 11 | 38 | 1444 |
| 12 | 58 | 3364 |

Part II. (At least 35 points, 2 points each unless marked. Parentheses give points on individual questions. Brackets give cumulative point total.)

1. I have the average time of the first 10 runners in the Boston Marathon.
a) Is this a parameter or a statistic? (Think!)
b) What symbol should you use to indicate this mean? [2]

Answer: This is a parameter, since the first 10 runners are a population: we have all of them. The symbol for a population mean is $\mu$.

2. The data in question 1 is an example of
a) Ordinal Data
b) Nominal Data
c) Discrete ratio data
d) Continuous interval data
e) *None of the above. [4]

Answer: They are none of the above. Both the times of the runners and their average are continuous ratio data.

3. Assume now that I have the times of all the runners who finish the Boston Marathon and that the first ten or 20 runners have times that are far below most of the rest, but that the more typical runners are relatively close together. Which of the following is most likely?
a) *mean < median < mode
b) mean < mode < median
c) mode < mean < median
d) mode < median < mean
e) none of the above. [6]

Answer: The description (which might be highly inaccurate) is of a data set that is skewed to the left. The mean is the least robust of the measures of central tendency, so it will be pulled furthest to the left, toward the long tail. The mode is the most robust and will hold its ground. The median is generally between them.

4. Mark the variables below as qualitative (A) or quantitative (B)
a) Celsius Temperature
b) Absolute Temperature
c) Cost of a new thermometer
d) The number of thermometers you have in your house. [8]

Answer: All of these variables are quantitative (B). The first is continuous interval data, the second and third are usually considered continuous ratio data and the last is discrete ratio data.

5. Which of the following is not a dimension-free measurement?
a) *The population variance.
b) Pearson's measure of skewness
c) $g_1$
d) The coefficient of variation.
e) The coefficient of excess.
f) All of the above are dimension free
g) None of the above are dimension free.
[10] Explanation: b through e are dimension-free ratios because they have data that are measured in the same units in their numerators and denominators.

6. Classify a deck of cards as follows: Write yes or no in each location. (4)
$A_1$ Hearts; $A_2$ Red cards; $A_3$ Black cards; $A_4$ Face cards

| | $A_1$ and $A_2$ | $A_2$ and $A_3$ | $A_2$, $A_3$, $A_4$ | $A_1$ and $A_3$ |
|---|---|---|---|---|
| Mutually Exclusive? | no | yes | no | yes |
| Collectively Exhaustive? | no | yes | yes | no |

[14]

Explanation: $A_1$ is inside $A_2$, and $A_2$ hardly includes all cards. $A_2$ and $A_3$ do not overlap and between them include all cards. $A_4$ includes parts of both $A_2$ and $A_3$, but since $A_2$ and $A_3$ already include all cards, the three classes are collectively exhaustive. Hearts cannot be black cards, but $A_1$ and $A_3$ together leave out diamonds.

7. What characteristic do the variance, standard deviation and interquartile range have in common that they do not share with the mean, median, mode or skewness? (1) [15]

Answer: They are all measures of dispersion.

Exhibit 1. The boxplot, stem-and-leaf display and 5 number summary describe the data set 'Length'.

[Figure: Boxplot of Length; vertical axis Length, marked from 597 to 602]

Exhibit 1. (ctd.)

Stem-and-Leaf Display: Length (Numbers are in the 2nd and 3rd columns; the 1st column is a form of cumulative count)

| Count | Stem | Leaves |
|---|---|---|
| 1 | 597 | 2 |
| 4 | 597 | 688 |
| 17 | 598 | 0000222224444 |
| 28 | 598 | 88888888888 |
| 41 | 599 | 0000022244444 |
| 44 | 599 | 666 |
| 49 | 600 | 00224 |
| (6) | 600 | 668888 |
| 45 | 601 | 00000000002222222222224444444444444 |
| 10 | 601 | 666666888 |
| 1 | 602 | 2 |

Five number summary: Length 597.20 598.80 600.60 601.20 602.20

Descriptive Statistics: Length Mean 600.07 StDev 1.34

8. What are the median and the interquartile range for the data set in exhibit 1? [17]

Solution: The median is the middle number of the 5 number summary, 600.60. The IQR = Q3 - Q1 = 601.20 - 598.80 = 2.40.

9. Assume that you were asked to present these data in seven intervals, what class interval would you use? (Show your work!!!)

Solution: The highest number is 602.20 and the lowest is 597.20.
$w = \frac{602.20 - 597.20}{7} = .7143$. 0.8 is probably the smallest interval one could consider.

10. Show the intervals that you would actually use.

| Class | From | to |
|---|---|---|
| A | 597.0 | 597.7 |
| B | 597.8 | 598.5 |
| C | 598.6 | 599.3 |
| D | 599.4 | 600.1 |
| E | 600.2 | 600.9 |
| F | 601.0 | 601.7 |
| G | 601.8 | 602.5 |

[21]

11. If we take the area between 602.75 and 597.39

a) According to the empirical rule, what percent of the data should be between these points?

Solution: It says: Mean 600.07 StDev 1.34. 597.39 = 600.07 - 2(1.34). 602.75 = 600.07 + 2(1.34). This is two standard deviations from the mean and should include about 95% of the data in a unimodal symmetrical distribution.

b) According to the Tchebyschev inequality, what percent of the data should be between these points?

Solution: If $k = 2$, the tails should include at most $\frac{1}{k^2} = \frac{1}{2^2} = .25$ or 25%, so the center should have at least 75%.

c) What percent of the data is actually between these points? Comment.

Solution: 597.2 is the only point that is not between 597.39 and 602.75. So 99% of the 100 points are in the interval. This is above what is predicted by the empirical rule, which is not surprising, since the distribution is asymmetrical and bimodal. It is above the 75% in the Tchebyschev inequality, which is actually exactly what the inequality says it will be.

Original Data

| Count | Stem | Leaves | Frequency |
|---|---|---|---|
| 1 | 597 | 2 | 1 |
| 4 | 597 | 688 | 3 |
| 17 | 598 | 0000222224444 | 13 |
| 28 | 598 | 88888888888 | 11 |
| 41 | 599 | 0000022244444 | 13 |
| 44 | 599 | 666 | 3 |
| 49 | 600 | 00224 | 5 |
| (6) | 600 | 668888 | 6 |
| 45 | 601 | 00000000002222222222224444444444444 | 35 |
| 10 | 601 | 666666888 | 9 |
| 1 | 602 | 2 | 1 |
| | | Total | 100 |

[27]

Exhibit 2:

| class | $f$ | $f_{rel}$ | $F$ | $F_{rel}$ |
|---|---|---|---|---|
| 0-10 | | | | .10 |
| 10-20 | | | | .30 |
| 20-30 | | | | .70 |
| 30-40 | | .20 | | |
| 40-50 | | .10 | 100 | 1.00 |
| Total | | | xxxx | xxxx |

12. Fill in the missing numbers in Exhibit 2. (4) [31]

Explanation: $n$ is the sum of the $f$ column and also must be the last number in the $F$ column. $F_{rel} = \frac{F}{n}$ and $f_{rel} = \frac{f}{n}$, so the $F$ and $f$ columns can be gotten by multiplying $F_{rel}$ and $f_{rel}$ by $n$.
Since $F_{rel}$ is the cumulative version of $f_{rel}$, the missing number in the $F_{rel}$ column can be gotten by adding .20 to .70 to get .90.

| class | $f$ | $f_{rel}$ | $F$ | $F_{rel}$ |
|---|---|---|---|---|
| 0-10 | 10 | .10 | 10 | .10 |
| 10-20 | 20 | .20 | 30 | .30 |
| 20-30 | 40 | .40 | 70 | .70 |
| 30-40 | 20 | .20 | 90 | .90 |
| 40-50 | 10 | .10 | 100 | 1.00 |
| Total | 100 | 1.00 | xxxx | xxxx |

13. Find the 61st percentile in exhibit 2. (3) [34]

Solution: position $= p(n+1) = .61(101) = 61.61$. This is above 30 and below 70 in the $F$ column, so we look for the 61st percentile in the 20-30 class. $x_{1-p} = L_p + \left[\frac{pN - F}{f_p}\right] w$, so

$$x_{1-.61} = x_{.39} = 20 + \left[\frac{0.61(100) - 30}{40}\right] 10 = 20 + 0.775(10) = 27.75.$$

14. An Economics course has students of all classes in it: 10% Freshmen, 46% Sophomores, 30% Juniors and 14% Seniors. Make this information into a Pareto chart. (4) [38]

Solution: The graph consists of 4 labeled bars with heights 46%, 30%, 14% and 10%. The line starts at 46% and rises to 76%, 90% and 100%.

Extra Credit. I forwarded this to several people a few days ago. The headline appeared in a Slovak newspaper. The subject line is my comment. From what you know about ordinal data, write a short essay explaining my comment. I'm looking for your ability to express yourself persuasively as much as for facts.

Subject: A great example of the uselessness of ordinal data
Bratislava is the 44th most expensive city in the world

Suggested comments: The main thing that we learned about ordinal data is that the differences between successive items in the listing are not usually equal. What the reader wants to know is how expensive Bratislava is relative to cities that the reader might know better, perhaps like New York and Tokyo. Actually nowhere in the article does it say how many cities were ranked, but that wouldn't really help. It is still possible that Bratislava, as the 44th city, is not very expensive relative to the, perhaps, 100 cities below it, and that Bratislava is very cheap compared with the 10 or so most expensive.
But it is also possible that there are 40 or so European cities, including Bratislava, in the first 45 that are all relatively similar in cost, even if Bratislava is the cheapest. The study probably computed some sort of index number for businesspersons' expenses in each city that would be much more useful. One of my companions in a Slovene language class in Ljubljana was a Greek lawyer, who was proficient at languages and went to Slovenia on a lark. He felt that Slovenia's capital was expensive and had to call home for money, while I found it incredibly cheap. Index values or estimated costs per day compared to Philadelphia and Athens would have done us much more good than knowing the ranks of Philadelphia, Ljubljana and Athens.

ECO251 QBA1
FIRST EXAM
February 18, 2005
TAKE HOME SECTION

Name: _________________________
Student Number: _________________________

Throughout this exam show your work! Please indicate clearly what sections of the problem you are answering and what formulas you are using. Turn this in with your in-class exam.

Part IV. Do all the Following (11 Points) Show your work!

1. The frequency distribution below represents mortgage payments in hundreds of dollars of 85 families to the Relatively Reliable Bank and Trust Company. Personalize the data below by adding the last digit of your student number to the last frequency and the second to last digit of your student number to the second to last frequency. For example, Seymour Butz's student number is 876509, so he adds 0 to the second to last frequency and 9 to the last frequency and uses (1, 4, 18, 32, 16, 10, 0, 13).

| Payment | frequency |
|---|---|
| 0 - 4 | 1 |
| 4 - 8 | 4 |
| 8 - 12 | 18 |
| 12 - 16 | 32 |
| 16 - 20 | 16 |
| 20 - 24 | 10 |
| 24 - 28 | 0 |
| 28 - 32 | 4 |

a. Calculate the Cumulative Frequency (0.5)
b. Calculate the Mean (0.5)
c. Calculate the Median (1)
d. Calculate the Mode (0.5)
e. Calculate the Variance (1.5)
f. Calculate the Standard Deviation (1)
g. Calculate the Interquartile Range (1.5)
h. Calculate a Statistic showing Skewness and interpret it (1.5)
i. Make a frequency polygon of the data showing relative or percentage frequency (Neatness Counts!) (1)
j. Extra credit: Put a (horizontal) box plot below the relative frequency chart using the same scale (1).

Solution: If we use Seymour's numbers and the computational method, we get the following. ($x$ is the class midpoint.)

| Row | Class | $f$ | $F$ | $x$ | $fx$ | $fx^2$ | $fx^3$ |
|---|---|---|---|---|---|---|---|
| 1 | 0-4 | 1 | 1 | 2 | 2 | 4 | 8 |
| 2 | 4-8 | 4 | 5 | 6 | 24 | 144 | 864 |
| 3 | 8-12 | 18 | 23 | 10 | 180 | 1800 | 18000 |
| 4 | 12-16 | 32 | 55 | 14 | 448 | 6272 | 87808 |
| 5 | 16-20 | 16 | 71 | 18 | 288 | 5184 | 93312 |
| 6 | 20-24 | 10 | 81 | 22 | 220 | 4840 | 106480 |
| 7 | 24-28 | 0 | 81 | 26 | 0 | 0 | 0 |
| 8 | 28-32 | 13 | 94 | 30 | 390 | 11700 | 351000 |
| Total | | 94 | | | 1552 | 29944 | 657472 |

If we like to work, we may have used the definitional method instead.

| Row | Class | $f$ | $x$ | $fx$ | $x - \bar{x}$ | $f(x - \bar{x})$ | $f(x - \bar{x})^2$ | $f(x - \bar{x})^3$ |
|---|---|---|---|---|---|---|---|---|
| 1 | 0-4 | 1 | 2 | 2 | -14.5106 | -14.511 | 210.56 | -3055.3 |
| 2 | 4-8 | 4 | 6 | 24 | -10.5106 | -42.043 | 441.89 | -4644.6 |
| 3 | 8-12 | 18 | 10 | 180 | -6.5106 | -117.191 | 762.99 | -4967.6 |
| 4 | 12-16 | 32 | 14 | 448 | -2.5106 | -80.340 | 201.71 | -506.4 |
| 5 | 16-20 | 16 | 18 | 288 | 1.4894 | 23.830 | 35.49 | 52.9 |
| 6 | 20-24 | 10 | 22 | 220 | 5.4894 | 54.894 | 301.33 | 1654.1 |
| 7 | 24-28 | 0 | 26 | 0 | 9.4894 | 0.000 | 0.00 | 0.0 |
| 8 | 28-32 | 13 | 30 | 390 | 13.4894 | 175.362 | 2365.52 | 31909.3 |
| Total | | 94 | | 1552 | | 0.000 | 4319.49 | 20442.4 |

From the $f$ and $fx$ columns of either display, we find $n = \sum f = 94$ and $\sum fx = 1552$, so that the mean is $\bar{x} = \frac{\sum fx}{n} = \frac{1552}{94} = 16.5106$. We also find that $\sum fx^2 = 29944$, $\sum fx^3 = 657472$, $\sum f(x - \bar{x}) = 0$ (except for a rounding error), $\sum f(x - \bar{x})^2 = 4319.49$, and $\sum f(x - \bar{x})^3 = 20442.4$.

(Note that, to be reasonable, the mean, median and quartiles must fall between 0 and 32.)

a. Calculate the Cumulative Frequency (1): (See above) The cumulative frequency is the whole $F$ column.

b. Calculate the Mean (1): We already found that $\bar{x} = \frac{\sum fx}{n} = \frac{1552}{94} = 16.5106$.

c. Calculate the Median (2): position $= p(n+1) = .5(95) = 47.5$. This is above $F = 23$ and below $F = 55$, so the interval is the 4th, 12-16, which has a frequency of 32. $x_{1-p} = L_p + \left[\frac{pN - F}{f_p}\right] w$, so

$$x_{1-.5} = x_{.5} = 12 + \left[\frac{.5(94) - 23}{32}\right] 4 = 12 + 0.75(4) = 15.$$

d. Calculate the Mode (1): The mode is the midpoint of the largest group. Since 32 is the largest frequency, the modal group is 12 to 16 and the mode is 14.

e. Calculate the Variance (3):

$$s^2 = \frac{\sum fx^2 - n\bar{x}^2}{n-1} = \frac{29944 - 94(16.5106)^2}{93} = \frac{4319.6082}{93} = 46.4474$$

or

$$s^2 = \frac{\sum f(x - \bar{x})^2}{n-1} = \frac{4319.49}{93} = 46.4461.$$

The computer got 46.4461.

f. Calculate the Standard Deviation (2): $s = \sqrt{46.4461} = 6.8151$.

g. Calculate the Interquartile Range (3):

First Quartile: position $= p(n+1) = .25(95) = 23.75$. This is above $F = 23$ and below $F = 55$, so the interval is the 4th, 12-16. $x_{1-p} = L_p + \left[\frac{pN - F}{f_p}\right] w$ gives us

$$Q_1 = x_{1-.25} = x_{.75} = 12 + \left[\frac{.25(94) - 23}{32}\right] 4 = 12 + .015625(4) = 12.0625.$$

Third Quartile: position $= p(n+1) = .75(95) = 71.25$. This is above $F = 71$ and below $F = 81$, so the interval is the 6th, 20-24.

$$x_{1-.75} = x_{.25} = 20 + \left[\frac{.75(94) - 71}{10}\right] 4 = 20 + (-0.05)(4) = 19.8.$$

The formula isn't working as well as it should (the bracketed term is negative), so let's try the 5th interval, 16-20.

$$x_{1-.75} = x_{.25} = 16 + \left[\frac{.75(94) - 55}{16}\right] 4 = 16 + 0.96875(4) = 19.875.$$

It doesn't seem to matter which you use. $IQR = Q_3 - Q_1 = 19.8 - 12.0625 = 7.7375$.

h. Calculate a Statistic showing Skewness and interpret it (3): We had $n = 94$ and $\sum fx = 1552$, so that the mean is $\bar{x} = 16.5106$. We also found that $\sum fx^2 = 29944$, $\sum fx^3 = 657472$ and $\sum f(x - \bar{x})^3 = 20442.4$.

$$k_3 = \frac{n}{(n-1)(n-2)}\left[\sum fx^3 - 3\bar{x}\sum fx^2 + 2n\bar{x}^3\right] = \frac{94}{93(92)}\left[657472 - 3(16.5106)(29944) + 2(94)(16.5106)^3\right]$$

$$= 0.0109864\left[657472 - 1483180.2 + 846148.2\right] = 0.0109864(20440) = 224.562$$

or

$$k_3 = \frac{n}{(n-1)(n-2)}\sum f(x - \bar{x})^3 = \frac{94}{93(92)}(20442.4) = 224.589.$$

The computer gets 224.589.

or $g_1 = \frac{k_3}{s^3} = \frac{224.589}{6.81514^3} = 0.70952$

or Pearson's Measure of Skewness $SK = \frac{3(\text{mean} - \text{mode})}{\text{std. deviation}} = \frac{3(16.5106 - 14)}{6.81514} = 1.1052$.

Because of the positive sign, the measures imply skewness to the right.

i. A frequency polygon is a simple line graph with frequency on the y-axis and the numbers 0 to 36 on the x-axis. Each $f$ point is plotted against the midpoint of the class, $x$. In addition 2 empty classes must be added. Your graph should show the point (34, 0) and show a line segment rising from (-2, 0) to (2, 0.0106).
Of course, showing the half of the line segment to the left of the y-axis is undesirable. The data Seymour had is:

| Row | Class | $f$ | $x$ | $fx$ | $f_{rel}$ |
|---|---|---|---|---|---|
| 0 | -4-0 | 0 | -2 | 0 | 0.0000 |
| 1 | 0-4 | 1 | 2 | 2 | .0106 |
| 2 | 4-8 | 4 | 6 | 24 | .0426 |
| 3 | 8-12 | 18 | 10 | 180 | .1915 |
| 4 | 12-16 | 32 | 14 | 448 | .3404 |
| 5 | 16-20 | 16 | 18 | 288 | .1702 |
| 6 | 20-24 | 10 | 22 | 220 | .1064 |
| 7 | 24-28 | 0 | 26 | 0 | 0.0000 |
| 8 | 28-32 | 13 | 30 | 390 | .1383 |
| 9 | 32-36 | 0 | 34 | 0 | 0.0000 |
| Total | | 94 | | 1552 | 1.0000 |

Each number in the $f_{rel}$ column is the corresponding number in the $f$ column divided by $n = 94$. The y axis could be marked from zero to .35.

j. The box plot should show the median and the quartiles and use the same x axis as the histogram.

How this was checked. Three Minitab routines are available to you in PROGRAMS on the website. Click on 'Programs for grouped data computation.' The three programs are 'grp', 'grpv', and 'grps.' These were run in succession with the following results.

----- 2/16/2005 7:54:17 PM -----
Welcome to Minitab, press F1 for help.
Results for: 251x0501-1.MTW
MTB > Save "C:\Documents and Settings\rbove\My Documents\Minitab\251x0501-1.MTW";
SUBC> Replace.
Saving file as: 'C:\Documents and Settings\rbove\My Documents\Minitab\251x0501-1.MTW'
Existing file replaced.
MTB > exec 'grp'
Executing from file: grp.MTB

Data Display

| Row | Class | f | x | fx | fxsq | fxcu |
|---|---|---|---|---|---|---|
| 1 | 0- 4 | 1 | 2 | 2 | 4 | 8 |
| 2 | 4- 8 | 4 | 6 | 24 | 144 | 864 |
| 3 | 8-12 | 18 | 10 | 180 | 1800 | 18000 |
| 4 | 12-16 | 32 | 14 | 448 | 6272 | 87808 |
| 5 | 16-20 | 16 | 18 | 288 | 5184 | 93312 |
| 6 | 20-24 | 10 | 22 | 220 | 4840 | 106480 |
| 7 | 24-28 | 0 | 26 | 0 | 0 | 0 |
| 8 | 28-32 | 13 | 30 | 390 | 11700 | 351000 |

Data Display

| Name | Value |
|---|---|
| n | 94.0000 |
| mean | 16.5106 |
| Sfx | 1552.00 |
| Sfx2 | 29944.0 |
| Sfx3 | 657472 |

Data Display

| Row | Class | x^ | fx^ | fx^sq | fx^cu |
|---|---|---|---|---|---|
| 1 | 0- 4 | -14.5106 | -14.511 | 210.56 | -3055.3 |
| 2 | 4- 8 | -10.5106 | -42.043 | 441.89 | -4644.6 |
| 3 | 8-12 | -6.5106 | -117.191 | 762.99 | -4967.6 |
| 4 | 12-16 | -2.5106 | -80.340 | 201.71 | -506.4 |
| 5 | 16-20 | 1.4894 | 23.830 | 35.49 | 52.9 |
| 6 | 20-24 | 5.4894 | 54.894 | 301.33 | 1654.1 |
| 7 | 24-28 | 9.4894 | 0.000 | 0.00 | 0.0 |
| 8 | 28-32 | 13.4894 | 175.362 | 2365.52 | 31909.3 |

Data Display

| Name | Value |
|---|---|
| Sfx^ | 0.000000000 |
| Sfx^2 | 4319.49 |
| Sfx^3 | 20442.4 |

MTB > exec 'grpv'
Executing from file: grpv.MTB
MTB > exec 'grps'
Executing from file: grps.MTB

Data Display

| Name | Value |
|---|---|
| n | 94.0000 |
| mean | 16.5106 |
| Sfx | 1552.00 |
| Sfx2 | 29944.0 |
| Sfx3 | 657472 |
| K6 | 93.0000 |
| Sfx^ | 0.000000000 |
| Sfx^2 | 4319.49 |
| Sfx^3 | 20442.4 |
| var1 | 46.4461 |
| var2 | 46.4461 |
| K12 | 92.0000 |
| K13 | 0.0109864 |
| k31 | 224.589 |
| K15 | 20442.4 |
| k32 | 224.589 |
| stdev | 6.81514 |
| g11 | 0.709520 |
| g12 | 0.709520 |

MTB > Save "C:\Documents and Settings\rbove\My Documents\Minitab\251x0501-1.MTW";
SUBC> Replace.
Saving file as: 'C:\Documents and Settings\rbove\My Documents\Minitab\251x0501-1.MTW'

2. Take your student number followed by 10, 14, 16, 18, 20, 22 as 12 values of $x$. Change any zeros to ones. For example, Seymour Butz's student number is 876509, so he uses 8, 7, 6, 5, 1, 9, 10, 14, 16, 18, 20, 22. For these twelve numbers, compute the a) Geometric Mean, b) Harmonic mean, c) Root-mean-square (1 point each). Label each clearly. If you wish, d) Compute the geometric mean using natural or base 10 logarithms. (1 point extra credit each).

a) The Geometric Mean.

$$x_g = \left(x_1 \cdot x_2 \cdot x_3 \cdots x_n\right)^{\frac{1}{n}} = \sqrt[n]{\prod x} = \sqrt[12]{(8)(7)(6)(5)(1)(9)(10)(14)(16)(18)(20)(22)} = (268240896000)^{0.0833333} = 8.96143.$$
At least, not many of you tried to get the answer by dividing 268240896000 by 12, but a number of you seem to have convinced yourselves that you could take a square root instead of a 12th root. Remember that $\sqrt[12]{268240896000} = (268240896000)^{\frac{1}{12}}$.

b) The Harmonic Mean.

$$\frac{1}{x_h} = \frac{1}{n}\sum\frac{1}{x} = \frac{1}{12}\left(\frac{1}{8} + \frac{1}{7} + \frac{1}{6} + \frac{1}{5} + \frac{1}{1} + \frac{1}{9} + \frac{1}{10} + \frac{1}{14} + \frac{1}{16} + \frac{1}{18} + \frac{1}{20} + \frac{1}{22}\right)$$

$$= \frac{1}{12}\left(0.12500 + 0.14286 + 0.16667 + 0.20000 + 1.0000 + 0.11111 + 0.10000 + 0.07143 + 0.06250 + 0.05556 + 0.05000 + 0.04545\right) = \frac{1}{12}(2.13057) = 0.177548.$$

So $x_h = \dfrac{1}{\frac{1}{n}\sum\frac{1}{x}} = \dfrac{1}{0.177548} = 5.63229$.

Of course some of you decided that

$$\frac{1}{x_h} = \frac{1}{n}\sum\frac{1}{x} = \frac{1}{12}\left(\frac{1}{8} + \frac{1}{7} + \cdots + \frac{1}{22}\right) \overset{???}{=} \frac{1}{n}\left(\frac{1}{\sum x}\right) = \frac{1}{12}\left(\frac{1}{136}\right).$$

This is, of course, an easier way to do the problem, but I warned you that it wouldn't work. It is equivalent to believing that $\frac{1}{2} + \frac{1}{2} = \frac{1}{4}$.

c) The Root-Mean-Square.

$$x_{rms}^2 = \frac{1}{n}\sum x^2 = \frac{1}{12}\left(8^2 + 7^2 + 6^2 + 5^2 + 1^2 + 9^2 + 10^2 + 14^2 + 16^2 + 18^2 + 20^2 + 22^2\right)$$

$$= \frac{1}{12}\left(64 + 49 + 36 + 25 + 1 + 81 + 100 + 196 + 256 + 324 + 400 + 484\right) = \frac{1}{12}(2016) = 168.000.$$

So $x_{rms} = \sqrt{\frac{1}{n}\sum x^2} = \sqrt{168.00} = 12.9615$.

Of course some of you decided that $x_{rms}^2 = \frac{1}{n}\sum x^2 \overset{???}{=} \frac{1}{n}\left(\sum x\right)^2 = \frac{1}{12}(136)^2$. This is, of course, an easier way to do the problem, but I warned you that it wouldn't work. It is equivalent to believing that $2^2 + 2^2 = 4^2$.

d) (i) Geometric mean using natural logarithms

$$\ln(x_g) = \frac{1}{n}\sum\ln(x) = \frac{1}{12}\left(\ln 8 + \ln 7 + \ln 6 + \ln 5 + \ln 1 + \ln 9 + \ln 10 + \ln 14 + \ln 16 + \ln 18 + \ln 20 + \ln 22\right)$$

$$= \frac{1}{12}\left(2.07944 + 1.94591 + 1.79176 + 1.60944 + 0.00000 + 2.19722 + 2.30259 + 2.63906 + 2.77259 + 2.89037 + 2.99573 + 3.09104\right) = \frac{1}{12}(26.3152) = 2.19293.$$

So $x_g = e^{2.19293} = 8.96143$.

(ii) Geometric mean using logarithms to the base 10

$$\log(x_g) = \frac{1}{n}\sum\log(x) = \frac{1}{12}\left(\log 8 + \log 7 + \log 6 + \log 5 + \log 1 + \log 9 + \log 10 + \log 14 + \log 16 + \log 18 + \log 20 + \log 22\right)$$

$$= \frac{1}{12}\left(0.90309 + 0.84510 + 0.77815 + 0.69897 + 0.00000 + 0.95424 + 1.00000 + 1.14613 + 1.20412 + 1.25527 + 1.30103 + 1.34242\right) = \frac{1}{12}(11.4285) = 0.952377.$$

So $x_g = 10^{0.952377} = 8.96143$.

Notice that the original numbers and all the means are between 1 and 22.

It's probably more efficient to handle a problem this large in columns. The arithmetic mean is also computed below.
| Row | $x$ | $\frac{1}{x}$ | $x^2$ | $\log x$ | $\ln x$ |
|---|---|---|---|---|---|
| 1 | 8 | 0.12500 | 64 | 0.90309 | 2.07944 |
| 2 | 7 | 0.14286 | 49 | 0.84510 | 1.94591 |
| 3 | 6 | 0.16667 | 36 | 0.77815 | 1.79176 |
| 4 | 5 | 0.20000 | 25 | 0.69897 | 1.60944 |
| 5 | 1 | 1.00000 | 1 | 0.00000 | 0.00000 |
| 6 | 9 | 0.11111 | 81 | 0.95424 | 2.19722 |
| 7 | 10 | 0.10000 | 100 | 1.00000 | 2.30259 |
| 8 | 14 | 0.07143 | 196 | 1.14613 | 2.63906 |
| 9 | 16 | 0.06250 | 256 | 1.20412 | 2.77259 |
| 10 | 18 | 0.05556 | 324 | 1.25527 | 2.89037 |
| 11 | 20 | 0.05000 | 400 | 1.30103 | 2.99573 |
| 12 | 22 | 0.04545 | 484 | 1.34242 | 3.09104 |
| Total | 136 | 2.13057 | 2016 | 11.4285 | 26.3152 |
| Total / n | 11.3333 | 0.177548 | 168 | 0.952377 | 2.19293 |

So, as before, $\bar{x} = 11.3333$, $x_h = \frac{1}{0.177548} = 5.63229$, $x_{rms} = \sqrt{168} = 12.9615$, $x_g = 10^{0.952377} = 8.96143$ and $x_g = e^{2.19293} = 8.96143$.
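The column computations above can also be checked with a short script. The following is a minimal sketch in Python rather than the Minitab routines used elsewhere in this key; the function and variable names are illustrative assumptions and are not part of the original solution. It computes the four means from their definitions and also evaluates the two "easier" shortcuts criticized above, to show that they land far outside the range of the data.

```python
import math

# Seymour Butz's twelve values from the worked example above.
x = [8, 7, 6, 5, 1, 9, 10, 14, 16, 18, 20, 22]
n = len(x)


def arithmetic_mean(values):
    """Sum of the values divided by their count."""
    return sum(values) / len(values)


def geometric_mean(values):
    """Antilog of the mean natural log, as in part d)(i)."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def harmonic_mean(values):
    """Reciprocal of the mean of the reciprocals."""
    return 1.0 / (sum(1.0 / v for v in values) / len(values))


def root_mean_square(values):
    """Square root of the mean of the squares."""
    return math.sqrt(sum(v * v for v in values) / len(values))


means = {
    "harmonic": harmonic_mean(x),
    "geometric": geometric_mean(x),
    "arithmetic": arithmetic_mean(x),
    "rms": root_mean_square(x),
}

# Part a) form of the geometric mean: the n-th root of the product.
geometric_by_product = math.prod(x) ** (1.0 / n)

# The two shortcuts the key warns against, written out literally.
wrong_harmonic = 1.0 / ((1.0 / n) * (1.0 / sum(x)))  # uses 1 / (sum of x)
wrong_rms = math.sqrt(sum(x) ** 2 / n)               # uses (sum of x) squared

for name, value in means.items():
    print(f"{name:11s} {value:.5f}")
print(f"shortcut harmonic {wrong_harmonic:.2f}, shortcut rms {wrong_rms:.2f}")
```

Both shortcut values come out larger than 22, the largest observation, which is a quick way to see that they cannot be means of these data.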