

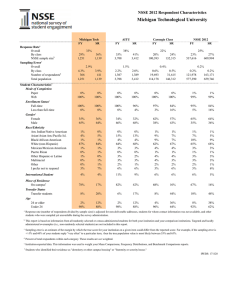

NSSE-AAUDE Data User Guide – For NSSE v2 and NSSE v1 converted to v2
University of Colorado Boulder - Last update 11/2015
Posted from http://www.colorado.edu/pba/surveys/nsse-aaude/index.htm

This user guide is for users of AAUDE shared data from NSSE 2.0 (version 2, known here as v2, introduced 2013) and data from NSSE 1.0 (2000-2012, aka v1) converted to v2 formats where items and response scales were sufficiently comparable (known throughout as v1_v2).

See the website for codebook with extensive mark-ups; variable list in Excel; data and background on response rates, populations, and samples; information on AAUDE questions; and procedures for AAUDE data sharing and using AAUDE questions.

Super quick guide
- START by running Enhancements.sas – set the choices and locations in the code as desired. Described below.
- THEN run Longitudinal.sas – set choices as desired, to select schools and years.
- YOU WILL THEN HAVE
  - Temp dataset long1, row = respondent (in year, school, class level), v2 and v1_v2
  - Permanent dataset BaseStats, row = year*school*class level*item, with marginals, v2 and v1_v2
- NOTE, in both datasets, the “key variables,” described below.
- USE the marked-up codebook and key variables to plan and execute your analyses.

Contents of this doc listed below. This is a chicken-and-egg doc, so many sections use terms etc. defined in later sections. Sorry!

See section near the end for changes 2013 to 2014. These are minimal, affecting only two demographic vars. We did not revisit the v1-to-v2 decisions we made with the 2013 data; we simply took that model and added 2014 and 2015 data.
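As a rough illustration of the last step of the quick guide, the sketch below shows the kind of first analysis one might run once long1 exists. It is only a sketch under stated assumptions: the dataset long1_demo and every value in it are invented stand-ins so that the code runs on its own, and SEacademic is used simply because it is one of the v2 item names mentioned later in this guide. With real data you would point the proc at long1 instead.

```sas
/* Toy stand-in for temp dataset long1. All values are invented.      */
/* The variable names follow the key variables described in the guide. */
data long1_demo;
   input YEAR INST :$16. IRclass COMPARE Partic2013 V2 SEacademic;
   datalines;
2011 Colorado 1 1 1 0 3
2011 Colorado 4 1 1 0 2
2013 Colorado 1 1 1 1 4
2013 Colorado 4 1 1 1 3
2013 Colorado 4 0 1 1 1
2013 Kansas 1 1 1 1 2
;
run;

/* N, mean, and SD of one item by year and class level.              */
/* "where compare" keeps only random-sample respondents;             */
/* Partic2013 keeps all years for schools that administered in 2013. */
proc means data=long1_demo n mean std maxdec=2;
   where compare and Partic2013;
   class YEAR IRclass;
   var SEacademic;
run;
```

The where clause is the part that carries over to real analyses: COMPARE restricts to comparable respondents, and the Partic flags restrict to a consistent set of schools across years.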
NOTE that the contents points to a section on What’s new in (data from administration year XXXX)

CU-Boulder PBA: Lou McClelland – Document1 – 7/12/2016

- Files to have at your fingertips
- Files – SAS datasets, SAS formats/code
- Key variables – for ID/selecting years, schools, respondents
- Variables UCB added or modified
- Notes on other variables – HIPsum.., engagement indicators, duration, sexorient
- Picking years and schools and respondents for analyses
- Missing data – Handling in SAS, patterns of response, PctValid vars, comparison to v1
- Naming conventions – Files, variables, labels
- Majors – Use the CIPs
- Institutional metadata – Populations, samples, response rates
- AAUDE Questions – Changes, comparisons, CU calcs – runaround, obstacles
- VSA
- Things CU-Boulder did in creating the v2.0 response-level dataset
- Creating the v1_v2 dataset (in 2013; we didn’t recheck all this)
- Adding a new year
- 2015 changes/updates
- 2014 changes/updates
- NOTES, CAUTIONS specific to ONE YEAR
- PBA file refs and summary of Boulder to do’s

Files to have at your fingertips
- This user guide
- The marked up codebook
- The SAS datasets and code from the passworded page.
- The ResponseRates Excel for the N_compare_Pivot tab to see patterns of administration and use of AAUDE questions over years

Files – SAS datasets, SAS formats/code

SAS datasets – all version 9. PBA ref: L:\IR\survey\NSSE\AAUDE\All\datasets, aka nssedb

METADATA -- Institutional metadata
- Row = school x year x IRclass (1, 4) value
- Includes 2005 – most current
- Population and sample counts, response rates, derivations, weights.
- Same info is in ResponseRates Excel with plots, narrative

BASESTATS means and SD's (plus N and PctMiss) by institution, class level, and item.
- Row = school x year x IRclass (1, 4) value, plus marginals, x item.
- 2001-most current, with v1 data converted to v2 formats.
- Stats are based on compare=1 only
- Sorted by item x year x inst x class level with most recent year on top.
- Items are ordered by var_order
- CAUTION: here it’s variable CLASS, with values Freshman, Senior, All, rather than IRClass (values 1, 4)
- Contains handy key variables including public, Partic2013, Partic2014, Partic2015, Partic2016, v2, LastV1, compare (always 1 in this dataset)
- And item-specific variables: variable (name), LabelShort, q_num, Var_Order (as in MatchVars_v2), and InV1_v2 so you can limit rows to items with both v1 and v2 data if desired.
- Boulder had thoughts of, but decided against, taking on getting the stats into Tableau. If anyone else does, let us know, for sharing on the AAUDE-NSSE website.
- Boulder considered but decided not to add distributions (percentages) from layout (e.g., pct1, pct2, pct3, with pct who selected response values 1, 2, 3)

Response-level data in v2 formats

NSSEALLYRS_V2: 2013 (and later) response-level data, all institutions
- Vars include base questionnaire, NSSE recodes from base (e.g., estimated hrs from x to y scale), NSSE engagement indicator scales (e.g., HO, higher-order learning), NSSE-coded majors and CIPs, NSSE weights, items from all NSSE modules, AAUDE questions, and CU-added utility variables (e.g., COMPARE)
- See Enhancements.sas below to change to shorter variable labels and attach formats.

CONVERT_V1_V2: Converted version 1 data to version 2 data.
- 2001-2012 response-level data, all institutions.
- Contains the 120 items from v1 that could be converted to a v2 item.
- Items have been renamed to the v2 name and values converted to v2 values.
- 233,000 rows – this N will not increase.
- This dataset will not be updated for future administrations aside from adding e.g. Partic2015 flags to all rows of schools participating that year

Use the provided SAS code to put these two datasets together and select from them for longitudinal analyses.

NEW Nov. 2015: NSSEALLYRS_V2_TEXT: 2014 (and later) response-level data for the open-ended response questions, all institutions.
- These items were not on what AAUDE got from NSSE in 2013.
- Row = 1 respondent, with year, IPEDS, INST, IRclass, and surveyID.
- Can be merged with NSSEALLYRS_V2 on YEAR, IPEDS, INST, IRclass and SURVEYID. (surveyID may not be unique across the other keys)
- Not all respondents are in this dataset. The dataset contains only respondents who made at least one non-blank response to one of the below items.
- Vars include
  - GENDERID_TXT (gender identity)
  - SEXORIENT_TXT (sexual orientation)
  - ADV03_TXT (academic advising module, primary source of advice)
  - CIV03_TXT_PLUS (civic engagement module, most meaningful experiences)
  - FYSFY06B_6_TXT (FY experiences & SR transitions module, considered leaving)
  - FYSSR01A_TXT (plans after graduation)
  - FYSSR08_TXT_PLUS (anything institution could have done better to prepare for career or further education)
- Note: CIV03_TXT_PLUS and FYSSR08_TXT_PLUS are a combination of variables due to very long responses. CIV03_TXT_PLUS has a length of 1,062 and FYSSR08_TXT_PLUS has a length of 3,843.
- CAUTIONS from Lou on using this dataset
  - Some of the items follow a closed item with text additions to alternatives, so you should not analyze the text items w/o reference to the closed item.
  - The dataset is structured to be efficient for merging and analysis, not storage. Most of the space in it is blank.
  - Many of the items come from modules, not base NSSE, so were presented to a minority of respondents.
  - We masked nothing. Some of the comments name individuals, many berate offices or individuals.
- We did not include the open-ended response items on major/discipline in either dataset. Use the CIP code variables for this instead.
- Going forward, all future open-ended response items will be in this dataset.

MATCH_V2 'Match' dataset used to assign formats and labels.
- Row=variable.
- For v2, with a map of the conversion of v1 to v2 variables.
- Comes from MatchVars_v2 Excel, which is the master source.
- The Match_v2 dataset is used in generating code that handles the conversion of v1 to v2.

SAS formats and code for use with v2 and v1_v2 data. All have file extension .sas but are ASCII files that can be opened in any text editor or even in Word.

Functions
- Put v2 and v1_v2 data together
- Switch from the extremely long variable labels provided by NSSE to shorter labels
- Drop variables from the NSSE modules
- Create SAS formats to label values
- Attach formats to variables
- Select populations of schools, years, and respondents to suit the analyses you’re after

Formats_v2.sas – creates formats to label values.
- See codebook markup or MatchVars Excel or match dataset for what formats go with what vars.
- Enhancements.sas attaches formats to variables in a temporary dataset.
- PBA ref: L:\IR\survey\NSSE\AAUDE\All\gencode\Formats_v2.sas

Enhancements.sas
- Run FIRST.
- Allows user to switch to shorter variable labels; also creates and attaches formats to variables in the dataset.
- Calls formats_v2.sas
- PBA ref: L:\IR\survey\NSSE\AAUDE\All\Enhancements.sas

Longitudinal.sas
- Run AFTER Enhancements.sas.
- Code to concatenate nsseallyrs_v2 with convert_v1_v2 to allow longitudinal analysis across v1 to v2, and to select comparable populations over time.
- Creates temp dataset long1, with all v2 vars (except those from modules, if dropped) plus two vars from v1: LastV1 (0/1 marking latest year a school did v1) and aau17v1 (the AAUDE “obstacles” question from v1, for reference – not similar enough to v2 AAUDE obstacles question to recode).
- New vars in v2 will be missing in v1 years.
- PBA ref: IR\survey\NSSE\AAUDE\All\Longitudinal.sas

convert_v1_v2.sas
- For reference, what we did in converting v1 to v2. Users do not need to run or understand this!!
- PBA ref: L:\IR\survey\NSSE\AAUDE\All\Convert_V1_V2.sas

NSSEinit.sas
- Initialization file for CU-Boulder users. Suggest you establish similar with your locations and directives
- PBA ref: l:\ir\survey\nsse\aaude\NSSEinit.sas

In a separate ZIP - V1 only datasets, with v1 variable names – You should not need these at all.
- Datasets: Basestats_v1, Match_v1, nsseallyrs_v1 (same as nsseallyrs through 2012 data), nsse_oneyear_v1 (with 170 variables used in only one year)
- Use ONLY if you have code for v1 that you want to use on v1 only data
- PBA ref: L:\IR\survey\NSSE\AAUDE\All\datasets\archive, aka nsardb

Key variables – for ID/selecting years, schools, respondents

KEY variables are noted with the word KEY in the label -- meaning, critically important – not all are literally keys, nor are all literal keys so labelled. Generally near top of dataset. In both convert_v1_v2 and nsseallyrs_v2 datasets except as noted.

- YEAR -- Survey Year (KEY) – Numeric, e.g. 2013 – coverage depends on dataset. IS A KEY. No missing. YYYY. 2001+.
- INST -- Institution Name (KEY) – Short (16 char) version; e.g. ‘Colorado’ – these are identical over years and 1-1 with IPEDS so you can use them as a key. No missing.
- IPEDS -- IPEDS code (KEY) – Character, e.g. ‘126614’. IS A KEY. The 6-digit IPEDS code. Text variable. XXXXXX for McGill, YYYYY for Toronto. (NSSE uses 24002000 McGill, 35015001 Toronto)
- PUBLIC -- Public Institution (0=no, 1=yes)
- TRGTINST -- Target institution (set to 1 for own institution) – 0/1 numeric; initially all zero.
- Partic2013, Partic2014, Partic2015, Partic2016 are 0/1 vars set for a school on all years administered – These make selecting comparable populations easy. E.g. “where Partic2013” gives all years for the schools that administered in 2013. Partic2016 is already set (based on 10-26-15 info; could change).
  We’ll add Partic2017 etc. in annual updates.
- AAUDEQ - 0/1 (zero, one) variable where 1 = respondent answered at least one AAUDE question. 0 = did not. 0 if school didn’t ask AAUDE q’s in a year, OR if student didn’t answer any of them. Property of an individual response/row
- COMPARE – Respondent-level. COMPARABLE over schools: Random sample of eligible first years/seniors (KEY) – 0/1 numeric. Critically important = use “where compare” for ALL comparisons across schools.
- IRclass -- Institution-reported: Class level (KEY) – IS A KEY to stats. Values 1, 4 – Numeric, for first-year, seniors. Other values occur but not within COMPARE=1. Really not “class level” – e.g., 1=first year can include soph status.
- SurveyID – NSSE-assigned ID number – important only for linking to your own student records
- V2 -- NSSE version 2 (2013 & later) - 0=no, 1=yes.
- LastV1 – 0/1, marks cases from the school’s last v1 administration. Not in the nsseallyrs_v2 dataset. Useful for comparing v2 responses with responses from only the last v1 year for a school.

Variables UCB added or modified
- YEAR, PUBLIC, TRGTINST, COMPARE, V2, Partic2013, Partic2014, Partic2015, Partic2016, LastV1
- CIP codes and descriptors of major codes (see section on Majors, below)
- SATT (V+M)
- MULTRE – calc, were multiple racial/ethnic categories checked
- PctValidQ1_19 and PctValidQ20-36 – pct of questions student made a valid response to – see section on Missing Data, below
- We changed NSSE variable “logdate” from a date-time to a date.
  A new var, logdatetime, records the date-time so you can see how many did it at 3am
- AAU13Avg, NValid, N_1, N_34 – derivations from items AAU13a-g, the new-format obstacles question
- BACH – 0/1 expecting bachelors at this school – derived from AAUDE q

Notes on other variables – HIPsum.., engagement indicators, duration, sexorient

Variables HIPsumFY and HIPsumSR are calc’d by NSSE from items judged “high impact practices.”
- The variable labels tell the varnames of the items that contribute – 3 for FY, 6 for SR
  - HIPsumFY: Number of high-impact practices for first-year students (learncom, servcourse, and research) marked 'Done or in progress'
  - HIPsumSR: Number of high-impact practices for seniors (learncom, servcourse, research, intern, abroad, and capstone) marked 'Done or in progress'
- The HIPsumXX vars range 0 to 3 or 6, with one point for each item marked “done or in progress.”
- HIPsumFY is missing for all IRclass=4 (seniors), and vice versa
- If a student answered none of the 3 or 6 items, the HIPsumxx variable is missing. It is NOT missing if the student answered even one of the 3 or 6.
  - HIPsumFY is missing for about 15% of IRclass=1
  - HIPsumSR is missing for about 13% of IRclass=4
- Note that the 3 items in the HIPsumFY scale are also in the HIPsumSR scale, with internship, study abroad, and capstone added.

The Engagement Indicators (EI) – variables HO RI LS QR CL DD SF ET QI SE – see p 17 of the codebook markup.
- The var labels also show what items are included.
- All range 0 to 60, oddly.
- The v1 benchmarks and CU scales have not been carried into v1_v2 data.
- The EI indicators have not been calc’d for v1_v2 because most rely on new or extensively-modified variables; the exception is the HO indicator (which we didn’t calc for v1_v2 either).

DURATION (in minutes) – it’s elapsed time not time on task!!! And <1 min was handled differently in v1 and v2.
- Use MEDIANS. Duration MEDIAN = 13 v2, 14 v1. But duration MEAN = 34 v2, 24 v1. More in v2 were interrupting and taking up later.
- Use mean duration with GREAT CAUTION
- Most v2 respondents are in the 8-22 minute range, centered around 12 min.

SEXORIENT - Sexual orientation – New 2013, at school option.
- 2013: Colorado and Ohio State posed this Q to their respondents; Iowa St, Kansas, Michigan State, Nebraska, Washington did not.
- Most students at CU and OSU (who got to the demog section) did respond.
- Distributions are similar – 6% “prefer not to respond” (which we have NOT set to missing), 1-2% each gay, lesbian, bisexual, and questioning/unsure, 87-92% heterosexual.
- NSSE has modified this q for 2014, adding “Another sexual orientation, please specify” to the options. See http://websurv.indiana.edu/nsse/interface/img/Changes%20to%20Demographic%20Items%20(NSSE%202014).pdf
- NSSE chose to create a new variable SEXORIENT14 with the additional response but we have decided to rename the new variable back to SEXORIENT since fewer than 0.5% of respondents chose “Another sexual orientation, please specify.”
- “Another sexual orientation, please specify” has a value of 5 in 2014. The value for the response “Questioning or unsure” changed from 5 in 2013 to 6 in 2014. This change has been accounted for in the 2013 data.
- The write-in responses for the respondents that chose “Another sexual orientation, please specify” are in the variable SEXORIENT_TXT.
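To look at SEXORIENT_TXT alongside the closed SEXORIENT response, the text dataset has to be merged onto the response-level data using the keys listed earlier (YEAR, IPEDS, INST, IRclass, SURVEYID). The sketch below shows one way this might be done. The datasets v2_demo and text_demo are invented stand-ins for NSSEALLYRS_V2 and NSSEALLYRS_V2_TEXT, and all values in them are made up so that the code runs on its own.

```sas
/* Toy stand-in for NSSEALLYRS_V2 (values invented). */
data v2_demo;
   input YEAR IPEDS :$6. INST :$16. IRclass SURVEYID sexorient;
   datalines;
2014 126614 Colorado 1 101 1
2014 126614 Colorado 1 102 5
2014 126614 Colorado 4 103 6
;
run;

/* Toy stand-in for NSSEALLYRS_V2_TEXT: only respondents with a write-in. */
data text_demo;
   infile datalines dsd;   /* comma-delimited so the text can hold blanks */
   input YEAR IPEDS :$6. INST :$16. IRclass SURVEYID SEXORIENT_TXT :$40.;
   datalines;
2014,126614,Colorado,1,102,write-in text here
;
run;

/* Both inputs must be sorted by the merge keys. */
proc sort data=v2_demo;   by YEAR IPEDS INST IRclass SURVEYID; run;
proc sort data=text_demo; by YEAR IPEDS INST IRclass SURVEYID; run;

/* Keep every response-level row; SEXORIENT_TXT stays blank */
/* for respondents who wrote nothing in.                     */
data v2_with_text;
   merge v2_demo (in=inV2) text_demo;
   by YEAR IPEDS INST IRclass SURVEYID;
   if inV2;
run;
```

Merging on the full key set rather than SURVEYID alone follows the guide's note that surveyID may not be unique by itself. The same pattern would apply to GENDERID_TXT and the module text items.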
GENDERID – gender identity – new in 2014, has replaced the variable Gender
- The new question GENDERID asks “What is your gender identity?” and the response values are “Man,” “Woman,” “Another gender identity,” and “I prefer not to respond.”
- The old question GENDER asked “What is your gender?” and the response values were “Male,” and “Female.”
- We chose to equate the old question to the new GENDERID question since fewer than 0.2% of participants chose “Another gender identity.” This also meant changing the Convert_V1_V2 dataset since the variable name was GENDER there as well.
- The write-in responses for the respondents that chose “Another gender identity” are in the variable GENDERID_TXT.

Picking years and schools and respondents for analyses
- See the sample where clauses and if statements in the code Longitudinal.sas
- Look at ResponseRates.xlsx, especially the tab N_Compare_Pivot. This shows counts of random-sample respondents by school over time with various filters available.
- Variables Public, Partic2013, Partic2014, Partic2015, Partic2016, v2, and LastV1 are your friends in selecting schools and years
- Variable COMPARE is essential for picking respondents

Missing data – Handling in SAS, patterns of response, PctValid vars, comparison to v1

Coding missing data
- We used SAS missing-data values, mostly . (default), for missing data = student did not answer the question at all, do not include in stats
- NSSE reports often show 9’s separately, not as missing, so you may need to take this into account when matching NSSE reports to your own analyses.
- On a few questions we used SAS special missing values
  - Variable disability – No=0, Yes=1, I prefer not to respond = .P, missing but differentiatable from ., did not provide any response.
    This is noted in the codebook and acknowledged in formats.
  - Variable sexorient, sexual orientation, was optional per institutional request. If the school did NOT request, we set this variable to .N, to differentiate from those who were at schools that asked (Colorado and Ohio State) but provided no response.
  - These are shown in the codebook markup

V2 2013, what the respondents actually did
- The codebook markup shows where NSSE questionnaire screens broke, and where respondents dropped off.
- We used variable PctValidq1_19 (19 is the last q before demographics) to explore respondent patterns, and categorized them with format pvalid.
- Of those respondents with 90%+ valid responses on the ratings questions (1-19), none had durations zero or one minute, 7% were 8 or fewer minutes. 12 still the center.
- THEREFORE, to get a measure of “seriousness” of the respondent, use the PctValidq1_19 measure, not duration.
- As noted in the codebook,
  - 3% of R’s stopped at or before question 1e – midway through the very first block of questions. PctValidq1_19 <= 7%.
  - Another 3% stopped after/around q 2a, the start of the second block, still on upper half of screen 1; PctValidq1_19 <= 15%.
  - An additional 8% of R's stopped with QRevaluate -- bottom of screen 1. Total ~14% never made it to screen 2. PctValidq1_19 <= 40%.
  - Another ~6% of R's stopped at/about q 13c, QIfaculty, bottom of screen 2. Total 20% never made it to screen 3; PctValidq1_19 <= 70%.
  - Those who DID go to screen 3 generally completed entire rest of the instrument including demographics and AAUDE q's.

Relationship of PctValid to duration – yes
- Duration is tricky because it’s in minutes but extends to 17 days, reflecting students who started, stopped, and came back before submitting.
- It’s elapsed time not time on task. But it clearly relates to PctValidq1_19, and is unnervingly low for those who checked out early:

Duration (minutes), by PctValidq1_19 category

Percent of questions 1 - 19                   Lower             Upper
not missing, of 86 total       N Obs   Min   Quartile  Median  Quartile     Max
--------------------------------------------------------------------------------
Stopped in q01                   415     0       0        0        0        117
Stopped in q02                   506     0       0        0        0       5412
Stopped in q06, end screen 1    1067     0       0        2        4       7529
Stopped in q13, end screen 2     820     0       4        6        9       3643
Completed all screens/parts    11093     1      11       15       22      26222

Code to produce:

    /* Duration quartiles for v2 respondents, grouped by the pvalid. categories of PctValidq1_19 */
    proc means data = long1 min q1 median q3 max maxdec=0 ;
      var duration ;
      where v2 ;
      class pctvalidq1_19 ;
      format pctvalidq1_19 pvalid. ;
    run ;

V2 has more missing data than in v1, and very clear relationship to position in questionnaire. True across all schools.
- E.g. genderid self-report (a late question) – 19% missing v2, 11% v1. This is such an easy, common question that missing indicates the respondent had stopped/finished by the time it was posed.
- The steady increase in pct missing w question number in 2013 is visible in BaseStats dataset. 20-21% on q14 and all remaining.
- This was probably true in v1 too but didn’t get to as high a level.

Are there diffs by school? Yes. And the lower the overall response rate at a school, the lower the average PctValidq1_19 at the school. Ouch.

Naming conventions – Files, variables, labels

Files
- V2 in a name means, from version 2, 2013 and later, only
- V1 in a name means, from version 1, through 2012, only, with formats, variable names, response alternatives, wording, etc. all as through 2012
- V1_V2 in a name means, data from v1 through 2012, that are comparable to v2 data and with formats, variable names as in v2. The v1_to_v2 files are designed to combine with v2 data for longitudinal analyses.

Variable names – max length 16 -- In V2 (and V1_to_V2)
- We have not changed the var names NSSE sent, so names should match those in data you received directly from NSSE.
- In some cases the NSSE names are confusing, not systematic – especially those involving majors. See section below.
- ALL CAPS = added by CU Boulder: YEAR, PUBLIC, TRGTINST, COMPARE, V2
- Some others added by CU Boulder are not in all caps: Partic2013, Partic2014, Partic2015, Partic2016, LastV1
- A few all caps are NSSE calcs, such as WEIGHT1/2
- Engagement indicator scales are the 2-letter acronym, such as HO for higher-order learning
- NSSE numeric vars, student responses to individual items – all lowercase EXCEPT items that contribute to the EI Engagement Indicators have the 2-character initial of the indicator in all caps as prefix. E.g.,
  - empstudy = q 14a, (How much does your institution emphasize the following) Spending significant amounts of time studying and on academic work, not in any EI
  - SEacademic = q 14b, Providing support to help students succeed academically, in EI SE, Supportive Environment
- NSSE modules – items have 3-character acronym prefixes in all caps, followed by item number (letter). E.g., ADV02d for the advising module
- AAUDE questions have prefix AAU

Variable labels – in V2 and v1_to_v2, same labels
- Are VERY long. These are from NSSE. One, in the diversity module, is 207 characters long. Only 10% are < 40, the old limit. 90% are < 120.
- UCB added the acronym for engagement indicators to the front of labels for items with the EI prefix. E.g. RIconnect, label = RI: Connected ideas from your courses to your prior experiences and knowledge
- Start with “--” (two hyphens) when CU-Boulder added the variable or calc or modified the var
- ALTERNATIVE SET of labels is
  - Max 83 long – using abbreviations, discarding some words . . .
  - Attaches the question number and source info to the end of the label. E.g.
    - LS: Reviewed your notes after class (09b)
    - Est'd hrs/wk: tmrelax recoded by NSSE using the midpoints (15f calc)
    - Institution reported: First-time first-year (INST)
    - Diversity: Discussed: Different political viewpoints (DIV03d)
    - Academic quality of your major program (AAU08)
  - The codebook is organized by question number, so having this in the label makes tracking exact questions easier. However, the short labels no longer have the full question wording of the NSSE labels.
  - TO USE the alt set run Enhancements.sas

Majors – Use the CIPs

Bottom line – use the CIPS

NSSE vars, v2, carried into nsseallyrs_v2
- MAJnum How many majors do you plan to complete? (Do not count minors.) – Numeric. In the 2013 data takes on values 1 and 2 and missing only; 19% of both frosh and seniors said two. Not converted from v1 – there was something but it was too iffy and too little utility to be worth pushing.
- MAJfirstcode First major or expected major - NSSE code – numeric value, really a code, assigned by NSSE based on what the student wrote in. More differentiated than the codes used in v1. Not converted from v1.
- MAJsecondcode Second major - NSSE code – ditto.
- MAJfirstcol First major category NSSE code (discipline area) from MAJFIRSTCODE – This is also a numeric var where a value means a discipline area, called variously “discipline” and “category” and “college” (as in the var name) and “broad field” - not converted from v1. The varname SHOULD have the word “code” in it but doesn’t.
- MAJsecondcol Secondary major category NSSE code (discipline area) from MAJSECONDCODE – ditto

NSSE vars, v2, NOT carried into nsseallyrs_v2 – 6 vars each 256 characters, holding the verbatim write-ins for first and second major.
- These appeared useless to us – NSSE already coded into the vars noted above. Not worth 1500 characters!
- MAJfirst, MAJFI0, MAJFI1, MAJsecond, MAJSE0, MAJSE1

Vars added by CU Boulder

No v1 conversion for these:
- MAJfirstDesc --First major or expected major - NSSE description and code
- MAJsecondDesc --Second major - NSSE description and code
  Both about 60 characters long, with the code in parens at the end – from the NSSE format. DESC here stands for description.
- DiscFirst --First major category NSSE description (discipline area) from MAJFIRSTCOL
- DiscSecond --Secondary major category NSSE description (discipline area) from MAJSECONDCOL
  We realize these names do not match NSSE’s – we used DISC for discipline (as in broad discipline, college). Same 60-char format as above.

CIP codes – all 6-character character vars. All HAVE BEEN CONVERTED from v1. So for longitudinal analyses of majors, USE THESE.
- CIPS are from the NSSE crosswalk, which NSSE did by itself based on the one AAUDE did for it years ago (Mary Black and Linda Lorenz)
  - CIP1First --CIP1 from MAJfirstcode (first/expected major)
  - CIP2First --CIP2 from MAJfirstcode (first/expected major)
  - CIP3First --CIP3 from MAJfirstcode (first/expected major)
  - CIP1Second --CIP1 from MAJsecondcode (second major)
  - CIP2Second --CIP2 from MAJsecondcode (second major)
  - CIP3Second --CIP3 from MAJsecondcode (second major)
- If you want a broad-field grouping for longitudinal (or even just v2) data, use your own usual grouping of CIP codes.
- You’re on your own figuring out how to use 3 CIPS per major

Institutional metadata – Populations, samples, response rates

The institutional metadata dataset, provided by NSSE, contains the number of freshmen and seniors, by year of administration and participating institution, in each institution's population and NSSE-selected random sample from the eligible population (COMPARE=1).
It also contains the number of responding students, the response rate (computed as the number of responses divided by the number of students in the sample), and NSSE-computed sampling error (stored in the dataset as a percentage). The ResponseRates Excel is based on the same metadata but has additional info – plots, narrative, reference to NSSE studies, more.

NSSE attempts an explanation of its sampling error formula at http://nsse.iub.edu/html/error_calculator.cfm. An example of sampling error usage that NSSE provides is: “assume that 60% of your students reply ‘very often’ to a particular item. If the sampling error is +/-5%, then the true population value is most likely between 55% and 65%.” This of course ignores non-response bias.

A row in the metadata dataset is a combination of institution, IPEDS no., year of administration, and IRClass (1 = first year, 4 = senior). This dataset can therefore be merged easily with the all-years dataset, which has a similar structure. Both the metadata dataset and the all-years response-level dataset are sorted by year, IPEDS, and IRclass.

AAUDE Questions – Changes, comparisons, CU calcs – runaround, obstacles
- See the PDF codebook markup pages 18-19 for v2 questions, var names, formats.
- See the AAUDE optional q Excel with map from v1 for questions dropped
- Q 1-12 in v2 came directly from v1 so have v1_v2 data.

CAUTION: Q 10 in v2, on runaround, changed position – in v1 it was q14 and followed items on academic advising, in v2 those items were dropped. But the response scales were identical, with “strongly agree” listed first in both v1 and v2.
Every 2013 school shows more agreement with “At this university, students have to run around from one place to another to get the information or approvals they need.” This can’t be due to chance – I looked and looked to see if/how we’d messed up the conversion. But we didn’t. SO – the increases in agreement with “have to run around” are due to some combination of
  Real – there’s really more run-around required
  Real – students now have less patience with run-around (that is, higher expectations) than students surveyed with v1
  Effect of dropping the prior advising items
  Effect of other changes in entire instrument
  Something else

Obstacles to academic progress – most of this is also noted in the codebook and Excel
Old q17 asked students to pick the ‘biggest’, pick one, and “I have no real obstacles” was a listed option. New q 13 is actually 7 separate q’s, “please rate the following as obstacles to your academic progress during the current AY” with 1=not an obstacle at all, 2=minor, 3=moderate, 4=major. Furthermore, 13g is an alternative not listed at all in v1: Personal health issues, physical or mental.
We obviously couldn’t recode old 17 into new 13a-g. But we DID carry the v1 data intact into the v1_v2 dataset, named AAU17v1, with format obstac2_. to label the responses. This should allow you to compare old to new however is appropriate for you.
AAU13a – AAU13g are packed with info but difficult to summarize. We therefore created and stored additional vars AAU13Avg, AAU13NValid, AAU13N_1 (number rated 1=not an obstacle), AAU13N_34 (number rated moderate/major obstacle).
A quick look at results, 2013, 2014, and 2015 as shown in the first couple.
  AAU13a – money, work obligations, finances – by far highest rating each year. Same as in v1 (which was “pick one”), where money, work, finances = 38%, with lack of personal motivation 15%, rest all 4-6%.
  All other alts 13b-g similar to one another each year. 13f (poor academic performance) usually the lowest rating.
  Avg of 1.6 items (of 7 max) were listed as 3 or 4 (moderate/major); avg of 3 were listed as not an obstacle.
  In v1, about 27% checked the option “no real obstacles.” In v2, only 5% rated all 7 items as 1=not an obstacle, but 26% rated none of the 7 as 3-4/mod/major. So v2 is definitely yielding more discrimination.
  V2 items 13a-g are positively correlated. So it’s NOT “if the kid has obstacle X he doesn’t have obstacle Y” – it’s just the opposite. Strongest correlations:
    a-b – money with family
    c-d – advising with getting courses
In my opinion the obstacle data were useful before and are terrific now with great potential utility.

VSA
http://nsse.iub.edu/html/vsa.cfm explains the role of NSSE in VSA (Voluntary System of Accountability) “assessment.” If a school uses NSSE to satisfy VSA, it reports “results for seniors within six specified areas that academic research has shown to be correlated with greater student learning and development: group learning, active learning, experiences with diverse groups of people and ideas, student satisfaction, institutional commitment to student learning and success, and student interactions with faculty and staff.”
The webpage links to lists of items in v1, and in v2, grouped into the six areas, showing what responses are to be grouped (e.g. for SEacademic, “Very much; Quite a bit; Some (Exclude Very Little)”).
The figures required for entry to VSA can be produced from the nsseallyrs_v2 or long1 datasets using a tabulate or freq with formats to do the groupings of response alternatives.
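The freq-with-formats approach just described might look something like the sketch below for the SEacademic example. This is an illustration only, not code shipped with the AAUDE files. It assumes SEacademic is stored as 1=Very little, 2=Some, 3=Quite a bit, 4=Very much (check the codebook), that long1 carries YEAR, INST, IRClass, and COMPARE as described under key variables, and that restricting to COMPARE=1 seniors is what you want. The format name vsa_se. is made up for this example.

```sas
/* Sketch only. ASSUMPTION: SEacademic is coded 1=Very little, 2=Some,   */
/* 3=Quite a bit, 4=Very much. Verify against the marked-up codebook.    */
/* vsa_se. is a hypothetical format name, not one of the AAUDE formats.  */
proc format;
  value vsa_se
    1     = 'Very little (excluded)'
    2 - 4 = 'Some / Quite a bit / Very much';
run;

/* One INST-by-SEacademic table per YEAR, with row percents by school.   */
/* ASSUMPTION: VSA figures are wanted for eligible random-sample seniors. */
proc freq data=long1;
  where COMPARE = 1 and IRClass = 4;
  tables YEAR * INST * SEacademic / nocol nopercent;
  format SEacademic vsa_se.;
run;
```

PROC FREQ counts by formatted value, so the three grouped responses are reported as one category without creating a recoded variable. The same pattern should carry over to the other VSA items by writing one format per distinct grouping rule.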
Sample, what VSA looks like with NSSE 2.0 data/items: http://www.collegeportraits.org/CO/CU-Boulder/student_experiences

Things CU-Boulder did in creating the v2.0 response-level dataset
Started with SAV (SPSS) file from NSSE
Made no modifications to ROWS – all rows sent are still in, even though some are (by NSSE’s categorization) “ineligible to take survey.” [Probably individuals removed from the population by a school who nevertheless got a survey invitation and responded.]
Calculated COMPARE, the very important variable carried from AAUDE v1. COMPARE = 1 marks rows that were first-year or senior, random sample, and eligible. Almost but not quite all rows are COMPARE = 1.
Added the name of the institution (variable INST).
Moved various important variables, including INST and COMPARE, to the top/front of the dataset
Located two NSSE-provided vars which are unique to respondent and match to the same-named vars in the datasets NSSE provided directly to institutions: PopID and SurveyID. These two have been moved near top of the dataset.
Developed the formats working backwards from SPSS value labels, but created just one format for each unique set of response alternatives, rather than one per item
Created a new set of labels using the 2-character variable prefixes that NSSE used to indicate which “Engagement Indicator” (aka scale, no longer known as benchmarks) an item contributes to. Both old and new labels are in the Excel.
Added, to NSSE numeric codes for majors 1 and 2
  The NSSE description of the code
  NSSE’s up-to-three CIP codes. I didn’t even look at these, just used them. These are at http://nsse.iub.edu/html/commonDataRecodes.cfm because I asked NSSE to do them. Let me or NSSE know if changes are in order.
DROPPED some vars NSSE sent us:
  Institutional student ID number!!! If you want to match to your own student records you can, using SurveyID and other datasets from NSSE. NSSE will not provide AAUDE with institutional student IDs in future.
  GROUP variables, which contained institution-provided data that varied from school to school. Most schools didn’t use these, the info was useless, and together they were over 2000 characters, so we dropped them!
  The write-in major(s). Again, lots of characters. NSSE coded the write-ins to major codes, which ARE in the dataset.
Looked for but did NOT FIND two sets of NSSE variables
  The experimental items on course evaluation. No AAUDE school received these items. No school with two modules or consortium and module got them.
  The student comments
We LEFT IN items in the NSSE modules (advising, writing, etc.). Some of them had no AAUDE users so every item is 100% missing. The PARTIC Excel shows who used what module, as does the list below. We put the module abbreviation on the labels of all these items. Longitudinal.sas also drops the 118 variables from the NSSE modules.
Or use this drop:
  drop adv0: div0: trn0: civ0: tec0: wri0: gpi0: inl0: ;

List of all modules can be found at http://nsse.iub.edu/html/modules.cfm

2013 modules and what data we have (publics)
  ADV – Academic Advising – Michigan State, Ohio State
  CIV – Civic Engagement – none
  DIV – Experience with Diverse Perspectives – none
  TEC – Learning with Technology – none
  TRN – Development of Transferable Skills – Iowa State, Nebraska
  WRI – Writing – Experiences with Writing – Colorado
  None – Kansas, Washington

2014 modules and what data we have (publics)
  ADV – Academic Advising – Boston U, Georgia Tech, McGill, SUNY-Stony Brook
  CIV – Civic Engagement – none
  DIV – Experience with Diverse Perspectives – Wisconsin
  GPI – Global Perspectives (new) – Illinois
  INL – Information Literacy (new) – none
  TEC – Learning with Technology – none
  TRN – Development of Transferable Skills – Maryland and SUNY-Buffalo
  WRI – Writing – Experiences with Writing – none

2015 modules and what data we have (publics)
  ADV – Academic Advising – Illinois, Indiana, Oregon, Syracuse
  CIV – Civic Engagement – Tulane
  DIV – Experience with Diverse Perspectives – Missouri
  FYS – First Year Experiences & Senior Transitions (new) – Indiana, Rutgers
  GPI – Global Perspectives – Case Western
  INL – Information Literacy – Arizona
  TEC – Learning with Technology – none
  TRN – Development of Transferable Skills – Case Western, Illinois
  WRI – Writing – Experiences with Writing – none

Creating the v1_v2 dataset (in 2013; we didn’t recheck all this)
Started with NSSE item by item documentation on what changed. Coupled that with our over time record of variable names etc. Built matchVars_v2.xlsx. Used that to write code to convert varnames and drop v1 vars not carried to v2.
Checked distributions of responses before and after. Iterated time and again. Etc.

How checked: take all publics from 2013, get their single most recent admin 2009 and later. Check. Drop Ohio State, diff pop in 2013. Drop Michigan State, response rate 2013 was half of prior. (Colorado *N* is < prior but that was due to sampling in 2013.) Keep COMPARE=1 obs, 12,000 v1, 10,300 v2. From Colo, Iowa State, Kansas, Nebraska, UW. About equal except Kansas smaller.

Resulting N’s used in comparison checks (frequency of INST, Institution Name (KEY), by YEAR, Survey Year (KEY)):

INST         |   2009 |   2010 |   2011 |   2013 |  Total
-------------+--------+--------+--------+--------+-------
Colorado     |   2896 |      0 |      0 |   1915 |   4811
Iowa St      |      0 |      0 |   2282 |   2615 |   4897
Kansas       |      0 |   1605 |      0 |   1231 |   2836
Nebraska     |      0 |   2557 |      0 |   2072 |   4629
Washington   |      0 |      0 |   2782 |   2495 |   5277
-------------+--------+--------+--------+--------+-------
Total        |   2896 |   4162 |   5064 |  10328 |  22450

Details: NSSE created an item by item comparison of v1 and v2, and categorized change – none, major, minor, dropped, new, and whether change involved stem, response alternatives, or both. We used this to create ItemComparisonLongitudinal Excel, and added some info based on our looks, about AAUDE questions, etc.
For all years of v1, Boulder had MatchVars http://www.colorado.edu/pba/surveys/nsse-aaude/MatchVars.xls , tracking each variable over time. We put the item-comparisons together with MatchVars info on v1 to create MatchVars_v2 Excel, with Match_v2 tab.
The Match_v2 tab is the master source for translating v1 data to v2 formats. Ultimately Match_v2 produced Match dataset, which is the source of SAS-written keep, drop, format, and rewrite statements. These are used, and more complex recodes done, in convert_v1_v2.sas

Troublesome vars in the conversion
LIVING – where living – response alts changed order, recode required, done
DDRACE
  V2: q 8a, During the current school year, about how often have you had discussions with people from the following groups? - People of a race or ethnicity other than your own.
  V1: How often… Had serious conversations with students of a different race or ethnicity than your own?
  Level up significantly at every school. E.g. UW 2.79 to 3.3. Others similar.
  Decided – DROP old. Do not equate. Change in wording and change in context (from 1 of 2 items, to first of 4-5) are probably source of level change. Would be improper to equate and infer that real activity increased significantly at every school.
DURATION
  V1 has missing and some value=0.
  V2, 2013, has no missing and more value=0. Also value 1, 2 etc. = minutes. Most with short durations answered few questions. Bet NSSE handled < 1 min differently in v1 and v2, but rest of data are OK, don't try to fix.
  2014 has missing duration, wildly long durations well over 500 minutes. No zero values but several under 0.05 minutes.
  2015 has NO missing duration, over 1,200 cases of wildly long duration over 500 minutes, no zero values but 100 cases 0.20 minutes and less.

Adding a new year
We account for any changes in variable names with both the V2 dataset and the Convert_V1_V2 dataset. Other processing is similar to 2013.
2015 changes/updates
Variables added by NSSE but not added to the all years dataset
  BROWSER_FIRSTLOGIN, BROWSER_LASTLOGIN, OS_FIRSTLOGIN, OS_LASTLOGIN. The browser/operating system used by the respondent when beginning/finishing the survey.
Question 31a on international student status. NSSE added an additional question on country of citizenship if the student answered ‘yes’ to being an international student. Two variables were added to the NSSE dataset that we also added to the all years dataset: COUNTRY and COUNTRYCOL (region, eight categories). Both are coded numerically so use the formats for text values.
Recoded variables added by NSSE but not added to the all years dataset. These variables recode items that have a ‘Not Applicable’ response from missing to a value of 9. We decided these were not needed.
  In the core survey this occurred only on question 13 (indicate the quality of your interactions with the following people at your institution): QIstudentr, QIadvisorr, QIfacultyr, QIstaffr, and QIadminr.
  It also occurred in the Academic advising module: ADV02AR – ADV02IR.
The variable POPID (unique population number) was removed by NSSE. This variable was previously in 2013 and 2014 but will no longer be provided. We’ve removed this variable from the all years dataset. The var SURVEYID is unique within year/institution (at least) and can be used to merge to your own datasets.
Modules
  New module on first-year experience and senior transitions. All of these variables begin with FYSfy or FYSsr.
  Academic advising module has a new question ADV04_15: How often have your academic advisors reached out to you about your academic progress or performance? We’ve added this to the dataset.

2014 changes/updates
Gender and sexual orientation q’s changed 2013 to 2014. See http://websurv.indiana.edu/nsse/interface/img/Changes%20to%20Demographic%20Items%20(NSSE%202014).pdf
Gender was dropped and replaced by GenderID by NSSE. Response values of ‘Another gender identity’ and ‘Prefer not to respond’ were added.
  Decided – in AAUDE/NSSE data, equate old to new since only 0.2% chose ‘Another gender identity’ in 2014. The new value has been added to the format. V1 datasets updated to reflect change: Gender replaced by GenderID.
NSSE dropped the variable sexorient (Sexual orientation) and replaced it by sexorient14. Response value of ‘Another sexual orientation’ was added.
  Decided – in AAUDE/NSSE data, equate old and new but kept variable name as sexorient since less than 0.5% chose ‘Another sexual orientation’ in 2014. The new value has been added to the format.
IRGender (institutional reported gender) name changed by NSSE to IRSex. Response values were not changed. We adopted the new variable name. V1 datasets updated to reflect the name change.
Question 16 on ‘time spent preparing for class in a typical week about how many hours are on assigned reading’ changed response values from an hours estimate to a descriptive scale (very little to almost all).
  Decided – could not equate so added variable READING (NSSE’s name; for 2014 and later only) and kept the old var TMREAD for 2013 data only.
  Caution – looking at estimated time per week on reading assignments using 2013 vs 2014 and later data will be tricky at best and probably impossible.
The variable for the duration (in minutes) it took to answer the AAU questions changed name from AAUDUR to DURATIONAAU.
Three modules were added.
Global Perspectives – Cognitive and Social.
Illinois answered these in 2014.
Experiences with Information Literacy. No AAU publics answered.
First-year Experiences and Senior Transitions were listed by NSSE but the variables were not part of the NSSE dataset (we’re assuming no institutions answered these questions).

NOTES, CAUTIONS specific to ONE YEAR
2013
  Ohio State senior population. N in the population dropped by 72% while N in frosh population dropped by only 14%.
  This was due to OSU change in definition of senior pop, restricting to those who’d declared intent to graduate by about October prior to spring.
  Ratio of Sr:Fr now different for OSU 2013 than for all others. Therefore CAUTION using OSU seniors.
  I’ve corresponded with Julie and others and recommended change in definition next time.
2014
  No known issues. Just watch out for change in name of gender variables.
2015
  No known issues

PBA file refs and summary of Boulder to do’s
L:\IR\survey\NSSE\AAUDE\All\UserGuide_v2.docx
Scavenged from
  L:\IR\survey\NSSE\AAUDE\All\NSSE_AAUDE_v2_release2.docx
  L:\IR\survey\NSSE\AAUDE\All\archive\UserGuide_v1toscavenge.docx
And copied here/memo from L:\IR\survey\NSSE\AAUDE\NSSE_AAUDE_201311.docx