Hewlett Fellows: Horace Smith,... CISGS ISP 205 Visions of the Universe


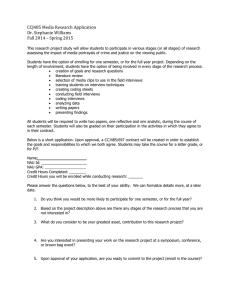

Hewlett Fellows: Horace Smith, Gene Capriotti
CISGS

HEWLETT PROJECT OUTLINE

1. Course number, name, & catalogue description

ISP 205 Visions of the Universe

Role of observation, theory, philosophy, and technology in the development of the modern conception of the universe. The Copernican Revolution. Birth and death of stars. Spaceship Earth. Cosmology and time.

2. Course goal(s) as stated in course syllabus (relation to IS goals).

We are evaluating the three sections of ISP 205 taught this fall. Below are the course goals as stated for one of these sections:

“The goal of the course is to give you an outline of what we do (and don’t) know about the universe on size scales from the planets on up, and also to teach you a bit about how science works.”

The ISP 205 course thus touches upon at least three of the integrative studies goals:

“Become more familiar with the ways of knowing in the arts and humanities, the biological and physical sciences, and the social sciences.”

“Learn more about the role of scientific method in developing a more objective understanding of the natural and social worlds.”

“Become more knowledgeable about other times, places, and cultures as well as key ideas and issues in human experience.”

3. Course goal(s) to be assessed. What learning outcomes will be studied and how?

Does student understanding of the most important ideas that we think we are teaching in ISP 205 improve over the semester, and by how much? How does the improvement in student knowledge of the “big picture” questions compare with the improvement in their knowledge of detailed factual questions about the subject matter?

Elaborate on strategy of assessment:

Our initial idea was to use pre- and post-course tests to get a broad view of student progress. The 15 questions given as the pre- and post-test to the 450 students in Professor Baldwin’s class were questions we labeled “big picture” questions.
For Professor Capriotti’s ISP 205 section, these “big picture” questions were supplemented by additional questions on more detailed points. Interviews with selected students were intended to tell us whether the multiple-choice exams were giving us an accurate picture of student mastery of the material. In particular, from the interviews we wanted to learn:

(a) Can students explain important topics in their own words?
(b) Can students answer questions that require “critical thinking”?

4. Description of assessment instrument and application (e.g. multiple choice test, pre- and post-tests, critical essay, concept map, portfolio sample, etc.)

Pre- and post-course multiple-choice tests, as indicated above. Interviews with selected students toward the end of the semester.

5. When is this to be implemented? (semester)

Beginning fall semester, 2002.

If this is being done this semester, what are preliminary results?

We are still analyzing the results, but there are some things we already know we would do differently next time. First, the 15 “big picture” questions used as a pretest were probably too easy, which limits what we can learn from them in the post-test. Second, our choice of questions could probably be improved if, before choosing them, we drew up a two-dimensional diagram indicating the subjects we want to test and the level of ability we want to test for each subject. We have already done this in creating the questions for our interviewees, and it would have been useful to do it before designing our multiple-choice tests. The interviews were informative, but our poorest students tended to skip their interviews, so the interviews probably give a somewhat biased result.

Despite these problems, we have some preliminary results.

1. For the 393 students who took the pretest in Professor Baldwin’s sections, the average score was 7.9 correct. No student correctly answered all 15 “big picture” questions on the pretest.
By contrast, on the post-test the average score was 11.9, and 38 of 454 students answered all 15 questions correctly. With further work on the design of the questions, the pre- and post-tests should be a very useful diagnostic of how well we are meeting the course goals.

2. The interviews were instructive, but because the number of students interviewed had to be limited, and because the better students showed up for their interviews more often than the poorer students, the results are not necessarily representative. Eighteen students were invited for interviews, which were held during the last week of classes and final exam week. Thirteen agreed to be interviewed, but only 10 actually showed up. Those who were interviewed did show evidence of thinking beyond the memorization of facts. Most could, for example, string together a narrative describing changing ideas of the solar system from Ptolemy’s geocentric universe through Newton’s picture of a solar system governed by natural laws. However, the interviews by themselves do not tell us how much critical thinking improved during the course.

6. Feedback: Anticipated assessment results and how these results might lead to or stimulate changes in instruction.

Our pre- and post-test strategy looks useful but needs tweaking. In particular, we need to better isolate different levels of critical thinking in our exam questions. We might also want to make the descriptions of course goals in the course syllabus more specific. The interviews were interesting, but the small number of students and the purely post-course timing make them difficult to interpret. Perhaps we should emphasize techniques that sample a larger number of students at various times during the course.

7. When completed, for what audiences will your assessment results be valuable?

They will certainly be useful for instructors teaching integrative studies courses in the Physics and Astronomy Department.
We hope the methods may be of some interest to others, perhaps especially those teaching large class sections.
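To put the reported class averages (7.9 of 15 on the pretest, 11.9 of 15 on the post-test in Professor Baldwin’s sections) in perspective, the change can be expressed as a raw gain and as a normalized gain, g = (post - pre) / (max - pre), a measure often used in physics education research. The outline itself does not compute g; the choice of metric and the function names below are our own assumptions, offered only as a sketch. Because the pretest group (393 students) and the post-test group (454 students) are not identical, this is a class-average comparison, not a matched per-student one.

```python
# Illustrative sketch: raw and normalized gain from the class averages in this outline.
# Assumption: the normalized gain g = (post - pre) / (max - pre) is our choice of
# metric; the outline does not report it. Function names are hypothetical.

NUM_QUESTIONS = 15  # number of "big picture" questions on each test


def raw_gain(pre: float, post: float) -> float:
    """Change in average score, measured in questions."""
    return post - pre


def percent(score: float, total: int = NUM_QUESTIONS) -> float:
    """Convert an average score to a percentage of the total possible."""
    return 100.0 * score / total


def normalized_gain(pre: float, post: float, total: int = NUM_QUESTIONS) -> float:
    """Fraction of the possible improvement (total - pre) that was realized."""
    if pre >= total:
        raise ValueError("pretest average is already at the maximum score")
    return (post - pre) / (total - pre)


if __name__ == "__main__":
    # Class averages only; pretest n=393 and post-test n=454 are not matched groups.
    pre_avg, post_avg = 7.9, 11.9
    print(f"pretest:   {percent(pre_avg):.1f}%")
    print(f"post-test: {percent(post_avg):.1f}%")
    print(f"raw gain:  {raw_gain(pre_avg, post_avg):.1f} questions")
    print(f"normalized gain g = {normalized_gain(pre_avg, post_avg):.2f}")
```

On these numbers the average rises from about 53% to about 79% correct, a raw gain of 4.0 questions and g of about 0.56, meaning roughly half of the room for improvement left after the pretest was realized. A per-student version of the same calculation would require matched individual pre- and post-test scores.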