Lecture 5 Least Square Fits, Correlation and Covariance, Poisson

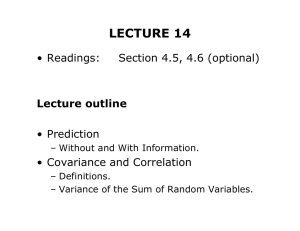

PHYSICS 2150 EXPERIMENTAL MODERN PHYSICS
Lecture 5: Least Square Fits, Correlation and Covariance, Poisson Distribution

LINE FITS
• Most common data fitting in lab
• Example: Photoelectric effect:
  • $h\nu = T_{\max} + \phi$
  • $e V_s = T_{\max}$
  • $V_s = \frac{h}{e}\,\nu - \frac{\phi}{e}$
• Plot $V_s$ versus $\nu$ and fit a line to find the slope ($h/e$) and the work function $\phi$

[Figure: stopping potential (V) versus frequency ($10^{14}$ Hz)]

WE ALREADY KNOW HOW TO OBTAIN THE BEST FIT
• Measured N data points $(x_i, y_i)$ with constant uncertainty $\sigma_y$ on all y values
• Want to find the parameters of the linear model $A x + B$ which reproduces the data best
• Probability for obtaining $(y_1, \dots, y_N)$ for given parameters A and B:

$$p(y_1, \dots, y_N \mid A, B) \propto e^{-\frac{1}{2}\left(\frac{A x_1 + B - y_1}{\sigma_y}\right)^2} \cdot \ldots \cdot e^{-\frac{1}{2}\left(\frac{A x_N + B - y_N}{\sigma_y}\right)^2}$$

LEAST SQUARES FIT
• Assume that the data $y_1, \dots, y_N$ deviate from the linear model because of statistical "measurement errors" $\delta y_1, \dots, \delta y_N$
• Assume further that the $\delta y_i$ are normally distributed, i.e.

$$p(\delta y) \propto e^{-\frac{1}{2}\left(\frac{\delta y}{\sigma_y}\right)^2}$$

• Employ the Maximum Likelihood principle, i.e. $\max\left(p(\delta y_1) \cdot \ldots \cdot p(\delta y_N)\right)$

[Figure: data points with the probability $p(\delta y_i)$ of each deviation from the line]

LEAST SQUARES FIT
Minimise the quadratic deviation from the model:

$$\chi^2 = \frac{1}{\sigma_y^2} \sum_{i=1}^{N} (A x_i + B - y_i)^2$$

[Figure: data points with the squared deviations $(\delta y_i)^2$ from the line]

LEAST SQUARES FIT TO A LINEAR MODEL

$$A = \frac{N \sum x_i y_i - \sum x_i \sum y_i}{N \sum x_i^2 - \left(\sum x_i\right)^2}, \qquad B = \frac{\sum x_i^2 \sum y_i - \sum x_i \sum x_i y_i}{N \sum x_i^2 - \left(\sum x_i\right)^2}$$

[Figure: data points with the best-fit line $y = A \cdot x + B$]

HOW RELIABLE ARE THE FITTED PARAMETERS?
• The parameters $A(y_1, \dots, y_N)$ and $B(y_1, \dots, y_N)$ are well-defined functions of $y_1, \dots, y_N$.
• Thus, we can use an error propagation calculation to find the uncertainties of A and B:

$$\sigma_A = \sigma_y \sqrt{\frac{N}{N \sum x_i^2 - \left(\sum x_i\right)^2}}, \qquad \sigma_B = \sigma_y \sqrt{\frac{\sum x_i^2}{N \sum x_i^2 - \left(\sum x_i\right)^2}}$$

LINEAR FITS: EXAMPLE
[Figure: three data sets with fitted lines, one panel per value of $\sigma_y$]
• $\sigma_y = 1.0$: $y = (0.53 \pm 0.06) \cdot x + (0.3 \pm 0.3)$
• $\sigma_y = 1.5$: $y = (0.47 \pm 0.07) \cdot x + (0.6 \pm 0.4)$
• $\sigma_y = 2.0$: $y = (0.4 \pm 0.1) \cdot x + (1.1 \pm 0.6)$
• "True model"?
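The closed-form expressions for A, B, $\sigma_A$ and $\sigma_B$ given above can be sketched in plain Python. This is a minimal illustration, not the course's own code: the function name `fit_line` and the sample data are hypothetical, and the same uncertainty `sigma_y` is assumed for every point, as on the slides.

```python
import math

def fit_line(xs, ys, sigma_y):
    """Least-squares fit of y = A*x + B with equal uncertainty sigma_y on every y.

    Returns (A, B, sigma_A, sigma_B) using the closed-form sums.
    """
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    sx = sum(xs)
    sy = sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    # Common denominator: N * sum(x^2) - (sum x)^2
    delta = n * sxx - sx * sx
    if delta == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    a = (n * sxy - sx * sy) / delta        # slope A
    b = (sxx * sy - sx * sxy) / delta      # intercept B
    sigma_a = sigma_y * math.sqrt(n / delta)
    sigma_b = sigma_y * math.sqrt(sxx / delta)
    return a, b, sigma_a, sigma_b

# Hypothetical data lying exactly on y = 2*x + 1 (illustration only)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0 * x + 1.0 for x in xs]
a, b, sigma_a, sigma_b = fit_line(xs, ys, sigma_y=1.0)
print(f"A = {a:.3f} +/- {sigma_a:.3f}, B = {b:.3f} +/- {sigma_b:.3f}")
```

Note that `sigma_a` and `sigma_b` depend only on the x values and on `sigma_y`, not on how well the points follow a line.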
• True model for the three fits above: $y = 0.5 \cdot x + 0.5$

LINEAR FITS: EXAMPLE
• "True model": $y = \sin\left(\frac{\pi}{20} x\right)$
• Linear fit: $y = (0.10 \pm 0.01) \cdot x + (0.13 \pm 0.04)$

[Figure: data generated from the sine model, shown with and without the fitted line]

• Lesson learned: small uncertainties of the model parameters do not imply correctness of the assumed model!
• Need a measure for "how well the assumed linear model matches the data"

LEAST SQUARE FITS TO ARBITRARY CURVES
• How to fit data to an arbitrary function $f(x \mid p_1, \dots, p_M)$ with M parameters $p_1, \dots, p_M$?
• Application of the Maximum Likelihood principle yields that the best fit corresponds to the values of $p_1, \dots, p_M$ for which

$$\chi^2 = \frac{1}{\sigma_y^2} \sum_{i=1}^{N} \left(f(x_i \mid p_1, \dots, p_M) - y_i\right)^2$$

is minimum!
• The equation system $\partial \chi^2 / \partial p_i = 0$ can only be solved analytically for a small number of curves, and we have to resort to numerical methods to find the best-fit parameters.

CAVEAT: LINEARISING NONLINEAR FUNCTIONS
• Suppose that we want to fit data to an exponential $y = A e^{B \cdot x}$
• Tempting to "linearise" the data by the transformation $z = \ln y$, because this gives $z = \ln y = \ln A + B \cdot x$

[Figure: y versus x, and ln(y) versus x, each with error bars]

• Linearised "errors" are no longer normally distributed!

COVARIANCE
• Take a measurement $q(x, y)$ which depends on pairs of data $(x_1, y_1), \dots, (x_N, y_N)$
• Error propagation says that

$$\sigma_q = \sqrt{\left(\frac{\partial q}{\partial x}\right)^2 \sigma_x^2 + \left(\frac{\partial q}{\partial y}\right)^2 \sigma_y^2}$$

• Here we assumed that x and y follow a Gaussian distribution and that x and y are independent, so that the cross term vanishes ($\sigma_{xy} = 0$)!
• But what if not?

COVARIANCE
• If the covariance

$$\sigma_{xy} = \frac{1}{N} \sum_{i=1}^{N} (x_i - \bar{x})(y_i - \bar{y}) \neq 0$$

we say that the errors in x and y are correlated.
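The covariance definition above can be sketched in plain Python. The helper names and the data are hypothetical and chosen only for illustration. The example takes $q = x + y$, for which both partial derivatives are 1, and compares the variance predicted under the independence assumption with the actual sample variance of q; the two differ by exactly $2\sigma_{xy}$.

```python
def covariance(xs, ys):
    """Covariance with the 1/N normalisation: (1/N) * sum((x_i - xbar) * (y_i - ybar))."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    return sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / n

def variance(xs):
    """Variance with the 1/N normalisation, i.e. the covariance of xs with itself."""
    return covariance(xs, xs)

# Hypothetical, deliberately anti-correlated data (illustration only)
xs = [1.0, 2.0, 3.0, 4.0]
ys = [4.0, 3.5, 1.5, 1.0]
qs = [x + y for x, y in zip(xs, ys)]   # q = x + y

cov_xy = covariance(xs, ys)
var_independent = variance(xs) + variance(ys)   # what independence would predict
var_actual = variance(qs)                       # what the data actually show

print(f"covariance            = {cov_xy}")
print(f"variance if independent = {var_independent}")
print(f"actual variance of q    = {var_actual}")
```

Because the data are anti-correlated, the independence formula overestimates the spread of q in this example.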
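• The covariance $\sigma_{xy}$ fulfils the Schwarz inequality $|\sigma_{xy}| \le \sigma_x \sigma_y$
• This can be used to show that

$$\sigma_q \le \left|\frac{\partial q}{\partial x}\right| \sigma_x + \left|\frac{\partial q}{\partial y}\right| \sigma_y$$

COVARIANCE AND ERROR PROPAGATION

$$\sigma_q^2 = \left(\frac{\partial q}{\partial x}\right)^2 \sigma_x^2 + \left(\frac{\partial q}{\partial y}\right)^2 \sigma_y^2 + 2\,\frac{\partial q}{\partial x}\frac{\partial q}{\partial y}\,\sigma_{xy}$$

• In case of maximum positive correlation ($\sigma_{xy} = \sigma_x \sigma_y$):

$$\sigma_q = \left|\frac{\partial q}{\partial x}\,\sigma_x + \frac{\partial q}{\partial y}\,\sigma_y\right|$$

• In case of no correlation ($\sigma_{xy} = 0$):

$$\sigma_q = \sqrt{\left(\frac{\partial q}{\partial x}\right)^2 \sigma_x^2 + \left(\frac{\partial q}{\partial y}\right)^2 \sigma_y^2}$$

EXAMPLE OF CORRELATED VARIABLES: THE $K^0$ MASS
• Incoming $K^+$ hits a stationary neutron, producing a proton and a $K^0$
• The $K^0$ travels a short distance and decays to $\pi^+\pi^-$
• Need to measure the angle $\theta_T$ between the pions
• Also need to measure $\theta_\pm$, the angles between the pions and the $K^0$
• Measuring $\theta_T$ is easy, but the $K^0$ direction can be hard to determine if its path is short

[Figure: $K^+$ track, proton track, and the $K^0$ path with its decay into $\pi^+$ and $\pi^-$, with $\theta_T$ marked]

EXAMPLE OF CORRELATED VARIABLES: THE $K^0$ MASS
• If the direction of the red line (the $K^0$ path in the figure) is wrong, then $\theta_+$ and $\theta_-$ will be wrong by equal amounts but in opposite directions.
• Our measurements of $\theta_+$ and $\theta_-$ will therefore be correlated (actually anti-correlated)

EXAMPLE OF CORRELATED VARIABLES: THE $K^0$ MASS

  θ+     θ−
  53°    27°
  56°    24°
  56°    24°
  34°    46°
  58°    22°
  46°    34°
  48°    32°
  56°    24°
  38°    42°
  61°    20°

• Covariance: $\sigma_{+-} = -70.6$
• Standard deviations: $\sigma_+ \sigma_- = 78.5$
• Ratio: $\sigma_{+-} / (\sigma_+ \sigma_-) = -0.9$

COVARIANCE VS. CORRELATION
• The covariance $\sigma_{xy}$ can be normalised to create a correlation coefficient

$$r = \frac{\sigma_{xy}}{\sigma_x \sigma_y} \quad \text{or} \quad r = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum (x_i - \bar{x})^2 \sum (y_i - \bar{y})^2}}$$

• Let's assume that all points $(x_i, y_i)$ lie exactly on $y = A x + B$:

$$r = \frac{A \sum (x_i - \bar{x})^2}{\sqrt{A^2 \sum (x_i - \bar{x})^2 \sum (x_i - \bar{x})^2}} = \frac{A}{|A|} = \pm 1$$

• Thus, r is the wanted indicator for how well the data are matched by a straight line!

CORRELATION COEFFICIENT
[Figure: correlation coefficients of sample data sets]

COVARIANCE VS. CORRELATION
• r can vary between −1 and 1:
  • r = 0 indicates that the variables are uncorrelated
  • |r| = 1 means the variables are completely correlated.
• Sign of r indicates the direction of covariance:
  • r > 0 means that large x indicates y is likely large
  • r < 0 means that large x indicates y is likely small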
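The properties of r listed above can be checked with a short sketch in plain Python. The function name `correlation` and all data below are hypothetical. Points lying exactly on a line give $r = \pm 1$ according to the sign of the slope, and a symmetric parabola gives $r = 0$ even though y depends completely on x, which shows that r measures linear association only.

```python
import math

def correlation(xs, ys):
    """Linear correlation coefficient r = sum(dx*dy) / sqrt(sum(dx^2) * sum(dy^2))."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least two points")
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    syy = sum((y - ybar) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when x or y has zero spread")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data sets (illustration only)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
r_up = correlation(xs, [2.0 * x + 1.0 for x in xs])      # exact line, positive slope
r_down = correlation(xs, [-3.0 * x + 4.0 for x in xs])   # exact line, negative slope
r_none = correlation([-1.0, 0.0, 1.0], [1.0, 0.0, 1.0])  # y = x^2, no linear trend

print(f"r_up = {r_up:.3f}, r_down = {r_down:.3f}, r_none = {r_none:.3f}")
```

The 1/N factors of $\sigma_{xy}$, $\sigma_x$ and $\sigma_y$ cancel in r, which is why the function works directly with the unnormalised sums.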