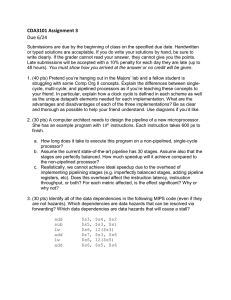

CS 1104

Help Session IV

Five Issues in Pipelining

Colin Tan,

ctank@comp.nus.edu.sg

S15-04-15

Issue 1

Pipeline Registers

• Instruction execution typically involves several

stages

– Fetch: Instructions are read from memory

– Decode: The instruction is interpreted, data is read from

registers.

– Execute: The instruction is actually executed on the

data

– Memory: Any data memory accesses are performed

(e.g. read, write data memory)

– Writeback: Results are written to destination registers.

Issue 1

Pipeline Registers

• Stages are built using combinational devices
– As soon as an input to a combinational device changes, its outputs change.
– These outputs form the inputs to other stages, causing their outputs to change in turn.
– Hence an instruction in the fetch stage affects every other stage in the CPU.
– It is not possible to have multiple instructions at different stages, since each stage immediately affects the stages further down.
• The effect is that the CPU can only execute 1 instruction at a time.

Issue 1

Pipeline Registers

• To support pipelining, stages must be de-coupled

from each other.

– Add pipeline registers!

– Pipeline registers allow each stage to hold a different

instruction, as they prevent one stage from affecting

another stage until the appropriate time.

• Hence we can now execute 5 different instructions

at 5 stages of the pipeline

– 1 different instruction at each stage.
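The decoupling described above can be sketched with a toy model. This is only an illustration, assuming each pipeline register holds nothing more than the name of the instruction in its stage; `clock_tick` and `run` are hypothetical names, not part of the course material.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]

def clock_tick(pipeline, next_instruction):
    """Advance every instruction by one stage on a clock edge.

    `pipeline` has one entry per stage (None means the stage is empty).
    All registers latch together, so each stage only receives what the
    previous stage held before the tick.
    """
    return [next_instruction] + pipeline[:-1]

def run(program, cycles):
    """Return the contents of the five stages after each clock cycle."""
    pipeline = [None] * len(STAGES)
    history = []
    for cycle in range(cycles):
        fetched = program[cycle] if cycle < len(program) else None
        pipeline = clock_tick(pipeline, fetched)
        history.append(list(pipeline))
    return history
```

After five ticks on a five-instruction program, every stage holds a different instruction, which is exactly the situation the pipeline registers make possible.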

Issue 2

Speedup

• Figure below shows a non-pipelined CPU

executing 2 instructions

Cycle:    1   2   3   4   5   6   7   8   9   10
Instr 1:  IF  ID  EX  MEM WB
Instr 2:                      IF  ID  EX  MEM WB

• Assuming each stage takes 1 cycle, each instruction will take 5 cycles (i.e. CPI=5)

Issue 2

Speedup

Cycle:    1   2   3   4   5   6   7
Instr 1:  IF  ID  EX  MEM WB
Instr 2:      IF  ID  EX  MEM WB
Instr 3:          IF  ID  EX  MEM WB

• For the pipelined case:
– The first instruction takes 5 cycles.
– Each subsequent instruction completes 1 cycle after the one before it.
• The other 4 cycles of each subsequent instruction overlap with the execution of earlier instructions (see diagram).

Issue 2

Speedup

• For N+1 instructions, the 1st instruction takes 5 cycles and each of the subsequent N instructions takes 1 cycle.
• The total number of cycles is 5+N.
• Hence the average number of cycles per instruction is:
CPI = (5+N)/(N+1)
• As N tends to infinity, CPI tends to 1.
• Compared with the non-pipelined case (CPI=5), a 5-stage pipeline gives a 5:1 speedup!
• Ideally, an M stage pipeline will give an M times speedup.
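The arithmetic above can be checked numerically. The following is a minimal sketch assuming an ideal pipeline with no stalls and 1 cycle per stage; the function names are illustrative only, and `num_instructions` corresponds to N+1 in the formula.

```python
def pipelined_cycles(num_instructions, stages=5):
    """Total cycles: the first instruction fills the pipeline, the rest take 1 each."""
    if num_instructions == 0:
        return 0
    return stages + (num_instructions - 1)

def average_cpi(num_instructions, stages=5):
    """Average cycles per instruction, i.e. (5+N)/(N+1) for N+1 instructions."""
    return pipelined_cycles(num_instructions, stages) / num_instructions

def speedup(num_instructions, stages=5):
    """Speedup over a non-pipelined CPU that needs `stages` cycles per instruction."""
    unpipelined = stages * num_instructions
    return unpipelined / pipelined_cycles(num_instructions, stages)
```

For short programs the speedup is well below 5, because the cost of filling the pipeline is spread over only a few instructions; it approaches 5 only as the instruction count grows.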

Issue 3

Hazards

• A hazard is a situation where computation is

prevented from proceeding correctly.

– Hazards can cause performance problems.

– Even worse, hazards can cause computation to

be incorrect.

– Hence hazards must be resolved

Issue 3A

Structural Hazards

• Structural Hazards

• Generally a shared resource (e.g. Memory, disk drive) can be

used by only 1 processor or pipeline stage at a time.

• If >1 processor or pipeline stage needs to use the resource, we

have a structural hazard.

• E.g. if 2 processors want to use a bus, we have a structural

hazard that must be resolved by arbitration (See I/O notes).

• Structural hazards are reduced in the MIPS by having separate

instruction and data memory

– If they were in the same memory, it is possible that the IF stage

may try to fetch instructions at the same time as the MEM stage

accesses data => Structural Hazard results.

Issue 3B

Data Hazards

• Data Hazards

• Caused by having >1 instruction executing concurrently.

• Consider the following instructions:

add $1, $2, $3

add $4, $1, $1

Cycle:          1   2   3   4   5   6
add $1,$2,$3:   IF  ID  EX  MEM WB
add $4,$1,$1:       IF  ID  EX  MEM WB

• The first add instruction will update the contents of $1 in the WB stage.
• But the second instruction will read $1 two cycles earlier, in its ID stage! It will read the old value of $1, and the add instruction will give the wrong result.
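A check for this kind of read-after-write dependence can be sketched as follows. This is an illustration under stated assumptions: instructions are modelled as hypothetical `(op, dest, sources)` tuples, and `max_distance=2` assumes that only the next two instructions can read a stale value (which in turn assumes the register file can be written and read in the same cycle).

```python
def raw_hazards(program, max_distance=2):
    """Return (producer_index, consumer_index, register) for each hazard.

    A hazard is reported when an instruction reads a register that one of
    the previous `max_distance` instructions writes.
    """
    hazards = []
    for i, (_, dest, _) in enumerate(program):
        for j in range(i + 1, min(i + 1 + max_distance, len(program))):
            _, later_dest, sources = program[j]
            if dest in sources:
                hazards.append((i, j, dest))
            if later_dest == dest:
                # A newer write replaces this one; later readers depend on it instead.
                break
    return hazards

# The example from the slide: the second add reads $1 before it is written back.
example = [
    ("add", "$1", ("$2", "$3")),
    ("add", "$4", ("$1", "$1")),
]
```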

Issue 3B

Data Hazards

• The result that will be written to $1 in the WB stage first becomes available at the ALU in the EX stage.
• The result of the first add becomes available from the ALU just as the second instruction needs it.
• If we can send this result directly to the second instruction, the hazard is resolved. This is called “Forwarding”.

Cycle:          1   2   3   4   5   6
add $1,$2,$3:   IF  ID  EX  MEM WB
add $4,$1,$1:       IF  ID  EX  MEM WB

(The ALU result of the first add at the end of cycle 3 is forwarded to the EX stage of the second add in cycle 4.)

Issue 3B

Data Hazards

• Sometimes forwarding doesn’t quite work.

Consider:

lw $1, 4($3)

add $4, $1, $1

• For the lw instruction the EX stage actually

computes the result of 4 + $3 (i.e. the 4($3)

portion of the instruction).

• This forms the fetch address. No use forwarding

this to the add instruction!

Issue 3B

Data Hazards

• The result of the ALU stage (i.e. the fetch address of the lw instruction) gets sent to the memory system in the MEM stage, and the contents of that address become available at the end of the MEM stage.
• But the add instruction needs it at the start of the EX stage. We have this situation:

Issue 3B

Data Hazards

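Cycle:          1   2   3   4   5   6
lw $1,4($3):    IF  ID  EX  MEM WB
add $4,$1,$1:       IF  ID  EX  MEM WB

(The loaded value is available only at the end of the lw's MEM stage in cycle 4, but the add needs it at the start of its EX stage, also in cycle 4.)

• The forwarding would have to go backwards in time: at the point when the add instruction needs the data, the data is not yet available.
• Since the data is not yet available, it cannot be forwarded!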

Issue 3B

Data Hazards

• This form of hazard is called a “load-use” hazard,

and is the only type of data hazard that cannot be

resolved by forwarding.

• The way to resolve this is to stall the add instruction by 1 cycle.

Cycle:          1       2       3       4       5       6       7
lw $1,4($3):    IF      ID      EX      MEM     WB
add $4,$1,$1:           IF      ID      (stall) EX      MEM     WB

Issue 3B

Data Hazards

• Stalling is a bad idea as the processor spends 1

cycle not doing anything.

• Instead, we can find an independent instruction to place between the lw and the add.

Cycle:          1   2   3   4   5   6   7
lw $1,4($3):    IF  ID  EX  MEM WB
sub $5,$7,$7:       IF  ID  EX  MEM WB
add $4,$1,$1:           IF  ID  EX  MEM WB
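The cost of load-use hazards can be counted with a short sketch. It assumes the same hypothetical `(op, dest, sources)` tuple encoding of instructions, and it assumes that every other data hazard is handled by forwarding, so only an lw immediately followed by a user of its result costs a stall.

```python
def load_use_stalls(program):
    """Count 1-cycle stalls caused by an lw immediately followed by a user."""
    stalls = 0
    for (op, dest, _), (_, _, next_sources) in zip(program, program[1:]):
        if op == "lw" and dest in next_sources:
            stalls += 1
    return stalls

# lw followed directly by the add that uses $1: one stall.
stalled = [
    ("lw", "$1", ("$3",)),
    ("add", "$4", ("$1", "$1")),
]

# The independent sub placed in between removes the stall.
scheduled = [
    ("lw", "$1", ("$3",)),
    ("sub", "$5", ("$7", "$7")),
    ("add", "$4", ("$1", "$1")),
]
```

Both versions compute the same values; the second simply does useful work in the cycle that would otherwise be a bubble.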

Issue 3B

Data Hazards

• Forwarding can be done either from the ALU

stage or from the MEM stage.

Cycle:          1   2   3   4   5   6   7
add $1,$2,$3:   IF  ID  EX  MEM WB
add $4,$1,$5:       IF  ID  EX  MEM WB
add $6,$1,$7:           IF  ID  EX  MEM WB

Issue 3B

Data Hazards

• Hazards between adjacent instructions are resolved from the ALU stage (between add $1,$2,$3 and add $4,$1,$5)
• Hazards between instructions separated by another instruction (between add $1,$2,$3 and add $6,$1,$7) are resolved from the MEM stage.
– This is because if we try to resolve this from the ALU stage, the add $6,$1,$7 instruction will actually get the result of the previous (add $4,$1,$5) instruction instead, since by then that is the instruction whose result is at the ALU output.
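The choice between the two forwarding paths can be sketched as a small selection function. This is a simplified illustration, not the actual MIPS forwarding unit: the names `EX/MEM` and `MEM/WB` are the conventional names for the pipeline registers after the ALU and MEM stages (not used in these slides), and the sketch ignores details such as register $0 and instructions that do not write a register.

```python
def forward_source(src_reg, ex_mem_dest, mem_wb_dest):
    """Decide where the instruction in EX should take one operand from.

    ex_mem_dest: destination of the instruction one ahead (just left the ALU).
    mem_wb_dest: destination of the instruction two ahead (just left MEM).
    The most recent producer wins, so the ALU-stage result is checked first.
    """
    if src_reg == ex_mem_dest:
        return "EX/MEM"
    if src_reg == mem_wb_dest:
        return "MEM/WB"
    return "REGFILE"
```

When add $6,$1,$7 is in its EX stage, the ALU-stage path holds the result of add $4,$1,$5 (destination $4), so $1 has to come from the MEM-stage path.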

Issue 3C

Control Hazards

• In an unoptimized pipeline branch decisions are

made after the EX stage

– The EX stage is where the comparisons are made.

– Hence the branching decision becomes available at the

end of the EX stage.

• Pipeline can be optimized by moving the

comparisons to the ID stage

– Comparisons are always made between register

contents (e.g. beq $1, $3, R).

– The register contents are available by the end of the ID

stage.

Issue 3C

Control Hazards

• However still have a problem. E.g.

L1: add $3, $1, $1

beq $3, $4, L1

sub $4, $3, $3

• Depending on whether the beq is taken or not,

the next instruction to be fetched is either add

(if the branch is taken) or sub (if the branch is

not taken)

Issue 3C

Control Hazard

Cycle:                           1   2   3   4   5
beq $3,$4,L1:                    IF  ID  EX  MEM WB
sub $4,$3,$3 or add $3,$1,$1:        IF

• We don’t know which instruction to fetch until the

end of the ID stage.

• But the IF stage must still fetch something!

– Fetch add or fetch sub?

Issue 3C

Control Hazards - Solutions

• Always assume that the branch is taken:

– The fetch stage will fetch the add instruction.

– By the time this fetch is complete, the outcome of the

branching is known.

– If the branch is taken, the add instruction proceeds to

completion.

– If the branch is not taken, the add instruction is flushed

from the pipeline, and the sub instruction is fetched and

executed. This causes a 1 cycle stall.

Issue 3C

Control Hazards - Solutions

• Always assume that the branch is not taken

– The IF stage will fetch the sub instruction first.

– By this time, the outcome of the branch will be known.

– If the branch is not taken, then the sub instruction

executes to completion.

– If the branch is taken, then the sub instruction is

flushed, and the add instruction is fetched and

executed. Results in 1 cycle stall.
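The two fixed strategies can be compared with a small sketch. It assumes the branch decision is made in the ID stage, so a wrong guess costs exactly 1 flushed instruction; `outcomes` is a hypothetical list recording whether each executed branch was actually taken.

```python
def branch_stall_cycles(outcomes, predict_taken, penalty=1):
    """Total stall cycles when every branch is guessed the same way.

    outcomes: list of booleans, True if that branch was actually taken.
    predict_taken: True for "always assume taken", False for "assume not taken".
    """
    return sum(penalty for taken in outcomes if taken != predict_taken)

# A loop-closing branch such as "beq $3, $4, L1" that is taken three times
# and then falls through once.
loop_outcomes = [True, True, True, False]
```

For a branch that is taken most of the time, assuming taken wastes fewer cycles; for a branch that rarely fires, assuming not taken is the better guess.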

Issue 3C

Control Hazards - Solutions

• Delayed Branching

L1: add $3, $1, $1

beq $3, $4, L1

sub $4, $3, $3

ori $5, $2, $3

• Just like the assume not taken strategy, the IF

stage fetches the sub instruction. However, the sub

instruction executes to completion regardless of

the outcome of the branch.

Issue 3C

Control Hazards-Solutions

– The outcome of the branching will now be known.

– If the branch is taken, the add instruction is fetched and

executed.

– Otherwise the ori instruction is fetched and executed.

• This strategy is called “delayed branching”

because the effect of the branch is not felt until

after the sub instruction (i.e. 1 instruction later).

• The sub instruction here is called the delay slot or

delay instruction, and it will always be executed

regardless of the outcome of the branching.

Issue 4

Instruction Scheduling

• We must prevent pipeline stalls in order to gain

maximum pipeline performance.

• For example, for the load-use hazard, we must

find an instruction to place between the lw

instruction and the instruction that uses the lw

results to prevent stalling.

• Also may need to place instructions into delay

slots.

• This re-arrangement of instructions is called

Instruction Scheduling.

Issue 4

Instruction Scheduling

• Basic criteria:

– An instruction I3 to be placed between two instructions

I1 and I2 must be independent of both I1 and I2.

lw $1, 0($3)

add $2, $1, $4

sub $4, $3, $2

In this example, the sub instruction modifies $4, which is used by the add instruction, and it also reads $2, which the add instruction produces. Hence it cannot be moved between the lw and the add.

Issue 4

Instruction Scheduling

– An instruction I3 that is moved must not violate

dependency orderings. For example:

add $1, $2, $3

sub $5, $1, $7

lw $4, 0($6)

ori $9, $4, $4

• The add instruction cannot be moved between the lw and ori

instructions as it would violate the dependency ordering with

the sub instruction.

– I.e. the sub depends on the add, and moving the add after the sub

would cause incorrect computation of the sub.
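Both criteria can be expressed as a register-dependence check. The following is a minimal sketch, assuming instructions are encoded as hypothetical `(op, dest, sources)` tuples and considering register dependences only (memory dependences between loads and stores are ignored).

```python
def depends(a, b):
    """True if swapping the order of a and b could change the result."""
    _, a_dest, a_sources = a
    _, b_dest, b_sources = b
    return (a_dest in b_sources      # b reads what a writes
            or b_dest in a_sources   # a reads what b writes
            or a_dest == b_dest)     # both write the same register

def can_move(program, src, dst):
    """Can program[src] be moved to index dst without crossing a dependence?"""
    if dst > src:
        crossed = range(src + 1, dst + 1)
    else:
        crossed = range(dst, src)
    return not any(depends(program[src], program[k]) for k in crossed)

program = [
    ("add", "$1", ("$2", "$3")),
    ("sub", "$5", ("$1", "$7")),
    ("lw",  "$4", ("$6",)),
    ("ori", "$9", ("$4", "$4")),
]
```

Under this check the add cannot be moved past the sub, but the sub is independent of the lw and could be moved between the lw and the ori.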

Issue 4

Instruction Scheduling

• The nop instruction stands for “no operation”.

• When the CPU reads and executes the nop

instruction, absolutely nothing happens, except

that 1 cycle is wasted executing this instruction.

• The nop instruction can be used in delay slots or

simply to waste time.

Issue 4

Instruction Scheduling

• Delay branch example: Suppose we have the following

program, and suppose that branches are not delayed:

add $3, $4, $5

add $2, $3, $7

beq $2, $3, L1

sub $7, $2, $4

L1:

• In this program, the 2 add instructions will be executed

regardless of the outcome of the branch, but the sub

instruction will not be executed if the branch is taken.

Issue 4

Instruction Scheduling

• Suppose a hardware designer modifies the beq instruction

to become a delayed branch.

– The sub instruction is now in the delay slot, and will be executed

regardless of the outcome of the branch!

– This is obviously not what the programmer originally intended.

– To correct this, we must place an instruction that will be executed

regardless of the outcome of the branch into the delay slot.

– Either of the 2 add instructions qualifies, since they will be executed no matter how the branch turns out.
– BUT moving either of them into the delay slot will cause incorrect computation
• They will violate the dependency orderings between the first and second add, and between the second add and the beq.

Issue 4

Instruction Scheduling

• But if we don’t move anything into the delay slot,

the program will not execute correctly.

• Solution: Place a nop instruction there.

add $3, $4, $5
add $2, $3, $7
beq $2, $3, L1
nop                # delay slot here
sub $7, $2, $4
L1:

• The sub instruction now moves out of the delay

slot.

Issue 4

Instruction Scheduling

• Loop Unrolling

– Idea: If we loop 16 times to perform an operation, we

can duplicate that operation 4 times and loop only 4

times.

E.g.

for(int i=0; i<16; i++)

my[i] = my[i]+3;

Issue 4

Instruction Scheduling

• This loop can be unrolled to:

for(int i=0; i<16; i=i+4)

{

my[i] = my[i]+3;

my[i+1]=my[i+1]+3;

my[i+2]=my[i+2]+3;

my[i+3]=my[i+3]+3;

}

Issue 4

Instruction Scheduling

• But why even bother doing this?

– Loop unrolling actually generates more instructions!

• Previously the loop body had only 1 statement doing my[i]=my[i]+3
• Now it has 4 such statements!

– Increasing the number of instructions gives us more

flexibility in scheduling the code.

• This allows us to eliminate pipeline stalls etc. more effectively.
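The equivalence of the two loops can be checked with a short sketch. It is written in Python rather than the C-style code of the slides, and it assumes the array length is a multiple of 4, as in the 16-element example; the function names are illustrative.

```python
def add_three_rolled(values):
    """Original loop: one update and one loop test per element."""
    result = list(values)
    for i in range(len(result)):
        result[i] = result[i] + 3
    return result

def add_three_unrolled(values):
    """Unrolled by 4: four independent updates per loop test."""
    result = list(values)
    if len(result) % 4 != 0:
        raise ValueError("length must be a multiple of 4 for this sketch")
    for i in range(0, len(result), 4):
        result[i] = result[i] + 3
        result[i + 1] = result[i + 1] + 3
        result[i + 2] = result[i + 2] + 3
        result[i + 3] = result[i + 3] + 3
    return result
```

The four updates in the unrolled body touch different elements, so they are independent of each other; that independence is what gives a scheduler room to fill load-use slots and delay slots.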

Issue 5

Improving Performance

• Super-pipelines

– Each pipeline stage is further broken down.

– Effectively each pipeline stage is in turn pipe-lined.

– E.g. if each stage can be further broken down into 2

sub-stages:

IF1 IF2 ID1 ID2 EX1 EX2 M1 M2 WB1 WB2

Issue 5

Improving Performance

• This allows us to accommodate more

instructions in the pipeline.

– Now we can have 10 instructions in the pipeline simultaneously.
– So ideally we can have a 10x speedup over the non-pipelined architecture instead of just a 5x speedup!

Issue 5

Improving Performance

• Unfortunately when things go wrong, penalties are

higher:

– E.g. a branch misprediction resulting in an IF-stage

flush will now cause 2 bubbles (in the IF1 and IF2

stages) instead of just 1.

– In a load-use stall, 2 bubbles must be inserted.

Issue 5

Improving Performance

• Superscalar Architectures

– Have 2 or more pipelines working at the same time!

• In a single pipeline, normally the best CPI possible is 1.

• With 2 pipelines, the average CPI goes down to 1/2!

– This will allow us to execute twice as many instructions

per second.

– Real situation not that ideal

• Instructions going to 2 different pipelines simultaneously must

be independent of each other

– There is NO forwarding between pipelines!

Issue 5

Improving Performance

• If CPU is unable to find independent instructions,

then 1 pipeline will remain idle.

• Example of superscalar machine:

– Pentium processor - 2 integer pipelines, 1 floating-point

pipeline, giving a total of 3 pipelines!
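The effect of the independence requirement can be sketched with a simple in-order, 2-pipeline issue model. This is an assumption-laden illustration, not a description of the Pentium: instructions use a hypothetical `(op, dest, sources)` encoding, two neighbouring instructions issue together only if the second neither reads nor overwrites the first one's destination, and pipeline fill time is ignored.

```python
def independent(first, second):
    """True if `second` can issue in the same cycle as `first`."""
    _, first_dest, _ = first
    _, second_dest, second_sources = second
    return first_dest not in second_sources and first_dest != second_dest

def dual_issue_cycles(program):
    """Number of issue cycles needed with 2 pipelines and in-order issue."""
    cycles = 0
    i = 0
    while i < len(program):
        if i + 1 < len(program) and independent(program[i], program[i + 1]):
            i += 2  # both pipelines are used this cycle
        else:
            i += 1  # the second pipeline stays idle
        cycles += 1
    return cycles

parallel = [
    ("add", "$1", ("$2", "$3")),
    ("add", "$4", ("$5", "$6")),
    ("sub", "$7", ("$8", "$9")),
    ("ori", "$10", ("$11", "$12")),
]

chain = [
    ("add", "$1", ("$2", "$3")),
    ("add", "$4", ("$1", "$1")),
    ("sub", "$5", ("$4", "$4")),
    ("ori", "$6", ("$5", "$5")),
]
```

With fully independent instructions the issue rate reaches 2 per cycle (CPI of 1/2), while a chain of dependent instructions gains nothing from the second pipeline.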

Summary

• Issue 1: Pipeline registers

– These decouple stages so that different instructions can

exist in each stage.

– Allows us to execute multiple instructions in a single

pipeline.

• Issue 2: Speed-up

– Ideally, an N stage pipeline should give you an N times

speedup.

Summary

• Issue 3: Hazards

– Structural hazards: Solved by having multiple

resources. E.g. Separate memory for instruction and

data.

– Data hazards: Solved by forwarding or stalling.

– Control hazards: Solved by branch prediction or

delayed branching.

Summary

• Issue 4: Instruction Scheduling

– Instructions may need to be re-arranged to avoid pipeline stalls (e.g. load-use hazards) or to ensure correct execution (e.g. filling in delay slots).

– Loop unrolling gives extra instructions.

• This in turn gives better scheduling opportunities.

Summary

• Issue 5: Improving Performance

– Super-pipelines: Increases pipeline depth.

• A 5-stage pipeline becomes a 10-stage pipeline, ideally improving performance by 10x instead of 5x.

• Also causes larger penalties.

– Super-scalar pipelines: Have multiple pipelines

• Can increase instruction execution rate.

• Average CPI can actually fall below 1!