Informetrics, Webometrics and Web Use metrics Huimin Lu 10/21/2004


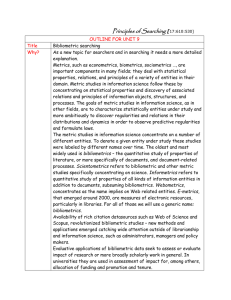

Outline
- History
- Article 1: Bibliometrics & WWW
- Article 2: Bibliometrics of the WWW
- Article 3: Authoritative Sources
- Article 4: ParaSite
- Conclusion

History
- The term "bibliometrics" was introduced by Pritchard in 1969.
- Pritchard's explanation: "the application of mathematical and statistical methods to books and other media of communication".

A1: Bibliometrics and the World Wide Web, by Don Turnbull
- Bibliometrics
- Bibliometric laws
- Applying bibliometrics to the WWW
- Metrics design

A1: Bibliometrics
- Classic citation analysis
- Refined classic bibliometrics
  - Standard formula for impact: number of journal citations / number of citable articles published
  - Basic formula for the immediacy index: number of citations received during the year by articles published that year / total number of citable articles published that year
- Bibliographic coupling
  - Measures the number of references two papers have in common, as a test of similarity
- Cocitation analysis
  - Measures the relations between cited documents, based on how often they are cited together
- Common errors
  - Multiple authors lost, self-citation, similar author names, human error, etc.

A1: Bibliometric Laws
- Bradford's Law of Scattering
  - Clustering method: terms $R a^n$ ($n = 0, 1, 2, \ldots$; $a < 1$), whose sum is $\sum_{n=0}^{\infty} R a^n = R/(1-a)$
- Lotka's Law
  - Inverse square: the number of authors with $n$ publications is about $1/n^2$ of the number with one
- Zipf's Law
  - Familiar words occur with high frequency: the $n$th most frequent word occurs about $k/n$ times

A1: Applying Bibliometrics to the Web
- Web surveys
  - Georgia Tech Graphics, Visualization, and Usability (GVU) Web Surveys
- Web servers
  - Add programming logic
  - Inaccurate data gathered: standard procedures are skipped, state information between usage hits is missed, and server hits themselves do not represent true usage

A1: Metrics Design
- Configure the Web server to gather comprehensive metrics
- Manage log files
  - Enhance reliability: make regular backups, store log-file analysis results along with the logs, start new logs in a timely way, and post results and log information for comparison
  - Log analysis tools: Analog, WWWStat, GetStats, Perl scripts
  - Standardization: Extended Log File Format by the WWW Consortium Standards Committee
- Downie's analyses: user-based, request-based, and byte-based
- Optimal Web content setup and external bibliometric gathering

A2: Bibliometrics of the World Wide Web: An Exploratory Analysis of the Intellectual Structure of Cyberspace, by Ray R. Larson
- Analysis of 30 GB of Web pages collected by the Inktomi "Web Crawler"
- Cocitation analysis using the DEC AltaVista search engine

A2: Growth and Usage of the Web

A2: Cocitation Analysis of the Web
- Attempt: map the intellectual structure of the Web
- Question: can cocitation techniques be applied to charting the contents of cyberspace?

A2: Methods
- Selection of a core set of items for study
- Retrieval of cocitation frequency information
- Compilation of the raw cocitation frequency matrix
- Correlation analysis to convert the raw frequencies into correlation coefficients
- Multivariate analysis of the correlation matrix
- Interpretation and validation of the resulting "map"

A2: Results

A3: Authoritative Sources in a Hyperlinked Environment, by Jon M. Kleinberg
- A new method for automatically extracting certain types of information about a hypermedia environment from its link structure

A3: Goal
- Types of search query and their problems
  - Specific queries: scarcity problem (very few pages contain the required information)
  - Broad-topic queries: abundance problem (far too many relevant pages for a user to digest)
  - Similar-page queries
- Synthesize the unreliable information carried by the presence of individual links to provide a set of authoritative pages relevant to an initial query

A3: Common Approaches
- Only S
  - Define S to be the top k pages returned by AltaVista for the query
  - Rank pages according to their in-degree
- S -> T
  - Define the same root set S
  - Grow S into a larger base set T
  - Rank pages by their in-degree

A3: Their Approach
- Extract small core sets, communities of hubs and authorities, from T
- Authoritative pages
  - A novel type of quality measure for documents in hypermedia, obtained by algorithmic means
  - Large in-degree and considerable overlap in the sets of pages that point to them
- Hub pages
  - Have links to multiple relevant authoritative pages

A3: Algorithm and Output
- Method: iteratively propagates "authority weight" and "hub weight" across the links of the Web graph, converging simultaneously to steady states for both types of weight
- Output: a pair of sets (X, Y), where X is a small set of authorities and Y is a small set of hubs; the paper calls this a community of hubs and authorities
- Claim: the algorithm identifies authoritative pages as members of dense bipartite communities in the link graph of the WWW

A4: ParaSite: Mining Structural Information on the Web, by Ellen Spertus
- Varieties of link information on the Web
- How the Web differs from conventional hypertext
- How links can be exploited to build useful applications

A4: Classical Hypertext vs. Web

| Classical hypertext | Web |
| --- | --- |
| Links don't cross site or even document boundaries | Links can cross site and document boundaries |
| Documents are limited to a single topic | Multiple topics are permitted in one Web page |
| A manual answers each question in exactly one place or in none | An answer can appear any number of times on the Web |
| Documents hardly change | Pages change constantly |

A4: Mining Links
- Naïve Link Geometry
  - A useful technique for finding pages on a given set of topics
- Hypertext Links (see the example at the end)
  - Categorized as upward, downward, crosswise, and outward
- Directory Links
  - Directory structure relates pages even in the absence of hypertext links
- Structure within a Page
  - A page can be considered a tree of nodes, each with attached text and links embedded in the text
- Other
  - Domain names, relationships between concepts represented by words and phrases, and paths traveled through Web sites by visitors

A4: Applications
- Finding Moved Pages
  - Exploiting hyperlinks
  - Exploiting directory links
- Finding Related Pages
  - Collaborative filtering
  - Given similar pages the user has already found, ParaSite finds the page (A) with the most links to those pages and returns the other pages referenced by A
- A Person Finder

Conclusion
- Information on the World Wide Web is increasing exponentially, and the Internet's architecture is becoming more complicated.
- Applying bibliometrics to the Web will help us control and manage Web information wisely.

Example of Hypertext Link
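The A1 slide defines bibliographic coupling (how many references two papers share) and cocitation analysis (how often two documents are cited together), and the A2 method starts from raw cocitation frequencies. A minimal Python sketch of both counts follows, assuming a hypothetical in-memory map from each citing paper to the set of documents it references; the data and the function names `coupling_strength` and `cocitation_counts` are illustrative, not taken from Turnbull or Larson.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: each citing paper -> set of documents it references.
references = {
    "P1": {"A", "B", "C"},
    "P2": {"B", "C", "D"},
    "P3": {"A", "C"},
}


def coupling_strength(refs, x, y):
    """Bibliographic coupling: number of references papers x and y share."""
    return len(refs[x] & refs[y])


def cocitation_counts(refs):
    """Cocitation: for each pair of cited documents, count the papers citing both."""
    counts = Counter()
    for cited in refs.values():
        # sorted() gives each unordered pair one canonical key, e.g. ("A", "C").
        for pair in combinations(sorted(cited), 2):
            counts[pair] += 1
    return counts


print(coupling_strength(references, "P1", "P2"))  # 2 (both cite B and C)
print(cocitation_counts(references)[("A", "C")])  # 2 (cited together by P1 and P3)
```

Counts like these would fill the raw cocitation frequency matrix named on the A2 Methods slide; in Larson's study the frequencies came from AltaVista searches rather than from a local citation map like this one.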

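The A3 slides describe Kleinberg's method as iteratively propagating authority and hub weights across links until both settle. A minimal Python sketch of that iteration, assuming the base set T is already available as a hypothetical adjacency dict and using a fixed number of rounds instead of a convergence test:

```python
import math


def normalize(weights):
    """Scale weights so their squares sum to 1."""
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {p: (w / norm if norm else 0.0) for p, w in weights.items()}


def hits(graph, iterations=50):
    """graph: page -> list of pages it links to (the base set T).

    Returns (authority, hub) weight dicts after a fixed number of rounds.
    """
    pages = set(graph)
    for targets in graph.values():
        pages.update(targets)
    auth = {p: 1.0 for p in pages}
    hub = {p: 1.0 for p in pages}
    for _ in range(iterations):
        # Authority update: sum the hub weights of the pages that link in.
        new_auth = {p: 0.0 for p in pages}
        for src, targets in graph.items():
            for dst in targets:
                new_auth[dst] += hub[src]
        auth = normalize(new_auth)
        # Hub update: sum the freshly updated authority weights of pages linked to.
        hub = normalize({p: sum(auth[d] for d in graph.get(p, ())) for p in pages})
    return auth, hub


# Hypothetical base set: three hubs pointing at candidate authorities.
web = {"h1": ["a1", "a2"], "h2": ["a1", "a2"], "h3": ["a1", "x"]}
auth, hub = hits(web)
print(sorted(auth, key=auth.get, reverse=True)[:2])  # ['a1', 'a2']
```

Unlike the in-degree ranking of the "Only S" and "S -> T" baselines, a page's authority weight here is the sum of the hub weights of the pages pointing to it, so a link from a good hub counts for more than a link from an arbitrary page.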
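The A4 Applications slide describes finding related pages: take the page (A) with the most links to the pages the user already has, then return A's other references. A minimal Python sketch of that heuristic, assuming a hypothetical dict of out-links; it is not Spertus's ParaSite code, excluding the user's own pages as candidates for A is an assumption, and ties go to whichever candidate is seen first.

```python
def related_pages(links, seeds):
    """Sketch of the related-page heuristic from the A4 slide.

    links: page -> set of pages it links to (hypothetical crawl data).
    seeds: pages the user already considers relevant.
    Returns (A, suggestions): the page with the most links to the seeds,
    and the other pages A references, sorted for stable output.
    """
    seeds = set(seeds)
    best, best_overlap = None, 0
    for page, targets in links.items():
        if page in seeds:
            continue  # assumption: a seed page cannot serve as A
        overlap = len(targets & seeds)
        if overlap > best_overlap:  # strict '>' keeps the first page on ties
            best, best_overlap = page, overlap
    if best is None:
        return None, []
    return best, sorted(links[best] - seeds)


links = {
    "listA": {"s1", "s2", "r1", "r2"},
    "listB": {"s1", "r3"},
    "s1": {"s2"},
}
print(related_pages(links, ["s1", "s2"]))  # ('listA', ['r1', 'r2'])
```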