Why standards?

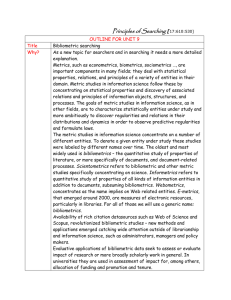

The debate on uses and consequences of STI indicators
OST Workshop, 12 May 2014, Paris
Paul Wouters, Sarah de Rijcke and Ludo Waltman, Centre for Science and Technology Studies (CWTS)

Debate so far
“The variety of available bibliographic databases and the tempestuous development of both hardware and software have essentially contributed to the great rise of bibliometric research in the 80-s. In the last decade an increasing number of bibliometric studies was concerned with the evaluation of scientific research at the macro- and meso-level. Different database versions and a variety of applied methods and techniques have resulted sometimes in considerable deviations between the values of science indicators produced by different institutes”
Glänzel (1996): THE NEED FOR STANDARDS IN BIBLIOMETRIC RESEARCH AND TECHNOLOGY, Scientometrics, 35 (2), p. 167.

Applications citation analysis
Citation analysis has four main applications:
• Qualitative and quantitative evaluation of scientists, publications, and scientific institutions
• Reconstruction and modeling of the historical development of science and technology
• Information search and retrieval
• Knowledge organization

Why standards?
• Bibliometrics increasingly used in research assessment
• Data & indicators for assessment widely available
• Some methods blackboxed in database-linked services (TR as well as Elsevier)
• No consensus in bibliometric community
• Bewildering number of indicators and data options
• Bibliometric knowledge base not easily accessible
• Ethical and political responsibility distributed and cannot be ignored

State of affairs on standards
• “End users” demand clarity about the best way to assess quality and impact from bibliometric experts
• Most bibliometric research focused on creating more diversity rather than on pruning the tree of data and indicator options
• Bibliometric community has not yet a professional channel to organize its professional, ethical, and political responsibility
• We lack a code of conduct with respect to research evaluation although assessments may have strong implications for human careers and lives

Three types of standards
• Data standards
• Indicator standards
• Standards for good evaluation practices

Data standards
• Data standards:
  – Choice of data sources
  – Selection of documents from those sources
  – Data cleaning, citation matching and linking issues
  – Definition and delineation of fields and peer groups for comparison

Indicator standards
• Indicator standards:
  – Choice of level of aggregation (nations; programs; institutes; groups; principal investigators; individual researchers)
  – Choice of dimension to measure (size, activity, impact, collaboration, quality, feasibility, specialization)
  – Transparency of construction
  – Visibility of uncertainty and of sources of error
  – Specific technical issues:
    • Size: fractionalization; weighting
    • Impact: averages vs percentiles; field normalization; citation window

Standards for GEP
• Standards for good evaluation practices:
  – When is it appropriate to use bibliometric data and methods?
  – The balance between bibliometrics, peer review, expert review, and other assessment methodologies (e.g. Delphi)
  – The transparency of the assessment method (from beginning to end)
  – The accountability of the assessors
  – The way attempts to manipulate bibliometric measures are handled (citation cartels; journal self-citations)
  – Clarity about the responsibilities of researchers, assessors, university managers, database providers, etc.

Preconference STI_ENID workshop, 2 September 2014
• Advantages and disadvantages of different types of bibliometric indicators. Which types of indicators are to be preferred, and how does this depend on the purpose of a bibliometric analysis? Should multiple indicators be used in a complementary way?
• Advantages and disadvantages of different approaches to the field normalization of bibliometric indicators, e.g. cited-side and citing-side approaches.
• The use of techniques for statistical inference, such as hypothesis tests and confidence intervals, to complement bibliometric indicators.
• Journal impact metrics. Which properties should a good journal impact metric have? To what extent do existing metrics (IF, Eigenfactor, SJR, SNIP) have these properties? Is there a need for new metrics? How can journal impact metrics be used in a proper way?
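
To make two of the workshop topics above concrete, here is a minimal sketch of cited-side field normalization combined with a bootstrap confidence interval. It is an illustration only, not a method endorsed in the slides: the record layout, the function names (`field_baselines`, `normalized_scores`, `mncs`, `bootstrap_ci`) and the toy numbers are all assumptions, and the baseline is simplified to the mean citation count per field and publication year.

```python
import random
from statistics import mean


def field_baselines(records):
    """Mean citations per (field, year) in a reference set (assumed layout)."""
    totals = {}
    for r in records:
        key = (r["field"], r["year"])
        s, n = totals.get(key, (0, 0))
        totals[key] = (s + r["citations"], n + 1)
    return {key: s / n for key, (s, n) in totals.items()}


def normalized_scores(papers, baselines):
    """Cited-side normalization: citations divided by the field/year baseline.

    Assumes every baseline is positive; a real analysis needs a rule for
    fields in which the reference set has no citations at all.
    """
    return [p["citations"] / baselines[(p["field"], p["year"])] for p in papers]


def mncs(papers, baselines):
    """Mean normalized citation score of a set of papers."""
    return mean(normalized_scores(papers, baselines))


def bootstrap_ci(values, n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    means = sorted(mean(rng.choices(values, k=n)) for _ in range(n_resamples))
    lo_idx = int((1 - level) / 2 * n_resamples)
    hi_idx = int((1 + level) / 2 * n_resamples) - 1
    return means[lo_idx], means[hi_idx]


# Toy reference set (hypothetical numbers): two fields with very different
# citation densities.
world = (
    [{"field": "math", "year": 2010, "citations": c} for c in (0, 1, 2, 5)]
    + [{"field": "bio", "year": 2010, "citations": c} for c in (5, 10, 15, 30)]
)

# Toy unit under evaluation (hypothetical numbers).
unit = [
    {"field": "math", "year": 2010, "citations": 4},
    {"field": "bio", "year": 2010, "citations": 15},
    {"field": "bio", "year": 2010, "citations": 30},
    {"field": "math", "year": 2010, "citations": 1},
]

baselines = field_baselines(world)
scores = normalized_scores(unit, baselines)
unit_mncs = mncs(unit, baselines)   # 1.375 for this toy data
lower, upper = bootstrap_ci(scores)
print(f"MNCS = {unit_mncs:.3f}, 95% bootstrap interval = [{lower:.3f}, {upper:.3f}]")
```

With only four papers the interval is very wide, which is the point of reporting it: the same indicator value can be compatible with quite different underlying performance, something a bare average hides.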

An example of a problem in standards for assessment

Example: individual level bibliometrics
• Involves all three forms of standardization
• Not in the first place a technical problem, but does have many technical aspects
• Glaenzel & Wouters (2013) presented 10 dos and don’ts of individual level bibliometrics
• Moed (2013) presented a matrix/portfolio approach
• ACUMEN (2014) presented the design of a Web based research portfolio

Portfolio
Aim is to give researchers a voice in evaluation
[Diagram labels: narrative, influence]
➡ evidence based arguments
➡ shift to dialog orientation
➡ selection of indicators
➡ narrative component
➡ Good Evaluation Practices
➡ envisioned as web service

ACUMEN Portfolio
• Career Narrative: links expertise, output, and influence together in an evidence-based argument; included content is negotiated with evaluator and tailored to the particular evaluation
• Expertise
  – scientific/scholarly
  – technological
  – communication
  – organizational
  – knowledge transfer
  – educational
• Output
  – publications
  – public media
  – teaching
  – web/social media
  – data sets
  – software/tools
  – infrastructure
  – grant proposals
• Influence
  – on science
  – on society
  – on economy
  – on teaching
• Evaluation Guidelines
  – aimed at both researchers and evaluators
  – development of evidence based arguments (what counts as evidence?)
  – expanded list of research output
  – establishing provenance
  – taxonomy of indicators: bibliometric, webometric, altmetric
  – guidance on use of indicators
  – contextual considerations, such as: stage of career, discipline, and country of residence
Tatum & Wouters | 14 November 2013

Portfolio & Guidelines
➡ Instrument for empowering researchers in the processes of evaluation
➡ Taking into consideration all academic disciplines
➡ Suitable for other uses (e.g. career planning)
➡ Able to integrate into different evaluation systems

What type of standardization process do we need?

Evaluation Machines
• Primary function: make stuff auditable
• Mechanization of control – degradation of work and trust? (performance paradox)
• Risks for evaluand and defensive responses
• What are their costs, direct and indirect?
• Microquality versus macroquality – lock-in
• Goal displacement & strategic behaviour

Citation as infrastructure
• Infrastructures are not constructed but evolve
• Transparent structures taken for granted
• Supported by invisible work
• They embody technical and social standards
• Citation network includes databases, centres, publishers, guidelines

Effects of indicators
• Intended effect: behavioural change
• Unintended effects:
  – Goal displacement
  – Structural changes
• The big unknown: effects on knowledge?
• Institutional rearrangements
• Does quality go up or down?

Constitutive effects
• Limitations of conventional critiques (eg ‘perverse or unintended effects’)
• Effects:
  – Interpretative frames
  – Content & priorities
  – Social identities & relations (labelling)
  – Spread over time and levels
• Not a deterministic process
• Democratic role of evaluations