L14_PCA.pptx


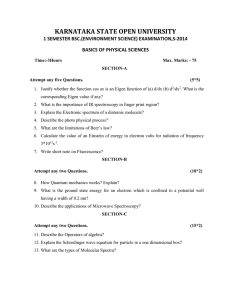

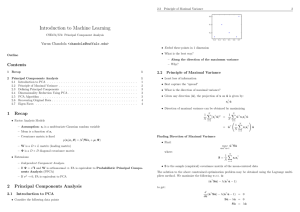

Principal Component Analysis and its use in microarray (MA) clustering
Lecture 12

What is PCA?
• This is a MATHEMATICAL procedure that transforms a set of correlated responses into a smaller set of uncorrelated variables called PRINCIPAL COMPONENTS.
• Uses:
  – Data screening
  – Clustering
  – Discriminant analysis
  – Regression, to combat multicollinearity

Objectives of PCA
• It is an exploratory technique meant to give researchers a better FEEL for their data.
• Reduce dimensionality, or rather, try to understand the TRUE dimensionality of the data.
• Identify “meaningful” variables.
• If you have a VARIANCE-COVARIANCE MATRIX, $\Sigma$, PCA returns new variables called Principal Components that are:
  – Uncorrelated
  – Ordered so that the first component explains MOST of the variability
  – Such that the remaining PCs explain decreasing amounts of variability

Idea of PCA
• Consider $x$ to be a random vector with mean $\mu$ and variance-covariance matrix $\Sigma$.
• The first PC variable is defined by $y_1 = a_1'(x - \mu)$, where $a_1$ is chosen so that $\mathrm{Var}(a_1'(x - \mu))$ is maximized over all vectors $a_1$ satisfying $a_1'a_1 = 1$.
• It can be shown that the maximum value of the variance of $a_1'(x - \mu)$ among all vectors satisfying the condition is $\lambda_1$ (the first, or largest, eigenvalue of the matrix $\Sigma$). This implies that $a_1$ is the eigenvector corresponding to the eigenvalue $\lambda_1$.
• The second PC is defined by the eigenvector corresponding to the second largest eigenvalue $\lambda_2$, and so on to the $p$th eigenvalue.

Supplementary Info:
• What are Eigenvalues and Eigenvectors?
• Also called characteristic roots (latent roots), eigenvalues are the roots of the polynomial equation defined by:
• $|\Sigma - \lambda I| = 0$
• This leads to an equation of the form:
• $c_1\lambda^{p} + c_2\lambda^{p-1} + \dots + c_p\lambda + c_{p+1} = 0$
• If $\Sigma$ is symmetric then the eigenvalues are real numbers and can be ordered.

Supplementary Info: II
• What are Eigenvectors?
• Similarly, eigenvectors are the vectors $a$ satisfying the equation $\Sigma a - \lambda a = 0$.
• If $\Sigma$ is symmetric then there will be $p$ eigenvectors corresponding to the $p$ eigenvalues.
• Eigenvectors are generally not unique and are normalized so that $a_j'a_j = 1$.
• Remarks: if two eigenvalues are NOT equal, their eigenvectors will be orthogonal to each other. When two eigenvalues are equal, their eigenvectors are CHOSEN to be orthogonal to each other (in this case they are non-unique).
• $\mathrm{tr}(\Sigma) = \sum_{j=1}^{p} \lambda_j$
• $|\Sigma| = \prod_{j=1}^{p} \lambda_j$

Idea of PCA contd…
• Hence the $p$ principal components are defined by $a_1, a_2, \dots, a_p$, the eigenvectors corresponding to the ordered eigenvalues of $\Sigma$.
• Here, $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_p$.
• Result: two principal components are uncorrelated if and only if their defining eigenvectors are orthogonal to each other. Hence the PCs form an orthogonal axis system in which the data are located.

Idea of PCA contd…
• The variance of the $j$th component is $\lambda_j$, $j = 1, \dots, p$.
• Remember: $\mathrm{tr}(\Sigma) = \sigma_{11} + \sigma_{22} + \dots + \sigma_{pp}$.
• Also, $\mathrm{tr}(\Sigma) = \lambda_1 + \lambda_2 + \dots + \lambda_p$.
• Hence, a common measure of the “importance” of the $j$th principal component is $\lambda_j / \mathrm{tr}(\Sigma)$.

Comments
• To actually do PCA we need to compute the principal component scores, that is, the values of the principal component variables for each unit in the data set.
• These scores provide the locations of the observations in a data set with respect to the principal component axes.
• Generally eigenvectors are normalized to length 1, $a_j'a_j = 1$.
• Often, to make comparisons between eigenvectors easier, each element in the vector is multiplied by the square root of the corresponding eigenvalue (the results are called component vectors), $c_j = \lambda_j^{1/2} a_j$.

Estimating PC
• Life would be easy if $\mu$ and $\Sigma$ were known. All we would have to do is compute the normalized eigenvectors and the corresponding eigenvalues.
• But most of the time we DO NOT know $\mu$ and $\Sigma$ and need to estimate them; the principal components are then the sample values corresponding to the estimated $\mu$ and $\Sigma$.
• Determining the # of PC:
  – Look for the eigenvalues that are much smaller than the others.
  – Use plots like the SCREE plot (a plot of each eigenvalue against its number).

Caveats
• The whole idea of PCA is to transform a set of correlated variables into a set of uncorrelated variables; hence, if the data are already uncorrelated, there is not much additional advantage in doing PCA.
• One can do PCA on the correlation matrix or the covariance matrix.
• In the former case (correlation matrix), the component correlation vectors $c_j = \lambda_j^{1/2} a_j$ give the correlations between the original variables and the $j$th principal component variable.

PCA and Multidimensional Scaling
• Essentially what PCA does is what is called the SINGULAR VALUE DECOMPOSITION (SVD) of a matrix:
• $X = UDV'$
• where $X$ is $n$ by $p$, with $n \ll p$ (in MA data)
• $U$ is $n$ by $n$
• $D$ is $n$ by $n$, a diagonal matrix with decreasing diagonal entries, $d_1 \ge d_2 \ge \dots \ge d_n$
• $V$ is a $p$ by $n$ matrix, which rotates $X$ into a new set of coordinates such that $XV = UD$.

SVD and MDS
• SVD is a VERY memory-hungry procedure; for MA data, where there are a large number of genes, it is very slow and often needs HUGE amounts of memory to work.
• Multidimensional Scaling (MDS) is a collection of methods that do not use the full data matrix but rather the distance matrix between the observations. This reduces the computation from $n$ by $p$ to $n$ by $n$ (quite a reduction!).

Sammon Mapping
• A common method used in MA is SAMMON mapping, which aims to find the two-dimensional representation whose dissimilarity matrix is as close as possible to the original one.
• PCA has the advantage that it represents the samples in a scatterplot whose axes are linear combinations of the most variable genes.
• Sammon mapping treats all genes equivalently and hence is a bit “duller” than PCA-based clustering.

PCA in Microarrays
• Useful technique to understand the TRUE dimensionality of the data.
• Useful for clustering.
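• In R you can use princomp() (in the base stats package) and sammon() (in the MASS package):

    library(MASS)  # provides sammon()
    my.data1 <- read.table("cluster.csv", header = TRUE, sep = ",")
    my.pca <- princomp(my.data1)  # PCA; needs more rows than columns
    summary(my.pca)               # variance explained by each component
    myd.sam <- sammon(dist(my.data1))  # Sammon mapping on the distance matrix
    plot(myd.sam$points, type = "n")   # set up empty axes
    text(myd.sam$points, labels = as.character(1:nrow(my.data1)))  # label points by row number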
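
The eigenvalue results from the earlier slides can be checked numerically. The following is a minimal sketch, not the lecture's own code: it assumes R's built-in USArrests data as a stand-in for cluster.csv (which is not included here), and all variable names are illustrative. It estimates the covariance matrix, extracts eigenvalues and eigenvectors, computes the principal component scores, and checks the trace, determinant, and SVD relationships.

```r
# Assumption: USArrests (built into R) stands in for the lecture's data.
X <- as.matrix(USArrests)          # n = 50 observations, p = 4 variables
S <- cov(X)                        # sample estimate of the covariance matrix
e <- eigen(S, symmetric = TRUE)    # eigenvalues are returned in decreasing order
lambda <- e$values                 # lambda_1 >= ... >= lambda_p
A <- e$vectors                     # columns a_1, ..., a_p, each of length 1

# tr(S) equals the sum of the eigenvalues; |S| equals their product
trace_ok <- isTRUE(all.equal(sum(diag(S)), sum(lambda)))
det_ok   <- isTRUE(all.equal(det(S), prod(lambda)))

# Principal component scores: y_j = a_j'(x - xbar) for every observation
Xc <- sweep(X, 2, colMeans(X))     # subtract the column means
scores <- Xc %*% A

# The scores are uncorrelated and the j-th score has variance lambda_j
score_cov_ok <- isTRUE(all.equal(cov(scores), diag(lambda),
                                 check.attributes = FALSE))

# "Importance" of each component: lambda_j / tr(S)
importance <- lambda / sum(diag(S))

# Component vectors c_j = sqrt(lambda_j) * a_j (scales column j of A)
C <- A %*% diag(sqrt(lambda))

# Link to the SVD of the centred data: lambda_j = d_j^2 / (n - 1)
sv <- svd(Xc)
svd_ok <- isTRUE(all.equal(sv$d^2 / (nrow(X) - 1), lambda))
```

Calling `plot(lambda, type = "b")` afterwards gives a scree plot, and `round(importance, 3)` shows the share of total variance carried by each component. Because the covariance matrix is used here, variables with large variances dominate the leading components; replacing `cov(X)` with `cor(X)` would give the correlation-matrix version.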