PCA: Principal Component Analysis in Machine Learning


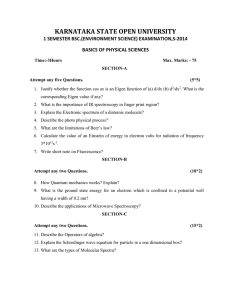

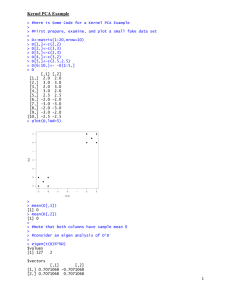

Introduction to Machine Learning
CSE474/574: Principal Component Analysis
Varun Chandola <chandola@buffalo.edu>

Outline

Contents
1 Recap
2 Principal Components Analysis
  2.1 Introduction to PCA
  2.2 Principle of Maximal Variance
  2.3 Defining Principal Components
  2.4 Dimensionality Reduction Using PCA
  2.5 PCA Algorithm
  2.6 Recovering Original Data
  2.7 Eigen Faces

1 Recap

• Factor Analysis Models
  – Assumption: $\mathbf{x}_i$ is a multivariate Gaussian random variable
  – Mean is a function of $\mathbf{z}_i$
  – Covariance matrix is fixed

$$p(\mathbf{x}_i \mid \mathbf{z}_i, \theta) = \mathcal{N}(\mathbf{W}\mathbf{z}_i + \boldsymbol{\mu}, \boldsymbol{\Psi})$$

  – $\mathbf{W}$ is a $D \times L$ matrix (loading matrix)
  – $\boldsymbol{\Psi}$ is a $D \times D$ diagonal covariance matrix
• Extensions:
  – Independent Component Analysis
  – If $\boldsymbol{\Psi} = \sigma^2 \mathbf{I}$ and $\mathbf{W}$ is orthonormal ⇒ FA is equivalent to Probabilistic Principal Components Analysis (PPCA)
  – If $\sigma^2 \to 0$, FA is equivalent to PCA

2 Principal Components Analysis

2.1 Introduction to PCA

• Consider the following data points

[Figure: scatter plot of the example two-dimensional data points]

• Embed these points in 1 dimension
• What is the best way?
  – Along the direction of the maximum variance
  – Why?

2.2 Principle of Maximal Variance

• Least loss of information
• Best capture the “spread”
• What is the direction of maximal variance?
• Given any direction $\hat{\mathbf{u}}$, the projection of $\mathbf{x}_i$ on $\hat{\mathbf{u}}$ is given by $\mathbf{x}_i^\top \hat{\mathbf{u}}$
• Direction of maximal variance can be obtained by maximizing

$$\frac{1}{N}\sum_{i=1}^{N} (\mathbf{x}_i^\top \hat{\mathbf{u}})^2 = \frac{1}{N}\sum_{i=1}^{N} \hat{\mathbf{u}}^\top \mathbf{x}_i \mathbf{x}_i^\top \hat{\mathbf{u}} = \hat{\mathbf{u}}^\top \left( \frac{1}{N}\sum_{i=1}^{N} \mathbf{x}_i \mathbf{x}_i^\top \right) \hat{\mathbf{u}}$$

• Find:

$$\max_{\hat{\mathbf{u}} :\, \hat{\mathbf{u}}^\top \hat{\mathbf{u}} = 1} \hat{\mathbf{u}}^\top \mathbf{S} \hat{\mathbf{u}}$$

where:

$$\mathbf{S} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{x}_i \mathbf{x}_i^\top$$

• $\mathbf{S}$ is the sample (empirical) covariance matrix of the mean-centered data

Finding Direction of Maximal Variance

The solution to the above constrained optimization problem may be obtained using the method of Lagrange multipliers. We maximize the following with respect to $\hat{\mathbf{u}}$:

$$\hat{\mathbf{u}}^\top \mathbf{S} \hat{\mathbf{u}} - \lambda(\hat{\mathbf{u}}^\top \hat{\mathbf{u}} - 1)$$

to get:

$$\frac{d}{d\hat{\mathbf{u}}}\left[ \hat{\mathbf{u}}^\top \mathbf{S} \hat{\mathbf{u}} - \lambda(\hat{\mathbf{u}}^\top \hat{\mathbf{u}} - 1) \right] = 0$$

$$\mathbf{S}\hat{\mathbf{u}} - \lambda\hat{\mathbf{u}} = 0$$

$$\mathbf{S}\hat{\mathbf{u}} = \lambda\hat{\mathbf{u}}$$

The solution to the above equation is an eigenvector of the matrix $\mathbf{S}$. But which one? Note that for the optimal solution:

$$\hat{\mathbf{u}}^\top \mathbf{S} \hat{\mathbf{u}} = \hat{\mathbf{u}}^\top \lambda \hat{\mathbf{u}} = \lambda$$

Thus we should choose the largest possible $\lambda$, which means that the first solution is the eigenvector of $\mathbf{S}$ with the largest eigenvalue.

2.3 Defining Principal Components

• First PC: Eigenvector of the (sample) covariance matrix with the largest eigenvalue
• Second PC?
  – Eigenvector with the next largest eigenvalue
• Variance of each PC is given by $\lambda_i$
• Variance captured by the first $L$ PCs ($1 \le L \le D$):

$$\frac{\sum_{i=1}^{L} \lambda_i}{\sum_{i=1}^{D} \lambda_i} \times 100$$

• What are eigenvectors and eigenvalues?

$$\mathbf{A}\mathbf{v} = \lambda\mathbf{v}$$

$\mathbf{v}$ is an eigenvector and $\lambda$ is an eigenvalue of the square matrix $\mathbf{A}$
• Geometric interpretation?

For the second PC we need to optimize the variance with a second constraint: the solution should be orthogonal to the first PC. It is easy to show that this solution is the eigenvector with the second largest eigenvalue, and so on.

2.4 Dimensionality Reduction Using PCA

• Consider the first $L$ eigenvalues and eigenvectors
• Let $\mathbf{W}$ denote the $D \times L$ matrix with the first $L$ eigenvectors in the columns (sorted by $\lambda$'s)
• PC score matrix $\mathbf{Z} = \mathbf{X}\mathbf{W}$
• Each input vector ($D \times 1$) is replaced by a shorter $L \times 1$ vector

2.5 PCA Algorithm

1. Center $\mathbf{X}$: $\mathbf{X} = \mathbf{X} - \hat{\boldsymbol{\mu}}$
2. Compute the sample covariance matrix: $\mathbf{S} = \frac{1}{N-1}\mathbf{X}^\top \mathbf{X}$
3. Find the eigenvectors and eigenvalues of $\mathbf{S}$
4. $\mathbf{W}$ consists of the first $L$ eigenvectors as columns
   • Ordered by decreasing eigenvalues
   • $\mathbf{W}$ is $D \times L$
5. Let $\mathbf{Z} = \mathbf{X}\mathbf{W}$
6. Each row in $\mathbf{Z}$ (or $\mathbf{z}_i^\top$) is the lower dimensional embedding of $\mathbf{x}_i$

2.6 Recovering Original Data

• Using $\mathbf{W}$ and $\mathbf{z}_i$:

$$\hat{\mathbf{x}}_i = \mathbf{W}\mathbf{z}_i$$

• Average Reconstruction Error:

$$J(\mathbf{W}, \mathbf{Z}) = \frac{1}{N}\sum_{i=1}^{N} \lVert \mathbf{x}_i - \hat{\mathbf{x}}_i \rVert^2$$

Theorem 1 (Classical PCA Theorem). Among all possible orthonormal sets of $L$ basis vectors, PCA gives the solution which has the minimum reconstruction error.

• Optimal “embedding” in $L$ dimensional space is given by $\mathbf{z}_i = \mathbf{W}^\top \mathbf{x}_i$

2.7 Eigen Faces

EigenFaces [1]
• Input: A set of images (of faces)
• Task: Identify if a new image is a face or not.

References

[1] M. Turk and A. Pentland. Face recognition using eigenfaces. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR ’91), pages 586–591, Jun 1991.
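The six steps of the PCA algorithm (Section 2.5), the reconstruction error of Section 2.6, and the variance-captured ratio of Section 2.3 can be sketched in NumPy as follows. This is a minimal illustration, not the course's reference implementation: the function names (`pca_fit`, `pca_transform`, `pca_reconstruct`, `reconstruction_error`, `variance_captured`) and the synthetic data are assumptions made for this example. The notes write the reconstruction as $\hat{\mathbf{x}}_i = \mathbf{W}\mathbf{z}_i$ for centered data; the sketch adds the estimated mean back so that the result lives in the original coordinates.

```python
import numpy as np


def pca_fit(X, L):
    """Fit PCA on an N x D data matrix X, keeping L components (hypothetical helper)."""
    mu = X.mean(axis=0)                    # step 1: estimate the mean ...
    Xc = X - mu                            # ... and center the data
    S = Xc.T @ Xc / (X.shape[0] - 1)       # step 2: D x D sample covariance
    eigvals, eigvecs = np.linalg.eigh(S)   # step 3: eigh suits symmetric S, ascending order
    order = np.argsort(eigvals)[::-1]      # step 4: reorder by decreasing eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    W = eigvecs[:, :L]                     # D x L matrix of the first L eigenvectors
    return mu, W, eigvals


def pca_transform(X, mu, W):
    """Steps 5-6: rows of Z are the L-dimensional embeddings (Z = XW on centered X)."""
    return (X - mu) @ W


def pca_reconstruct(Z, mu, W):
    """Map embeddings back to D dimensions; the mean is added back (an assumption here)."""
    return Z @ W.T + mu


def reconstruction_error(X, X_hat):
    """Average squared reconstruction error J(W, Z)."""
    return np.mean(np.sum((X - X_hat) ** 2, axis=1))


def variance_captured(eigvals, L):
    """Percentage of total variance captured by the first L principal components."""
    return 100.0 * eigvals[:L].sum() / eigvals.sum()


# Usage on synthetic data (made up for illustration): 200 correlated points in 3-D.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3)) @ rng.normal(size=(3, 3))
mu, W, eigvals = pca_fit(X, L=2)
Z = pca_transform(X, mu, W)
X_hat = pca_reconstruct(Z, mu, W)
print("variance captured (%):", variance_captured(eigvals, 2))
print("average reconstruction error:", reconstruction_error(X, X_hat))
```

Using `np.linalg.eigh` rather than `np.linalg.eig` is a design choice for this sketch: the covariance matrix is symmetric, so `eigh` returns real eigenvalues and orthonormal eigenvectors, which matches the orthonormal basis assumed by the Classical PCA Theorem.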