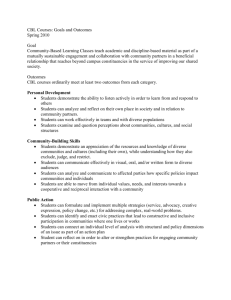

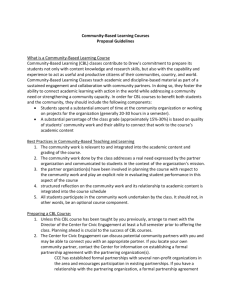

Capstone- 2013- Charles, Choi

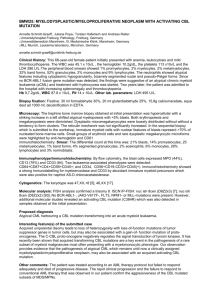
