Tail Latency: Networking


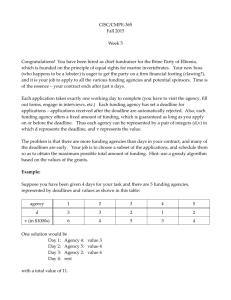

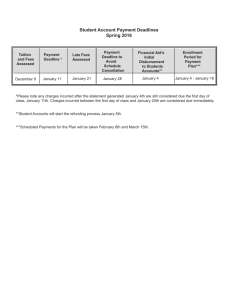

The story thus far
• Tail latency is bad
• Causes:
  – Resource contention with background jobs
  – Device failure
  – Uneven split of data between tasks
  – Network congestion for reducers

Ways to address tail latency
• Clone all tasks
• Clone slow tasks
• Copy intermediate data
• Remove/replace frequently failing machines
• Spread out reducers

What is missing from this picture?
• Networking:
  – Spreading out reducers is not sufficient.
• The network is crucial
  – Studies on Facebook traces show that [Orchestra]:
    • in 26% of jobs, shuffle is 50% of runtime
    • in 16% of jobs, shuffle is more than 70% of runtime
    • 42% of tasks spend over 50% of their time writing to HDFS

User-facing online services
[Figure: request/response tree of aggregators and workers, annotated with latency budgets (e.g. 200 ms, 100 ms, 45 ms)]
• Application SLAs
• Cascading SLAs
  – SLAs for components at each level of the hierarchy
• Network SLAs
  – Deadlines on communications between components
• Flow deadlines
  – A flow is useful if and only if it satisfies its deadline
• Today's transport protocols:
  – Deadline agnostic and strive for fairness

What is the Network's Role?
• Analyzed RTT distribution measurements [DCTCP]:
  – Median RTT takes 334 μs, but 6% take over 2 ms
  – Can be as high as 14 ms
• Network delays alone can consume the data retrieval's time budget
Source: Data Center TCP (DCTCP) [SIGCOMM'10]

What is the Impact of the Network
• Assume a 10 ms deadline for tasks [DCTCP]
• Simulate job completion times based on distributions of task completion times (focus on 99.9%)
• For 40 and 400 tasks, about 4 tasks (14%) and 14 tasks (3%) fail, respectively

Impact on Page Creation
• Under the RTT distribution, 150 data retrievals take 200 ms (ignoring computation time)
• As Facebook is already at 130 data retrievals per page, network delays need to be addressed

Other Implication of Network: Limits Scalability
• Scalability of a Netflix-like recommendation system is bottlenecked by communication
• Did not scale beyond 60 nodes
  » Comm. time increased faster than comp. time decreased
[Figure: iteration time (s), split into communication and computation, versus number of machines (10, 30, 60, 90)]

What Causes this Variation in Network Transfer Times?
• First, look at the types of traffic in the network
• Background traffic
  – Latency-sensitive short control messages, e.g. heartbeats, job status
  – Large files, e.g. HDFS replication, loading of new data
• Map-reduce jobs
  – Small RPC request/response with tight deadlines
  – HDFS reads or writes with tight deadlines

What Causes this Variation in Network Transfer Times? (continued)
• No notion of priority
  – Latency-sensitive and non-latency-sensitive traffic share the network equally.
• Uneven load balancing
  – ECMP doesn't schedule flows evenly across all paths
  – It assumes long and short flows are the same
• Bursts of traffic
  – Networks have buffers, which reduce loss but introduce latency (time waiting in a buffer is variable)
  – Kernel optimizations introduce burstiness

Ways to Eliminate Variation and Improve Tail Latency
• Make the network faster
  – HULL, DeTail, DCTCP
  – Faster networks == smaller tail
• Optimize how applications use the network
  – Orchestra, CoFlows
  – Big-data jobs have specific transfer patterns; optimize the patterns to reduce transfer time
• Make the network aware of deadlines
  – D3, PDQ
  – Tasks have deadlines. No point doing any work if the deadline wouldn't be met
  – Try to prioritize flows and schedule them based on deadline.

Fair-Sharing or Deadline-based Sharing
• Fair-share (status quo)
  – Everyone plays nice, but some deadlines can be missed
• Deadline-based
  – Deadlines are met, but this may require a non-trivial implementation
• Two ways to do deadline-based sharing
  – Earliest deadline first (PDQ)
  – Make BW reservations for each flow
    • Flow rate = flow size / flow deadline
    • Flow size & deadline are known a priori

Limitations of Fair Sharing
• Case for unfair sharing: flow f1 has a 20 ms deadline, flow f2 a 40 ms deadline
  [Figure: timelines of f1 and f2 under the status quo (a missed deadline is marked X) and under deadline-aware sharing]
• Case for flow quenching: 6 flows with a 30 ms deadline
  – Insufficient bandwidth to satisfy all deadlines
  – With fair share, all flows miss the deadline (empty response)
  – With deadline awareness, one flow can be quenched; all other flows make their deadline (partial response)

Issues with Deadline Based Scheduling
• Implications for non-deadline based jobs
  – Starvation? Poor completion times?
• Implementation issues
  – Assign deadlines to flows, not packets
  – Reservation approach
    • Requires a reservation for each flow
    • Big data flows: can be small & have small RTT
      – Control loop must be extremely fast
  – Earliest deadline first
    • Requires coordination between switches & servers
    • Servers: specify flow deadline
    • Switches: prioritize flows and determine rate
      – May require complex switch mechanisms

How do you make the Network Faster
• Throw more hardware at the problem
  – Fat-Tree, VL2, B-Cube, Dragonfly
  – Increases bandwidth (throughput) but not necessarily latency

So, how do you reduce latency
• Trade bandwidth for latency
  – Buffering adds variation (unpredictability)
  – Eliminate network buffering & bursts
• Optimize the network stack
  – Use link-level information to detect congestion
  – Inform the application so it can adapt by using a different path

HULL: Trading BW for Latency
• Buffering introduces latency
  – Buffer is used to accommodate bursts
  – To allow congestion control to get good throughput
• Removing buffers means
  – Lower throughput for large flows
  – Network can't handle bursts
  – Predictable low latency

Why Do Bursts Exist?
• Systems review:
  – NIC (network card) informs OS of packets via interrupt
    • Interrupts consume CPU
    • With one interrupt for each packet, the CPU will be overwhelmed
  – Optimization: batch packets up before raising an interrupt
    • Size of the batch is the size of the burst

Impact of Interrupt Coalescing
[Table: columns are interrupt coalescing, receiver CPU (%), throughput (Gbps), burst size (KB)]
• More interrupt coalescing: lower CPU utilization & higher throughput, but more burstiness

Why Does Congestion Control Need Buffers?
• Congestion control, AKA TCP
  – Detects bottleneck link capacity through packet loss
  – On loss, it halves its sending rate.
• Buffers help keep the network busy
  – Important when TCP reduces its sending rate by half
• Essentially, the network must double its capacity for TCP to work well.
  – Buffers allow for this doubling

TCP Review
• Bandwidth-delay product rule of thumb:
  – A single flow needs C×RTT of buffering for 100% throughput.
[Figure: buffer size B and throughput over time for B < C×RTT and B ≥ C×RTT; throughput axis marked at 100%]

Key Idea Behind HULL
• Key idea: associate congestion with link utilization, not buffer occupancy (Gibbens & Kelly 1999, Kunniyur & Srikant 2001)
• Eliminate bursts
  – Add a token bucket (pacer) into the network
  – The pacer must be in the network so that it acts after the system optimizations that cause bursts.
• Eliminate buffering
  – Send congestion notification messages before the link is fully utilized
    • Make applications believe the link is full when there's still capacity
  – TCP has a poor congestion control algorithm
    • Replace with DCTCP
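
The "Eliminate bursts" bullet on the HULL slide names a token-bucket pacer. As a rough illustration of that idea only (not HULL's actual pacer, whose design the slides do not give), the sketch below computes when a token bucket would release each packet of a burst. The function name `pace`, its parameters, and the example burst are all hypothetical.

```python
def pace(arrivals, sizes, rate, bucket):
    """Departure times (s) of packets released by a token-bucket pacer.

    arrivals: packet arrival times in seconds, non-decreasing
    sizes:    packet sizes in bytes (each assumed to be <= bucket)
    rate:     token refill rate in bytes per second
    bucket:   bucket depth in bytes (largest back-to-back burst allowed)
    """
    tokens = float(bucket)  # start with a full bucket
    last = 0.0              # time at which `tokens` was last updated
    departures = []
    for arrival, size in zip(arrivals, sizes):
        # A packet cannot leave before it arrives or before its predecessor.
        now = max(arrival, last)
        tokens = min(bucket, tokens + (now - last) * rate)
        if tokens < size:
            # Hold the packet until enough tokens have accumulated.
            now += (size - tokens) / rate
            tokens = size
        tokens -= size
        last = now
        departures.append(now)
    return departures


# Hypothetical burst: ten 1500-byte packets handed over at t = 0,
# e.g. after interrupt coalescing on the sender side.
burst = pace([0.0] * 10, [1500] * 10, rate=1_500_000, bucket=1500)
gaps = [b - a for a, b in zip(burst, burst[1:])]
print(gaps)
```

With a bucket only one packet deep, the ten packets that arrived together leave about one packet-time apart (1500 B at 1.5 MB/s), so the burst is spread out before it reaches switch buffers. A deeper bucket would let a correspondingly larger burst through unpaced.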

Orchestra: Managing Data Transfers in Computer Clusters
• Group all flows belonging to a stage into a transfer
• Perform inter-transfer coordination
• Optimize at the level of transfers rather than individual flows

Transfer Patterns
[Figure: transfer patterns among HDFS, Map, and Reduce stages: broadcast, shuffle, and incast*]
• Transfer: set of all flows transporting data between two stages of a job
  – Acts as a barrier
• Completion time: time for the last receiver to finish

Orchestra
[Figure: an Inter-Transfer Controller (ITC) with fair sharing, FIFO, and priority policies sits above per-transfer Transfer Controllers (TCs): a shuffle TC (Hadoop shuffle, WSS) and broadcast TCs (HDFS, Tree, Cornet), managing transfers shuffle, broadcast 1, and broadcast 2]
• Cooperative broadcast (Cornet)
  – Infer and utilize topology information
• Weighted Shuffle Scheduling (WSS)
  – Assign flow rates to optimize shuffle completion time
• Inter-Transfer Controller (ITC)
  – Implement weighted fair sharing between transfers
• End-to-end performance

Cornet: Cooperative broadcast
• Broadcast the same data to every receiver
  » Fast, scalable, adaptive to bandwidth, and resilient
• Peer-to-peer mechanism optimized for cooperative environments
• Use BitTorrent to distribute data

Observations → Cornet design decisions
1. High-bandwidth, low-latency network → large block size (4-16 MB)
2. No selfish or malicious peers → no need for incentives (e.g., TFT); no (un)choking; everyone stays till the end
3.
Topology matters → topology-aware broadcast

Topology-aware Cornet
• Many data center networks employ tree topologies
• Each rack should receive exactly one copy of the broadcast
  – Minimize cross-rack communication
• Topology information reduces cross-rack data transfer
  – Mixture of spherical Gaussians to infer network topology

Status quo in Shuffle
[Figure: senders s1-s5 sending to receivers r1 and r2]
• Links to r1 and r2 are full: 3 time units
• Link from s3 is full: 2 time units
• Completion time: 5 time units

Weighted Shuffle Scheduling
• Allocate rates to each flow using weighted fair sharing, where the weight of a flow between a sender-receiver pair is proportional to the total amount of data to be sent
[Figure: senders s1-s5 and receivers r1, r2, with flows labeled 1, 1, 2, 2, 1, 1]
• Completion time: 4 time units
• Up to 1.5X improvement

Faster spam classification
• Communication reduced from 42% to 28% of the iteration time
• Overall 22% reduction in iteration time

Summary
• Discussed tail latency in the network
  – Types of traffic in the network
  – Implications for jobs
  – Causes of tail latency
• Discussed HULL:
  – Trade bandwidth for latency
  – Penalize huge flows
  – Eliminate bursts and buffering
• Discussed Orchestra:
  – Optimize transfers instead of individual flows
    • Utilize knowledge about application semantics

http://www.mosharaf.com/
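
The 5 versus 4 time-unit comparison on the "Status quo in Shuffle" and "Weighted Shuffle Scheduling" slides can be reproduced with a small fluid simulation. This is a sketch under stated assumptions, not Orchestra's implementation: every link is assumed to carry 1 data unit per time unit, per-flow fair sharing is approximated by max-min fairness, and the flow sizes (s3 sends 2 units to each receiver, every other sender sends 1 unit) are a reading of the figure labels. The names `weighted_max_min` and `completion_time` are hypothetical.

```python
def weighted_max_min(paths, weights, capacity=1.0):
    """Weighted max-min fair rates via progressive filling.

    paths:   {flow: (link, link, ...)} links each flow traverses
    weights: {flow: weight}
    Every link is assumed to have the same capacity.
    """
    links = {l for p in paths.values() for l in p}
    left = {l: capacity for l in links}
    rates = {f: 0.0 for f in paths}
    active = set(paths)
    while active:
        # Largest common increment before some link fills up.
        step = min(
            left[l] / sum(weights[f] for f in active if l in paths[f])
            for l in links
            if any(l in paths[f] for f in active)
        )
        for f in active:
            rates[f] += step * weights[f]
            for l in paths[f]:
                left[l] -= step * weights[f]
        # Flows crossing a saturated link keep their current rate.
        full = {l for l in links if left[l] <= 1e-9}
        active = {f for f in active if not full & set(paths[f])}
    return rates


def completion_time(sizes, weights):
    """Time for the last flow to finish; rates are recomputed
    every time a flow completes."""
    paths = {(s, r): ("out:" + s, "in:" + r) for (s, r) in sizes}
    left = dict(sizes)
    now = 0.0
    while left:
        rates = weighted_max_min({f: paths[f] for f in left}, weights)
        dt = min(left[f] / rates[f] for f in left)
        now += dt
        left = {f: d - rates[f] * dt for f, d in left.items()
                if d - rates[f] * dt > 1e-9}
    return now


# Assumed flow sizes, in data units, read from the figure labels.
sizes = {("s1", "r1"): 1, ("s2", "r1"): 1, ("s3", "r1"): 2,
         ("s3", "r2"): 2, ("s4", "r2"): 1, ("s5", "r2"): 1}

fair = completion_time(sizes, {f: 1 for f in sizes})  # equal weights
wss = completion_time(sizes, sizes)                   # weight = data size
print(fair, wss)
```

Under these assumptions the equal-weight run should take about 5 time units and the size-weighted run about 4, in line with the slides. The size-weighted run cannot do better here, because each receiver has 4 units to pull through a unit-capacity link.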