Decoupled Dynamic Cache Segmentation Samira M. Khan and

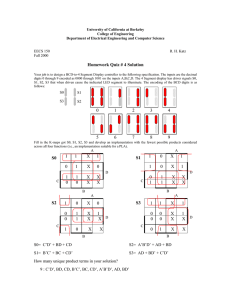

18th International Symposium on High Performance Computer Architecture, 2012

Decoupled Dynamic Cache Segmentation
Samira M. Khan, Zhe Wang and Daniel A. Jiménez
The University of Texas at San Antonio

Introduction
• Least Recently Used (LRU) replacement policy
  – Does not work well in the last-level cache (LLC) [Lai et al. ISCA’00, Qureshi et al. ISCA’07, Chaudhuri et al. MICRO’09, Jaleel et al. ISCA’10]
  – Temporal locality is filtered by L1 and L2 accesses
  – Does not address workload behavior
    • Working set is larger than the cache size (thrashing)
    • Bursts of non-temporal references (scanning)
Need a replacement policy in the LLC that adapts to workload behavior

Introduction
• Non-referenced and referenced blocks exhibit different behavior in the LLC [Karedla et al. 94, Megiddo et al. FAST’03, Qureshi et al. ISCA’07, Jaleel et al. ISCA’10]
[Figure: 400.perlbench; each pixel represents a block in a 1MB LLC. Legend: non-referenced blocks that will not be referenced before eviction; non-referenced blocks that will be referenced before eviction; referenced lines]
We propose a cache segmentation technique that dynamically adjusts the number of non-referenced and referenced blocks and adapts to workload behavior

Contribution
• We propose Dynamic Cache Segmentation (DCS)
  – Each set is divided into two segments
    • One for non-referenced blocks and one for referenced blocks
  – Predicts the optimal number of blocks in each segment
    • Sampling-based, scalable, low-overhead predictor
  – Decoupled from the replacement policy
    • Works with a random policy
Improves performance of a four-core system with an 8MB LLC by 12% on average, with a random replacement policy requiring only half the space of the LRU policy

Outline
• Introduction
• Motivation
• Dynamic Cache Segmentation
• Optimal Segment Predictor
• Methodology
• Results
• Conclusion

Motivation
[Figure: 400.perlbench, 434.zeusmp, 435.gromacs; each pixel represents a block in a 1MB LLC. Legend: non-referenced blocks that will not be referenced before eviction; non-referenced blocks that will be referenced before eviction; referenced blocks]
The mix of non-referenced and referenced blocks differs across workloads

Motivation
Workloads have different sensitivity to segmentation
[Figure: IPC versus static segmentation size (1 to 15), compared with LRU. Panels: 400.perlbench (sensitive), 434.zeusmp (insensitive), 435.gromacs (LRU friendly)]
Need a dynamic segmentation policy to address workload behavior

Dynamic Cache Segmentation
• Each cache set holds a non-referenced list and a referenced list
• The Optimal Segment Size Predictor supplies the predicted segment size
• On eviction: is predicted segment size <= non-referenced list size?
  – Yes: evict from the non-referenced list
  – No: evict from the referenced list
Only 1 bit is needed to differentiate between non-referenced and referenced blocks

Dynamic Cache Segmentation (example, optimal segment size 4)
Access sequence: x (miss), d (hit), d (hit), c (hit), y (miss)
• Initial state: non-referenced list a b c d e; referenced list f g h i j k l m n o p
• Access x, miss: non-referenced blocks: 5, greater than the optimal size, so evict from the non-referenced list
  – Non-referenced list: x a b c d; referenced list: f g h i j k l m n o p
• Access d, hit: d is now a referenced block
  – Non-referenced list: x a b c; referenced list: d f g h i j k l m n o p
• Access d, hit: nothing happens, d is already in the referenced list
• Access c, hit: c is now a referenced block
  – Non-referenced list: x a b; referenced list: c d f g h i j k l m n o p
• Access y, miss: non-referenced blocks: 3, less than the optimal size, so evict from the referenced list
  – y joins the non-referenced list

Decoupled DCS
• Our policy only decides which list to use in eviction
• Each list is free to use any replacement policy
• Decouples replacement policy from segmentation
• We use 1-bit Not-Recently-Used (NRU) and random
[Figure: a cache set split into a non-referenced list and a referenced list]
Use the random/NRU policy within the list

Optimal Segment Predictor
• Sampling-based set-dueling [Qureshi et al. ISCA’07]
  – Samples a small number of sets
  – A counter keeps track of misses in a specific type of set
  – The policy with fewer misses is the winner
• Sampler sets have specific segment sizes
  – Sizes 1, assoc/4, assoc/2, 3·assoc/4, assoc
• Sets in the cache follow the dynamic segment size
  – Decided by the predictor
• Decision tree analysis minimizes space overhead
Our predictor uses only 16 sets for each type of set

Optimal Segment Predictor (decision tree)
• Set-dueling between segment size 16 and 8
  – Segment 8 winner: set-dueling between segment size 1 and 8
    • Segment 1 winner: optimal segment size 1
    • Segment 8 winner: set-dueling between segment size 4 and 8
      – Segment 4 winner: optimal segment size 4
      – Segment 8 winner: optimal segment size 8
  – Segment 16 winner: set-dueling between segment size 12 and 16
    • Segment 12 winner: optimal segment size 12
    • Segment 16 winner: optimal segment size 16
Winner of each level decides the segment size of the next level

Optimal Segment Predictor (decision tree with ignore option)
• Same tree as above; each candidate size (1, 4, 8, 12, 16) then duels against ignoring segmentation
  – Set-dueling between size N and ignore segment
    • Segment N winner: optimal segment size N
    • Ignore segment winner: ignore segment
We also incorporate ignoring segmentation for LRU-friendly workloads

DCS in Shared Cache Partitioning
• Complements shared cache partitioning techniques [Qureshi et al. MICRO’06, Suh et al. HPCA’02]
• Utility-based Cache Partitioning (UCP) [Qureshi et al. MICRO’06]
  – Partitions cache ways among the cores based on utility
• DCS segments each partition
  – Into non-referenced and referenced blocks
[Figure: cache ways partitioned among core 0, core 1, core 2, core 3]
UCP decides the way partitions among the cores
DCS decides the segmentation in each partition

Methodology
• CMP$im cycle-accurate simulator [Jaleel et al. 2008]
  – 2MB per core, 16-way set-associative LLC
  – 32KB I+D L1, 256KB L2
  – 200-cycle DRAM access time
• 19 memory-intensive SPEC CPU 2006 benchmarks
• 10 mixes of SPEC CPU 2006 for 4 cores

Segment Size in SPEC CPU 2006
[Figure: percentage of total cycles (0 to 100) spent at each segment size (ignore, size 16, size 12, size 8, size 4, size 1) per benchmark, grouped as Insensitive, LRU Friendly, and Sensitive. Benchmarks: 433.milc, 434.zeusmp, 436.cactusADM, 462.libquantum, 470.lbm, 435.gromacs, 450.soplex, 459.GemsFDTD, 471.omnetpp, 473.astar, 400.perlbench, 401.bzip2, 403.gcc, 429.mcf, 437.leslie3d, 456.hmmer, 481.wrf, 482.sphinx3, 483.xalancbmk]
Segment size chosen by DCS differs depending on the workload

Speedup Compared to LRU in Single-threaded Workloads
[Figure: speedup over LRU (0.98 to 1.14) per benchmark and mean, grouped as Insensitive, LRU Friendly, and Sensitive. Series: DCS with NRU, DCS with Random, DIP [Qureshi et al. ISCA'07], RRIP [Jaleel et al. ISCA'10]]
Outperforms LRU replacement on average by 5.2% with NRU replacement

Speedup Compared to LRU in Multiprogrammed Workloads
[Figure: speedup over LRU (0.95 to 1.3) per mix and mean. Series: DCS with NRU, UCP with LRU [Qureshi et al. MICRO'06], DCS + UCP with Random, DCS + UCP with NRU. Mixes: mix1 ssif, mix2 sfii, mix3 isis, mix4 iifi, mix5 siss, mix6 siis, mix7 sisi, mix8 sssi, mix9 isss, mix10 ifss]
Random performs similarly to NRU with DCS + UCP

Space Overhead in 8MB LLC Multi-core
[Figure: overhead in KB (0 to 80) for LRU, UCP (LRU), DCS with UCP (NRU), DCS with UCP (Rand). Components: DCS overhead (predictor), DCS overhead (reference bit), UCP overhead, replacement state]
With random replacement, space overhead is less than half that of LRU
This is 0.35% of the 8MB LLC capacity

Conclusion
• Dynamic Segmentation
  – Treats non-referenced and referenced blocks differently
  – Addresses different workload behavior
  – Decoupled from replacement policy
• Results
  – On average 12% improvement with random policy
  – Requires half of the space of LRU

Thank you
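
As an illustration of the eviction rule from the Dynamic Cache Segmentation slides, here is a minimal Python sketch of one cache set. It is not the authors' implementation: the class name `SegmentedSet`, the `choose` parameter, and the fallback when the referenced list is empty are assumptions made for this example. The predicted segment size is passed in as a plain number and assumed to be at least 1; the set-dueling predictor itself is not modeled.

```python
import random


class SegmentedSet:
    """One cache set with a 1-bit referenced flag per block (hypothetical sketch)."""

    def __init__(self, non_referenced, referenced, choose=random.choice):
        # Map block tag -> reference bit (False = non-referenced list).
        self.ref_bit = {tag: False for tag in non_referenced}
        self.ref_bit.update({tag: True for tag in referenced})
        # `choose` picks the victim within a list; any policy can be plugged in,
        # which is the "decoupled" part. Random is the default here.
        self.choose = choose

    def non_referenced(self):
        return [tag for tag, bit in self.ref_bit.items() if not bit]

    def referenced(self):
        return [tag for tag, bit in self.ref_bit.items() if bit]

    def access(self, tag, predicted_size):
        """Return ("hit", None) or ("miss", evicted_tag). The set is assumed full."""
        if tag in self.ref_bit:
            # A hit moves the block to the referenced list (no-op if already there).
            self.ref_bit[tag] = True
            return "hit", None
        non_ref, ref = self.non_referenced(), self.referenced()
        # Rule from the slides: predicted segment size <= non-referenced list size
        # means evict from the non-referenced list, otherwise from the referenced list.
        # Assumption: fall back to the non-referenced list if the referenced list is empty.
        if predicted_size <= len(non_ref) or not ref:
            victim = self.choose(non_ref)
        else:
            victim = self.choose(ref)
        del self.ref_bit[victim]
        self.ref_bit[tag] = False  # a newly inserted block starts as non-referenced
        return "miss", victim


# Replay the access sequence from the slides with segment size 4.
# The deterministic "pick the last candidate" chooser is only for reproducibility;
# the slides do not specify which block inside a list is evicted.
cache_set = SegmentedSet("abcde", "fghijklmnop", choose=lambda blocks: blocks[-1])
results = [cache_set.access(tag, predicted_size=4) for tag in ["x", "d", "d", "c", "y"]]
```

In this run the first miss (x) evicts from the non-referenced list because it holds 5 blocks, and the last miss (y) evicts from the referenced list because only 3 non-referenced blocks remain, matching the two cases in the example. Swapping `choose` for an NRU-style selector would change only which block inside the chosen list is evicted.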