Improving Cache Performance by Exploiting Read-Write Disparity Samira Khan

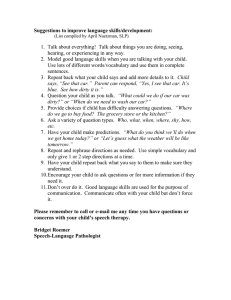

Improving Cache Performance by Exploiting Read-Write Disparity
Samira Khan, Alaa R. Alameldeen, Chris Wilkerson, Onur Mutlu, and Daniel A. Jiménez

Summary
• Read misses are more critical than write misses
  – Read misses can stall the processor, writes are not on the critical path
• Problem: Cache management does not exploit read-write disparity
• Goal: Design a cache that favors reads over writes to improve performance
  – Lines that are only written to are less critical
  – Prioritize lines that service read requests
• Key observation: Applications differ in their read reuse behavior in clean and dirty lines
• Idea: Read-Write Partitioning
  – Dynamically partition the cache between clean and dirty lines
  – Protect the partition that has more read hits
• Improves performance over three recent mechanisms

Outline
• Motivation
• Reuse Behavior of Dirty Lines
• Read-Write Partitioning
• Results
• Conclusion

Motivation
• Read and write misses are not equally critical
• Read misses are more critical than write misses
  – Read misses can stall the processor
  – Writes are not on the critical path
[Figure: timeline of the accesses Rd A, Wr B, Rd C; two STALL intervals; Wr B is labeled "Buffer/writeback B"]
Cache management does not exploit the disparity between read and write requests

Key Idea
• Favor reads over writes in cache
• Differentiate between lines that are read and lines that are only written to
• Cache should protect lines that serve read requests
• Lines that are only written to are less critical
• Improve performance by maximizing read hits
• An Example
[Figure: access sequence Rd A, Wr B, Rd B, Wr C, Rd D; A and D are Read-Only, B is Read and Written, C is Write-Only]

An Example
[Figure: the same access sequence under two policies.
 LRU Replacement Policy: Rd A (miss), Wr B (miss), Rd B (hit), Wr C (miss), Rd D (miss); 2 stalls per iteration.
 Read-Biased Replacement Policy: Rd A (hit), Wr B (hit), Rd B (hit), Wr C (miss), Rd D (miss); 1 stall per iteration, cycles saved.]
Dirty lines are treated differently depending on read requests
Evicting lines that are only written to can improve performance

Reuse Behavior of Dirty Lines
• Not all dirty lines are the same
• Write-only Lines
  – Do not receive read requests, can be evicted
• Read-Write Lines
  – Receive read requests, should be kept in the cache
Evicting write-only lines provides more space for read lines and can improve performance

Reuse Behavior of Dirty Lines
[Figure: percentage of cachelines in LLC (0 to 100) for the SPEC CPU2006 benchmarks 400.perlbench through 483.xalancbmk; series: Dirty (write-only), Dirty (read-write)]
Applications have different read reuse behavior in dirty lines
On average 37.4% of lines are write-only, 9.4% of lines are both read and written

Read-Write Partitioning
• Goal: Exploit different read reuse behavior in dirty lines to maximize number of read hits
• Observation:
  – Some applications have more reads to clean lines
  – Other applications have more reads to dirty lines
• Read-Write Partitioning:
  – Dynamically partitions the cache into clean and dirty lines
  – Evicts lines from the partition that has less read reuse
Improves performance by protecting lines with more read reuse

Read-Write Partitioning
[Figure: two panels, Soplex and Xalanc; x-axis: Instructions (M), 0 to 500; y-axis: Number of Reads Normalized to Reads in clean lines at 100m; series: Clean Line, Dirty Line]
Applications have significantly different read reuse behavior in clean and dirty lines

Read-Write Partitioning
• Utilize disparity in read reuse in clean and dirty lines
• Partition the cache into clean and dirty lines
• Predict the partition size that maximizes read hits
• Maintain the partition through replacement
  – DIP [Qureshi et al. 2007] selects victim within the partition
[Figure: cache sets split into Dirty Lines and Clean Lines; Predicted Best Partition Size 3; Replace from dirty partition]

Predicting Partition Size
• Predicts partition size using sampled shadow tags
  – Based on utility-based partitioning [Qureshi et al. 2006]
• Counts the number of read hits in clean and dirty lines
• Picks the partition (x, associativity - x) that maximizes number of read hits
[Figure: sampled sets with Dirty and Clean shadow tags; each shadow tag array has counters per stack position (MRU, MRU-1, …, LRU+1, LRU); the maximum number of read hits selects the partition]

Methodology
• CMP$im x86 cycle-accurate simulator [Jaleel et al. 2008]
• 4MB 16-way set-associative LLC
• 32KB I+D L1, 256KB L2
• 200-cycle DRAM access time
• 550m representative instructions
• Benchmarks:
  – 10 memory-intensive SPEC benchmarks
  – 35 multi-programmed applications

Comparison Points
• DIP, RRIP: Insertion Policy [Qureshi et al. 2007, Jaleel et al. 2010]
  – Avoid thrashing and cache pollution
    • Dynamically insert lines at different stack positions
  – Low overhead
  – Do not differentiate between read-write accesses
• SUP+: Single-Use Reference Predictor [Piquet et al. 2007]
  – Avoids cache pollution
    • Bypasses lines that do not receive re-references
  – High accuracy
  – Does not differentiate between read-write accesses
    • Does not bypass write-only lines
  – High storage overhead, needs PC in LLC

Comparison Points: Read Reference Predictor (RRP)
• A new predictor inspired by prior works [Tyson et al. 1995, Piquet et al. 2007]
• Identifies read and write-only lines by allocating PC
  – Bypasses write-only lines
• Writebacks are not associated with any PC
[Figure: timeline; PC P: Rd A, Wb A, Wb A; PC Q: Wb A, Wb A, Wb A; labels: "Allocating PC from L1", "No allocating PC"]
Marks P as a PC that allocates a line that is never read again
Associates the allocating PC in L1 and passes PC in L2, LLC
High storage overhead

Single Core Performance
[Figure: speedup vs. baseline LRU (1.00 to 1.20) for DIP, RRIP, SUP+, RRP, RWP; storage labels: 48.4KB (RRP), 2.6KB (RWP)]
Differentiating read vs. write-only lines improves performance over recent mechanisms
RWP performs within 3.4% of RRP, but requires 18X less storage overhead

4 Core Performance
[Figure: speedup vs. baseline LRU (1.00 to 1.14) for DIP, RRIP, SUP+, RRP, RWP; workload groups: No Memory Intensive, 1 Memory Intensive, 2 Memory Intensive, 3 Memory Intensive, 4 Memory Intensive; annotations: +4.5%, +8%]
More benefit when more applications are memory intensive
Differentiating read vs. write-only lines improves performance over recent mechanisms

Average Memory Traffic
[Figure: percentage of memory traffic split into Miss and Writeback; Base: 85% miss, 15% writeback; RWP: 66% miss, 17% writeback]
Increases writeback traffic by 2.5%, but reduces overall memory traffic by 16%

Dirty Partition Sizes
[Figure: number of cachelines (0 to 20) for the SPEC CPU2006 benchmarks 400.perlbench through 483.xalancbmk; series: Natural Dirty Partition, Predicted Dirty Partition]
Partition size varies significantly for some benchmarks
Partition size varies significantly during the runtime for some benchmarks

Conclusion
• Problem: Cache management does not exploit read-write disparity
• Goal: Design a cache that favors read requests over write requests to improve performance
  – Lines that are only written to are less critical
  – Protect lines that serve read requests
• Key observation: Applications differ in their read reuse behavior in clean and dirty lines
• Idea: Read-Write Partitioning
  – Dynamically partition the cache into clean and dirty lines
  – Protect the partition that has more read hits
• Results: Improves performance over three recent mechanisms

Thank you

Extra Slides

Read Intensive
• Most accesses are reads, only a few writes
• 483.xalancbmk: Extensible stylesheet language transformations (XSLT) processor
[Figure: XML Input and XSLT Code feed the XSLT Processor, which produces HTML/XML/TXT Output]
• 92% of accesses are reads
• 99% of write accesses are stack operations

Read Intensive
[Figure: elementAt code (push %ebp; mov %esp, %ebp; push %esi; … ; pop %esi; pop %ebp; ret) next to a logical view of the stack holding %ebp and %esi; regions labeled Read and Written and Written Only; WRITEBACK arrows]
1.8% of lines in LLC are write-only, 0.38% of lines are read-written

Write Intensive
• Generates huge amount of intermediate data
• 456.hmmer: Searches a protein sequence database
[Figure: Hidden Markov Model of Multiple Sequence Alignments and Database produce Best Ranked Matches]
• Viterbi algorithm, uses dynamic programming
• Only 0.4% of writes are from stack operations

Write Intensive
[Figure: 2D Table; regions labeled Read and Written and Written Only; WRITEBACK arrows]
92% of lines in LLC are write-only, 2.68% of lines are read-written

Read-Write Intensive
• Iteratively reads and writes huge amount of data
• 450.soplex: Linear programming solver
[Figure: Inequalities and objective function as input; Minimum/maximum of objective function as output]
• Simplex algorithm, iterates over a matrix
• Different operations over the entire matrix at each iteration

Read-Write Intensive
[Figure: matrix with Pivot Column and Pivot Row; regions labeled Read and Written and Written Only; READ and WRITEBACK arrows]
19% of lines in LLC are write-only, 10% of lines are read-written

Read Reuse of Clean-Dirty Lines in LLC
[Figure: percentage of cachelines in LLC (0 to 120) for the SPEC CPU2006 benchmarks; series: Clean Non-Read, Dirty Non-Read, Clean Read, Dirty Read]
On average 37.4% of blocks are dirty non-read and 42% of blocks are clean non-read

Single Core Performance
[Figure: speedup vs. baseline LRU (1.00 to 1.07) for DIP, RRIP, SUP+, RRP, RWP; storage labels: 48.4KB (RRP), 2.6KB (RWP)]
5% speedup over the whole SPECCPU2006 benchmarks

Speedup
[Figure: speedup vs. baseline LRU (0.90 to 1.30) for DIP, RRIP, SUP+, RRP, RWP]
On average 14.6% speedup over baseline
5% speedup over the whole SPECCPU2006 benchmarks

Writeback Traffic to Memory
[Figure: writeback traffic normalized to LRU (0 to 1.4) for RRP and RWP]
Increases traffic by 17% over the whole SPECCPU2006 benchmarks

4-Core Speedup
[Figure: normalized weighted speedup (0.95 to 1.3) for RRP and RWP across workloads]

Change of IPC with Static Partitioning
[Figure: two panels, Xalancbmk and Soplex; x-axis: Number of Ways Assigned to Dirty Blocks (0 to 16); y-axis: IPC]
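
The partition-size prediction and the partition-maintaining replacement described in the Read-Write Partitioning and Predicting Partition Size slides can be illustrated with a small sketch. This is not the authors' implementation. It assumes one read-hit counter per LRU stack position for each of the clean and dirty shadow tag arrays (in the style of utility-based partitioning), uses plain LRU inside a partition where the slides name DIP, and evicts from the clean partition whenever the dirty partition is not over its predicted size. The names `predict_dirty_ways`, `choose_victim`, and `Line` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


def predict_dirty_ways(clean_hits: List[int], dirty_hits: List[int]) -> int:
    """Pick x, the number of ways to give to dirty lines.

    clean_hits[i] and dirty_hits[i] are read-hit counts observed at LRU
    stack position i (0 = MRU) in the clean and dirty shadow tags.
    Assumption: a partition with x dirty ways captures the read hits at
    the first x dirty stack positions and the first (assoc - x) clean ones.
    """
    assoc = len(clean_hits)
    assert len(dirty_hits) == assoc
    best_x, best_hits = 0, -1
    for x in range(assoc + 1):
        hits = sum(dirty_hits[:x]) + sum(clean_hits[:assoc - x])
        if hits > best_hits:  # ties keep the smaller dirty partition
            best_x, best_hits = x, hits
    return best_x


@dataclass
class Line:
    tag: int
    dirty: bool
    last_use: int  # larger value = more recently used


def choose_victim(lines: List[Line], dirty_ways: int) -> Line:
    """Choose a victim in a full set so it drifts toward the predicted split.

    Assumption: evict from the dirty partition when it holds more lines
    than predicted (or when there are no clean lines), otherwise evict
    from the clean partition. Plain LRU picks the victim inside the pool.
    """
    dirty = [l for l in lines if l.dirty]
    clean = [l for l in lines if not l.dirty]
    pool = dirty if (len(dirty) > dirty_ways or not clean) else clean
    return min(pool, key=lambda l: l.last_use)


# Example with a hypothetical 4-way set and made-up counter values.
x = predict_dirty_ways(clean_hits=[10, 5, 1, 0], dirty_hits=[8, 6, 4, 2])
print(x)  # 2

cache_set = [Line(1, True, 5), Line(2, True, 1), Line(3, True, 3), Line(4, False, 0)]
print(choose_victim(cache_set, x).tag)  # 2 (LRU line of the oversized dirty partition)
```

In this example the set holds three dirty lines but the predicted dirty partition is two ways, so the least recently used dirty line is evicted even though a clean line is older.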