ch11 (HMM).ppt

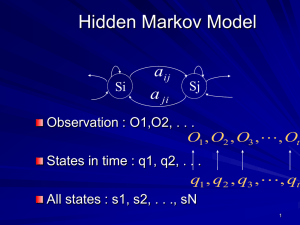

Hidden Markov Model

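[Figure: two states $S_i$ and $S_j$ linked by the transitions $a_{ij}$ (from $S_i$ to $S_j$) and $a_{ji}$ (from $S_j$ to $S_i$)]

Observations: $O_1, O_2, O_3, \ldots, O_t$

States in time: $q_1, q_2, q_3, \ldots, q_t$

All states: $s_1, s_2, \ldots$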

Hidden Markov Model (Cont’d)

Discrete Markov Model

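$$P(q_t = s_j \mid q_{t-1} = s_i, q_{t-2} = s_k, \ldots, q_1 = s_z) = P(q_t = s_j \mid q_{t-1} = s_i)$$

This is a first-order Markov model: the next state depends only on the current state.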

Hidden Markov Model (Cont’d)

$a_{ij}$: transition probability from $S_i$ to $S_j$, $1 \le i, j \le N$

$$a_{ij} = P(q_t = s_j \mid q_{t-1} = s_i)$$

Hidden Markov Model

Example

S1 : The weather is rainy

S2 : The weather is cloudy

S3 : The weather is sunny

Transition matrix $A = \{a_{ij}\}$ (row: current state, column: next state):

          rainy   cloudy   sunny
rainy     0.4     0.3      0.3
cloudy    0.2     0.6      0.2
sunny     0.1     0.1      0.8

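Hidden Markov Model Example (Cont’d)

Question 1: Given that the first day is sunny, what is the probability of the following sequence?

Sunny-Sunny-Sunny-Rainy-Rainy-Sunny-Cloudy-Cloudy

$$P(q_1 q_2 q_3 q_4 q_5 q_6 q_7 q_8 = s_3 s_3 s_3 s_1 s_1 s_3 s_2 s_2) = a_{33}\, a_{33}\, a_{31}\, a_{11}\, a_{13}\, a_{32}\, a_{22}$$

$$= 0.8 \cdot 0.8 \cdot 0.1 \cdot 0.4 \cdot 0.3 \cdot 0.1 \cdot 0.6 = 4.608 \times 10^{-4}$$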
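
The product of transition probabilities in Question 1 can be checked with a few lines of Python. This is a minimal sketch: the matrix is the one from the weather example, while the function name `sequence_probability` and the choice to take the first day as given (initial probability 1) are assumptions made for illustration.

```python
# Transition matrix A from the weather example.
# Index 0 = rainy, 1 = cloudy, 2 = sunny (row: current state, column: next state).
A = [
    [0.4, 0.3, 0.3],
    [0.2, 0.6, 0.2],
    [0.1, 0.1, 0.8],
]
RAINY, CLOUDY, SUNNY = 0, 1, 2


def sequence_probability(states, A, initial_prob=1.0):
    """Probability of a state sequence under a first-order Markov chain."""
    prob = initial_prob  # P(q1); assumed to be 1.0 because the first day is given
    for prev, cur in zip(states, states[1:]):
        prob *= A[prev][cur]  # multiply by a_{prev,cur}
    return prob


days = [SUNNY, SUNNY, SUNNY, RAINY, RAINY, SUNNY, CLOUDY, CLOUDY]
p = sequence_probability(days, A)
print(p)
```

Passing a value of $\pi_3$ as `initial_prob` would give the unconditional probability instead.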

Hidden Markov Model Example (Cont’d)

The probability of being in state $i$ at time $t = 1$:

$$\pi_i = P(q_1 = s_i), \quad 1 \le i \le N$$

Question 2: If we are in state $S_i$, what is the probability of staying in it for exactly $d$ days?

$$P(\underbrace{s_i s_i \cdots s_i}_{d \text{ days}}\, s_{j \ne i}) = a_{ii}^{d-1} (1 - a_{ii}) = P_i(d)$$
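
As a small numerical illustration of Question 2, the sketch below evaluates $P_i(d)$ for the sunny state of the weather example ($a_{33} = 0.8$). The function name `duration_probability` is hypothetical. The mean of this geometric distribution is $1 / (1 - a_{ii})$, which the truncated sum approximates.

```python
def duration_probability(a_ii, d):
    """P_i(d) = a_ii^(d-1) * (1 - a_ii): stay exactly d days, then leave."""
    return a_ii ** (d - 1) * (1.0 - a_ii)


a_sunny = 0.8  # a_33 from the weather example

# Probability of exactly three sunny days in a row before the weather changes.
p3 = duration_probability(a_sunny, 3)

# Truncated sum of d * P_i(d); it approaches the mean duration 1 / (1 - a_ii).
mean_duration = sum(d * duration_probability(a_sunny, d) for d in range(1, 1000))

print(p3, mean_duration)
```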

HMM Components

$N$: number of states

$M$: number of output symbols

$A$: state transition probability matrix

$B$: output (observation) probability distribution in each state

$\pi$: initial state probability distribution

$\lambda = (A, B, \pi)$: set of HMM parameters

Three Basic HMM Problems

1) Given an HMM $\lambda$ and a sequence of observations $O$, what is the probability $P(O \mid \lambda)$?

2) Given a model $\lambda$ and a sequence of observations $O$, what is the most likely state sequence in the model that produced the observations?

3) Given a model $\lambda$ and a sequence of observations $O$, how should we adjust the model parameters in order to maximize $P(O \mid \lambda)$?

First Problem Solution

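$$P(o \mid q, \lambda) = \prod_{t=1}^{T} P(o_t \mid q_t, \lambda) = \prod_{t=1}^{T} b_{q_t}(o_t)$$

$$P(q \mid \lambda) = \pi_{q_1} a_{q_1 q_2} a_{q_2 q_3} \cdots a_{q_{T-1} q_T}$$

We know that:

$$P(x, y) = P(x \mid y) P(y)$$

and

$$P(x, y \mid z) = P(x \mid y, z) P(y \mid z)$$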

First Problem Solution (Cont’d)

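$$P(o, q \mid \lambda) = P(o \mid q, \lambda) P(q \mid \lambda) = \pi_{q_1} b_{q_1}(o_1) a_{q_1 q_2} b_{q_2}(o_2) \cdots a_{q_{T-1} q_T} b_{q_T}(o_T)$$

$$P(o \mid \lambda) = \sum_{q} P(o, q \mid \lambda) = \sum_{q_1 q_2 \cdots q_T} \pi_{q_1} b_{q_1}(o_1) a_{q_1 q_2} b_{q_2}(o_2) \cdots a_{q_{T-1} q_T} b_{q_T}(o_T)$$

Computational complexity: $O(2T N^T)$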
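
The direct sum over all state sequences can be written out literally, which makes the exponential cost visible. The sketch below is only an illustration: the two-state, three-symbol model (`pi`, `A`, `B`) and the observation sequence `obs` are made-up values, not taken from the slides, and the function names are hypothetical.

```python
from itertools import product

# Hypothetical toy model (not from the slides): 2 states, 3 output symbols.
pi = [0.6, 0.4]                          # initial state probabilities
A = [[0.7, 0.3], [0.4, 0.6]]             # A[i][j] = a_ij
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]   # B[j][k] = b_j(k)
obs = [0, 1, 2]                          # observed symbol indices o_1..o_T


def joint_probability(q, obs, pi, A, B):
    """P(o, q | lambda) for one fixed state sequence q."""
    p = pi[q[0]] * B[q[0]][obs[0]]
    for t in range(1, len(obs)):
        p *= A[q[t - 1]][q[t]] * B[q[t]][obs[t]]
    return p


def likelihood_brute_force(obs, pi, A, B):
    """P(o | lambda) as a sum over all N^T state sequences."""
    n_states = len(pi)
    return sum(
        joint_probability(q, obs, pi, A, B)
        for q in product(range(n_states), repeat=len(obs))
    )


p_obs = likelihood_brute_force(obs, pi, A, B)
print(p_obs)
```

With $N$ states and $T$ observations the generator visits $N^T$ sequences, so this is only usable for very short sequences.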

Forward Backward Approach

$$\alpha_t(i) = P(o_1, o_2, \ldots, o_t, q_t = i \mid \lambda)$$

Computing $\alpha_t(i)$:

1) Initialization

$$\alpha_1(i) = \pi_i b_i(o_1), \quad 1 \le i \le N$$

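Forward Backward Approach (Cont’d)

2) Induction:

$$\alpha_{t+1}(j) = \left[ \sum_{i=1}^{N} \alpha_t(i) a_{ij} \right] b_j(o_{t+1}), \quad 1 \le t \le T-1, \; 1 \le j \le N$$

3) Termination:

$$P(o \mid \lambda) = \sum_{i=1}^{N} \alpha_T(i)$$

Computational complexity: $O(N^2 T)$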
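
The three steps of the forward procedure (initialization, induction, termination) can be sketched as follows. The toy model and observation sequence are made-up values for illustration, not from the slides, and the function name `forward` is an assumption. Time and state indices are 0-based in the code.

```python
# Hypothetical toy model (not from the slides): 2 states, 3 output symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
obs = [0, 1, 2]


def forward(obs, pi, A, B):
    """Return (alpha, P(o | lambda)) where alpha[t][i] is the forward variable."""
    n_states = len(pi)
    # 1) Initialization: alpha_1(i) = pi_i * b_i(o_1)
    alpha = [[pi[i] * B[i][obs[0]] for i in range(n_states)]]
    # 2) Induction: alpha_{t+1}(j) = [sum_i alpha_t(i) a_ij] * b_j(o_{t+1})
    for t in range(1, len(obs)):
        prev = alpha[-1]
        alpha.append([
            sum(prev[i] * A[i][j] for i in range(n_states)) * B[j][obs[t]]
            for j in range(n_states)
        ])
    # 3) Termination: P(o | lambda) = sum_i alpha_T(i)
    return alpha, sum(alpha[-1])


alpha, p_obs = forward(obs, pi, A, B)
print(p_obs)
```

Each time step costs on the order of $N^2$ multiplications, which is where the $O(N^2 T)$ figure comes from.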

Backward Variable

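$$\beta_t(i) = P(o_{t+1}, o_{t+2}, \ldots, o_T \mid q_t = i, \lambda)$$

1) Initialization

$$\beta_T(i) = 1, \quad 1 \le i \le N$$

2) Induction

$$\beta_t(i) = \sum_{j=1}^{N} a_{ij} b_j(o_{t+1}) \beta_{t+1}(j), \quad t = T-1, T-2, \ldots, 1 \text{ and } 1 \le i \le N$$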
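
A matching sketch of the backward recursion is given below, under the same assumptions as before: the toy model is invented for illustration and the function name `backward` is hypothetical. As a consistency check, $P(o \mid \lambda)$ can also be obtained from the backward variable as $\sum_i \pi_i b_i(o_1) \beta_1(i)$.

```python
# Hypothetical toy model (not from the slides): 2 states, 3 output symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
obs = [0, 1, 2]


def backward(obs, A, B):
    """Return beta where beta[t][i] = P(o_{t+1..T} | q_t = i, lambda)."""
    n_states, T = len(A), len(obs)
    # 1) Initialization: beta_T(i) = 1
    beta = [[1.0] * n_states for _ in range(T)]
    # 2) Induction, running backwards in time
    for t in range(T - 2, -1, -1):
        for i in range(n_states):
            beta[t][i] = sum(
                A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j]
                for j in range(n_states)
            )
    return beta


beta = backward(obs, A, B)
# P(o | lambda) recovered from the backward variable at the first time step.
p_obs = sum(pi[i] * B[i][obs[0]] * beta[0][i] for i in range(len(pi)))
print(p_obs)
```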

Second Problem Solution

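Finding the most likely state sequence

$$\gamma_t(i) = P(q_t = i \mid o, \lambda) = \frac{P(o, q_t = i \mid \lambda)}{P(o \mid \lambda)} = \frac{P(o, q_t = i \mid \lambda)}{\sum_{i=1}^{N} P(o, q_t = i \mid \lambda)} = \frac{\alpha_t(i) \beta_t(i)}{\sum_{i=1}^{N} \alpha_t(i) \beta_t(i)}$$

Individually most likely state:

$$q_t^{*} = \arg\max_{1 \le i \le N} [\gamma_t(i)], \quad 1 \le t \le T$$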
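
The posterior $\gamma_t(i)$ and the individually most likely states follow directly from the forward and backward variables. The sketch below repeats compact versions of both recursions so that it runs on its own. The toy model is a made-up example, and all function and variable names are assumptions.

```python
# Hypothetical toy model (not from the slides): 2 states, 3 output symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
obs = [0, 1, 2]
N, T = len(pi), len(obs)


def forward(obs, pi, A, B):
    alpha = [[pi[i] * B[i][obs[0]] for i in range(N)]]
    for t in range(1, T):
        alpha.append([
            sum(alpha[t - 1][i] * A[i][j] for i in range(N)) * B[j][obs[t]]
            for j in range(N)
        ])
    return alpha


def backward(obs, A, B):
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(
                A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N)
            )
    return beta


alpha, beta = forward(obs, pi, A, B), backward(obs, A, B)

gamma = []
for a_t, b_t in zip(alpha, beta):
    joint = [a * b for a, b in zip(a_t, b_t)]  # P(o, q_t = i | lambda)
    norm = sum(joint)                          # equals P(o | lambda) at every t
    gamma.append([x / norm for x in joint])

# Individually most likely state at each time step.
best_states = [max(range(N), key=g.__getitem__) for g in gamma]
print(best_states)
```

Choosing each state separately maximizes the expected number of correct states, but the resulting sequence is not guaranteed to be a valid path if some transitions have zero probability.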

Viterbi Algorithm

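Define:

$$\delta_t(i) = \max_{q_1, q_2, \ldots, q_{t-1}} P[q_1, q_2, \ldots, q_{t-1}, q_t = i, o_1, o_2, \ldots, o_t \mid \lambda], \quad 1 \le i \le N$$

$\delta_t(i)$ is the probability of the most likely state sequence that ends in state $i$ at time $t$ and accounts for the observations $o_1, \ldots, o_t$.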

Viterbi Algorithm (Cont’d)

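$$\delta_{t+1}(j) = \left[ \max_{i} \delta_t(i) a_{ij} \right] \cdot b_j(o_{t+1})$$

1) Initialization

$$\delta_1(i) = \pi_i b_i(o_1), \quad 1 \le i \le N$$

$$\psi_1(i) = 0$$

$\psi_t(i)$ is the most likely state at time $t-1$ given that the path is in state $i$ at time $t$.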

Viterbi Algorithm (Cont’d)

2) Recursion

$$\delta_t(j) = \max_{1 \le i \le N} [\delta_{t-1}(i) a_{ij}]\, b_j(o_t)$$

$$\psi_t(j) = \arg\max_{1 \le i \le N} [\delta_{t-1}(i) a_{ij}]$$

$$2 \le t \le T, \quad 1 \le j \le N$$

Viterbi Algorithm (Cont’d)

3) Termination:

$$p^{*} = \max_{1 \le i \le N} [\delta_T(i)]$$

$$q_T^{*} = \arg\max_{1 \le i \le N} [\delta_T(i)]$$

4) Backtracking:

$$q_t^{*} = \psi_{t+1}(q_{t+1}^{*}), \quad t = T-1, T-2, \ldots, 1$$
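
The four Viterbi steps (initialization, recursion, termination, backtracking) can be sketched in a single function. The toy model below is a made-up example rather than anything from the slides, and the function name `viterbi` is an assumption. Indices are 0-based in the code.

```python
# Hypothetical toy model (not from the slides): 2 states, 3 output symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
obs = [0, 1, 2]


def viterbi(obs, pi, A, B):
    """Return (most likely state path, its joint probability p*)."""
    n_states, T = len(pi), len(obs)
    # 1) Initialization: delta_1(i) = pi_i * b_i(o_1), psi_1(i) = 0
    delta = [[pi[i] * B[i][obs[0]] for i in range(n_states)]]
    psi = [[0] * n_states]
    # 2) Recursion
    for t in range(1, T):
        delta_t, psi_t = [], []
        for j in range(n_states):
            best_i = max(range(n_states), key=lambda i: delta[t - 1][i] * A[i][j])
            psi_t.append(best_i)
            delta_t.append(delta[t - 1][best_i] * A[best_i][j] * B[j][obs[t]])
        delta.append(delta_t)
        psi.append(psi_t)
    # 3) Termination
    q_last = max(range(n_states), key=lambda i: delta[-1][i])
    p_star = delta[-1][q_last]
    # 4) Backtracking: q_t* = psi_{t+1}(q_{t+1}*)
    path = [q_last]
    for t in range(T - 1, 0, -1):
        path.append(psi[t][path[-1]])
    path.reverse()
    return path, p_star


path, p_star = viterbi(obs, pi, A, B)
print(path, p_star)
```

For long sequences the products underflow, so practical implementations usually work with log probabilities and replace the products by sums.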

Third Problem Solution

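Parameter estimation using the Baum-Welch or Expectation Maximization (EM) approach

Define:

$$\xi_t(i, j) = P(q_t = i, q_{t+1} = j \mid o, \lambda) = \frac{P(o, q_t = i, q_{t+1} = j \mid \lambda)}{P(o \mid \lambda)}$$

$$= \frac{\alpha_t(i) a_{ij} b_j(o_{t+1}) \beta_{t+1}(j)}{\sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_t(i) a_{ij} b_j(o_{t+1}) \beta_{t+1}(j)}$$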

Third Problem Solution (Cont’d)

$$\gamma_t(i) = \sum_{j=1}^{N} \xi_t(i, j)$$

$\sum_{t=1}^{T-1} \gamma_t(i)$: expected number of transitions out of state $i$

$\sum_{t=1}^{T-1} \xi_t(i, j)$: expected number of transitions from state $i$ to state $j$

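Third Problem Solution (Cont’d)

$$\bar{\pi}_i = \gamma_1(i)$$

$$\bar{a}_{ij} = \frac{\sum_{t=1}^{T-1} \xi_t(i, j)}{\sum_{t=1}^{T-1} \gamma_t(i)}$$

$$\bar{b}_j(k) = \frac{\sum_{t=1,\; o_t = V_k}^{T} \gamma_t(j)}{\sum_{t=1}^{T} \gamma_t(j)}$$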
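
One pass of the re-estimation formulas can be sketched as below. This is a minimal single-sequence illustration without scaling: the toy model, the observation sequence and all function names are assumptions, and the sums for $\bar{a}_{ij}$ run over $t = 1, \ldots, T-1$ because $\xi_t$ needs $o_{t+1}$.

```python
def forward(obs, pi, A, B):
    N = len(pi)
    alpha = [[pi[i] * B[i][obs[0]] for i in range(N)]]
    for t in range(1, len(obs)):
        alpha.append([sum(alpha[t - 1][i] * A[i][j] for i in range(N)) * B[j][obs[t]]
                      for j in range(N)])
    return alpha


def backward(obs, A, B):
    N, T = len(A), len(obs)
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N))
    return beta


def baum_welch_step(obs, pi, A, B):
    """One re-estimation pass; returns (new_pi, new_A, new_B, P(o | old model))."""
    N, M, T = len(pi), len(B[0]), len(obs)
    alpha, beta = forward(obs, pi, A, B), backward(obs, A, B)
    p_obs = sum(alpha[-1])
    # xi[t][i][j] = P(q_t = i, q_{t+1} = j | o, lambda), defined for t = 0..T-2
    xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / p_obs
            for j in range(N)] for i in range(N)] for t in range(T - 1)]
    # gamma[t][i] = P(q_t = i | o, lambda)
    gamma = [[alpha[t][i] * beta[t][i] / p_obs for i in range(N)] for t in range(T)]
    new_pi = gamma[0][:]
    new_A = [[sum(xi[t][i][j] for t in range(T - 1)) /
              sum(gamma[t][i] for t in range(T - 1))
              for j in range(N)] for i in range(N)]
    new_B = [[sum(gamma[t][j] for t in range(T) if obs[t] == k) /
              sum(gamma[t][j] for t in range(T))
              for k in range(M)] for j in range(N)]
    return new_pi, new_A, new_B, p_obs


# Hypothetical starting model and data (not from the slides).
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
obs = [0, 1, 2, 2, 1, 0]

new_pi, new_A, new_B, p_old = baum_welch_step(obs, pi, A, B)
p_new = sum(forward(obs, new_pi, new_A, new_B)[-1])
print(p_old, p_new)
```

Repeating the step until the likelihood stops improving gives the usual training loop. Real implementations typically add scaling or log-space arithmetic and pool statistics over many sequences.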

Baum Auxiliary Function

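$$Q(\lambda \mid \lambda') = \sum_{q} P(o, q \mid \lambda') \log P(o, q \mid \lambda)$$

$$\text{if } Q(\lambda \mid \lambda') \ge Q(\lambda' \mid \lambda') \text{ then } P(o \mid \lambda) \ge P(o \mid \lambda')$$

Iterating this re-estimation converges to a local optimum.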

Constraints on the Reestimation Formulas

$$\sum_{i=1}^{N} \pi_i = 1$$

$$\sum_{j=1}^{N} a_{ij} = 1, \quad 1 \le i \le N$$

$$\sum_{k=1}^{M} b_j(k) = 1, \quad 1 \le j \le N$$

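Continuous Observation Density

Instead of the discrete probabilities

$$b_j(k) = P(o_t = V_k \mid q_t = j)$$

we have the values of a probability density function (PDF):

$$b_j(o) = \sum_{k=1}^{M} C_{jk}\, \mathcal{N}(o, \mu_{jk}, \Sigma_{jk}), \quad \int b_j(o)\, do = 1$$

$C_{jk}$: mixture coefficients

$\mu_{jk}$: mean (average)

$\Sigma_{jk}$: variance (covariance)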
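
For a scalar observation the mixture density $b_j(o)$ reduces to a weighted sum of one-dimensional Gaussians. The sketch below illustrates this for a single state. The weights, means and variances are invented values and the function names are hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """One-dimensional normal density N(x; mean, var)."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def mixture_density(x, weights, means, variances):
    """b_j(o) = sum_k C_jk * N(o; mu_jk, sigma_jk^2) for one state j."""
    return sum(
        c * gaussian_pdf(x, m, v) for c, m, v in zip(weights, means, variances)
    )


# Hypothetical parameters of one state with M = 2 mixture components.
weights = [0.3, 0.7]      # C_j1, C_j2 (must sum to 1)
means = [-1.0, 2.0]       # mu_j1, mu_j2
variances = [1.0, 0.5]    # sigma_j1^2, sigma_j2^2

print(mixture_density(0.0, weights, means, variances))
```

Because the weights sum to one and each component integrates to one, the mixture integrates to one as well, which is the normalization condition stated above.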

Continuous Observation Density

Mixture in HMM

[Figure: three states $S_1$, $S_2$, $S_3$; each state $j$ has four mixture components $M_{1|j}$, $M_{2|j}$, $M_{3|j}$, $M_{4|j}$]

Dominant mixture:

$$b_j(o) = \max_{k}\, C_{jk}\, \mathcal{N}(o, \mu_{jk}, \Sigma_{jk})$$

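Continuous Observation Density (Cont’d)

Model parameters:

$$\lambda = (A, \pi, C, \mu, \Sigma)$$

$A$: $N \times N$

$\pi$: $1 \times N$

$C$: $N \times M$

$\mu$: $N \times M \times K$

$\Sigma$: $N \times M \times K \times K$

$N$: number of states

$M$: number of mixtures in each state

$K$: dimension of the observation vector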

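Continuous Observation Density (Cont’d)

$$\bar{C}_{jk} = \frac{\sum_{t=1}^{T} \gamma_t(j, k)}{\sum_{t=1}^{T} \sum_{k=1}^{M} \gamma_t(j, k)}$$

$$\bar{\mu}_{jk} = \frac{\sum_{t=1}^{T} \gamma_t(j, k)\, o_t}{\sum_{t=1}^{T} \gamma_t(j, k)}$$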

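Continuous Observation Density (Cont’d)

$$\bar{\Sigma}_{jk} = \frac{\sum_{t=1}^{T} \gamma_t(j, k)\, (o_t - \mu_{jk})(o_t - \mu_{jk})^{\top}}{\sum_{t=1}^{T} \gamma_t(j, k)}$$

$\gamma_t(j, k)$: probability of being in state $j$ with the $k$-th mixture component at time $t$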
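
Given the weights $\gamma_t(j, k)$, the mixture re-estimation formulas are weighted averages. The sketch below shows them for scalar observations and a single state $j$. The observations and weights are invented numbers, the function names are hypothetical, and the variance is computed around the re-estimated mean, which is an assumption about how $\mu_{jk}$ is meant in the covariance formula.

```python
def reestimate_component(obs, resp):
    """Weighted mean and variance for one (state, mixture) pair.

    obs  : scalar observations o_1..o_T
    resp : gamma_t(j, k) for the same time steps
    """
    total = sum(resp)
    mean = sum(g * o for g, o in zip(resp, obs)) / total
    # Assumption: the variance is taken around the re-estimated mean.
    var = sum(g * (o - mean) ** 2 for g, o in zip(resp, obs)) / total
    return mean, var


def reestimate_weights(resp_by_component):
    """C_jk = sum_t gamma_t(j, k) / sum_t sum_k gamma_t(j, k) for one state j."""
    totals = [sum(resp) for resp in resp_by_component]
    grand_total = sum(totals)
    return [s / grand_total for s in totals]


# Hypothetical data for one state with two mixture components.
obs = [1.0, 2.0, 4.0]
resp_k1 = [0.5, 0.25, 0.25]   # gamma_t(j, 1)
resp_k2 = [0.5, 0.75, 0.75]   # gamma_t(j, 2)

mean_k1, var_k1 = reestimate_component(obs, resp_k1)
weights = reestimate_weights([resp_k1, resp_k2])
print(mean_k1, var_k1, weights)
```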

State Duration Modeling

[Figure: two states $S_i$ and $S_j$ linked by the transitions $a_{ij}$ and $a_{ji}$]

Probability of staying exactly $d$ time steps in state $i$:

$$P_i(d) = a_{ii}^{d-1} (1 - a_{ii})$$

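State Duration Modeling (Cont’d)

HMM with explicit state duration

[Figure: states $S_i$ and $S_j$ with explicit duration densities $P_i(d)$ and $P_j(d)$, linked by the transitions $a_{ij}$ and $a_{ji}$]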

State Duration Modeling (Cont’d)

Generating observations from an HMM with explicit state durations:

– Select the initial state $q_1 = i$ using the initial probabilities $\pi_i$.

– Select a duration $d_1$ using $P_{q_1}(d)$.

– Select the observation sequence $O_1, O_2, \ldots, O_{d_1}$ using $b_{q_1}(O_1, O_2, \ldots, O_{d_1})$. In practice we assume the following independence:

$$b_{q_1}(O_1, O_2, \ldots, O_{d_1}) = \prod_{t=1}^{d_1} b_{q_1}(O_t)$$

– Select the next state $q_2 = j$ using the transition probabilities $a_{q_1 q_2}$. We also have an additional constraint:

$$a_{q_1 q_1} = 0$$
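
The generation procedure for an explicit-duration HMM can be sketched as a short sampler. Everything concrete here is an assumption made for illustration: the two-state model, the duration tables, the function name `sample_duration_hmm` and the use of a seeded `random.Random` instance. Self-transitions are set to zero, as the constraint above requires.

```python
import random


def sample_duration_hmm(pi, A, B, durations, total_len, rng):
    """Draw (states, observations) of length total_len.

    durations[i] maps a duration d to P_i(d) for state i.
    """
    states, obs = [], []
    # Select the initial state using pi.
    q = rng.choices(range(len(pi)), weights=pi)[0]
    while len(obs) < total_len:
        # Select how long to stay in state q using P_q(d).
        choices = list(durations[q])
        d = rng.choices(choices, weights=[durations[q][x] for x in choices])[0]
        # Emit d observations, assumed independent given the state.
        for _ in range(d):
            states.append(q)
            obs.append(rng.choices(range(len(B[q])), weights=B[q])[0])
        # Select the next state; A[q][q] == 0 forces a change of state.
        q = rng.choices(range(len(A)), weights=A[q])[0]
    return states[:total_len], obs[:total_len]


# Hypothetical model (not from the slides).
pi = [0.5, 0.5]
A = [[0.0, 1.0], [1.0, 0.0]]             # no self-transitions
B = [[0.9, 0.1], [0.2, 0.8]]
durations = [{2: 0.5, 3: 0.5}, {1: 1.0}]  # P_0(d) and P_1(d)

states, obs = sample_duration_hmm(pi, A, B, durations, 20, random.Random(0))
print(states)
print(obs)
```

In this example state 1 always lasts exactly one step, so the sampled state sequence never contains two consecutive 1s.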

Training In HMM

Maximum Likelihood (ML)

Maximum Mutual Information (MMI)

Minimum Discrimination Information (MDI)

Training In HMM

Maximum Likelihood (ML)

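[Figure: the observation sequence $O$ is scored against each model, giving $P(o \mid \lambda_1), P(o \mid \lambda_2), P(o \mid \lambda_3), \ldots, P(o \mid \lambda_n)$, and the maximum is selected]

$$P^{*} = \max_{r} \left[ P(O \mid \lambda_{V_r}) \right]$$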
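
Selecting the model with the highest likelihood for a given observation sequence can be sketched as follows. The two candidate models, their names `model_a` and `model_b`, and the observation sequence are invented for illustration. The likelihood is computed with a compact forward recursion.

```python
def likelihood(obs, model):
    """P(o | lambda) via the forward recursion; model = (pi, A, B)."""
    pi, A, B = model
    n_states = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n_states)]
    for o in obs[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n_states)) * B[j][o]
            for j in range(n_states)
        ]
    return sum(alpha)


# Hypothetical candidate models (not from the slides).
models = {
    "model_a": ([0.6, 0.4],
                [[0.7, 0.3], [0.4, 0.6]],
                [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]),
    "model_b": ([0.5, 0.5],
                [[0.5, 0.5], [0.5, 0.5]],
                [[0.1, 0.3, 0.6], [0.2, 0.3, 0.5]]),
}
obs = [0, 1, 2]

scores = {name: likelihood(obs, model) for name, model in models.items()}
best_name = max(scores, key=scores.get)
print(scores, best_name)
```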

Training In HMM (Cont’d)

Maximum Mutual Information (MMI)

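Mutual information:

$$I(O^{v}, \lambda_v) = \log \frac{P(O^{v}, \lambda_v)}{P(O^{v})\, P(\lambda_v)}, \quad \lambda = \{\lambda_v\}$$

$$I(O^{v}, \lambda_v) = \log P(O^{v} \mid \lambda_v) - \log \sum_{w=1}^{V} P(O^{v} \mid \lambda_w, w)\, P(w)$$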

Training In HMM (Cont’d)

Minimum Discrimination Information (MDI)

Observation: $O = (O_1, O_2, \ldots, O_T)$

Autocorrelation: $R = (R_1, R_2, \ldots, R_t)$

$$\nu(R, P_\lambda) = \inf_{Q \in \Omega(R)} I(Q : P_\lambda)$$

$$I(Q : P_\lambda) = \int q(o) \log \frac{q(o)}{P(o \mid \lambda)}\, do$$