Computer Science report 2013-14


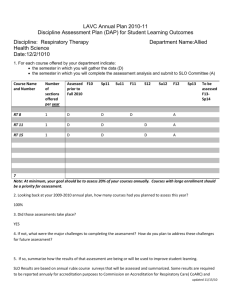

2013-2014 Annual Program Assessment Report (7/21/14)

Please submit report to your department chair or program coordinator, the Associate Dean of your College and the assessment office by Tuesday, September 30, 2014. You may submit a separate report for each program which conducted assessment activities.

College: Engineering and Computer Science
Department: Computer Science
Program: B.S. in Computer Science
Assessment liaison: Diane Schwartz

1. Overview of Annual Assessment Project(s). Provide a brief overview of this year's assessment plan and process.

Based on our ABET program review in Fall 2013, the Computer Science Department significantly revised its assessment methodology and developed a 5-year assessment plan. We conducted a pilot assessment of two of our eleven SLOs (SLO a.1 and SLO c) using the new methodology. We also conducted our 2014 Senior Exit Survey.

2. Assessment Buy-In. Describe how your chair and faculty were involved in assessment related activities. Did department meetings include discussion of student learning assessment in a manner that included the department faculty as a whole?

The new assessment methodology and 5-year plan were developed and discussed in Department meetings and in meetings with the Department Assessment Committee. The Department chair attends every department meeting and so took part in the design and development of our new process. The pilot assessment of two of our SLOs was done by the Assessment Liaison.

3. [SLO a.1] Student Learning Outcome Assessment Project. Answer items a-f for each SLO assessed this year. If you assessed an additional SLO, copy and paste items a-f below, BEFORE you answer them here, to provide additional reporting space.

3a. Which Student Learning Outcome was measured this year?

SLO a.1: Apply knowledge of computing appropriate to the discipline.

3b. Does this learning outcome align with one or more of the university's Big 5 Competencies?
(Delete any which do not apply)

This learning outcome aligns indirectly with Critical Thinking.

3c. What direct and/or indirect instrument(s) were used to measure this SLO?

Questions on the midterms and final in Comp 333 (Spring 2014) were used to assess SLO a.1. This was a pilot assessment using our new Assessment plan. Beginning Fall 2014, a set of core computer science major courses will be used to assess each student learning outcome in the program.

3d. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross-sectional comparison used (comparing freshmen with seniors)? If so, describe the assessment points used.

All assessments will use a cross-sectional comparison model. We will assess each of the eleven program SLOs in a set of lower division and upper division core courses over a 5-year period. This will allow us to compare how well students are achieving the student outcomes from freshman to senior year.

3e. Assessment Results & Analysis of this SLO: Provide a summary of how the results were analyzed and highlight findings from the collected evidence.

Student scores on the assessment questions for SLO a.1 were normalized to a 0–10 scale and aggregated to compute the mean score and to build a histogram showing the distribution of student achievement on this student learning outcome. The mean score on the 0–10 scale was 6.5. The distribution of scores was 0–2.0 (9%); 2.1–4.0 (13%); 4.1–6.0 (13%); 6.1–8.0 (35%); 8.1–10 (30%).

3f. Use of Assessment Results of this SLO: Describe how assessment results were used to improve student learning. Were assessment results from previous years or from this year used to make program changes in this reporting year?
(Possible changes include: changes to course content/topics covered, changes to course sequence, additions/deletions of courses in program, changes in pedagogy, changes to student advisement, changes to student support services, revisions to program SLOs, new or revised assessment instruments, other academic programmatic changes, and changes to the assessment plan.)

Since this was a small pilot assessment completed in May 2014, the assessment results have not been used to make program changes.

[SLO c] Student Learning Outcome Assessment Project. Answer items a-f for each SLO assessed this year. If you assessed an additional SLO, copy and paste items a-f below, BEFORE you answer them here, to provide additional reporting space.

3a. Which Student Learning Outcome was measured this year?

SLO c: Apply knowledge of programming concepts, algorithmic principles, and data abstraction to design, implement, and evaluate the software necessary to solve a specified problem.

3b. Does this learning outcome align with one or more of the university's Big 5 Competencies? (Delete any which do not apply)

This learning outcome aligns indirectly with Critical Thinking.

3c. What direct and/or indirect instrument(s) were used to measure this SLO?

Questions on the midterms and final in Comp 333 (Spring 2014) were used to assess SLO c. This was a pilot assessment using our new Assessment plan. Beginning Fall 2014, a set of core computer science major courses will be used to assess each student learning outcome in the program.

3d. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross-sectional comparison used (comparing freshmen with seniors)? If so, describe the assessment points used.

All assessments will use a cross-sectional comparison model. We will assess each of the eleven program SLOs in a set of lower division and upper division core courses over a 5-year period.
This will allow us to compare how well students are achieving the student outcomes from freshman to senior year.

3e. Assessment Results & Analysis of this SLO: Provide a summary of how the results were analyzed and highlight findings from the collected evidence.

Student scores on the assessment questions for SLO c were normalized to a 0–10 scale and aggregated to compute the mean score and to build a histogram showing the distribution of student achievement on this student learning outcome. The mean score on the 0–10 scale was 6.5. The distribution of scores was 0–2.0 (9%); 2.1–4.0 (9%); 4.1–6.0 (17%); 6.1–8.0 (30%); 8.1–10 (35%).

3f. Use of Assessment Results of this SLO: Describe how assessment results were used to improve student learning. Were assessment results from previous years or from this year used to make program changes in this reporting year? (Possible changes include: changes to course content/topics covered, changes to course sequence, additions/deletions of courses in program, changes in pedagogy, changes to student advisement, changes to student support services, revisions to program SLOs, new or revised assessment instruments, other academic programmatic changes, and changes to the assessment plan.)

Since this was a small pilot assessment completed in May 2014, the assessment results have not been used to make program changes.

4. Assessment of Previous Changes: Present documentation that demonstrates how the previous changes in the program resulted in improved student learning.

The most significant program change that we have made in the last five years was the addition of a required year-long senior design project to the major. The new course sequence is COMP 490/490L/491. We gave a software engineering assessment test to the Comp 490 students. They did a little better than the students who took the test before the new requirement. They also did better than the students in the prerequisite software engineering class.
We will not have more definitive and significant results on improved student learning until we phase in our new assessment plan. The improvement in software engineering assessment test scores since the senior design project requirement began is documentable, but we also believe that the senior design project is extremely important for our students for many other reasons. The employers of our graduates are very excited about and supportive of the new senior design requirement. Students who complete a year-long senior design project are better prepared to enter the workforce directly after graduation. They will have already grappled with the software requirements, professional development, teamwork, and communication issues that are prevalent in industry. They are also better able to interview for positions in the software industry.

5. Changes to SLOs? Please attach an updated course alignment matrix if any changes were made. (Refer to the Curriculum Alignment Matrix Template, http://www.csun.edu/assessment/forms_guides.html.)

See the attached list of student learning outcomes assessed in each of the required major courses. This table shows the major alignment of the program student learning outcomes with the core courses.

6. Assessment Plan: Evaluate the effectiveness of your 5 year assessment plan. How well did it inform and guide your assessment work this academic year? What process is used to develop/update the 5 year assessment plan? Please attach an updated 5 year assessment plan for 2013-2018. (Refer to Five Year Planning Template, plan B or C, http://www.csun.edu/assessment/forms_guides.html.)

Our previous 5-year assessment plan served as a good template for our assessment activities, although we had some difficulty maintaining the planned pace of assessment activities. Our ABET program review in Fall 2013 revealed some ABET concerns about our assessment process.
ABET preferred an assessment process that (1) assessed SLOs in courses throughout the curriculum (freshman through senior year courses) and (2) used direct assessments of student course work. Before the ABET program review, we assessed SLOs primarily at the senior course level using course-independent direct assessment tests and some indirect measurements. Our new ABET plan conforms to these ABET preferences.

7. Has someone in your program completed, submitted or published a manuscript which uses or describes assessment activities in your program? Please provide citation or discuss.

None that I know of.

8. Other information, assessment or reflective activities or processes not captured above.

Course Alignment Matrix: Student Learning Outcomes Assessed in Required Courses

Required Computer Science Courses: Comp 122, Comp 182/L, Comp 222, Comp 256/L, Comp 282, Comp 310, Comp 322/L, Comp 333, Comp 380/L, Comp 490/L, Comp 491

Student Learning Outcomes: a b a.2 a.1 b a.1 a.2 b a.2 b b c d e f e f.2 d c k k g h d e d j j f.1 c c c c c b i i b a.1 h h d a.1 g f.2 f.1 f.2 g g i k i i i k k h i j k

List of Student Outcomes

Computer Science Program Student Outcomes
(a) Apply knowledge of computing and mathematics appropriate to the discipline.
    a.1 Computing appropriate to the discipline
    a.2 Mathematics appropriate to the discipline
(b) Analyze a problem, and specify the computing requirements appropriate to meet desired need.
(c) Apply knowledge of programming concepts, algorithmic principles, and data abstraction to design, implement, and evaluate the software necessary to solve a specified problem.
(d) Function effectively on teams to accomplish a common goal.
(e) Understand professional, ethical, legal, security, and social issues and responsibilities.
(f) Communicate effectively with a range of audiences.
    f.1 Written communication
    f.2 Oral communication
(g) Analyze the local and global impact of computing on individuals, organizations, and society.
(h) Recognize the need for and demonstrate an ability to engage in continuing professional development.
(i) Use current techniques, skills, and software development tools necessary for programming practice.
(j) Model and design computer-based systems in a way that demonstrates comprehension of the tradeoffs involved in design choices.
(k) Apply software engineering principles and practices in the construction of complex software systems.