Overview of the AQIP Examiner Feedback Report.

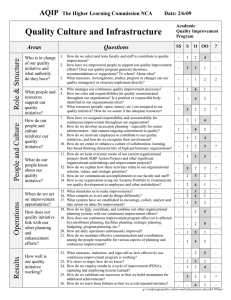

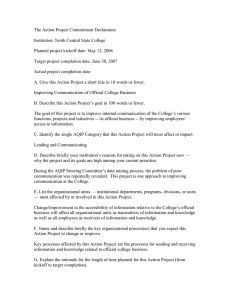

AQIP Examiner Feedback Report: Implications for AQIP Data Mining Project Areas

A Report to the AQIP Steering Committee by Kate Peresie

Overview of Examiner Feedback Report

Respondents: Fall ’04

| Employee group | Employees reported for AQIP Examiner | AQIP Examiner respondents |
|---|---|---|
| Administrators | 28 | 23 |
| Faculty | 207 | 64 |
| Staff | 109 | 47 Professional Staff, 30 Support Staff |
| Others | 0 | 2 |
| Full-time employees | 193 | 136 |
| Part-time employees | 151 | 30 |
| Total employees (includes employees from Shared Services) | 344 | 167 |

| Longevity | Employees (as reported by HR, June ’05) | Respondents |
|---|---|---|
| 3 or fewer years | 64 | 43 |
| 4–6 years | 41 | 45 |
| 7–9 years | 21 | 7 |
| 10 or more years | 77 | 71 |

| Gender | Respondents |
|---|---|
| Male | 70 |
| Female | 96 |

Because there were only 2 respondents in “Other,” this category was ignored for this report.

Ratings: Ratings are given as means with standard deviations (in parentheses) on a 5-to-1 scale.

Organizational Perspective: The “big picture”: knowledge of mission/vision/philosophy, the competitive environment, and goals. Support Staff gave the lowest ratings in all 8 areas (p. 9). For all employees, short-range goals are the least understood (p. 9).

Effective Processes: 14 statements on the characteristics of effective processes. The greatest difference in importance ratings was for the statement “is designed to achieve its goals with no unnecessary steps”: Administration 4.39 (0.65), Support Staff 3.77 (0.91), a difference of means of 0.62 (p. 13).

Criteria: Items about the effectiveness of processes related to each of the 9 AQIP Criteria. “Criteria scores offer a systems profile of your institution.” The most effective are Building Collaborative Relationships 3.09 (1.14), Helping Students Learn 2.74 (1.12), and Supporting Institutional Operations 2.74 (1.12) (p. 15).
The least effective are Valuing People 2.19 (1.14), Leading and Communicating 2.21 (1.11), and Planning Continuous Improvement 2.25 (1.13) (p. 15). The greatest difference in rating was for Supporting Institutional Operations: Administration 3.07 (1.04), Support Staff 2.44 (1.04), a difference of means of 0.63 (pp. 16-17). Employees of 7–9 years gave the lowest ratings for 8 of the 9 Criteria (p. 17).

Best and worst processes within each criterion:

| Criterion | Best process, mean (SD) | Worst process, mean (SD) |
|---|---|---|
| 1: Helping Students Learn (p. 19) | Placing students in courses for which they are appropriately prepared. 3.05 (1.21) | Defining good teaching. 2.49 (1.03) |
| 2: Accomplishing Other Distinctive Objectives (p. 21) | Aligning our distinctive strategic initiatives with our institutional mission, vision, and philosophy. 2.47 (1.05) | Agreeing on and regularly analyzing a set of measures of our other strategic initiatives. 2.10 (1.02) |
| 3: Understanding Students’ and Other Stakeholders’ Needs (pp. 22-23) | Identifying which groups to serve. 2.90 (1.04) | Systematically collecting and analyzing the complaints we receive in order to improve. 2.43 (1.08) |
| 4: Valuing People | Hiring people who share our mission, vision and philosophy. 2.89 (1.10) | Addressing faculty, staff and administrator job satisfaction and morale. 1.82 (1.08) |
| 5: Leading and Communicating (p. 26) | Creating opportunities for faculty and staff to learn and practice leadership skills. 2.46 (1.10) | Measuring how well our systems for leading and communicating are working. 1.87 (0.98) |
| 6: Supporting Institutional Operations (pp. 27-28) | Identifying the needs of students for support services. 3.22 (1.06) | Regularly evaluating how well our student and administrative support services work. 2.31 (1.02) |
| 7: Measuring Effectiveness (p. 29) | Collecting, storing, and distributing data and information to those who need it. 2.61 (1.16) | Analyzing performance data and sharing results throughout the institution. 2.23 (1.05); Measuring and evaluating how well our data collection, storage, and distribution system works. 2.23 (1.04) |
| 8: Planning Continuous Improvement (pp. 30-31) | Developing strategies that deal with institutional challenges and opportunities. 2.41 (1.09) | Evaluating our systems for planning. 2.09 (1.10) |
| 9: Building Collaborative Relationships (p. 32) | Building collaborative relationships with other educational organizations, including those that send us students and those that receive our graduates. 3.64 (1.00) | Building internal collaborative relationships across different departments and organizational units. 2.49 (1.04) |

Summary: The most disenfranchised groups are Support Staff, who gave the lowest ratings of items, and employees of 7 to 9 years, who gave the lowest ratings of items and had the lowest response rate to the survey. Processes related to Valuing People (Criterion 4), Leading and Communicating (Criterion 5), and Planning Continuous Improvement (Criterion 8) are in greatest need of improvement. The best processes across the criteria relate to serving students (placement 3.05, identifying needs for support services 3.22, collaborating with other educational organizations 3.64). The worst processes across the criteria relate to measuring, analyzing, and evaluating our processes.

Implications for Data Mining and the Six Project Areas

The data support the need for the Assessment project area, since that project is about measuring and evaluating the attainment of student learning outcomes. The Assessment project area also helps build internal collaborative relationships (Criterion 9) and ties directly to Criterion 1: Helping Students Learn.

The data support the need for the Mission and Vision project area through the need for improvement in Leading and Communicating (Criterion 5). This project may also help reach the disenfranchised groups.
The data support the need for the Strategic Planning project area, given the need for improvement in Leading and Communicating (Criterion 5) and Planning Continuous Improvement (Criterion 8) and the need to measure, analyze, and evaluate our processes.
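The arithmetic behind two of the summary claims can be rechecked with a short Python sketch. These claims are the differences of means between Administration and Support Staff, and the lowest response rate among employees of 7 to 9 years. The sketch assumes a particular pairing of the longevity counts: 64, 41, 21, and 77 employees (HR, June ’05) against 43, 45, 7, and 71 respondents. This pairing is a reading of the flattened tables, not something the report states outright. The variable and function names are illustrative only.

```python
# Recheck the arithmetic behind the report's summary claims.
# Figures are copied from the report. ASSUMPTION: the HR longevity counts
# and the respondent longevity counts pair up band by band as shown below.

# Means (5-to-1 scale) for Administration vs. Support Staff
comparisons = {
    "no unnecessary steps (importance)": (4.39, 3.77),
    "Supporting Institutional Operations": (3.07, 2.44),
}

# Employees by longevity (HR, June '05) and survey respondents by longevity
hr_longevity = {"3 or fewer": 64, "4-6": 41, "7-9": 21, "10 or more": 77}
respondent_longevity = {"3 or fewer": 43, "4-6": 45, "7-9": 7, "10 or more": 71}


def mean_differences(pairs):
    """Return Administration minus Support Staff, rounded to 2 decimals."""
    return {name: round(admin - support, 2) for name, (admin, support) in pairs.items()}


def response_ratios(employees, respondents):
    """Return respondents divided by employees for each longevity band."""
    return {band: respondents[band] / employees[band] for band in employees}


if __name__ == "__main__":
    for name, diff in mean_differences(comparisons).items():
        print(f"{name}: {diff:.2f}")
    ratios = response_ratios(hr_longevity, respondent_longevity)
    for band, ratio in ratios.items():
        print(f"{band} years: {ratio:.0%}")
    print("lowest response ratio:", min(ratios, key=ratios.get))
```

Run as a script, the sketch prints 0.62 and 0.63 for the two differences. It identifies the 7–9-year band as having the lowest ratio of respondents to employees (about 33%). The 4–6-year ratio comes out above 100%. This suggests that self-reported longevity and HR counts are not strictly comparable, so the ratios are best read as rough indicators.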