A Best-Fit Approach for Productive Analysis of Omitted Arguments

Eva Mok & John Bryant

University of California, Berkeley

International Computer Science Institute

Simplify grammar by exploiting the language understanding process

Omission of arguments in Mandarin Chinese

Construction grammar framework

Model of language understanding

Our best-fit approach

Productive Argument Omission (in Mandarin)

(1) ma1+ma gei3 ni3 zhei4+ge
    mother give 2PS this+CLS
    Mother (I) give you this (a toy).

(2) ni3 gei3 yi2
    2PS give auntie
    You give auntie [the peach].

(3) ao ni3 gei3 ya
    EMP 2PS give EMP
    Oh (go on)! You give [auntie] [that].

(4) gei3
    give
    [I] give [you] [some peach].

CHILDES Beijing Corpus (Tardif, 1993; Tardif, 1996)

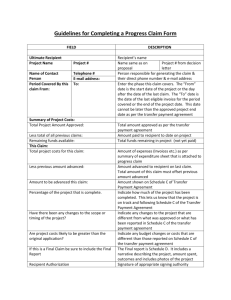

Arguments are omitted with different probabilities

[Bar chart: % elided for Giver, Recipient, and Theme (98 total utterances); y-axis from 0% to 100%]

All arguments omitted: 30.6%

No arguments omitted: 6.1%

Construction grammar approach

Kay & Fillmore 1999; Goldberg 1995

Grammaticality: form and function

Basic unit of analysis: construction, i.e. a pairing of form and meaning constraints

Not purely lexically compositional

Implies early use of semantics in processing

Embodied Construction Grammar (ECG) (Bergen & Chang, 2005)

Problem: Proliferation of constructions

Subj    Verb       Obj1        Obj2
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

Verb       Obj1        Obj2
 ↓          ↓           ↓
Transfer   Recipient   Theme

Subj    Verb       Obj2
 ↓       ↓          ↓
Giver   Transfer   Theme

Subj    Verb       Obj1
 ↓       ↓          ↓
Giver   Transfer   Recipient

…

If the analysis process is smart, then...

Subj    Verb       Obj1        Obj2
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

The grammar needs to state only one construction

Omission of constituents is flexibly allowed

The analysis process figures out what was omitted

Best-fit analysis process takes burden off the grammar representation

Inputs: Utterance; Discourse & Situational Context; Constructions
Analyzer: incremental, competition-based, psycholinguistically plausible
Semantic Specification: image schemas, frames, action schemas
Simulation

Competition-based analyzer finds the best analysis

An analysis is made up of:
  A constructional tree
  A set of resolutions
  A semantic specification

The best fit has the highest combined score

Combined score that determines best-fit

Syntactic Fit:
  Constituency relations
  Combine with preferences on non-local elements
  Conditioned on syntactic context

Antecedent Fit:
  Ability to find referents in the context
  Conditioned on syntactic information, feature agreement

Semantic Fit:
  Semantic bindings for frame roles
  Frame roles’ fillers are scored

Analyzing ni3 gei3 yi2 (You give auntie)

Two of the competing analyses:

Analysis 1 (Theme omitted):
ni3     gei3       yi2         omitted
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

Analysis 2 (Recipient omitted):
ni3     gei3       omitted     yi2
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

Syntactic Fit:
P(Giver omitted | ditransitive cxn) = 0.78
P(Theme omitted | ditransitive cxn) = 0.65
P(Recipient omitted | ditransitive cxn) = 0.42

Analysis 1: (1-0.78)*(1-0.42)*0.65 = 0.08
Analysis 2: (1-0.78)*(1-0.65)*0.42 = 0.03
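The syntactic-fit arithmetic above can be reproduced with a short sketch. It assumes, as the slide's products suggest, that each role's omission is treated as independent; the function name `syntactic_fit` is hypothetical and not part of the authors' analyzer.

```python
# Omission probabilities for the ditransitive construction, as quoted on the slides.
P_OMITTED = {"Giver": 0.78, "Recipient": 0.42, "Theme": 0.65}


def syntactic_fit(omitted_roles, p_omitted=P_OMITTED):
    """Multiply P(omitted) for each omitted role and 1 - P(omitted) for each expressed role.

    Assumption: omissions are independent given the construction.
    """
    score = 1.0
    for role, p in p_omitted.items():
        score *= p if role in omitted_roles else (1.0 - p)
    return score


# Analysis with yi2 as Recipient and the Theme omitted.
theme_omitted = syntactic_fit({"Theme"})
# Analysis with yi2 as Theme and the Recipient omitted.
recipient_omitted = syntactic_fit({"Recipient"})

print(round(theme_omitted, 2), round(recipient_omitted, 2))
```

On syntactic fit alone, the analysis that omits the Theme scores higher, which matches the 0.08 versus 0.03 comparison on the slide.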

Using frame and lexical information to restrict type of reference

The Transfer Frame: Giver, Recipient, Theme, Manner, Purpose, Means, Reason, Place, Time

Lexical Unit gei3: Giver (DNI), Recipient (DNI), Theme (DNI)

DNI = definite null instantiation

Can the omitted argument be recovered from context?

Antecedent Fit:

ni3     gei3       yi2         omitted
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

ni3     gei3       omitted     yi2
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

Discourse & Situational Context: child, peach, table, mother, auntie
The omitted argument (?) is looked up among these entities.

How good of a theme is a peach? How about an aunt?

Semantic Fit:

ni3     gei3       yi2         omitted
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

ni3     gei3       omitted     yi2
 ↓       ↓          ↓           ↓
Giver   Transfer   Recipient   Theme

The Transfer Frame
  Giver (usually animate)
  Recipient (usually animate)
  Theme (usually inanimate)
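A minimal sketch of how filler preferences could separate the two analyses. The slides only say "usually animate" or "usually inanimate", so the value 0.9, the animacy table, and the choice of the mother as the recovered Recipient are all illustrative assumptions, not figures from the authors' model.

```python
# Assumed probability that a filler matches a role's preferred type ("usually").
USUALLY = 0.9

# Role preferences as stated for the Transfer frame.
ROLE_PREFERENCE = {"Giver": "animate", "Recipient": "animate", "Theme": "inanimate"}

# Hypothetical animacy table for the entities in the example context.
ANIMACY = {
    "child": "animate",
    "mother": "animate",
    "auntie": "animate",
    "peach": "inanimate",
    "table": "inanimate",
}


def semantic_fit(bindings):
    """Product over roles of how well each filler matches the role's preference."""
    score = 1.0
    for role, filler in bindings.items():
        matches = ANIMACY[filler] == ROLE_PREFERENCE[role]
        score *= USUALLY if matches else (1.0 - USUALLY)
    return score


# yi2 (auntie) as Recipient; the omitted Theme is recovered as the peach.
fit_theme_omitted = semantic_fit({"Giver": "child", "Recipient": "auntie", "Theme": "peach"})
# yi2 (auntie) as Theme; the omitted Recipient is recovered (for illustration) as the mother.
fit_recipient_omitted = semantic_fit({"Giver": "child", "Recipient": "mother", "Theme": "auntie"})

print(fit_theme_omitted > fit_recipient_omitted)
```

Under these assumed numbers an aunt is a poor Theme, so the analysis with yi2 as Recipient is preferred.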

The argument omission patterns shown earlier can be covered with just ONE construction

[Bar chart: % elided for Giver, Recipient, and Theme (98 total utterances)]

                  Subj    Verb       Obj1        Obj2
                   ↓       ↓          ↓           ↓
                  Giver   Transfer   Recipient   Theme
P(omitted|cxn):   0.78               0.42        0.65

Each cxn is annotated with probabilities of omission
Language-specific default probability can be set
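One way to picture a construction annotated with omission probabilities, plus a language-specific fallback, is sketched below. The class, the `transitive` construction, and the default value 0.5 are hypothetical; only the three ditransitive probabilities come from the slides.

```python
from dataclasses import dataclass, field


@dataclass
class Construction:
    name: str
    constituents: dict                              # constituent -> frame role
    p_omitted: dict = field(default_factory=dict)   # constituent -> P(omitted | cxn)

    def omission_probability(self, constituent, language_default):
        """Use the construction's own annotation if present, else the language default."""
        return self.p_omitted.get(constituent, language_default)


MANDARIN_DEFAULT = 0.5  # placeholder value, not taken from the slides

ditransitive = Construction(
    name="ditransitive",
    constituents={"Subj": "Giver", "Verb": "Transfer", "Obj1": "Recipient", "Obj2": "Theme"},
    p_omitted={"Subj": 0.78, "Obj1": 0.42, "Obj2": 0.65},
)

# A hypothetical construction with no annotations falls back to the default.
transitive = Construction(name="transitive", constituents={"Subj": "Agent", "Obj": "Patient"})

print(ditransitive.omission_probability("Subj", MANDARIN_DEFAULT))
print(transitive.omission_probability("Subj", MANDARIN_DEFAULT))
```

Keeping the probabilities on the construction means a single ditransitive entry can cover every omission pattern, with the analyzer deciding which constituents were actually left out.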

Research goal

A computationally-precise modeling framework for learning early constructions

[Diagram with components: Language Data, Learning, New Construction, Linguistic Knowledge]

Frequent argument omission in pro-drop languages

Mandarin example:

ni3 gei3 yi2 (“you give auntie”)

Even in English, there are often no spoken antecedents to pronouns in conversations

Learner must integrate cues from intentions, gestures, prior discourse, etc.

A short dialogue

bie2 mo3 wai4+tou2 a: #1_3 ! (別抹外頭啊)

NEG-IMP apply forehead

Don’t apply [lotion to your] forehead

mo3 wai4+tou2 ke3 jiu4 bu4 hao3+kan4 le a: . (抹外頭可就不好看了啊)

apply forehead LINKER LINKER NEG good looking CRS SFP

[If you] apply [lotion to your] forehead then [you will] not be pretty

…

ze ya a: # bie2 gei3 ma1+ma wang3 lian3 shang4 mo:3 e: !

(嘖呀啊 # 別給媽媽往臉上抹呃)

INTERJ # NEG-IMP BEN mother CV-DIR face on apply

INTERJ # Don’t apply [the lotion] on [your mom’s] face (for mom)

[- low pitch motherese] ma1+ma bu4 mo:3 you:2 . (媽媽不抹油)

mother NEG apply lotion

Mom doesn’t apply (use) lotion

Goals, refined

Demonstrate learning given

embodied meaning representation

structured representation of context

Based on

Usage-based learning

Domain-general statistical learning mechanism

Generalization / linguistic category formation

Towards a precise computational model

Modeling early grammar learning

Context model & Simulation

Data annotation

Finding the best analysis for learning

Hypothesizing and reorganizing constructions

Pilot results

Embodied Construction Grammar

construction yi2-N
  subcase of Morpheme
  form
    constraints
      self.f.orth <-- "yi2"
  meaning : @Aunt
    evokes RD as rd
    constraints
      self.m <--> rd.referent
      self.m <--> rd.ontological_category

“you” specifies discourse role

construction ni3-N
  subcase of Morpheme
  form
    constraints
      self.f.orth <-- "ni3"
  meaning : @Human
    evokes RD as rd
    constraints
      self.m <--> rd.referent
      self.m <--> rd.ontological_category
      rd.discourse_participant_role <-- @Addressee
      rd.set_size <-- @Singleton

The meaning of “give” is a schema with roles

construction gei3-V2
  subcase of Morpheme
  form
    constraints
      self.f.orth <-- "gei3"
  meaning : Give

schema Transfer
  subcase of Action
  roles
    giver : @Entity
    recipient : @Entity
    theme : @Entity
  constraints
    giver <--> protagonist

schema Give
  subcase of Transfer
  constraints
    inherent_aspect <-- @Inherent_Achievement
    giver <-- @Animate
    recipient <-- @Animate
    theme <-- @Manipulable_Inanimate_Object

Finally, you-give-aunt links up the roles

construction ni3-gei3-yi2
  subcase of Finite_Clause
  constructional
    constituents
      n : ni3-N
      g : gei3-V2
      y : yi2-N
  form
    constraints
      n.f meets g.f
      g.f meets y.f
  meaning : Give
    constraints
      self.m <--> g.m
      self.m.giver <--> n.m
      self.m.recipient <--> y.m

The learning loop: Hypothesize & Reorganize

[Loop diagram with components: Utterance; World Knowledge; Discourse & Situational Context; Linguistic Knowledge; Analysis; Partial SemSpec; Context Fitting. Arrows labeled hypothesize, reorganize, and reinforcement]

If the learner has a ditransitive cxn

[Diagram: Form (ni3 meets gei3 meets yi2) mapped to Meaning (Addressee as giver and Aunt as recipient of Give; theme omitted), alongside Context (Discourse Segment with speaker MOT and addressee XIXI; INV; Peach)]

Context fitting recovers more relations

[Diagram: Form (ni3 meets gei3 meets yi2) mapped to Meaning (Addressee as giver and Aunt as recipient of Give; theme omitted), linked to Context: a Discourse Segment (speaker MOT, addressee XIXI, attentional focus) and a Give event with giver, recipient, and theme among XIXI, INV, and Peach]

But the learner does not yet have phrasal cxns

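[Diagram: Form (ni3 meets gei3 meets yi2) with only lexical meanings (Addressee, Give, Aunt) and no role bindings between them; Context holds a Discourse Segment (speaker MOT, addressee XIXI, attentional focus) and a Give event with giver, recipient, and theme among XIXI, INV, and Peach]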

Context bootstraps learning

[Diagram: Form (ni3 meets gei3 meets yi2) mapped to Meaning (Addressee as giver and Aunt as recipient of Give)]

construction ni3-gei3-yi2
  subcase of Finite_Clause
  constructional
    constituents
      n : ni3
      g : gei3
      y : yi2
  form
    constraints
      n.f meets g.f
      g.f meets y.f
  meaning : Give
    constraints
      self.m <--> g.m
      self.m.giver <--> n.m
      self.m.recipient <--> y.m

A model of context is key to learning

The context model makes it possible for the learning model to:
  learn new constructions using contextually available information
  learn argument-structure constructions in pro-drop languages

Understanding an utterance in context

[Pipeline diagram with components: Transcripts; Events + Utterances; Schemas + Constructions; Context Model; Recency Model; Simulation; Analysis + Resolution; Context Fitting; Semantic Specification]

Context model: Events + Utterances

[Diagram: Setting (participants, entities, & relations); Start; a sequence of Event and DS nodes, each with a Sub-Event]

Entities and Relations are instantiated

Setting
  CHI, MOT (incl. body parts)
  livingroom (incl. ground, ceiling, chair, etc), lotion

Start

ds04
  admonishing05
    speaker = MOT
    addressee = CHI
    forcefulness = normal

caused_motion01
  forceful_motion: apply02
    applier = CHI
    substance = lotion
    surface = face(CHI)
  motion: translational_motion03
    mover = lotion
    spg = SPG

The context model is updated dynamically

[Diagram with components: Events; Simulation; Context Model; Recency Model]

Extended transcript annotation: speech acts & events

Simulator inserts events into context model & updates it with the effects

Some relations persist over time; some don’t.

Competition-based analyzer finds the best analysis

An analysis is made up of:
  A constructional tree
  A semantic specification
  A set of resolutions

Example: "Bill gave Mary the book"

Constructional tree: A-GIVE-B-X
  subj : Ref-Exp (Bill)
  v    : Give (gave)
  obj1 : Ref-Exp (Mary)
  obj2 : Ref-Exp (the book)

Semantic specification: Give-Action
  giver     : @Man
  recipient : @Woman
  theme     : @Book

Resolutions: @Man = Bill; @Woman = Mary; @Book = book01

Context Fitting goes beyond resolution

[Diagram: Form (ni3 meets gei3 meets yi2) mapped to Meaning (Addressee as giver and Aunt as recipient of Give; theme omitted), linked to Context: a Discourse Segment (speaker MOT, addressee XIXI, attentional focus) and a Give event with giver, recipient, and theme among XIXI, INV, and Peach]

Context Fitting, a.k.a. intention reading

Context Fitting takes resolution a step further
  considers entire context model, ranked by recency
  considers relations amongst entities
  heuristically fits from top down, e.g.
    • discourse-related entities
    • complex processes
    • simple processes
    • other structured and unstructured entities
  more heuristics for future events (e.g. in cases of commands or suggestions)
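The top-down, recency-ranked ordering described above might be sketched as a sort over context items. How the authors actually combine category order and recency is not stated on the slide; the tuple key, the `ContextItem` class, and the timestamps below are assumptions for illustration only.

```python
from dataclasses import dataclass

# Top-down order from the slide; a lower number is tried earlier.
CATEGORY_PRIORITY = {
    "discourse": 0,
    "complex_process": 1,
    "simple_process": 2,
    "other": 3,
}


@dataclass
class ContextItem:
    name: str
    category: str        # one of the keys of CATEGORY_PRIORITY
    last_active: int     # hypothetical timestamp; larger means more recent


def fitting_order(items):
    """Order candidates by category (top down), then by recency (most recent first)."""
    return sorted(items, key=lambda it: (CATEGORY_PRIORITY[it.category], -it.last_active))


items = [
    ContextItem("chair", "other", 2),
    ContextItem("apply02", "simple_process", 5),
    ContextItem("lotion", "other", 7),
    ContextItem("caused_motion01", "complex_process", 5),
    ContextItem("ds04", "discourse", 6),
]

ordered_names = [it.name for it in fitting_order(items)]
print(ordered_names)
```

The item names reuse identifiers from the lotion example (ds04, caused_motion01, apply02); the category assigned to each is a guess made for the sketch.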

Adult grammar size

~615 constructions total

~100 abstract cxns (26 to capture lexical variants)

~70 phrasal/clausal cxns

~440 lexical cxns (~260 open class)

~195 schemas (~120 open class, ~75 closed class)

Starter learner grammar size

No grammatical categories (except interjections)

Lexical items only

~440 lexical constructions

~260 open class: schema / ontology meanings

~40 closed class: pronouns, negation markers, etc

~60 function words: no meanings

~195 schemas (~120 open class, ~75 closed class)

The process hierarchy defined in schemas

[Tree diagram rooted at Process. Nodes: State, State_Change, Action, Proto_Transitive, Complex_Process, Intransitive_State, Two_Participant_State, Mental_State, Serial_Processes, Concurrent_Processes, Cause_Effect, Joint_Motion, Caused_Motion]

The process hierarchy defined in schemas

[Tree diagram rooted at Action. Nodes: Intransitive_Action, Motion, Translational_Motion, Expression, Self_Motion, Translational_Self_Motion, Forceful_Motion, Force_Application, Continuous_Force_Application, Agentive_Impact]

The process hierarchy defined in schemas

[Tree diagram rooted at Action. Nodes: Cause_Change, Communication, Obtainment, Transfer, Ingestion, Perception, Other_Transitive_Action]

Understanding an utterance in context

[Pipeline diagram with components: Transcripts; Events + Utterances; Schemas + Constructions; Context Model; Recency Model; Simulation; Analysis + Resolution; Context Fitting; Semantic Specification. Feedback arrows labeled hypothesize, reorganize, and reinforcement]

Hypothesize & Reorganize

Hypothesize:

utterance-driven;

relies on the analysis (SemSpec & context)

operations: compose

Reorganize:

grammar-driven;

can be triggered by usage (to be determined)

operations: generalize

Composing new constructions

[Diagram: ni3 means Addressee; gei3 means Give with roles giver, recipient, theme; Context contains MOT, XIXI, Peach, INV]

Compose operation:
If roles from different constructions point to the same context element, propose a new construction and set up a meaning binding.
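The compose operation can be sketched as grouping meaning slots by the context element they resolve to. The data layout (pairs of construction and slot mapped to a context element) and the function name are hypothetical; the resolutions shown follow the ni3 gei3 example, where both the meaning of ni3 and the giver of gei3 point to XIXI.

```python
from collections import defaultdict
from itertools import combinations


def compose(resolved):
    """Propose meaning bindings between slots of different constructions.

    resolved maps (construction, slot) -> context element. Two slots are bound
    when they belong to different constructions and share a context element.
    """
    by_element = defaultdict(list)
    for slot, element in resolved.items():
        by_element[element].append(slot)

    bindings = []
    for slots in by_element.values():
        for (cxn_a, slot_a), (cxn_b, slot_b) in combinations(sorted(slots), 2):
            if cxn_a != cxn_b:
                bindings.append((f"{cxn_a}.{slot_a}", f"{cxn_b}.{slot_b}"))
    return bindings


# Resolutions after analysis and context fitting (illustrative).
resolved = {
    ("ni3", "m"): "XIXI",
    ("gei3", "giver"): "XIXI",
    ("gei3", "recipient"): "INV",
    ("gei3", "theme"): "Peach",
}

bindings = compose(resolved)
new_cxn = {"constituents": ["ni3", "gei3"], "bindings": bindings}
print(new_cxn)
```

Here the only shared element is XIXI, so the proposed construction binds the giver role of gei3 to the meaning of ni3.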

Creating pivot constructions

[Diagram: ni3 meets gei3 meets yi2 (Addressee as giver, @Aunt as recipient of Give) lined up with ni3 meets gei3 meets wo3 (Addressee as giver, @Human as recipient of Give)]

Pivot generalization:
Given a phrasal cxn, look for another cxn that shares 1+ constituents. Line up roles and bindings. Create new cxn category for the slot.
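A simplified sketch of pivot generalization over constituent sequences. It assumes two constructions of equal length that differ in exactly one position and ignores the alignment of roles and bindings that the slide also calls for; the function name and the tuple representation are hypothetical.

```python
def pivot_generalize(cxn_a, cxn_b, category_name):
    """Generalize two constructions that differ in exactly one constituent.

    Each construction is a tuple of constituent names. Returns the generalized
    constituent tuple and the members of the new category, or None if the two
    constructions do not line up.
    """
    if len(cxn_a) != len(cxn_b):
        return None
    differing = [i for i, (a, b) in enumerate(zip(cxn_a, cxn_b)) if a != b]
    if len(differing) != 1:
        return None
    slot = differing[0]
    general = cxn_a[:slot] + (category_name,) + cxn_a[slot + 1:]
    members = {cxn_a[slot], cxn_b[slot]}
    return general, members


result = pivot_generalize(("ni3", "gei3", "yi2"), ("ni3", "gei3", "wo3"), "cat01")
print(result)
```

For the example pair, the shared pivot is ni3 gei3 and the open slot becomes a new category containing yi2 and wo3.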

Resulting constructions

construction ni3-gei3-cat01
  constituents
    ni3, gei3, cat01
  meaning : Give
    constraints
      self.m.recipient <--> g.m

general construction cat01
  subcase of Morpheme
  meaning: @Human

construction wo3
  subcase of cat01
  meaning: @Human

construction yi2
  subcase of cat01
  meaning: @Aunt

Pilot Results: Sample constructions learned

Composed:
  chi1_fan4               eat rice
  ni3_chuan1_xie2         you wear shoe
  ni3_shuo1               you say
  bu4_na2                 NEG take
  wo3_qu4                 I go
  ni3_ping2zi_gei3_wo3    you bottle give me
  ni3_gei3_yi2            you give aunt
  wo3_bu4_chi1            I NEG eat

Pivot Cxns:
  ni3 {shuo1, chuan1}     you {say, wear}
  ni3 {shuo1, hua4}       you {say, draw}
  wo3 {zhao3, qu4}        I {find, go}
  bu4 {na2, he1}          NEG {take, drink}
  {wo3, ma1} cheng2       {I, mom} scoop

Challenge #1: Non-compositional meaning

[Diagram: "bake you a cake": bake means Bake (roles baker, baked), you means Addressee, a cake means @Cake; Context contains Bake-Event, Give-Event, MOT, CHI, Cake]

Non-compositional meaning:
Search for additional meaning schemas (in context or in general) that relate the meanings of the individual constructions

Challenge #2: Function words

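[Diagram: "bake a cake for you": bake means Bake (roles baker, baked), a cake means @Cake, you means Addressee, and "for" has no meaning yet; Context contains Bake-Event, Benefaction, MOT, CHI, Cake]

Function words tend to indicate relations rather than events or entities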

Challenge #3: How far up to generalize

Utterances:
  Eat rice; Eat apple; Eat watermelon
  Want rice; Want apple; Want chair

Ontology:
  Inanimate Object
    Manipulable Objects
      Food
        Fruit: apple, watermelon
        Savory: rice
    Unmovable Objects
      Furniture: Chair, Sofa

Challenge #4: Beyond pivot constructions

Pivot constructions: indexing on particular constituent type
  Eat rice; Eat apple; Eat watermelon

Abstract constructions: indexing on role-filler relations between constituents
  Eat catX (catX fills the food role)
    Schema Eat
      roles
        eater <--> agent
        food <--> patient
  Want catY (catY fills the wanted role)
    Schema Want
      roles
        wanter <--> agent
        wanted <--> patient

Challenge #5: Omissible constituents

Intuition:
Same context, two expressions that differ by one constituent → a general construction with the constituent being omissible

May require verbatim memory traces of utterances + “relevant” context

When does the learning stop?

[Loop diagram with components: Schemas + Constructions; Analysis + Resolution; Context Fitting; SemSpec. Arrows labeled hypothesize, reorganize, and reinforcement]

Bayesian Learning Framework

$$\hat{G} = \operatorname*{argmax}_{G} P(G \mid U, Z) = \operatorname*{argmax}_{G} P(U \mid G, Z)\, P(G)$$

Most likely grammar given utterances and context
The grammar prior is a preference for the “kind” of grammar
In practice, take the log and minimize cost
Minimum Description Length (MDL)
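The step from the Bayesian objective to a cost can be written out as follows, with G the grammar, U the utterances, and Z the context. Reading the two terms as description lengths is the standard MDL interpretation and is offered here as a gloss, not as the authors' exact cost function.

```latex
\begin{align*}
\hat{G} &= \operatorname*{argmax}_{G} \; P(U \mid G, Z)\, P(G) \\
        &= \operatorname*{argmax}_{G} \; \bigl[ \log P(U \mid G, Z) + \log P(G) \bigr] \\
        &= \operatorname*{argmin}_{G} \; \bigl[ \underbrace{-\log P(U \mid G, Z)}_{\text{cost of the data given the grammar}}
           \; + \; \underbrace{-\log P(G)}_{\text{cost of the grammar}} \bigr]
\end{align*}
```

Because the logarithm is monotonic, the maximizing grammar is unchanged by the second step; negating the sum turns the maximization into the cost minimization mentioned on the slide.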

Intuition for MDL

Lexicalized grammar:        Generalized grammar:
S -> Give me NP             S -> Give me NP
NP -> the book              NP -> DET book
NP -> a book                DET -> the
                            DET -> a

Suppose that the prior is inversely proportional to the size of the grammar (e.g. number of rules)

It’s not worthwhile to make this generalization

Intuition for MDL

Lexicalized grammar:        Generalized grammar:
S -> Give me NP             S -> Give me NP
NP -> the book              NP -> DET N
NP -> a book                DET -> the
NP -> the pen               DET -> a
NP -> a pen                 N -> book
NP -> the pencil            N -> pen
NP -> a pencil              N -> pencil
NP -> the marker            N -> marker
NP -> a marker
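The rule-count intuition can be checked directly on the grammars from the two slides. This sketch uses the number of rules as the only size measure, as the slide suggests for the prior, and ignores the likelihood term; the function name is hypothetical.

```python
# Grammars from the first MDL slide (one noun).
small_lexicalized = ["S -> Give me NP", "NP -> the book", "NP -> a book"]
small_generalized = ["S -> Give me NP", "NP -> DET book", "DET -> the", "DET -> a"]

# Grammars from the second MDL slide (four nouns).
nouns = ["book", "pen", "pencil", "marker"]
large_lexicalized = ["S -> Give me NP"] + [
    f"NP -> {det} {noun}" for noun in nouns for det in ("the", "a")
]
large_generalized = (
    ["S -> Give me NP", "NP -> DET N", "DET -> the", "DET -> a"]
    + [f"N -> {noun}" for noun in nouns]
)


def generalization_pays_off(lexicalized, generalized):
    """True when the generalized grammar has fewer rules than the lexicalized one."""
    return len(generalized) < len(lexicalized)


print(len(small_lexicalized), len(small_generalized))
print(len(large_lexicalized), len(large_generalized))
```

With one noun the generalized grammar is larger (4 rules against 3), while with four nouns it is smaller (8 rules against 9), so under this measure the generalization only becomes worthwhile once enough lexical variants have been seen.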

How to calculate the prior of this grammar

(Yet to be determined)

There is evidence that the lexicalized constructions do not completely go away

If the more lexicalized constructions are retained, the size of grammar is a bad indication of degree of generality
