Connectionist Models: Lecture 3 Srini Narayanan CS182/CogSci110/Ling109


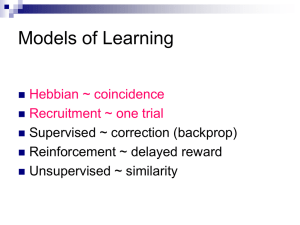

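Spring 2006

Let's just do an example

(Figure: a single sigmoid output unit 0 with inputs i1, i2 and a bias input b = 1; weights w01 = 0.8, w02 = 0.6, w0b = 0.5.)

Training set (OR function):

i1 i2 | y0
0  0  | 0
0  1  | 1
1  0  | 1
1  1  | 1

Forward pass for the pattern i1 = 0, i2 = 0:
$$x_0 = 0.8 \cdot 0 + 0.6 \cdot 0 + 0.5 \cdot 1 = 0.5, \qquad y_0 = f(x_0) = \frac{1}{1 + e^{-0.5}} \approx 0.6225$$

Error:
$$E = \tfrac{1}{2} \sum_i (t_i - y_i)^2, \qquad E = \tfrac{1}{2}(t_0 - y_0)^2 = \tfrac{1}{2}(0 - 0.6225)^2 \approx 0.1937$$

Weight update:
$$\Delta W_{ij} = \eta\, y_j\, \delta_i, \qquad \delta_i = (t_i - y_i)\, y_i\, (1 - y_i)$$
$$\delta_0 = (0 - 0.6225)(0.6225)(1 - 0.6225) \approx -0.1463$$
$$\Delta W_{01} = \eta\, i_1\, \delta_0 = 0, \qquad \Delta W_{02} = \eta\, i_2\, \delta_0 = 0, \qquad \Delta W_{0b} = \eta\, b\, \delta_0 = -0.1463\, \eta$$

Suppose the learning rate η = 0.5. Then ΔW0b = 0.5 × (−0.1463) ≈ −0.0731, so the bias weight drops from 0.5 to about 0.4269.

An informal account of BackProp

For each pattern in the training set:
• Compute the error at the output nodes
• Compute Δw for each weight in the 2nd layer
• Compute δ (the generalized error expression) for the hidden units
• Compute Δw for each weight in the 1st layer

After amassing Δw for all weights and patterns, change each weight a little bit, as determined by the learning rate:
$$\Delta w_{ij} = \eta\, \delta_{ip}\, o_{jp}$$
where p indexes the training pattern and o_jp is the output of unit j for pattern p.

Backpropagation Algorithm
• Initialize all weights to small random numbers
• For each training example, do:
  – For each hidden unit h: $y_h = \sigma\left(\sum_i w_{hi}\, x_i\right)$
  – For each output unit k: $y_k = \sigma\left(\sum_h w_{kh}\, y_h\right)$
  – For each output unit k: $\delta_k = y_k (1 - y_k)(t_k - y_k)$
  – For each hidden unit h: $\delta_h = y_h (1 - y_h) \sum_k w_{kh}\, \delta_k$
  – Update each network weight $w_{ij}$: $w_{ij} \leftarrow w_{ij} + \Delta w_{ij}$, with $\Delta w_{ij} = \eta\, \delta_i\, x_j$, where $x_j$ is the activation arriving at unit i through $w_{ij}$

(Figure: "activations" propagate forward through the network; "errors" propagate backward.)

Momentum term
The speed of learning is governed by the learning rate η.
• If the rate is low, convergence is slow.
• If the rate is too high, the error oscillates without reaching the minimum.

Adding a momentum term α:
$$\Delta w_{ij}(n) = \alpha\, \Delta w_{ij}(n-1) + \eta\, \delta_i(n)\, y_j(n), \qquad 0 \le |\alpha| < 1$$
• Momentum tends to smooth small weight-error fluctuations.
• It accelerates the descent in steady downhill directions.
• It has a stabilizing effect in directions that oscillate in time.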
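The worked example above is small enough to check by machine. The sketch below performs one gradient step for a single sigmoid unit on the pattern i1 = 0, i2 = 0 (bias input b = 1, target 0, learning rate 0.5). The function name `single_unit_step` is hypothetical; the weight order `[w01, w02, w0b]` is an assumption made for this illustration. It should reproduce the numbers of the worked example up to rounding in the last digit.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def single_unit_step(inputs, weights, target, lr):
    """One gradient-descent step for a single sigmoid unit with E = 1/2 (t - y)^2.

    Hypothetical helper for illustration; returns (y, E, delta, updated weights).
    """
    x0 = sum(w * i for w, i in zip(weights, inputs))   # net input
    y0 = sigmoid(x0)                                   # unit output
    error = 0.5 * (target - y0) ** 2
    delta0 = (target - y0) * y0 * (1.0 - y0)           # delta = (t - y) y (1 - y)
    # dW_0j = lr * y_j * delta_0, where y_j is the j-th input (i1, i2 or b)
    new_weights = [w + lr * delta0 * i for w, i in zip(weights, inputs)]
    return y0, error, delta0, new_weights


# Pattern i1 = 0, i2 = 0 plus bias input b = 1; target t0 = 0.
# Assumed weight order: [w01, w02, w0b]; learning rate 0.5 as in the slide.
y0, E, delta0, w = single_unit_step([0.0, 0.0, 1.0], [0.8, 0.6, 0.5], target=0.0, lr=0.5)
print(f"y0 = {y0:.4f}, E = {E:.4f}, delta0 = {delta0:.4f}, w = {[round(v, 4) for v in w]}")
# prints: y0 = 0.6225, E = 0.1937, delta0 = -0.1463, w = [0.8, 0.6, 0.4269]
```

Only the bias weight changes, because the other two inputs are 0 for this pattern.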
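The backpropagation algorithm and the momentum term above can be combined into a short sketch for a network with one hidden layer and one output unit. It is a minimal illustration under stated assumptions, not the lecture's own code: it updates weights after every pattern (as in the algorithm slide) rather than accumulating Δw over all patterns, appends a bias input of 1 to each layer, and the names `train`, `n_hidden`, `lr`, `alpha` and `OR_PATTERNS` are hypothetical. With a single output unit, the sum over k in δ_h reduces to one term.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def train(patterns, n_hidden=3, lr=0.3, alpha=0.8, epochs=2000, seed=0):
    """Per-pattern backprop with momentum (hypothetical sketch).

    w_h[h][i]: weight from input i to hidden unit h; w_o[h]: weight from hidden
    unit h to the output. The last entry of each row is the bias weight (b = 1).
    Returns the summed squared error of every epoch.
    """
    rng = random.Random(seed)
    n_in = len(patterns[0][0])
    # Initialize all weights to small random numbers.
    w_h = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_o = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    # Previous weight changes, needed for the momentum term.
    dw_h = [[0.0] * (n_in + 1) for _ in range(n_hidden)]
    dw_o = [0.0] * (n_hidden + 1)
    history = []
    for _ in range(epochs):
        sse = 0.0
        for inputs, t in patterns:
            x = list(inputs) + [1.0]                    # bias input b = 1
            # Forward: y_h = sigma(sum_i w_hi x_i), y_k = sigma(sum_h w_kh y_h)
            y_h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_h]
            y_h.append(1.0)                             # bias for the output unit
            y_k = sigmoid(sum(w * yh for w, yh in zip(w_o, y_h)))
            sse += 0.5 * (t - y_k) ** 2
            # Backward: output delta, then hidden deltas (using the old output weights)
            d_k = y_k * (1.0 - y_k) * (t - y_k)
            d_h = [y_h[h] * (1.0 - y_h[h]) * w_o[h] * d_k for h in range(n_hidden)]
            # Momentum update: dw(n) = alpha * dw(n-1) + lr * delta_i * y_j
            for h in range(n_hidden + 1):
                dw_o[h] = alpha * dw_o[h] + lr * d_k * y_h[h]
                w_o[h] += dw_o[h]
            for h in range(n_hidden):
                for i in range(n_in + 1):
                    dw_h[h][i] = alpha * dw_h[h][i] + lr * d_h[h] * x[i]
                    w_h[h][i] += dw_h[h][i]
        history.append(sse)
    return history


# The OR training set from the worked example: ((i1, i2), target)
OR_PATTERNS = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
history = train(OR_PATTERNS)
print(f"first epoch error: {history[0]:.4f}, last epoch error: {history[-1]:.4f}")
```

Setting `alpha=0.0` recovers plain gradient descent, which makes it easy to compare how quickly the error falls with and without momentum.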
Convergence
• May get stuck in local minima
• Weights may diverge
• …but works well in practice

Representation power
• 2-layer networks: any bounded continuous function, to arbitrary accuracy
• 3-layer networks: any function, to arbitrary accuracy

Pattern Separation and NN architecture (figure)

Local Minimum
• Use a random component: simulated annealing

Overfitting and generalization
• Too many hidden nodes tend to overfit

Stopping criteria
Sensible stopping criteria:
• Total mean squared error change: back-prop is considered to have converged when the absolute rate of change in the average squared error per epoch is sufficiently small (in the range [0.01, 0.1]).
• Generalization-based criterion: after each epoch, the NN is tested for generalization. If the generalization performance is adequate, stop. If this criterion is used, the part of the training set used for testing the network's generalization is not used for updating the weights.

Overfitting in ANNs (figure)

Summary
• Multiple-layer feed-forward networks
• Replace the step function with a sigmoid (differentiable) function
• Learn weights by gradient descent on the error function
• Backpropagation algorithm for learning
• Avoid overfitting by early stopping

ALVINN drives 70 mph on highways

Use MLP Neural Networks when …
• (Vectored) real inputs, (vectored) real outputs
• You're not interested in understanding how it works
• Long training times are acceptable
• Short execution (prediction) times are required
• Robustness to noise in the dataset is needed

Applications of FFNN
• Classification, pattern recognition: FFNNs can be applied to non-linearly separable learning problems.
  – Recognizing printed or handwritten characters
  – Face recognition
  – Classifying loan applications into credit-worthy and non-credit-worthy groups
  – Analyzing sonar and radar signals to determine the nature of the signal's source
• Regression and forecasting: FFNNs can learn non-linear functions (regression), in particular functions whose inputs are a sequence of measurements over time (time series).
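The two stopping criteria above can be written as small checks that a training loop calls once per epoch. This is a sketch under assumptions: `mse_converged` reads "absolute rate of change" as the absolute difference between the last two epochs' average squared error, and `early_stopping_epoch` replaces the slide's "performance is adequate" with a common patience rule (stop once held-out error has not improved for `patience` epochs). Both names and the `patience` parameter are hypothetical.

```python
def mse_converged(epoch_mse, tol=0.01):
    """Error-change criterion (hypothetical helper): converged when the absolute
    change in average squared error between the last two epochs is below tol.
    The slide suggests tol somewhere in [0.01, 0.1]."""
    if len(epoch_mse) < 2:
        return False
    return abs(epoch_mse[-1] - epoch_mse[-2]) < tol


def early_stopping_epoch(val_errors, patience=3):
    """Generalization-based criterion (hypothetical helper, patience variant).

    val_errors[e] is the error on held-out patterns (not used for weight updates)
    after epoch e. Scans until the error fails to improve for `patience` epochs
    and returns the epoch whose weights should be kept.
    """
    best_epoch, best_err = 0, float("inf")
    for epoch, err in enumerate(val_errors):
        if err < best_err:
            best_epoch, best_err = epoch, err
        elif epoch - best_epoch >= patience:
            break
    return best_epoch


print(mse_converged([0.50, 0.30, 0.295]))                                    # True
print(early_stopping_epoch([0.50, 0.30, 0.20, 0.25, 0.30, 0.35, 0.10]))     # 2
```

In the second call, the scan stops at epoch 5 and never sees the later dip to 0.10. A larger `patience` trades longer training for a lower risk of stopping too early.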
Extensions of Backprop Nets
• Recurrent architectures
• Backprop through time

Elman Nets & Jordan Nets
(Figure: an Elman net and a Jordan net, each with Input, Hidden, Context and Output layers; the fixed recurrent connections are labeled 1, and the Jordan net also has a connection labeled α.)
• Updating the context as we receive input
• In Jordan nets we model "forgetting" as well
• The recurrent connections have fixed weights
• You can train these networks using good ol' backprop

Recurrent Backprop
(Figure: a recurrent network of three nodes a, b, c with weights w1 to w4, unrolled for 3 iterations into a feed-forward network with a copy of a, b, c at each iteration.)
• We'll pretend to step through the network one iteration at a time
• Backprop as usual, but average equivalent weights (e.g. all 3 highlighted edges on the right are equivalent)

Models of Learning
• Hebbian ~ coincidence
• Supervised ~ correction (backprop)
• Recruitment ~ one trial

Recruiting connections
• Given that LTP involves synaptic strength changes and Hebb's rule involves coincident-activation-based strengthening of connections
  – How can connections between two nodes be recruited using Hebb's rule?

The Idea of Recruitment Learning
• Suppose we want to link up node X to node Y
• The idea is to pick the two nodes in the middle to link them up
• Can we be sure that we can find a path to get from X to Y?

With N nodes, branching factor (fan-out) B and K intermediate layers:
$$F = \frac{B}{N}, \qquad P_{\text{no link}} = (1 - F)^{B^K}$$
The point is, with a fan-out of 1000, if we allow 2 intermediate layers, we can almost always find a path.

Finding a Connection in Random Networks
For networks with N nodes and a √N branching factor, there is a high probability of finding good links.

Recruiting a Connection in Random Networks
Informal algorithm:
1. Activate the two nodes to be linked
2. Have nodes with double activation strengthen their active synapses (Hebb)
3. There is evidence for a "now print" signal

Triangle nodes and feature structures
(Figure: triangle nodes linking A, B and C.)
Representing concepts using triangle nodes

Feature Structures in Four Domains
• Makin: dept~EE, sid~001, emp~GSI
• Ham: Color~pink, Taste~salty
• Container: Inside~region, Outside~region, Bdy.~curve
• Push: Schema~slide, Posture~palm, Dir.~away
• Bryant: dept~CS, sid~002, emp~GSI
• Pea: Color~green, Taste~sweet
• Purchase: Buyer~person, Seller~person, Cost~money, Goods~thing
• Stroll: Schema~walk, Speed~slow, Dir.~ANY

Recruiting triangle nodes
• Let's say we are trying to remember a green circle
• Currently there are weak connections between concepts (dotted lines)
(Figure: nodes has-color, has-shape, blue, green, round, oval.)
• Strengthen these connections, and you end up with this picture
(Figure: nodes has-color, has-shape, Green circle, blue, green, round, oval.)

Distributed vs Localist Rep'n

Person | Distributed | Localist
John   | 1 1 0 0     | 1 0 0 0
Paul   | 0 1 1 0     | 0 1 0 0
George | 0 0 1 1     | 0 0 1 0
Ringo  | 1 0 0 1     | 0 0 0 1

• What are the drawbacks of each representation?
• What happens if you want to represent a group?
• How many persons can you represent with n bits? Distributed: 2^n; localist: n
• What happens if one neuron dies?

Connectionist Models in Cognitive Science
(Figure: Structured, PDP (Elman), Hybrid; Neural, Conceptual; Existence, Data Fitting.)

Spreading activation and feature structures
• Parallel activation streams
• Top-down and bottom-up activation combine to determine the best-matching structure
• Triangle nodes bind features of objects to values
• Mutual inhibition and competition between structures
• Mental connections are active neural connections

Can we formalize/model these intuitions?
• What is a neurally plausible computational model of spreading activation that captures these features?
• What does semantics mean in neurally embodied terms?
  – What are the neural substrates of concepts that underlie verbs, nouns, spatial predicates?
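The recurrent backprop recipe from earlier in this lecture (unroll the network, backprop as usual, then tie equivalent weights together) can be sketched on the smallest case: a single recurrent sigmoid unit h_t = σ(w·h_{t−1} + u·x_t) with error measured only at the last step. The one-unit network, the error-at-the-end setup and the names `unrolled_loss` and `bptt_step` are assumptions for illustration, not the lecture's three-node example. Each unrolled copy produces its own gradient for the shared weights; the sketch averages them as the slide describes (standard backprop through time sums them, which differs only by a factor equal to the number of copies).

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def unrolled_loss(xs, target, w, u, h0=0.0):
    """Forward pass through the unrolled copies: h_t = sigmoid(w * h_{t-1} + u * x_t)."""
    hs = [h0]
    for x in xs:
        hs.append(sigmoid(w * hs[-1] + u * x))
    return 0.5 * (target - hs[-1]) ** 2, hs


def bptt_step(xs, target, w, u, lr=0.5):
    """Backprop through the unrolled net, then average the copies' updates (hypothetical helper)."""
    loss, hs = unrolled_loss(xs, target, w, u)
    g_h = -(target - hs[-1])                   # dE/dh_T
    gw, gu = [], []                            # per-copy gradients of the shared weights
    for t in range(len(xs), 0, -1):
        g_net = g_h * hs[t] * (1.0 - hs[t])    # back through the sigmoid of copy t
        gw.append(g_net * hs[t - 1])           # this copy's share of dE/dw
        gu.append(g_net * xs[t - 1])           # this copy's share of dE/du
        g_h = g_net * w                        # pass the error back to h_{t-1}
    # Tie the equivalent weights together by averaging the copies' gradients.
    w_new = w - lr * sum(gw) / len(gw)
    u_new = u - lr * sum(gu) / len(gu)
    return loss, gw, gu, w_new, u_new


xs, target = [1.0, 0.0, 1.0], 0.2
loss, gw, gu, w_new, u_new = bptt_step(xs, target, w=0.5, u=0.8)
print(f"loss before: {loss:.4f}, after: {unrolled_loss(xs, target, w_new, u_new)[0]:.4f}")
```

A finite-difference check on `unrolled_loss` confirms that the per-copy gradients add up to the true gradient of the shared weight.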
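The recruitment formula P_no link = (1 − F)^(B^K), with F = B/N, can be evaluated to check the claim that a fan-out of 1000 with 2 intermediate layers almost always finds a path. The sketch assumes N = 10^6 nodes, so that B = 1000 is the √N branching factor of the "Finding a Connection" slide, and it reads K as the number of intermediate layers; both are assumptions. The probability is computed in log space because it underflows a float for K = 2. The function name is hypothetical.

```python
import math


def log10_p_no_link(n_nodes: int, fan_out: int, k_layers: int) -> float:
    """log10 of P_no_link = (1 - F) ** (B ** K), with F = B / N (hypothetical helper).

    Computed in log space because the probability underflows a float for large B ** K.
    """
    f = fan_out / n_nodes
    return (fan_out ** k_layers) * math.log1p(-f) / math.log(10)


# Assumed example: N = 10**6 nodes, so a fan-out of 1000 equals sqrt(N).
N, B = 10**6, 1000
for k in (1, 2):
    print(f"K = {k}: P(no link) = 10^{log10_p_no_link(N, B, k):.1f}")
```

With one intermediate layer the chance of failure is still about e^−1 ≈ 0.37; with two, it is about 10^−434.5, which is why two intermediate layers suffice in practice.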
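The questions on the Distributed vs Localist slide can be answered directly from its two 4-unit codes. The sketch below copies those codes and checks two things: what happens when one unit (neuron) dies, and whether a group, represented here by superimposing (OR-ing) its members' codes, stays unambiguous. Superposition as the way to represent a group is an assumption for illustration, as are the helper names. On capacity, n units give up to 2^n distributed patterns but only n localist ones.

```python
from itertools import combinations

# Codes copied from the slide: one row per person, 4 units each.
DISTRIBUTED = {"John": (1, 1, 0, 0), "Paul": (0, 1, 1, 0),
               "George": (0, 0, 1, 1), "Ringo": (1, 0, 0, 1)}
LOCALIST = {"John": (1, 0, 0, 0), "Paul": (0, 1, 0, 0),
            "George": (0, 0, 1, 0), "Ringo": (0, 0, 0, 1)}


def kill_unit(codes, unit):
    """Simulate a dead neuron by forcing one unit to 0 in every code."""
    return {name: tuple(0 if i == unit else b for i, b in enumerate(code))
            for name, code in codes.items()}


def still_distinguishable(codes):
    """True if every person keeps a unique code that is not all zeros."""
    patterns = list(codes.values())
    return len(set(patterns)) == len(patterns) and all(any(p) for p in patterns)


def group(codes, names):
    """Represent a group by superimposing (OR-ing) its members' codes (assumption)."""
    return tuple(max(bits) for bits in zip(*(codes[n] for n in names)))


def ambiguous_pairs(codes):
    """Pairs of two-person groups that produce the same superimposed pattern."""
    groups = list(combinations(codes, 2))
    return [(a, b) for a, b in combinations(groups, 2) if group(codes, a) == group(codes, b)]


for label, codes in (("distributed", DISTRIBUTED), ("localist", LOCALIST)):
    survives = all(still_distinguishable(kill_unit(codes, u)) for u in range(4))
    print(label, "survives any single dead unit:", survives,
          "| ambiguous groups:", ambiguous_pairs(codes))
```

The distributed code survives any single dead unit, but the groups {John, George} and {Paul, Ringo} both superimpose to 1 1 1 1. The localist code represents every group unambiguously, yet loses a person whenever one unit dies.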
5 levels of Neural Theory of Language
(Figure: the levels Cognition and Language, Computation, Structured Connectionism, Computational Neurobiology and Biology, arranged along an axis of abstraction. Topics shown: psycholinguistic experiments, spatial relation, motor control, metaphor, grammar, triangle nodes, neural net and learning, SHRUTI, neural development. Course milestones shown: Quiz, Midterm, Finals.)

The Color Story: A Bridge between Levels of NTL (http://www.ritsumei.ac.jp/~akitaoka/color-e.html)

A Tour of the Visual System
• Two regions of interest:
  – retina
  – LGN

Rods and Cones in the Retina
http://www.iit.edu/~npr/DrJennifer/visual/retina.html

Physiology of Color Vision
Two types of light-sensitive receptors:
• Cones: cone-shaped, less sensitive, operate in high light, color vision
• Rods: rod-shaped, highly sensitive, operate at night, gray-scale vision
© Stephen E. Palmer, 2002

The Microscopic View (figure)

What Rods and Cones Detect
Notice how they aren't distributed evenly, and the rod is more sensitive to shorter wavelengths.

Center Surround cells
• No stimuli:
  – both fire at base rate
• Stimuli in center:
  – ON-center-OFF-surround fires rapidly
  – OFF-center-ON-surround doesn't fire
• Stimuli in surround:
  – OFF-center-ON-surround fires rapidly
  – ON-center-OFF-surround doesn't fire
• Stimuli in both regions:
  – both fire slowly

Color Opponent Cells
(Figure, monkey brain: mean spikes/sec (25 to 50) against wavelength (400 to 700 mμ) for +R−G, +G−R, +Y−B and +B−Y cells.)
• These cells are found in the LGN
• Four color channels: Red, Green, Blue, Yellow
• R/G, B/Y pairs
• We can use these to determine the visual system's fundamental hue responses
• Much like center/surround cells