Document 14904542

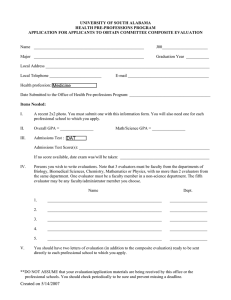
