
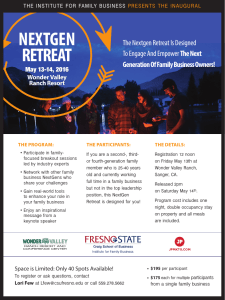


The CSIRO ATNF Gigabit Wide-Area Network
Shaun W Amy <Shaun.Amy@CSIRO.AU>
CSIRO Australia Telescope National Facility

Project Aim
• Initially provide a 1Gbit/s link to each of the three ATNF observatories:
  – Parkes,
  – Mopra (cost/fibre sharing with ANU’s Siding Spring Observatory),
  – ATCA Narrabri (and then to CSIRO Plant Industry, Myall Vale).
• Uses the AARNet Regional Transmission Service (RTS).
• Connect each observatory (without aggregation) back to CSIRO ATNF headquarters at Marsfield in Sydney.
• Require network performance and stability that can enable “real” e-VLBI.
• Production and Research traffic will share the same link.
• Extend the network to WASP at UWA (via CeNTIE/GrangeNet) and also to Swinburne University (via the southern leg of the AARNet Regional Network).

An ATNF Telescope “Refresher”
• Parkes:
  – 64m prime-focus antenna,
  – range of receivers and backends,
  – celebrates its 45th birthday in October,
  – VLBI (including Mk III for geodesy),
  – the star of The Dish.
• Mopra:
  – 22m wheel-on-track, Cassegrain antenna,
  – primary use is for mm observations during winter,
  – new spectrometer: MOPS,
  – almost always used for VLBI (including the first Mopra observations).
• Narrabri:
  – 6 x 22m (with 5 movable), Cassegrain antennas,
  – frequency agility,
  – various antenna configurations (5km E-W track with N-S spur),
  – CABB wide-band backend scheduled for 2007-8,
  – VLBI: tied-array mode.

The Parkes Telescope

The Mopra Telescope

The Australia Telescope Compact Array, Narrabri

AARNet Regional Transmission Service
• Implemented using Nextgen fibre infrastructure.
• The RTS provides a connection between the Nextgen connection point on the regional network and the Nextgen POP located in the capital city.
• Point-to-point Ethernet service (Layer 2):
  – the customer can use this however they wish.
• Service delivered via CWDM MUX or direct fibre depending on the connection model (see later).
• Regional fibre tail builds are the responsibility of the customer, not AARNet.
• “Last mile” in the capital city is the responsibility of the customer.

The Nextgen Network
Source: AARNet Pty Ltd

Backbone Design
• Nextgen:
  – AARNet have access to two fibre pairs (not the whole Nextgen network),
  – Pair 1: AARNet DWDM 10Gbit/s service (provides inter-capital city AARNet3 service),
  – Pair 2: Physical connections to tail sites.
• Implemented using Cisco Carrier-class (ONS 15454) optical transmission systems:
  – initially supports 16 x 10Gbit/s wavelengths,
  – can be upgraded to 32 x 10Gbit/s with no chassis changes,
  – each customer 1Gbit/s service is full-line rate (i.e. no oversubscription).
• Requires amplification/regeneration every 80-100km:
  – optical-optical and optical-electrical-optical,
  – housed in a Controlled Environment Vault (CEV), but these are not always located at ideal locations for site connections!

Part of the Nextgen SB2 Segment
Source: AARNet Pty Ltd

Connecting to the AARNet Regional Transmission Network
Source: AARNet Pty Ltd

Cost Considerations (for a 1Gbit/s transmission service)
• Setup/Install/Construction:
  – fibre build (approx $2m),
  – break-out equipment ($32k per location),
  – initial connection charge within a network segment ($60k per circuit),
  – active equipment (switches, routers, optical transceivers, patch leads),
  – travel/labour.
• Recurrent:
  – access charge per circuit ($34k p.a.),
  – fibre maintenance charges (about $40k p.a.),
  – CSIRO equipment maintenance/self-sparing,
  – labour.
• Other:
  – depreciation,
  – whole-of-life costing and equipment rollover/upgrades.

Network Design
• Implemented by ATNF using mid-range equipment at the observatories capable of 1Gbit/s but NOT 10Gbit/s.
• Can support “jumbo” frames at Layer 2 but NOT at Layer 3.
• CSIRO’s corporate IT group are interested in upgrading/exchanging this equipment for high-end hardware that is modular and capable of 10Gbit/s but…
• Combined Layer 2 (Ethernet) and Layer 3 (IP) network.
• For e-VLBI, a Layer 2 Virtual Local Area Network (VLAN) has been implemented across all ATNF sites (with extensions to UWA and Swinburne), primarily for performance reasons.
• The e-VLBI VLAN uses so-called CSIRO “untrusted” address space and thus can be accessible from hosts that aren’t connected to this VLAN (e.g. University of Tasmania, JIVE etc):
  – this external connectivity is via a standard routed IP connection,
  – this traffic transits a CSIRO firewall appliance.
• For production traffic, Layer 3 point-to-point links are used, which allows for rapid failover to a backup link (via the existing Layer 3 routing protocols):
  – a recent science-related use of the production network has been to implement Mopra remote observing from Narrabri.

A Hybrid Switched/Routed Network

Network Protocol and Performance Considerations
• TCP or UDP?
  – currently using TCP.
• Data recorders currently running Linux kernel 2.6.16.
• What about Ethernet “jumbo” frames:
  – not currently being used by the ATNF disk/network-based recorders.
• A number of TCP variants were tested (including Reno, BIC, highspeed, htcp) and BIC was selected.
• Default TCP configuration is not tuned for high-bandwidth, long-haul networks:
  – TCP window is the amount of un-acknowledged data in the network,
  – Optimise buffers (TCP window size) using:
    window = bandwidth x RTT

Performance between e-VLBI data recorders (memory-memory)
Source: Dr Chris Phillips

Future Developments (1)
• Additional three 1Gbit/s links to be commissioned:
  – location of endpoints,
  – ensure ATNF production and research (e-VLBI) network requirements are met,
  – satisfying the requirements of CSIRO’s corporate IT group,
  – load balancing/link sharing considerations.
• “Lighting up” the Swinburne connection:
  – ATNF have an agreement with Swinburne to provide a software correlation facility starting 1 October 2006,
  – technically easy but legal issues causing the delay.
• Is there a simple mechanism to guarantee that e-VLBI gets the required bandwidth when needed (e.g. some form of policing/rate-limiting)?
• Is Quality of Service (QoS) required?
• Should we use “jumbo” frames as the default even though good results are being obtained with a 1500-byte MTU on 1Gbit/s links?

Future Developments (2)
• The (not-so-wild) West:
  – Currently uses GrangeNet and CeNTIE (at no direct cost) to provide a dedicated 1Gbit/s path to the hosts at WASP at UWA,
  – GrangeNet due to close before the end of 2006,
  – the 10Gbit/s (multiple 1Gbit/s circuits) CeNTIE Melbourne-Perth path will be shut down in December 2006,
  – AARNet3 production service is a possible alternative but need to consider the following:
    • cost (traffic charges and setup),
    • layer 3 (i.e. IPv4/v6 routed traffic) only,
    • currently provides 1Gbit/s connections via a somewhat restrictive connection mechanism,
  – Need to factor in xNTD (and LFD) network requirements.
• EXPReS:
  – engineering of overseas links (will AARNet look to providing UCLP or some sort of hybrid optical-packet technology on one of the two SX Transport 10Gbit/s links between Australia and the USA?).
• 10Gbit/s and beyond…
• Do we need to consider non-Ethernet based services?

First Mopra Remote Observing by Dion Lewis (Operations Scientist) on Saturday 29 July 2006
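
Worked example: TCP window sizing

The rule quoted on the Network Protocol and Performance Considerations slide, window = bandwidth x RTT, can be worked through numerically. The sketch below is illustrative only: the 1Gbit/s link rate comes from the slides, but the round-trip times are assumed values chosen for the example, not measurements from the ATNF network, and the function and variable names are hypothetical.

```python
# Bandwidth-delay product: the TCP window needed to keep a path full.
# The 1 Gbit/s rate is taken from the slides; every RTT below is an
# ASSUMED, illustrative value, not a measurement of the ATNF links.

def bdp_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> int:
    """Return window = bandwidth x RTT, expressed in bytes."""
    # bits/s * ms gives millibits: divide by 1000 (ms -> s) and by 8 (bits -> bytes).
    return bandwidth_bits_per_s * rtt_ms // 8000


LINK_RATE = 1_000_000_000  # 1 Gbit/s

# Hypothetical round-trip times, for illustration only.
ASSUMED_RTT_MS = {
    "regional path (assumed 10 ms)": 10,
    "cross-continent path (assumed 60 ms)": 60,
    "intercontinental path (assumed 300 ms)": 300,
}


def window_table(rate: int = LINK_RATE, rtts: dict = ASSUMED_RTT_MS) -> dict:
    """Map each assumed path to the window size (bytes) that fills it."""
    return {label: bdp_bytes(rate, rtt) for label, rtt in rtts.items()}


if __name__ == "__main__":
    for label, window in window_table().items():
        print(f"{label}: {window / 1e6:.2f} MB window")
```

Under these assumptions the required window grows linearly with RTT, which is why a default TCP configuration that is adequate on a LAN can fall well short on a long-haul 1Gbit/s path. On Linux the socket buffer ceilings are controlled by sysctls such as net.core.rmem_max and net.ipv4.tcp_rmem; suitable values would follow from a measured RTT rather than the assumed figures used here.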