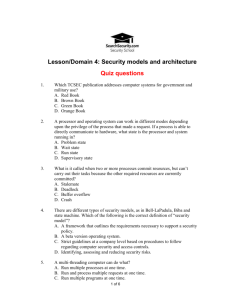

NCSC-TG-030
Library No. S-240,572
Version 1

FOREWORD
