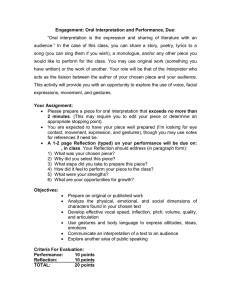

Tactile Hand Gesture Recognition through Haptic Feedback for Affective Online Communication


Hae Youn Joung and Ellen Yi-Luen Do

College of Architecture

Georgia Institute of Technology

247 4th St Atlanta, GA 30332, USA

{joannejoung, ellendo}@gatech.edu

Abstract. Our study explores how individuals communicate emotions using tactile hand gestures. It provides evidence supporting the link between emotions and gestures, in order to investigate the usability of tactile hand gestures for emotional online communication. Tactile hand gestures serve as the source of information from which emotions are inferred. In this study, behavioral aspects of tactile hand gestures used for emotional interaction are observed through a sensory input device and analyzed using analysis of variance (ANOVA). In user experiments, subjects perform tactile hand gestures on the sensory input device in response to a list of distinct emotions (i.e., excited, happy, relaxed, sleepy, tired, lonely, angry and alarmed). An analytical method is used to recognize gestures in terms of signal parameters such as intensity, temporal frequency, spatial frequency and pattern correlation. We found that different emotions are statistically associated with different tactile hand gestures. This research introduces a new way of creating online emotional communication devices that approximate the use of natural tactile hand gestures in face-to-face communication.

Keywords: Tactile hand gesture recognition, affective communication, haptic interface, tactile stimulation.

1 Introduction

Touch, central to emotional communication, is the simplest and most straightforward of all sensory systems [1], and it has been described as the most fundamental means by which people make contact with the world [2]. It is also capable of communicating and eliciting emotions [3]. Tactile gestures exhibit the natural capability and tendency of humans to move their hands to express and communicate emotions; in emotional communication in particular, tactile hand gestures arouse human emotions through touch. In face-to-face communication, gestures cover a wide range of non-verbal communication, including body language, facial expressions, hand gestures, and sign language. Among these types of gestures, tactile hand gestures are the form of communication that uses the sensory modality of touch. Tactile hand gestures are a natural social tool of expressive behavior that conveys emotions and situations in human social life. A gesture is a motion of the body that contains information [4]. For example, soothing someone's arm is a tactile gesture: we can communicate an emotion through the act of soothing. Such tactile hand gestures are commonly used in face-to-face communication; their use, however, is limited in online communication environments.

HCI International 2011, Volume 6, LNCS 6766, 2011. © Springer-Verlag Berlin Heidelberg

To enable online communication that approximates face-to-face interaction, human-computer interaction (HCI) and affective computing researchers have converted emotional messages into text, voice, and video [5], [6]. In these studies, the authors discuss how an integrated physical, intellectual and social experience can be incorporated into design for emotional interaction. Other researchers have designed and developed a mobile emotional messaging system named eMoto to enable affective loop experiences [7]. These previous studies in affective computing indicate that communication design should aim at embodied interaction that harmonizes with our everyday practices and bodily experiences.

Expressive hand gestures have also been used as a way of expressing emotions while controlling a device, and this literature focuses on tactile interfaces and haptic devices. The 'pin alarm' by Hellman and Ypma lets a person set the wake-up time through meaningful expressive actions: pushing as many pins as possible indicates that you want a lot of sleep, whereas pushing them one by one indicates a more urgent situation. Yet the design needs additional feedback to communicate understanding. Other designs have snooze buttons with pressure sensors that invite pushing, stroking or slamming; yet these expressive actions offer no feedback to the person [8].

Recently, researchers have also employed various sensory modalities to enable affective online communication through interactive media. In particular, researchers have developed a wearable tactile interface that attempts to encourage people to share their emotions seamlessly through online digital communication. These emotional communication techniques, however, require an explicit conversion from emotions into predefined formats such as human or computer languages and symbols. In contrast, tactile hand gestures are a natural and intuitive way to express personal emotions and situations [9].

Our research focuses on tactile hand gestures for use in affective online communication. We are interested in determining how emotions affect the motion of tactile hand gestures and how different emotions can be coded as tactile hand gestures in devices for online communication. The aim of this study is to better understand the relationship between hand gestures and emotions; in particular, we expect this research to determine the relationships between tactile hand gestures and specific emotions. Finally, we suggest applying this research to a prototype emotional communication device as future work. We expect that validating such a prototype will show that gesture-to-emotion conversion can enable emotional interaction between people at a distance through a new medium. The authors argue that emotions and actions are closely intertwined and address the following question: can we communicate and decode distinct emotions with tactile hand gestures through online communication devices in a way similar to face-to-face communication?


2 Approach

The aim of this study is to analyze the relationship between human emotions and the corresponding tactile hand gestures, especially those performed with the fingers. To analyze this relationship, we obtain tactile hand gesture signals by asking participants to express their emotions while holding and interacting with the wearable tactile user interface device shown in Fig. 1. The device imitates the shape of a human arm, so that participants perform gestures such as dragging, shaking and squeezing as they would when expressing emotions on a human arm.

Participants are asked to perform tactile hand gestures on the interface device in response to distinct emotions (i.e., excited, happy, relaxed, sleepy, tired, lonely, angry and alarmed) requested by the researchers. This list of emotions is taken from Russell's dimensional model of emotions [9], described in Section 2.1; Russell's model is widely used for emotion classification in emotion research and affective science. The tactile interface device records the hand gestures exerted on it in terms of four signal parameters: intensity, temporal frequency, spatial frequency and pattern correlation. Distinct tactile hand gestures are characterized by a combination of these four signal properties, described in Section 2.2.

Fig. 1. Proposed wearable tactile interface device incorporating touch sensor arrays.

We analyze the recorded hand gestures and their corresponding emotions using multivariate analysis of variance (MANOVA) to find the statistical relationship between the participants' emotions and their tactile hand gestures. The resulting relationships are summarized as a look-up table for potential applications such as online emotional communication.

2.1 Types of Emotions

Theories of emotion are mostly based on cognitive psychology, with contributions from learning theory, physiological psychology, and other disciplines including philosophy [10]. Among the many proposed models of emotion, the emotional states of the participants in our research are defined using Russell's dimensional model, the circumplex model of affect, shown in Fig. 2. In this model, Russell provides a mental map of how emotions are distributed in a two-dimensional coordinate system in which the x-axis is valence and the y-axis is the degree of arousal, so that emotions are categorized in terms of pleasure and arousal:

• Pleasure: mental state of being positive.
• Arousal: mental state of being awake or reactive to stimuli.

Fig. 2. Russell’s dimensional model of emotions.
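The two axes can be made concrete with a small Python sketch that places the eight emotions used in this study on the unit circle and reads off each one's pleasure/arousal quadrant. The angles are a hypothetical, idealized placement (evenly spaced 45° apart, in the clockwise order excited, happy, relaxed, sleepy, tired, lonely, angry, alarmed); they are not coordinates measured from Fig. 2 or Fig. 4, and `EMOTION_ANGLES_DEG`, `circumplex_coordinates` and `quadrant` are illustrative names.

```python
import math
from typing import Tuple

# HYPOTHETICAL idealized placement on the circumplex (degrees, counter-clockwise
# from the positive valence axis). Illustrative only; not read from Fig. 2 or Fig. 4.
EMOTION_ANGLES_DEG = {
    "excited": 67.5, "happy": 22.5, "relaxed": 337.5, "sleepy": 292.5,
    "tired": 247.5, "lonely": 202.5, "angry": 157.5, "alarmed": 112.5,
}


def circumplex_coordinates(angle_deg: float) -> Tuple[float, float]:
    """Return (valence, arousal): x-axis is valence (pleasure), y-axis is arousal."""
    rad = math.radians(angle_deg)
    return math.cos(rad), math.sin(rad)


def quadrant(emotion: str) -> str:
    """Describe an emotion by the signs of its valence and arousal coordinates."""
    valence, arousal = circumplex_coordinates(EMOTION_ANGLES_DEG[emotion])
    a = "high arousal" if arousal > 0 else "low arousal"
    v = "pleasure" if valence > 0 else "displeasure"
    return f"{a}, {v}"


if __name__ == "__main__":
    for name in EMOTION_ANGLES_DEG:
        print(f"{name:8s} -> {quadrant(name)}")
```

Under this assumed placement, excited, happy, angry and alarmed fall in the upper half-plane, matching the high-arousal group discussed in Section 3.2.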

2.2 Model of Tactile Hand Gestures

Gestures are defined as nonverbal phrases of actions [11] that convey explicit, symbolic or representational cues revealing cognitive properties [12]. Gestures span a wide range of nonverbal human communication, including body language, facial expressions, hand gestures, and sign language. To define the types of tactile hand gesture, we propose a new model consisting of the four tactile signal properties summarized below.

• Intensity: the degree of contact pressure.
• Temporal frequency: the number of occurrences of contact per unit time.
• Spatial frequency: the number of occurrences of contact per unit distance.
• Pattern correlation: the vector correlation between input gestures and pattern symbols such as circle, cross, line, etc.
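The properties above are defined only in words. A minimal sketch of how the first three might be computed, assuming a single-finger recording delivered as timestamped samples of contact position (mm) and pressure level (0 to 255), and taking a "contact occurrence" to be a touch-down event. `Sample`, `signal_properties` and these operational definitions are our assumptions, not the authors' implementation; pattern correlation requires a template comparison and is not computed here.

```python
from dataclasses import dataclass
from math import hypot, nan
from typing import Dict, Sequence

MAX_LEVEL = 255  # the panel reports pressure in 256 levels (0..255)


@dataclass
class Sample:
    t: float       # timestamp in seconds
    x: float       # contact position in mm (meaningless when pressure == 0)
    y: float       # contact position in mm
    pressure: int  # 0 = no contact, 1..255 = contact pressure level


def signal_properties(samples: Sequence[Sample]) -> Dict[str, float]:
    """HYPOTHETICAL estimates of intensity, temporal and spatial frequency
    for one single-finger gesture recording."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    duration = samples[-1].t - samples[0].t
    touching = [s for s in samples if s.pressure > 0]

    # Intensity: mean pressure over pressed samples, normalized to [0, 1].
    intensity = (sum(s.pressure for s in touching) / len(touching) / MAX_LEVEL
                 if touching else 0.0)

    # Contact occurrence = touch-down: a pressed sample whose predecessor was
    # not pressed (or which starts the recording).
    onsets = sum(
        1 for prev, cur in zip([None, *samples], samples)
        if cur.pressure > 0 and (prev is None or prev.pressure == 0)
    )

    # Distance (mm) between consecutive pressed samples, including the jump
    # from one touch to the next.
    distance = sum(hypot(b.x - a.x, b.y - a.y) for a, b in zip(touching, touching[1:]))

    return {
        "intensity": intensity,
        # contacts per second; nan if the recording has zero duration
        "temporal_frequency": onsets / duration if duration > 0 else nan,
        # contacts per mm travelled; nan if the finger never moved
        "spatial_frequency": onsets / distance if distance > 0 else nan,
    }
```

Treating lift-off as "no contact" makes the two frequencies count discrete taps or strokes; a continuous stroke counts as a single occurrence however long it lasts.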


2.3 Mapping between Emotion and Tactile Hand Gesture

This study aims to observe which types of hand gestures participants perform in a particular emotional situation and to obtain statistically significant data supporting the feasibility of communicating through tactile hand gestures in online environments. Participants' tactile hand gesture inputs in the experiments are critical for the analysis of human emotions and, furthermore, for developing our tactile communication device. Sections 2.1 and 2.2 define the model parameters of emotions and gestures. Based on these models, the statistical relationship between emotional parameters and the corresponding gestures is determined using analysis of variance (ANOVA), a collection of statistical models. The resulting data are used to derive a model-based statistical parametric mapping between the emotional domain and the tactile hand gesture domain.

3 Experiments

Behavioral aspects of tactile hand gestures used for emotional interaction are observed and analyzed using the wearable tactile interface device shown in Fig. 1. In response to a list of distinct emotions (i.e., excited, happy, relaxed, sleepy, tired, lonely, angry and alarmed), subjects perform tactile hand gestures on the tactile interface device. Because we aim to involve the subjects emotionally in a physical sense, it is important that the elicited gestures are not singular, iconic or symbolic gestures, but gestures that give rise to a physical experience that harmonizes with what the user is trying to express.

The wearable tactile interface device is designed to recognize gestures in terms of four sensory parameters: intensity, temporal frequency, spatial frequency, and pattern correlation. The device incorporates a multi-touch panel (touch area: 52 × 35 mm²) and its associated electronics (controller board), shown in Fig. 3. The controller board transfers the x-y coordinates and intensity data of contact points to the analysis software, which analyzes the obtained pressure data for the correlation between emotions and gestures. The groups in the gesture domain are then mapped to those in the emotional domain.

Fig. 3. Multi-Touch demonstration and evaluation kit.


Fig. 4. Eight distinct emotions indicated on Russell's emotion chart.

3.1 Setup

The sample consists of 70 participants (35 female, 35 male), aged 20 to 40 years (mean age 23.15 years), recruited from libraries at the Georgia Institute of Technology and public libraries in Georgia. Participants are selected from people who actively use hand gestures to express their emotions. Each participant is asked to express the eight types of emotion, with 10 seconds given for each gestural expression; the experiment takes 10 minutes per participant.

The wearable tactile sensor interface, with pressure sensor arrays implemented on its surface, is used as the sensory input device. The researcher first introduces Russell's dimensional model of emotions to the participants. Participants then express the eight distinct emotional states indicated on Russell's emotion chart (Fig. 4), namely (1) excited, (2) happy, (3) relaxed, (4) sleepy, (5) tired, (6) lonely, (7) angry and (8) alarmed, each characterized by the parameters of Russell's dimensional model, by applying finger gestures to the tactile interface device (for example, soothing, pummeling, hitting and squeezing). The pressure data obtained from the participants' gestures are then analyzed using ANOVA. Fig. 5 shows finger pressure points detected by the tactile interface device, which measures pressure by detecting the contact area: based on the pressed area, the sensor calculates pressure in 256 levels. The device can also monitor the positions of finger contact points, and multi-finger movements are tracked with cursors.
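The panel converts contact area into one of 256 pressure levels, but its calibration is not given. A minimal sketch, assuming a linear relation between pressed area and pressure that saturates at a hypothetical full-scale area; `FULL_SCALE_AREA_MM2 = 120.0` and `area_to_level` are illustrative, not a specification of the device.

```python
# HYPOTHETICAL full-scale contact area; the paper does not give the panel's calibration.
FULL_SCALE_AREA_MM2 = 120.0
LEVELS = 256  # pressure levels reported by the panel (0..255)


def area_to_level(area_mm2: float, full_scale_mm2: float = FULL_SCALE_AREA_MM2) -> int:
    """Quantize a contact area (mm^2) into one of LEVELS pressure levels.

    Assumes pressure grows linearly with pressed area and saturates at
    full_scale_mm2; a real sensor would apply its own calibration curve.
    """
    if full_scale_mm2 <= 0:
        raise ValueError("full_scale_mm2 must be positive")
    fraction = min(max(area_mm2 / full_scale_mm2, 0.0), 1.0)  # clamp to [0, 1]
    return round(fraction * (LEVELS - 1))
```

The linear model is only the simplest choice: a firmer press flattens the fingertip and enlarges the contact patch, but that relation is generally nonlinear and finger-dependent.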


Fig. 5. Contact area based pressure detection of the touch sensor panel.

3.2 Results

The user study with the wearable tactile sensor device provides evidence of a relationship between tactile hand gestures and emotions. To assess this relationship, we examined the association between the signal properties of tactile gestures and the emotional states by performing ANOVA on the recorded tactile data.

A balanced one-way ANOVA of the tactile gesture sample data was performed for each signal property: (a) intensity, (b) temporal frequency, (c) spatial frequency and (d) pattern correlation. The very small p-values obtained for all four properties (p = 2.08e-82, p = 1.76e-111, p = 4.88e-13 and p = 3.99e-4, respectively) indicate that, for each property, at least one emotion's sample mean differs significantly from the other emotions' sample means. An emotion whose sample mean differs significantly from the others can be statistically distinguished and decoded from the other emotional states by observing the corresponding property of the tactile gesture data. As shown in Table 1, the mean intensity of the gestures for the angry emotion (0.53) is higher than that of any other emotion, and the mean temporal frequency for the alarmed emotion (3.1) is higher than that of any other emotion. Spatial frequency and pattern correlation, however, show less pronounced differences in their means across emotions.
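For readers less familiar with the test, a minimal sketch of the one-way ANOVA F statistic in plain Python. It compares the spread of the emotion-group means (between-group variance) with the spread of samples inside each group (within-group variance). The function name and the input layout, one list of property values per emotion, are our own assumptions, not the authors' analysis code.

```python
from math import inf
from statistics import fmean
from typing import Sequence, Tuple


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA over k groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need >= 2 non-empty groups and more observations than groups")
    grand_mean = fmean(x for g in groups for x in g)
    means = [fmean(g) for g in groups]
    # Between-group sum of squares: how far each group mean lies from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: how far each observation lies from its group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    if ss_within == 0:
        return inf, df_between, df_within  # no within-group spread at all
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Toy data: three groups with clearly separated means.
    print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)
```

The p-value is the upper-tail probability of the F distribution with (df_between, df_within) degrees of freedom; SciPy's `scipy.stats.f_oneway` returns both F and p directly. If each of the 70 participants contributed exactly one sample per emotion (the text does not say so), the balanced design would have 8 groups of 70 samples and df = (7, 552).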

Each two-dimensional scatter plot in Fig. 6 displays the gesture sample data for two of the four tactile signal properties. As shown in Fig. 6(a), the emotion group of (1) excited, (2) happy, (7) angry and (8) alarmed, which corresponds to high arousal in Russell's dimensional model, is associated with gestures of high intensity and high temporal frequency. In Fig. 6(b), the distributions of gesture samples in spatial frequency do not vary much among emotions; however, gesture samples associated with the happy emotion lie lower in spatial frequency than those associated with excited, angry and alarmed. Fig. 6(c) shows that the emotion group of relaxed, sleepy, tired and lonely is associated with patterned gestures such as drawing circles and lines. By observing pattern correlation values, this emotion group can be distinguished from the others. However, accurate decoding by this route requires detailed pattern analysis, which is left for future work.
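Pattern correlation is defined in Section 2.2 as a vector correlation between an input gesture and pattern symbols such as circles and lines. One simple way to compute such a score, sketched here under stated assumptions, is to resample the gesture trajectory and the template to the same number of points along their arc length, center both, and take the cosine similarity of the stacked coordinates. This resampling scheme and the `CIRCLE` and `LINE` templates are hypothetical choices, not the method used in the study; a practical recognizer would also have to handle rotation, drawing direction and starting point, which this sketch does not.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def resample(path: Sequence[Point], n: int = 32) -> List[Point]:
    """Resample a polyline to n points evenly spaced along its arc length."""
    if not path or n < 2:
        raise ValueError("need a non-empty path and n >= 2")
    seg = [math.dist(a, b) for a, b in zip(path, path[1:])]
    total = sum(seg)
    if total == 0:
        return [tuple(path[0])] * n
    out: List[Point] = []
    i, acc = 0, 0.0  # current segment index, arc length before that segment
    for k in range(n):
        target = total * k / (n - 1)
        while i < len(seg) - 1 and acc + seg[i] < target:
            acc += seg[i]
            i += 1
        frac = (target - acc) / seg[i] if seg[i] > 0 else 0.0
        frac = min(max(frac, 0.0), 1.0)
        (x0, y0), (x1, y1) = path[i], path[i + 1]
        out.append((x0 + frac * (x1 - x0), y0 + frac * (y1 - y0)))
    return out


def _centered(pts: Sequence[Point]) -> List[Point]:
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    return [(x - cx, y - cy) for x, y in pts]


def pattern_correlation(path: Sequence[Point], template: Sequence[Point], n: int = 32) -> float:
    """Cosine similarity (-1..1) of centered, resampled point vectors.
    Invariant to translation and uniform scale, NOT to rotation or start point."""
    p = _centered(resample(path, n))
    q = _centered(resample(template, n))
    dot = sum(px * qx + py * qy for (px, py), (qx, qy) in zip(p, q))
    norm_p = math.sqrt(sum(x * x + y * y for x, y in p))
    norm_q = math.sqrt(sum(x * x + y * y for x, y in q))
    return dot / (norm_p * norm_q) if norm_p > 0 and norm_q > 0 else 0.0


# HYPOTHETICAL pattern symbols: a unit circle (counter-clockwise from (1, 0))
# and a horizontal line drawn left to right.
CIRCLE = [(math.cos(2 * math.pi * k / 64), math.sin(2 * math.pi * k / 64)) for k in range(65)]
LINE = [(k / 64, 0.0) for k in range(65)]
```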

Fig. 6. Tactile gesture scatter plots for (1) excited; (2) happy; (3) relaxed; (4) sleepy; (5) tired; (6) lonely; (7) angry; and (8) alarmed: (a) intensity vs. temporal frequency; (b) temporal frequency vs. spatial frequency; (c) intensity vs. pattern correlation.

The emotional state of anger, which corresponds to high arousal and displeasure in Russell's dimensional model, is related to tactile signals of high intensity and low temporal frequency. The results also show that the emotional state of happiness, which combines high arousal and pleasure, corresponds to tactile signals of high spatial frequency and high temporal frequency. In addition, hand gestures last longer when people express amplified emotional states than when they express subtle ones.

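Table 1. Mean (μ) and standard deviation (σ) of tactile gesture samples.

| Emotion | Intensity μ | Intensity σ | Temporal Freq. μ | Temporal Freq. σ | Spatial Freq. μ | Spatial Freq. σ | Pattern Correlation μ | Pattern Correlation σ |
|---|---|---|---|---|---|---|---|---|
| Excited | 0.41 | 0.11 | 2.7 | 0.90 | 1.8 | 0.81 | 0.19 | 0.13 |
| Happy | 0.17 | 0.16 | 2.3 | 0.69 | 1.4 | 0.72 | 0.21 | 0.12 |
| Relaxed | 0.052 | 0.024 | 0.50 | 0.40 | 1.7 | 0.58 | 0.26 | 0.19 |
| Sleepy | 0.049 | 0.030 | 0.31 | 0.20 | 1.4 | 0.44 | 0.29 | 0.18 |
| Tired | 0.054 | 0.044 | 0.31 | 0.17 | 1.3 | 0.62 | 0.24 | 0.19 |
| Lonely | 0.074 | 0.040 | 0.37 | 0.21 | 1.5 | 0.54 | 0.34 | 0.19 |
| Angry | 0.53 | 0.21 | 1.3 | 0.55 | 2.1 | 0.55 | 0.23 | 0.18 |
| Alarmed | 0.39 | 0.15 | 3.1 | 0.67 | 2.2 | 0.69 | 0.19 | 0.12 |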
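Section 2 proposes summarizing the results as a look-up table but does not specify how a new gesture would be decoded against it. A minimal sketch of one option, a nearest-profile rule of our own choosing rather than the authors' method: each feature is standardized by the per-emotion mean and standard deviation from Table 1, and the emotion with the smallest sum of squared z-scores is returned. It assumes input features are expressed in the same (unstated) units as Table 1 and ignores correlations between properties; `TABLE_1` and `decode_emotion` are illustrative names.

```python
from typing import Dict, List, Sequence, Tuple

# (mean, std) per property, in the order: intensity, temporal frequency,
# spatial frequency, pattern correlation. Values copied from Table 1.
TABLE_1: Dict[str, List[Tuple[float, float]]] = {
    "excited": [(0.41, 0.11), (2.7, 0.90), (1.8, 0.81), (0.19, 0.13)],
    "happy":   [(0.17, 0.16), (2.3, 0.69), (1.4, 0.72), (0.21, 0.12)],
    "relaxed": [(0.052, 0.024), (0.50, 0.40), (1.7, 0.58), (0.26, 0.19)],
    "sleepy":  [(0.049, 0.030), (0.31, 0.20), (1.4, 0.44), (0.29, 0.18)],
    "tired":   [(0.054, 0.044), (0.31, 0.17), (1.3, 0.62), (0.24, 0.19)],
    "lonely":  [(0.074, 0.040), (0.37, 0.21), (1.5, 0.54), (0.34, 0.19)],
    "angry":   [(0.53, 0.21), (1.3, 0.55), (2.1, 0.55), (0.23, 0.18)],
    "alarmed": [(0.39, 0.15), (3.1, 0.67), (2.2, 0.69), (0.19, 0.12)],
}


def standardized_distance(features: Sequence[float],
                          profile: Sequence[Tuple[float, float]]) -> float:
    """Sum of squared z-scores of the features under one emotion's (mean, std) profile."""
    return sum(((x - mu) / sigma) ** 2 for x, (mu, sigma) in zip(features, profile))


def decode_emotion(features: Sequence[float]) -> str:
    """HYPOTHETICAL nearest-profile decoder: emotion with the smallest distance."""
    if len(features) != 4:
        raise ValueError("expected 4 features")
    return min(TABLE_1, key=lambda e: standardized_distance(features, TABLE_1[e]))
```

For example, `decode_emotion([0.6, 1.2, 2.0, 0.2])` returns `"angry"`: its high intensity rules out the low-arousal profiles, and its moderate temporal frequency fits angry far better than excited or alarmed.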

Conclusion

In this research, tactile hand gestures are categorized in terms of tactile signal properties and related to the distinct emotions from which they originate. The findings of this investigation show the promise of using tactile hand gestures to communicate emotions between people at a distance through online digital communication devices. From the user experiments, we found that different emotions are statistically associated with different tactile hand gestures. However, pattern correlation as a tactile gesture property needs further investigation to uncover more gesture information and to better define the gesture-emotion relation. In future work, the relationship between tactile hand gestures and emotions will be applied to emotional online communication devices, and a prototype design of a wearable online tactile communication device will be demonstrated.

References

1. Geldard, F.A.: Some neglected possibilities of communication. Science, vol. 131, pp. 1583--1588 (1960)
2. Barnett, K.: A theoretical construct of the concepts of touch as they relate to nursing. Nursing Research, vol. 21, pp. 102--110 (1972)
3. Wolff, P.H.: Observations on the early development of smiling. In: Foss, B.M. (ed.) Determinants of Infant Behavior II, pp. 113--138. Methuen & Co, London (1963)
4. Kurtenbach, G., Hulteen, E.A.: Gestures in Human-Computer Communication. In: Laurel, B., Mountford, S.J. (eds.) The Art of Human-Computer Interface Design, pp. 309--317. Addison-Wesley, MA (1990)
5. Dourish, P.: Where the Action Is: The Foundations of Embodied Interaction. MIT Press, Cambridge, MA (2001)
6. Moen, J.: Aesthetic movement interaction: designing for the pleasure of motion. Doctoral Thesis, KTH, Numerical Analysis and Computer Science, Stockholm, Sweden (2006)
7. Sundström, P., Ståhl, A., Höök, K.: In situ informants exploring an emotional mobile messaging system in their everyday practice. Int. J. Human-Computer Studies (2007)
8. Wensveen, S., Overbeeke, K., Djajadiningrat, T.: Touch Me, Hit Me and I Know How You Feel: A Design Approach to Emotionally Rich Interaction. In: 3rd Conference on Designing Interactive Systems: Processes, Practices, Methods, and Techniques (2000)
9. Russell, J.A.: A circumplex model of affect. Journal of Personality and Social Psychology, vol. 39, pp. 1161--1178 (1980)
10. Lewis, M.: Bridging emotion theory and neurobiology through dynamic systems modeling. Behavioral and Brain Sciences, vol. 28, pp. 169--245 (2005)
11. Fast, J.: Body Language. M. Evans & Company, New York (1988)
12. Goldin-Meadow, S.: Hearing Gesture: How Our Hands Help Us Think. Belknap Press of Harvard University Press, Cambridge, MA (2003)
