Dynamic Restarts Optimal Randomized Restart Policies with Observation

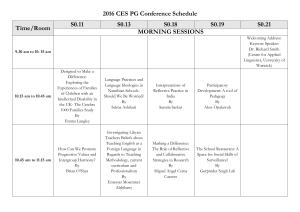

Henry Kautz, Eric Horvitz, Yongshao Ruan, Carla Gomes and Bart Selman

Outline
- Background
- Optimal strategies to improve expected time to solution using
  - heavy-tailed run-time distributions of backtracking search
  - restart policies
  - observation of solver behavior during particular runs
  - predictive model of solver performance
- Empirical results

Backtracking Search
- Backtracking search algorithms often exhibit a remarkable variability in performance among:
  - slightly different problem instances
  - slightly different heuristics
  - different runs of randomized heuristics
- Problematic for practical application
  - Verification, scheduling, planning

Heavy-tailed Runtime Distributions
- Observation (Gomes 1997): distributions of runtimes of backtrack solvers often have heavy tails
  - infinite mean and variance
  - probability of long runs decays by power law (Pareto-Lévy), rather than exponentially (Normal)

[Figure: runtime distribution, with the axis running from "Very short" to "Very long"]

Formal Models of Heavy-tailed Behavior
- Imbalanced tree search models (Chen 2001)
  - Exponentially growing subtrees occur with exponentially decreasing probabilities
- Heavy-tailed runtime distribution can arise in backtrack search for imbalanced models with appropriate parameters p and b
  - p is the probability of the branching heuristics making an error
  - b is the branch factor

Randomized Restarts
- Solution: randomize the systematic solver
  - Add noise to the heuristic branching (variable choice) function
  - Cutoff and restart search after some number of steps
- Provably eliminates heavy tails
- Effective whenever search stagnates
  - Even if RTD is not formally heavy-tailed!
- Used by all state-of-the-art SAT engines
  - Chaff, GRASP, BerkMin
  - Superscalar processor verification

Complete Knowledge of RTD
- Luby (1993): Optimal policy uses fixed cutoff

$$T^* = \arg\min_t E(T_t)$$

where $E(T_t)$ is the expected time to solution restarting every $t$ steps:

$$E(T_t) = \frac{t\,\bigl(1 - P(T \le t)\bigr)}{P(T \le t)} + E(T \mid T \le t)$$

where $T$ is the length of a single complete run (without cutoff)

[Figure: RTD D, P(t) versus t, with the optimal cutoff T* marked]

No Knowledge of RTD
- Luby (1993): Universal sequence of cutoffs 1, 1, 2, 1, 1, 2, 4, ... is within O(log T*) of the optimal policy for the unknown distribution
- In practice: 1-2 orders of magnitude
- Open cases:
  - Partial knowledge of RTD (CP 2002)
  - Additional knowledge beyond RTD

Example: Runtime Observations
- Idea: use observations of early progress of a run to induce finer-grained RTDs
- What is the optimal policy, given the original and component RTDs, and a classification of each run?
  - Lazy: use static optimal cutoff for combined RTD
  - Naïve: use static optimal cutoff for each RTD

[Figure: P(t) versus t for the combined RTD D and the component RTDs D1 and D2, with cutoffs T*, T1* and T2* marked]

Results
- Method for inducing component distributions using
  - Bayesian learning on traces of solver
  - Resampling & Runtime Observations
- Optimal policy where observation assigns each run to a component distribution
- Conditions under which optimal policy prunes one (or more) distributions
- Empirical demonstration of speedup

I. Learning to Predict Solver Performance

Formulation of Learning Problem
- Consider a burst of evidence over observation horizon
- Learn a runtime predictive model using supervised learning

[Figure: run-time axis showing the observation horizon and the median run time that separates short runs from long runs]

Horvitz, et al.
UAI 2001

Runtime Features
- Solver instrumented to record at each choice (branch) point:
  - SAT & CSP generic features: number of free variables, depth of tree, amount of unit propagation, number of backtracks, …
  - CSP domain-specific features (QCP): degree of balance of uncolored squares, …
- Gather statistics over 10 choice points:
  - initial / final / average values
  - 1st and 2nd derivatives
- SAT: 127 variables, CSP: 135 variables

Learning a Predictive Model
- Training data: samples from original RTD labeled by (summary features, length of run)
- Learn a decision tree that predicts whether the current run will complete in less than the median run time
- 65% - 90% accuracy

Generating Distributions by Resampling the Training Data
- Reasons:
  - The predictive models are imperfect
  - Analyses that include a layer of error analysis for the imperfect model are cumbersome
- Resampling the training data:
  - Use the inferred decision trees to define different classes
  - Relabel the training data according to these classes

Creating Labels
- The decision tree reduces all the observed features to a single evidential feature F
- F can be:
  - Binary valued
    - Indicates prediction: shorter than median runtime?
  - Multi-valued
    - Indicates the particular leaf of the decision tree that is reached when the trace of a partial run is classified

Result
- Decision tree can be used to precisely classify new runs as random samples from the induced RTDs

[Figure: P(t) versus t for D with the median marked; after the observation ("Make Observation"), runs are assigned to an induced RTD according to the observed value of F]

II.
Creating Optimal Control Policies

Control Policies
- Problem Statement:
  - A process generates runs randomly from a known RTD
  - After the run has completed K steps, we may observe features of the run
  - We may stop a run at any point
  - Goal: Minimize expected time to solution
- Note: using induced component RTDs implies that runs are statistically independent
  - Optimal policy is stationary

Optimal Policies
- Optimal restart policy for using a binary feature F is of the form:
  - (1) Set cutoff to $T_1$, for a fixed $T_1 \le T_{Obs}$
- or of the form:
  - (2) Wait for $T_{Obs}$ steps, then observe F; if F holds, then use cutoff $T_1$, else use cutoff $T_2$, for appropriate constants $T_1$, $T_2$
- Straightforward generalization to multi-valued features

Case (2): Determining Optimal Cutoffs

$$(T_1^{**}, T_2^{**}, \ldots) = \arg\min_{T_i} E(T_1, T_2, \ldots) = \arg\min_{T_i} \frac{\sum_i d_i \left(T_i - \sum_{t < T_i} q_i(t)\right)}{\sum_i d_i\, q_i(T_i)}$$

where $d_i$ is the probability of observing the $i$-th value of F, and $q_i(t)$ is the probability that a run with the $i$-th value of F succeeds in $t$ or fewer steps

Optimal Pruning
- Runs from component $D_2$ should be pruned (terminated) immediately after observation when, for all $\delta > 0$:

$$E(T_1, T_{Obs}) < E(T_1, T_{Obs} + \delta)$$

- Equivalently:

$$\frac{\delta - \sum_{T_{Obs} \le t < T_{Obs} + \delta} q_2(t)}{q_2(T_{Obs} + \delta) - q_2(T_{Obs})} > E(T_1, T_{Obs})$$

- Note: does not depend on priors on $D_i$

III.
Empirical Evaluation

Backtracking Problem Solvers
- Randomized SAT solver
  - Satz-Rand, a randomized version of Satz (Li 1997)
  - DPLL with 1-step lookahead
  - Randomization with noise parameter for increasing variable choices
- Randomized CSP solver
  - Specialized CSP solver for QCP
  - ILOG constraint programming library
  - Variable choice, variant of Brelaz heuristic

Domains
- Quasigroup With Holes
- Graph Coloring
- Logistics Planning (SATPLAN)

Dynamic Restart Policies
- Binary dynamic policies
  - Runs are classified as either having short or long run-time distributions
- N-ary dynamic policies
  - Each leaf in the decision tree is considered as defining a distinct distribution

Policies for Comparison
- Luby optimal fixed cutoff
  - For original combined distribution
- Luby universal policy
- Binary naïve policy
  - Select distinct, separately optimal fixed cutoffs for the long and for the short distributions

Illustration of Cutoffs

[Figure: P(t) versus t for D, D1 and D2, with the observation point ("Make Observation") and the cutoffs T*, T1*, T2*, T1** and T2** marked]

Comparative Results
- Improvement of dynamic policies over Luby fixed optimal cutoff policy is 40% to 65%

Expected Runtime (Choice Points)

| Policy | QCP (CSP) | QCP (Satz) | Graph Coloring (Satz) | Planning (Satz) |
|---|---|---|---|---|
| Dynamic n-ary | 3,295 | 8,962 | 9,499 | 5,099 |
| Dynamic binary | 5,220 | 11,959 | 10,157 | 5,366 |
| Fixed optimal | 6,534 | 12,551 | 14,669 | 6,402 |
| Binary naïve | 17,617 | 12,055 | 14,669 | 6,962 |
| Universal | 12,804 | 29,320 | 38,623 | 17,359 |
| Median (no cutoff) | 69,046 | 48,244 | 39,598 | 25,255 |

Cutoffs: Graph Coloring (Satz)
- Dynamic n-ary: 10, 430, 10, 345, 10, 10
- Dynamic binary: 455, 10
- Binary naïve: 342, 500
- Fixed optimal: 363

Discussion
- Most optimal policies turned out to prune runs
- Policy construction is independent of run classification; other learning techniques may be used
- Does not require highly accurate prediction!
- Widely applicable

Limitations
- Analysis does not apply in cases where runs are statistically dependent
- Example:
  - We begin with 2 or more RTDs
    - E.g., the RTDs of SAT and UNSAT formulas
  - Environment flips a coin to choose an RTD, and then always samples that RTD
  - We do not get to see the coin flip!
  - Now each unsuccessful run gives us information about that coin flip!

The Dependent Case
- Dependent case much harder to solve
- Ruan et al. CP-2002: “Restart Policies with Dependence among Runs: A Dynamic Programming Approach”
- Future work
  - Using RTDs of ensembles to reason about RTDs of individual problem instances
  - Learning RTDs on the fly (reinforcement learning)

Big Picture

[Diagram components: Problem Instances, Solver, static features, dynamic features, runtime, Learning / Analysis, Predictive Model, control / policy]
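
Worked sketch: restart-policy formulas

The expected-time formulas and the universal cutoff sequence from the slides can be tried out on a toy distribution. The following is a minimal Python sketch, not the authors' code. The function names, the dictionary representation of an RTD (run length in steps mapped to probability), and the toy distribution are all assumptions made for illustration. It assumes run lengths are positive integers and that runs are independent.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical RTD representation: run length in steps (>= 1) -> probability.
PMF = Dict[int, float]


def cdf(pmf: PMF, t: int) -> float:
    """q(t): probability that a run succeeds in t or fewer steps."""
    return sum(p for length, p in pmf.items() if length <= t)


def truncated_cost(pmf: PMF, cutoff: int) -> float:
    """Expected steps spent in one run that is cut off: T - sum_{t < T} q(t)."""
    return cutoff - sum(cdf(pmf, t) for t in range(cutoff))


def expected_time_fixed(pmf: PMF, cutoff: int) -> float:
    """Expected time to solution when restarting every `cutoff` steps."""
    q = cdf(pmf, cutoff)
    if q == 0:
        return float("inf")  # no run can ever succeed within this cutoff
    return truncated_cost(pmf, cutoff) / q


def optimal_fixed_cutoff(pmf: PMF) -> int:
    """Best fixed cutoff; only run lengths in the support need checking,
    because q(t) is flat between them while the cost keeps growing."""
    return min(sorted(pmf), key=lambda t: expected_time_fixed(pmf, t))


def expected_time_dynamic(
    components: Sequence[Tuple[float, PMF]], cutoffs: Sequence[int]
) -> float:
    """E(T_1, T_2, ...) for components (d_i, RTD_i) with per-component cutoffs."""
    cost = sum(d * truncated_cost(pmf, c) for (d, pmf), c in zip(components, cutoffs))
    success = sum(d * cdf(pmf, c) for (d, pmf), c in zip(components, cutoffs))
    return cost / success if success > 0 else float("inf")


def luby(i: int) -> int:
    """i-th term (1-based) of the universal sequence 1, 1, 2, 1, 1, 2, 4, ..."""
    k = 1
    while (1 << k) - 1 < i:
        k += 1
    if (1 << k) - 1 == i:
        return 1 << (k - 1)
    return luby(i - (1 << (k - 1)) + 1)


if __name__ == "__main__":
    # Toy RTD (an assumption): half the runs take 1 step, half take 100 steps.
    toy = {1: 0.5, 100: 0.5}
    print(expected_time_fixed(toy, 100))   # never cutting off a run early
    print(expected_time_fixed(toy, 1))     # restarting after every step
    print(optimal_fixed_cutoff(toy))
    print([luby(i) for i in range(1, 8)])
```

On this toy distribution, restarting after a single step gives a much lower expected time than letting every run finish, which is the effect the fixed-cutoff slide describes. The `expected_time_dynamic` function evaluates the Case (2) objective for given cutoffs only; searching over cutoffs and enforcing that each cutoff is at least the observation time are left out of this sketch.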