Protecting Wildlife under Imperfect Observation

advertisement

The Workshops of the Thirtieth AAAI Conference on Artificial Intelligence

Computer Poker and Imperfect Information Games: Technical Report WS-16-06

Protecting Wildlife under Imperfect Observation

Thanh H. Nguyen,1 Arunesh Sinha,1 Shahrzad Gholami,1 Andrew Plumptre,2

Lucas Joppa,3 Milind Tambe,1 Margaret Driciru,4 Fred Wanyama,4 Aggrey Rwetsiba,4

Rob Critchlow,4 Colin Beale4

1

{thanhhng, aruneshs, sgholami, tambe}@usc.edu , University of Southern California, Los Angeles, CA, USA

2

aplumptre@wcs.org, Wildlife Conservation Society, USA

3

lujoppa@microsoft.com, Microsoft Research

4

{margaret.driciru,fred.wanyama,aggrey.rwetsiba}@ugandawildlife.org, Uganda Wildlife Authority, Uganda

5

{rob.critchlow,colin.beale}@york.ac.uk, The University of York

Abstract

non-governmental organizations attempt to enforce effective protection of wildlife parks through well-trained park

rangers. In each time period (e.g., one month), park rangers

conduct patrols within the park area, with the aim of preventing poachers from catching animals either by catching

the poachers or by removing the animals traps laid out by

the poachers. During the rangers’ patrols, poaching signs

are collected and then can be used together with other domain features such as animal density to predict the poachers’

behaviors. In essence, learning poachers’ behaviors or anticipating where the poachers often go for poaching is critical

for the rangers to generate effective patrols.

Previous work has modeled the problem of wildlife protection as a defender-attacker SSG problem (Yang et al.

2014; Brown, Haskell, and Tambe 2014; Fang, Stone, and

Tambe 2015). SSGs have been widely applied for solving

many real-world security problems in which the defender

(e.g., security agencies) attempts to protect critical infrastructure such as airports and ports from attacks by an adversary such as terrorists (Tambe 2011; Basilico, Gatti, and

Amigoni 2009; Letchford and Vorobeychik 2011). Motivated by the success of SSG applications for infrastructure

security problems, previous work have applied SSGs for

wildlife protection and leveraged existing behavioral models of the adversary such as Quantal Response (QR) and

Subjective Utility Quantal Response (SUQR) to capture the

poachers’ behaviors (Yang et al. 2014; Brown, Haskell, and

Tambe 2014; Fang, Stone, and Tambe 2015).

However, existing behavioral models in security games

have several limitations when predicting the poachers’ behaviors. First, while these models assume all (or most) attack data is known for learning the models’ parameters, the

rangers are unable to track all poaching activities within the

park area. Since animals are silent victims of poaching, the

dataset contains only the poaching signs collected by the

rangers during their patrols and the large area of the park

does not allow for thorough patrolling of the whole forest

area. This imperfectly observed or biased data can result

in learning inaccurate behavioral models of poachers which

would mislead the rangers into conducting ineffective patrols. Second, while existing behavioral models such as

QR and SUQR are built upon discrete choice models that

posits models of a single agent making a choice. However,

in wildlife protection there are multiple attackers and it is

Wildlife poaching presents a serious extinction threat to many

animal species. In order to save wildlife in designated

wildlife parks, park rangers conduct patrols over the park area

to combat such illegal activities. An important aspect of the

patrolling activity of the rangers is to anticipate where the

poachers are likely to catch animals and then respond accordingly. Previous work has applied defender-attacker Stackelberg Security Games (SSGs) to solve the problem of wildlife

protection, wherein attacker behavioral models are used to

predict the behaviors of the poachers. However, these behavioral models have several limitations which limit their accuracy in predicting poachers’ behavior. First, existing models

fail to account for the rangers’ imperfect observations w.r.t

poaching activities (due to the limited capability of rangers

to patrol thoroughly over a vast geographical area). Second,

these models are built upon discrete choice models that assume a single agent choosing targets, while it is infeasible to

obtain information about every single attacker in wildlife protection. Third, these models do not consider the effect of past

poachers’ actions on the current poachers’ activities, one of

the key factors affecting the poachers’ behaviors.

In this work, we attempt to address these limitations while

providing three main contributions. First, we propose a novel

hierarchical behavioral model, HiBRID, to predict the poachers’ behaviors wherein the rangers’ imperfect detection of

poaching signs is taken into account — a significant advance

towards existing behavioral models in security games. Furthermore, HiBRID incorporates the temporal effect on the

poachers’ behaviors. The model also does not require a

known number of attackers. Second, we provide two new

heuristics: parameter separation and target abstraction to

reduce the computational complexity in learning the model

parameters. Finally, we use the real-world data collected in

Queen Elizabeth National Park (QENP) in Uganda over 12

years to evaluate the prediction accuracy of our new model.

Introduction

Wildlife protection is a global concern. Many species such

as tigers and rhinos, etc are in danger of extinction because

of poaching (Montesh 2013; Secretariat 2013). The extinction of animals could destroy the ecosystem, seriously affecting all living things on the earth including human beings. To prevent wildlife poaching, both governmental and

c 2016, Association for the Advancement of Artificial

Copyright Intelligence (www.aaai.org). All rights reserved.

371

i and the defender is not protecting that target, the attacker

obtains a reward Ria while the defender gets a penalty Pid .

Conversely, the attacker receives a penalty Pia while the defender achieves a reward Rid . The expected utilities of the

defender and attacker are then computed as the follows:

not possible to attribute an attack to any particular attacker.

Finally, these models were mainly applied for single-shot

security games in which the temporal effect on attacks is not

considered. Yet, in wildlife protection, the poachers repeatedly catch animals in the park, hence it is important to take

past activities into account when reasoning about the poachers’ behaviors.

In this paper, we attempt to address these limitations of

existing behavioral models while providing the following

main contributions. First, we introduce a new hierarchical behavioral model, HiBRID, which consists of two key

components: one component accounts for the poachers’ behaviors and the another component models the rangers’ imperfect detection of poaching signs. HiBRID significantly

advances the existing behavioral models in security games

by directly addressing the challenge of rangers’ imperfect

observations using a detectability component. Furthermore,

in HiBRID, we incorporate the dependence of the poachers’ behaviors on their activities in the past. Last but not

least, we adopt logistic models to formulate the two components of HiBRID that enables capturing the aggregate behavior of attackers without requiring a known number of attackers. Second, we provide two new heuristics to reduce

the computational cost of learning the HiBRID model’s parameters, namely parameter separation and target abstraction. The first heuristic uses the two components of the hierarchical model to divide the set of model parameters into

separate subsets and then iteratively learns these subsets of

parameters separately while fixing the values of the other

subsets. This heuristic decomposes the learning process into

less complicated learning components which could potentially help in speeding up the learning process. The second heuristic of target abstraction works by leveraging the

continuous spatial structure of the wildlife domain, starting

the learning process with a coarse division of forest area

and gradually using finer division instead of directly starting with finer divisions, leading to improved runtime overall

with no loss in accuracy. Third, we conduct extensive experiments to evaluate our new model based on real-world

wildlife data collected in QENP over 12 years.

Uid = ci Rid + (1 − ci )Pid

Uia = ci Pia + (1 − ci )Ria

(1)

(2)

Behavioral Models of Adversaries. In security games, different behavioral models have been proposed to capture the

attacker’s behaviors. The QR model is one of the most popular behavioral model which attempts to predict a stochastic distribution of the attacker’s responses (McFadden 1972;

McKelvey and Palfrey 1995). In general, QR predicts the

probability that the attacker will choose each target to attack

with the intuition is that the higher expected utility of a target, the more likely that the attacker will choose that target.

A more recent behavioral model, SUQR, also attempts to

predict an attacking distribution over the targets (Nguyen et

al. 2013). However, instead of relying on expected utility,

SUQR uses the subjective utility function which is a linear

combination of all features that can influence the attacker’s

behaviors.

Ûia = w1 ci + w2 Ria + w3 Pia

(3)

where (w1 , w2 , w3 ) are the key model parameters which

measure the importance of the defender’s coverage, the attacker’s reward and penalty w.r.t the attacker’s action. Based

on subjective utility, SUQR predicts the attacking probability at target i as follows:

a

eÛi

qi = P

Ûja

je

(4)

SUQR was shown to outperform QR in the context of both

infrastructure security and wildlife protection.

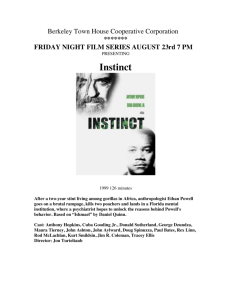

Wildlife Protection.

In

wildlife domains such as

Queen Elizabeth National

Park (QENP) in Uganda

(Figure 1), lives of many

species such as hippos, elephants, and kobis, etc are

in danger because of illegal human activities such as

poaching. The park rangers

attempt to conduct patrols

over a large geographical

area (e.g., ≈ 2500km2 in

QENP) to protect wildlife

from these illegal activities.

While the poachers aim at Figure 1: Queen Elizabeth

catching animals by setting national park

trapping tools such as snares,

the rangers try to combat

with the poachers by confiscating these trapping tools.

In order to conduct effective patrols over the park, it is

important for the rangers to anticipate where the poachers

Background & Related Work

Stackelberg Security Games. In Stackelberg security

games, there is a defender who attempts to optimally allocate her limited security resources to protect a set of targets against an adversary who tries to attack one of the targets (Tambe 2011). One key assumption of SSGs is that

the defender commits to a mixed strategy first while the attacker can observe the defender’s strategy and then take action based on that observation. A pure strategy of the defender is an assignment of her limited resources to a subset

of targets and a mixed strategy of the defender refers to a

probability distribution over all possible pure strategies. The

defender’s mixed strategies can be represented as a marginal

coverage vector over the targets (i.e., the coverage probabilities that the defender will protect each target) (Korzhyk,

Conitzer, and Parr 2010). We denote by N the number of

targets and 0 ≤ ci ≤ 1 the defender’s coverage probability at target i for i = 1 . . . N . If the attacker attacks target

372

are likely to set trapping tools. Fortunately, poaching signs

(e.g., snares) collected by the rangers while patrolling together with other domain features such as animal density,

area slope and habitat, etc can be used to learn the poachers’

behaviors. For example, if the rangers find a lot of snares

at certain locations, it indicates that the poachers often go to

those locations for poaching. However, the rangers’ capability of making observations over a large geographical area

is limited. For example, the rangers usually follow certain

paths/trails to patrol; they can only observe over the areas

around these paths/trails which means that they may not be

able to make observations in other further areas. In addition,

in areas such as dense forests, it is difficult for the rangers

to search for snares. As a result, the rangers’ observations

may be inaccurate. In other words, there may be still poaching activities happening in areas where rangers did not find

any poaching sign. Therefore, totally relying on the rangers’

observations would lead to an inaccurate prediction of the

poachers’ behaviors, deteriorating the rangers’ patrol effectiveness. Furthermore, the rangers are also unaware of the

total number of attacks happening in the park. Finally, when

modeling the poachers’ behaviors, it is critical to incorporate important aspects that affect the poachers’ behaviors

including time dependency of the poachers’ activities and

patrolling frequencies of the rangers.

Previous work in security games have modeled the problem of wildlife protection as a SSG in which the rangers play

in a role of the defender while the poachers refer to the attacker (Yang et al. 2014). The park area can be divided into

a grid where each grid cell represents a target. The rewards

and penalties of each target w.r.t the rangers and poachers

can be determined based on domain features such as animal density. Previous work then focuses on computing the

optimal patrolling strategy for the rangers given that poachers’ behaviors are predicted based on existing adversary behavioral models. However, as explained previously, these

models can not handle the challenges of observation bias

of the rangers, time dependency of the poachers’ behaviors,

as well as unknown number of attackers which hinder them

from predicting well in the wildlife domain.

In ecology research, while previous work mainly focused

on estimating the animal density (MacKenzie et al. 2002),

there is one recent work which we are aware of that attempts

to predict the behaviors of poachers based on wildlife data

which handles the challenge of imperfect observations of the

rangers (Critchlow et al. 2015). However, this work also

has several limitations. First, the proposed model does not

consider the time dependency of the poachers’ behaviors.

This model also does not consider the effect of the rangers’

patrols on poaching activities. Furthermore, the proposed

model is not well evaluated wherein the prediction accuracy

of the model is not measured. Finally, this work does not

provide any solution for generating patrolling strategies for

the rangers given the behavioral model of the poachers.

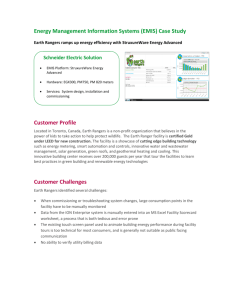

Area habitat

Ranger patrol

Attacking probability

Animal density

Area slope

Detection probability

Distance to

rivers / roads /

villages

…

Ranger observation

Figure 2: Overview of the HiBRID model

Ranger Imperfect Detection), to predict poachers’ behaviors in wildlife domain, taking into account the challenge

of rangers’ imperfect observation. Our model is outlined

in Figure 2. Overall, HiBRID consists of two layers. One

layer models the probability the poachers attack each target

wherein the temporal effect on the poachers’ behaviors is

incorporated. In this layer, a prior number of attacks is not

required to predict the poachers’ behaviors. The next layer

predicts the conditional probability of the rangers detecting

any poaching sign at a target given that the poachers attack

that target. These two layers are then integrated to predict

the final observations of the rangers.

In HiBRID, domain features such as animal density, distance to rivers/roads/villages, as well as area habitat and

slope, etc are incorporated to predict either attacking probabilities or detection probabilities or both. Furthermore, we

incorporate the effect of the rangers’ patrols on both layers, i.e., how the poachers adapt their behaviors according

to rangers’ patrols and how the rangers’ patrols determine

the rangers’ detectability of poaching signs.

Hierarchical Behavioral Model: HiBRID

We denote by T the number of time steps, N the number of

targets, and K the number of domain features. At each time

step t, each target i is associated with a set of feature values

{xkt,i } where k = 1 . . . K and xkt,i is the value of the k th

feature at (t, i). In addition, ct,i is defined as the coverage

probability of the rangers at (t, i). When the rangers patrol

target i at time step t, they have observation ot,i which takes

an integer value in {−1, 0, 1}. Specifically, ot,i = 1 indicates that the rangers observe a poaching sign at target i in

time step i, ot,i = 0 means that the rangers have no observation at target i in time step i and ot,i = −1 means that the

rangers did not patrol target i in time step i. Furthermore, we

define at,i ∈ {0, 1} as the actual action of poachers at (t, i)

which is hidden from the rangers. Specifically, at,i = 1 indicates the poachers attack target i in time step t; otherwise,

at,i = 0 means the poachers did not attack at (t, i). In this

work, we only consider the situation of attacked or not (i.e.,

at,i ∈ {0, 1}); the case of multiple-level attacks would be an

interesting direction for future work. Moreover, we mainly

focus on the problem of false negative observations, meaning that there may still exist poaching activity at locations

where the rangers found no sign of poaching. On the other

hand, we make the reasonable assumption that there is no

Behavioral Learning

In this work, we introduce a new hierarchical behavioral

model, HiBRID (Hierarchical Behavioral model against

373

i = 1…N

a t,i

λ

i = 1…N

c t,i

o t,i

a t+1,i

w

λ

Furthermore, if the poachers attack at (t, i), we predict the

probability that the rangers can detect any poaching signs as

follows:

0

ew [xt,i ,1]

(6)

p(ot,i = 1|at,i = 1, ct,i , xt,i ) = ct,i ×

0

1 + ew [xt,i ,1]

where the first term is the probability that the rangers are

present at (t, i) and the second term indicates the probability that the rangers can detect any poaching sign when patrolling at (t, i). Furthermore, w = {wk } is the (K + 1) × 1

vector of parameters which indicates the significance of domain features in affecting the rangers’ detectability. Here,

we use the same set of domain features xt,i as used in the

attack probability Equation 5, for ease of presentation, yet

the feature sets that influence the attacking probability and

detection probability may be different. For example, the distances to rivers or villages may have impact on the poachers’

behaviors but not the rangers’ detectability. Finally, we use

p(at,i = 1|at−1,i , ct,i ) and p(ot,i = 1|at,i = 1, ct,i ) as the

abbreviations of the LHSs in Equations 5 and 6.

Now, we will explain our approach for learning the parameters (λ, w) of our hierarchical model. The domain features xt,i is omitted in all equations for simplification.

c t+1,i

o t+1,i

w

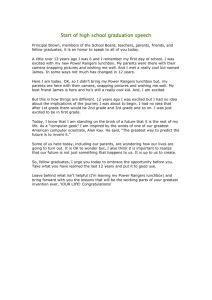

Figure 3: Graphical model representation of HiBRID

false positive observation, meaning that if the rangers found

any poaching sign at a target, poachers did attack that target. In other words, we have p(at,i = 1|ot,i = 1) = 1 and

p(ot,i = 1|at,i = 0) = 0.

The graphical model representation of HiBRID is shown

in Figure 3 wherein the directed edges indicate the dependence between elements of the model. In addition, the grey

nodes refer to known elements for the rangers such as the

rangers’ coverages and observations while the white nodes

represent the unknown elements such as the actual actions of

poachers. In HiBRID, the actual actions of poachers are considered as the latent elements. The elements λ and w are the

model parameters which we will explain later. In Figure 3,

we do not show the domain features for simplification; these

features are known elements in HiBRID. In modeling the

attacking probabilities of the poachers, we assume that the

poachers’ behaviors at,i depends on the poachers’ activities

in the past at−1,i , the rangers’ patrolling strategies ct,i , as

well as the domain features xt,i . For example, the poachers

are more likely to attack targets with high animal density and

low coverage of rangers. They may also tend to come back

to the areas where they attacked before. For modeling the

rangers’ observation uncertainty, we expect that the rangers’

observations ot,i depend on the actual actions of the poachers at,i , the rangers’ coverage probabilities ct,i and domain

features xt,i . Moreover, we adopt the logistic model to predict the poachers’ behaviors; one advantage of the logistic

model compared to the conditional logistic model, SUQR,

is that it does not assume a known number of attacker and

models probability of attack at every target independently.

Thus, given the actual action of poachers, at−1,i , at previous time step (t − 1, i), the rangers’ coverage probability

ct,i at (t, i), and the domain features xt,i = {xkt,i }, we aim

at predicting the probability that poachers attack (t, i) based

on the following logistic formulation:

Parameter Estimation

In order to estimate (λ, w), we attempt to maximize the loglikelihood that the rangers can have observations o = {ot,i }

given domain features x = {xt,i } and the rangers’ coverage

probabilities c = {ct,i } for all time steps t = 1 . . . T and

targets i = 1 . . . N which is formulated as follows:

max log p(o|c, x, λ, w) = max log

λ,w

eλ [xt,i ,ct,i ,at−1,i ,1]

1+e

λ0 [xt,i ,ct,i ,at−1,i ,1]

X

p(o, a|c, x, λ, w) (7)

a

where a = {at,i } is the vector of all actions of poachers.

Due to the presence of unobserved variables a = {at,i }, we

use the standard method, Expectation Maximization (EM),

to decompose the log-likelihood and then solve the problem.

Essentially, EM is a local search method in which given an

initial value for every model parameter, EM iteratively updates the parameter values until it reaches a local optimal

solution of (7). Each iteration of EM consists of two key

steps:

• E step: compute p(a|o, c, (λ, w)old )

P

• M step: max a p(a|o, c, (λ, w)old ) log(p(o, a|c, λ, w))

λ,w

In EM, the E (Expectation) step attempts to compute the

probability that the poachers take actions a = {at,i } given

the rangers’ observations o, the rangers’ patrols c, the domain features x, and current values of the model parameters

(λ, w). On the other hand, in the M (Maximization) step,

it tries to maximize the expectation of the logarithm of the

complete-data likelihood function given the action probabilities computed in the E step. Observe that the objective in

the M step can be split into two additive parts as follows:

0

p(at,i = 1|at−1,i , ct,i , xt,i ) =

λ,w

(5)

XX

p(at,i |o, c, (λ, w)

old

) log p(ot,i , at,i |ct,i , w)

t,i at,i

where λ = {λk } is the (K + 3) × 1 vector of parameters

which measure the importance of all factors that can influence the poachers’ decisions. λK+3 is the free parameter

and λ0 is the transpose vector of λ.

=

XX

old

p(at,i |o, c, (λ, w)

) log p(ot,i |at,i , ct,i , w)

t,i at,i

XX X

old

+

p(at,i , at−1,i |o, c, (λ, w)

) log p(at,i |at−1,i , ct,i , λ)

t,i at,iat−1,i

374

(8)

where a0,i ∈ ∅. In (8), the first component is obtained as a

result of decomposing w.r.t the detection probabilities of the

rangers at every (t, i) (Equation 6). In addition, the second

component results from decomposing according to the attacking probabilities at every (t, i) (Equation 5). Following

this split, for our problem the E step reduces to the computing the following two quantities:

• Total probability: p(at,i |o, c, (λ, w)old )

• 2-step probability: p(at,i , at−1,i |o, c, (λ, w)old )

which can be computed by accounting for missing observations, i.e., ot,i = −1 when rangers do not patrol at (t, i).

This can be done by introducing p(ot,i = −1|at,i ) = 1 when

the rangers’ coverage ct,i = 0.

Although we can decompose the log-likelihood, the EM

algorithm is still time-consuming due to the large number of

targets. Therefore, we use two novel ideas to speed up the algorithm: parameter separation for accelerating the convergence of EM and target abstraction for reducing the number

of targets. The details of these two ideas are explained in the

following sections.

Parameter Separation. Following the technique of multicycle expected conditional maximization (MECM) (Meng

and Rubin 1993), as shown in Equation 8, the objective function can be divided into two separate functions w.r.t attack

parameters λ and detection parameters w: Qd (w) + Qa (λ)

where the detection function Qd (w) is the first term of the

RHS in Equation 8 and the attack function Qa (λ) is the second term. Therefore, instead of learning both sets of parameters simultaneously, we decompose each iteration of EM

into two E steps and two M steps as follows:

Figure 4: Target Abstraction

features. Therefore, we expect that the parameters learned

in both the original and abstracted grid would expose similar characteristics. Hence, the model parameters estimated

based on the abstracted grid could be effectively used to derive the final values of the parameters in the original one.

In this work, we leverage the values of parameters learned

in the abstracted grid in two ways: (i) reduce the number of

restarting points (i.e., initial values of parameters) for reaching different local optimal solutions in EM; and (ii) reduce

the number of iterations in each round of EM. The idea of

target abstraction is outlined in Figure 4 wherein each black

dot corresponds to a set of parameter values at a particular

iteration given a specific restarting points. At the first stage,

we estimate the parameter values in the abstracted grid given

a large number of restarting points R, assuming that we can

run M1 EM iterations. At the end of the first stage, we obtain

R different sets of parameter values; each corresponds to a

local optimal solution of EM in the abstracted grid. Then at

the second stage, these sets of parameter values are used to

estimate the model parameters in the original grid as the following: (i) only a subset of K resulting parameter sets which

refer to the top local optimal solutions in the abstracted grid

are selected as initial values of parameters in the original

grid; and (ii) instead of running M1 EM iterations again, we

only proceed with M2 << M1 iterations in EM since we

expect that these selected parameter values are already well

learned in the abstracted grid and thus could be considered

as warm restarts in the original grid.

• E1 step: compute total probability

• M1 step: w∗ = argmaxw Qd (w, (λ, w)old ); wold =

w∗

• E2 step: compute 2-step probability

• M2 step: λ∗ = argmaxλ Qa (λ, (λ, w)old ); λold = λ∗

The convergence of the above parameter separation follows from the analysis of MECM (Meng and Rubin 1993).

Note that the detection and attack components are simpler

functions compared to the original objective since these

components only depends on the detection and attack parameters respectively. Furthermore, at each EM iteration,

the parameters are more updated based on the decomposition since the attack parameter is now updated based on

the new detection parameters from the E1/M1 steps instead

of the old detection parameters from the previous iteration.

Thus, we expect that by decomposing each iteration of EM

according to attack and detection parameters, EM would

converge more quickly.

Target Abstraction. Our second idea is to reduce the number of targets via target abstraction. By exploiting the spatial structure of the conservation area (i.e., the spatial connectivity between grid cells), we can divide the area into

a smaller number of grid cells by merging each cell in the

original grid with its neighbors to a single bigger cell. The

corresponding domain features are aggregated accordingly.

Intuitively, neighboring cells tend to have similar domain

Experiments

In our experiments, we aim at extensively assessing the prediction accuracy of our HiBRID model compared to existing

behavioral models based on real-world wildlife data. In the

following, we provide a brief description of the real-world

wildlife data used for our experiments.

Real-world Wildlife Data

In learning the poachers’ behaviors, we use the wildlife data

collected by the rangers over 12 years from 2003 to 2014 in

Queen Elizabeth national park in Uganda. This work is accomplished under the collaboration with Wildlife Conservation Society (WCS) and Uganda Wildlife Authority (UWA).

While patrolling, the park rangers record all information

such as locations (latitude/longitude), times, and observa-

375

Models

HiBRID

HiBRID-Abstract

HiBRID-NoTime

Logit

SUQR

SVM

Rainy I

0.78

0.72

0.70

0.47

0.47

0.46

Rainy II

0.73

0.74

0.70

0.59

0.58

0.48

Dry I

0.79

0.71

0.73

0.57

0.58

0.54

Dry 2

0.59

0.56

0.58

0.43

0.43

0.44

Models

HiBRID

HiBRID-Abstract

HiBRID-NoTime

Logit

SUQR

SVM

Rainy I

0.76

0.74

0.72

0.57

0.58

0.53

Rainy II

0.68

0.68

0.65

0.56

0.55

0.46

Dry I

0.75

0.73

0.75

0.57

0.58

0.51

Dry 2

0.74

0.73

0.70

0.58

0.56

0.55

Table 1: AUC: Commercial Animal

Table 2: AUC: Non-Commercial Animal

tions (e.g., signs of human illegal activities). The collected

human signs can be divided into six different groups: commercial animal (i.e., human signs which refer to poaching

commercial animals such as buffalo, hippo and elephant,

etc), non-commercial animal, fishing, encroachment, commercial plant, and non-commercial plant. In this work, we

mainly focus on two types of human illegal activities: commercial animal and non-commercial animal. The poaching data is then divided into four different groups according

to four seasons in Uganda: dry season I (December, January, and February), dry season II (Jun, July, and August),

rainy season I (March, April, and May), and rainy season

II (September, October, November). We aim at learning

behaviors of the poachers w.r.t these four seasons as motivated by the fact that the poachers’ activities usually vary

seasonally. At the end, we obtain eight different categories

of wildlife data w.r.t the two poaching types and four seasons. Furthermore, we achieve a variety of domain features

including the animal density, area slope, habitat, npp, and

locations of villages/rivers/roads based on the instructions

provided by (Critchlow et al. 2015).

The park area is divided into a 1km × 1km grid which

consists of more than 2500 grid cells. All domain features as

well as the rangers’ patrols and observations are then aggregated (or interpolated) into the grid cells. We also refine the

poaching data by removing all abnormal data points such as

the data points which indicate that the rangers conducted patrols outside the QENP park or the rangers moved too fast,

etc. Since we attempts to predict the poachers’ actions in

the future based on their activities in the past, we apply a

time window (i.e., five years) with an 1-year shift to split the

poaching data into eight different pairs of training/test sets.

For example, the oldest training/test sets correspond to fouryear data (2003–2006) for training and one-year (2007) data

for testing. In addition, the latest training/test sets refer to

the four years (2010–2013) and one year (2014) of data for

training and testing respectively. The prediction accuracy of

each category (according to seasons and poaching types) is

averaged over these eight different training/test sets.

and 2. Overall, Tables 1 and 2 show that HiBRID provides

the best prediction accuracy, demonstrating the significant

advance of incorporating the observation uncertainty and the

temporal effects into predicting the poachers’ behaviors.

Summary

In summary, learning poachers’ behaviors or anticipating

where the poachers often go for poaching is critical for the

rangers to generate effective patrols. In this work, we propose a novel hierarchical behavioral model, HiBRID, to predict the poachers’ behaviors wherein the rangers’ imperfect

detection of poaching signs is taken into account. Furthermore, our HiBRID model incorporates the temporal effect

on the poachers’ behaviors. The model also does not require

a known number of attackers. Moreover, we provide two

new heuristics: parameter separation and target abstraction to reduce the computational complexity in learning the

model parameters. Finally, we use the real-world data collected in Queen Elizabeth National Park (QENP) in Uganda

over 12 years to evaluate the prediction accuracy of our new

model. The experimental results demonstrate the superiority

of our model compared to other existing models.

References

Basilico, N.; Gatti, N.; and Amigoni, F. 2009. Leaderfollower strategies for robotic patrolling in environments

with arbitrary topologies. In AAMAS.

Brown, M.; Haskell, W. B.; and Tambe, M. 2014. Addressing scalability and robustness in security games with

multiple boundedly rational adversaries. In GameSec.

Critchlow, R.; Plumptre, A.; Driciru, M.; Rwetsiba, A.;

Stokes, E.; Tumwesigye, C.; Wanyama, F.; and Beale, C.

2015. Spatiotemporal trends of illegal activities from rangercollected data in a ugandan national park. Conservation Biology.

Fang, F.; Stone, P.; and Tambe, M. 2015. When security

games go green: Designing defender strategies to prevent

poaching and illegal fishing. In IJCAI.

Korzhyk, D.; Conitzer, V.; and Parr, R. 2010. Complexity of computing optimal stackelberg strategies in security

resource allocation games. In AAAI.

Letchford, J., and Vorobeychik, Y. 2011. Computing

randomized security strategies in networked domains. In

AARM.

MacKenzie, D. I.; Nichols, J. D.; Lachman, G. B.; Droege,

S.; Andrew Royle, J.; and Langtimm, C. A. 2002. Esti-

Prediction Accuracy

In our experiments, we evaluate six different models: HiBRID, HiBRID-Abstract (HiBRID with target abstraction),

HiBRID-NoTime (HiBRID without considering the temporal effect on the poachers’ behaviors), Logit (Logistic Regression), SUQR, and SVM (Support Vector Machine). We

use AUC (Area Under the Curve) to measure the prediction

accuracy of these models. The results are shown in Tables 1

376

mating site occupancy rates when detection probabilities are

less than one. Ecology 83(8):2248–2255.

McFadden, D. 1972. Conditional logit analysis of qualitative choice behavior. Technical report.

McKelvey, R., and Palfrey, T. 1995. Quantal response equilibria for normal form games. Games and economic behavior 10(1):6–38.

Meng, X.-L., and Rubin, D. B. 1993. Maximum likelihood estimation via the ecm algorithm: A general framework. Biometrika 80(2):267–278.

Montesh, M. 2013. Rhino poaching: A new form of organised crime1. Technical report, University of South Africa.

Nguyen, T. H.; Yang, R.; Azaria, A.; Kraus, S.; and Tambe,

M. 2013. Analyzing the effectiveness of adversary modeling

in security games. In AAAI.

Secretariat, G. 2013. Global tiger recovery program implementation plan: 2013-14. Report, The World Bank, Washington, DC.

Tambe, M. 2011. Security and Game Theory: Algorithms,

Deployed Systems, Lessons Learned. Cambridge University

Press.

Yang, R.; Ford, B.; Tambe, M.; and Lemieux, A. 2014.

Adaptive resource allocation for wildlife protection against

illegal poachers. In AAMAS.

377