EBPU Masterclass 4: 7 Steps to Quality Improvement
Miranda Wolpert


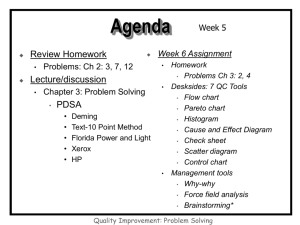

Session objectives
By the end of this 2-part masterclass, you will know how to:
• Identify opportunities for improvement
• Collect relevant information
• Use information for improvement
• Implement and evaluate change

Setting the scene
• Questions from YOU…??
• Quality and Quality Improvement
• Improvement vs Accountability vs Research
• Key questions to focus the process

Levels of transformation
Child and family; Individual Practitioner; Team; Service; National Aggregate

Routes to transformation
• Quality Improvement (QI): Change
• Research (R): Understanding

[Figure: Plan, Do, Study, Act cycle, labelled QI and R]

What is Quality?
• Care that is
– Safe
– Timely
– Effective
– Efficient
– Equitable
– Person centred
• “Patients, families and clinicians would be better off if we professionals recalibrated our work such that we behaved with patients and families not as hosts in the care system but as guests in their lives” Don Berwick

What is Quality Improvement?
• Making changes that will lead you in a new desired direction, for example:
– Better outcomes for children and young people
– Children and young people given more information about treatments so that they make informed choices and become active partners in the decision-making process
– Children and young people feel listened to
• All improvement requires change, but not all change leads to improvement

Key Elements Required for Improvement
• Will to do what it takes to change to a new system (way of doing things)
• Ideas on which to base the design of the new system
• Execution of the ideas

Practice and Research

| Activity (from practice to research) | Generalisability |
| --- | --- |
| Individual practitioner reflection | Individual case |
| Supervision, regular monitoring / evaluation of individual practice, progress | Individual practitioner |
| Monitoring / evaluation of team practice and progress | Individual team (possibly other teams matched on a number of features) |
| Local evaluation, monitoring / evaluating a service’s outcomes | Local Authority or PCT (possibly other LAs / PCTs matched on a number of features) |
| Local research aimed at national audience | Local Authority or PCT, possibly other areas nationally while acknowledging caveats |
| National / international research aimed at national / international audience | Nationally / internationally |

| | Practice | | Research |
| --- | --- | --- | --- |
| Timescales | Ongoing, iterative process | Can be either | Discrete research project |
| Requires ethical approval? | Unlikely | Sometimes | Almost always |
| Uses for the data | Inform practice | Both | Add to the general evidence base |

The Three Faces of Performance Measurement

| Aspect | Improvement | Accountability | Research |
| --- | --- | --- | --- |
| Aim | Improvement of care | Comparison, choice, reassurance, spur for change | New knowledge |
| Methods: Test Observability | Test observable | No test, evaluate current performance | Test blinded or controlled |
| Methods: Bias | Accept consistent bias | Measure and adjust to reduce bias | Design to eliminate bias |
| Methods: Sample Size | “Just enough” data, small sequential samples | Obtain 100% of available, relevant data | “Just in case” data |
| Methods: Flexibility of Hypothesis | Hypothesis flexible, changes as learning takes place | No hypothesis | Fixed hypothesis |
| Methods: Testing Strategy | Sequential tests | No tests | One large test |
| Methods: Determining if a Change is an Improvement | Run charts or Shewhart control charts | No change focus | Hypothesis, statistical tests (t-test, F-test, chi square), p-values |
| Methods: Confidentiality of the Data | Data used only by those involved with improvement | Data available for public consumption and review | Research subjects’ identities protected |

R Lloyd, Institute for Healthcare Improvement

Five fundamental principles of improvement
1. Knowing why you need to improve
2. Having a feedback mechanism to tell you if the improvement is happening
3. Developing an effective change that will result in improvement
4. Testing a change before attempting to implement
5. Knowing when and how to make the change permanent

…The Model for Improvement is a tried and tested framework for applying these five principles

Key questions to focus the process
• What are we trying to accomplish?
• How will we know that a change is an improvement?
• What changes can we make that will result in improvement?

The seven steps to quality improvement….
1. Form the Team
2. Set Aims
3. Establish Measures
4. Select Changes
5. Test Changes
6. Implement Changes
7. Spread Changes

Part 1 – Getting started with QI
1. Form the team
2. Set aims that are measurable, time-specific, and apply to a defined population
3. Establish measures to determine if a specific change leads to improvement
4. Select changes that are most likely to result in improvement

Step 1 Forming the Team
• Including the right people is crucial
– Review the aim (what you are trying to accomplish)
– Be sure the team includes people familiar with all the different processes that relate to the aim
• Effective teams include members representing three different kinds of expertise:
– Senior leader (has ‘clout’)
– Topic expertise (NB: Changing Relationships via, for example, Shared Decision Making, relies on at least two sources..)
– Day-to-day leader

Activity 1 Forming the team
• Have you got the right people?
• Who else do you need?
• Think about people who operate in different parts of the system, offer different kinds of expertise (including clinical, specialist, project management etc.), and can fulfil the range of roles needed to design, deliver and evaluate the project.

Step 2 Set aims
• Improvement requires setting aims: clear enough to guide the effort, grand enough to excite the participants
• Aim should be time-specific and measurable: how much, by when
– e.g. 95% of children attending Dr X’s clinic will “feel listened to” by Dec 2011
• Define the specific group of children/young people/families affected
• Team must agree the aim
• Allocating the people and resources necessary to accomplish the aim is crucial

Activity 2 Setting Aims (15 mins)
What are we trying to accomplish?
Aims need to be measurable, time-specific, and apply to a defined population.
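
One way to check that a draft aim is measurable, time-specific and tied to a defined population is to write it down as a small record. The Python sketch below is illustrative only: the `Aim` class, its field names and the survey counts are assumptions and not part of the masterclass. The example values are taken from the Dr X's clinic aim in Step 2.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Aim:
    """Hypothetical record of an improvement aim (illustrative names only)."""
    population: str                  # the defined group the aim applies to
    measure: str                     # what will be measured
    target_percent: Optional[float]  # "how much"
    deadline: Optional[date]         # "by when"

    def missing_elements(self) -> List[str]:
        """List which of the three required properties are still missing."""
        missing = []
        if not self.population.strip():
            missing.append("defined population")
        if not self.measure.strip() or self.target_percent is None:
            missing.append("measurable target")
        if self.deadline is None:
            missing.append("time-specific deadline")
        return missing

    def is_met(self, numerator: int, denominator: int) -> bool:
        """Compare an observed percentage against the target."""
        if self.target_percent is None or denominator <= 0:
            raise ValueError("need a target and a positive denominator")
        return 100.0 * numerator / denominator >= self.target_percent


# The example aim from Step 2; "Dec 2011" is taken as the end of that month.
aim = Aim(
    population="children attending Dr X's clinic",
    measure="feel listened to",
    target_percent=95.0,
    deadline=date(2011, 12, 31),
)

# A draft aim with no target and no deadline, to show what the check reports.
vague_aim = Aim(population="", measure="better care",
                target_percent=None, deadline=None)

print(aim.missing_elements())        # nothing missing for the Step 2 example
print(vague_aim.missing_elements())  # all three properties are missing
```

With invented survey counts, `aim.is_met(19, 20)` compares 95% against the 95% target, while `aim.is_met(18, 20)` compares 90% against it.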
Step 3 Establish Measures…
• Critical for knowing whether or not changes introduced are leading to improvement
• Measurement for learning, not for judgement or comparison
• Three types of measures:
– Outcome measures
– Process measures
– Balancing measures
• Need baseline measures (where you are starting from)

Three Types of Measures
• Outcome Measure(s): Directly related to the aim of the project (the following are examples only)
– % of young people who feel listened to
– % of young people with ADHD who have a better outcome
– % of young people with depression who are clear about the treatment options available
• Process Measures: Measuring the activities/processes you have introduced to achieve your aim; measures of whether an activity has been accomplished
– % of young people who have a 1:1 weekly meeting with their key worker
– % of young people with ADHD who are on medicine X
– % of young people with depression who were provided with the DVD about depression
• Balancing Measures: You don’t want performance in other important areas to get worse whilst concentrating on the project; you want to ensure they are maintained or even improved
– Waiting time for Dr X’s clinic?

Run chart (graph)
• Displays measures so you can see what is happening
• Lets you know if change is resulting in improvement
• Key changes made can be noted (annotated) on the chart to make it more meaningful
• Lets you know if improvements made are maintained over time

[Figure: example run chart, vertical axis 0 to 7, horizontal axis Jan-06 to Sep-07]

How to construct a run chart
1. State the question the run chart will answer and obtain the data necessary to answer the question
2. Develop the horizontal scale (usually time)
3. Develop the vertical scale
4. Label the graph
5. Calculate and place a median of the data on the run chart
– Median required when applying some of the rules used to interpret a run chart
– Place median only after a minimum of 10 data points are available
– Median is the number in the middle of the data set when the data are ordered from the highest to the lowest
6. Add additional information to the chart
– Add a goal or target line if appropriate
– Annotate unusual events, changes tested or other pertinent information on the chart at an appropriate location

Example of Annotated Run Chart: Improved Access

[Figure: annotated run chart, vertical axis 0 to 45, horizontal axis May-02 to Dec-02. Annotations: Began backlog reduction; Reduced Appt types; Provider Back from Vacation; Cross Trained Staff; Protocols; Protocols Tested]

Family of Measures for Depression Population

[Figure: three run charts, each with a goal line, horizontal axis Nov-99 to Feb-01. Panels: Percent of Patients with Structured Diagnostic Assessment in Record (0 to 100 percent); Percent of Patients with Follow-up Structured Assessment at 4-8 Weeks (0 to 100 percent); Average Change in MHI5 for Patients Treated After 12 weeks (0 to 25)]

Measurement Guidelines
• Need a balanced (outcome, process and balancing) set of 3 to 8 measures reported each month to assure that the system is improved
• Focus on the vital few
• Very carefully define each of the measures (numerator and denominator)
– examples of outcome measures: % of patients who felt they were fully involved as active partners in choosing an appropriate treatment; % of patients who have full record access
– examples of process measures: % of patients provided with the relevant decision aid; % of patients with an agreed documented course of action; % of patients who received the chosen treatment
• Begin reporting your measures immediately and continue reporting monthly
– Develop run charts (graphs) to display your measures each month
• Report an appropriate sample size (usually the denominator) with measures each month: a random sample of 20, or all (if fewer than 20), is usual
• Integrate measurement into daily routine
• Annotate graph (run chart) with changes
• Make run charts visible: this provides important feedback

Measurement Assessment
Content Area: ______________________________________________

| Measure | Is this measure logical? (Yes/No) | Are there alternatives to the current measure? If so, what are they? | Are the operational definitions clear? | Are the data collection procedures reasonable? | What challenges exist to collecting this measure? |
| --- | --- | --- | --- | --- | --- |
| | | | | | |

Activity 3 Establishing measures (15 mins)
Begin to consider:
– Outcome measure(s) (relates directly to your aim)
– Process measures (measures of whether an activity/process has been accomplished)
– Balancing measure (measure of something that might be adversely affected by the improvement work)
Focus on the vital few!

Step 4 Select Changes
• All improvement requires change (although all change does not lead to improvement!!)
• Evidence-based changes/recommended guidelines for improvement may exist for your topic….
• In circumstances where evidence-based changes don’t exist, one can use hunches, theories and ideas, and test them with small rapid cycle testing

How to select or develop a change….
• Understanding of processes and systems of work
– e.g. process mapping of referral pathway or clinics
• Creative thinking: challenging boundaries, rearranging the order of steps, visualising the ideal etc
– e.g. what would an ideal clinic look like from a YP perspective… remove the constraints!
• Adapting known good ideas: learn from the best known practice, evidence-based changes etc; includes “bundle” concept
– Bundle concept = theory that when several evidence-based interventions/guidelines are grouped together and applied in a single ‘protocol’, it will improve patient outcome
• A knowledge of generic change concepts is useful (these plus vital subject matter knowledge can be used to develop changes that result in improvement)

Activity 4 Plan, Do, Study, Act:
Begin to consider what changes are likely to result in improvement (15 mins)

Part 2 – Making change happen
5. Test the changes before implementing
6. Implement changes
7. Spread changes

Step 5 Test Changes
• PDSA (Plan, Do, Study, Act): shorthand for testing a change in a real work setting
• Small rapid scale testing
– Minimises resistance
– Indicates whether proposed change will work in environment in question
– Provides opportunity to refine change as necessary before implementing on a broader scale
– Rapid cycle starts with e.g. one young person, one doctor, one nurse, one parent, one clinic… moving to 1, 3, 5, all!
– These changes happen in hours and days, not weeks and months

How to test a change….
• Try the change on a temporary basis and learn about its potential impact
• Adapt the idea/change to a local situation
• Small scale initially; include differing conditions as scale is increased
• Explicitly plan the test, including the collection of data

How to support testing changes with data
• Turn observations into data
• Collect and display data
• Learn from data
• Understand variation (using run charts…)
– Normal variation/Special cause

Plan, Do, Study, Act
• Plan: What exactly are we going to do?
• Do: When and how did we do it?
• Study: What were the results?
• Act: What changes are we going to make based on our findings?

The Model for Improvement
• Set aims that are measurable, time-specific, and apply to a defined population
• Establish measures to determine if a specific change leads to improvement
• Select changes most likely to result in improvement
• Test the changes before implementing
T. Nolan et al. www.ihi.org

The Improvement Model
• “This model is not magic, but it is probably the most single useful framework I have encountered in twenty years of my own work in quality improvement……” Don Berwick, President IHI

MODEL FOR IMPROVEMENT
Use a PDSA form to organize, standardize and document your tests!
CYCLE:____ DATE:____
Objective for this PDSA Cycle
PLAN:
QUESTIONS:
PREDICTIONS:
PLAN FOR CHANGE OR TEST: WHO, WHAT, WHEN, WHERE
PLAN FOR COLLECTION OF DATA: WHO, WHAT, WHEN, WHERE
DO: CARRY OUT THE CHANGE OR TEST; COLLECT DATA AND BEGIN ANALYSIS.
STUDY: COMPLETE ANALYSIS OF DATA; SUMMARIZE WHAT WAS LEARNED.
ACT: ARE WE READY TO MAKE A CHANGE? PLAN FOR THE NEXT CYCLE.

[Figure: Overall Aim linked to Change Idea 1, Change Idea 2, Change Idea 3, Change Idea 4, Change Idea 5, Change Idea 6 and other change ideas. Caption: Develop Changes based on the Change Ideas]

Activity 5 Plan, Do, Study, Act:
2. Select one change and plan a first test of the change (PDSA cycle) on the form provided (25 mins)

Step 6 Implement Changes
• Implementing change means rolling it out on a broader scale or making it a permanent part of how things are done (e.g. entire pilot population or entire unit)
• After small scale testing, learning and refining through several PDSA cycles, a change is ready for implementation
....more on this next time!

Step 7 Spread Changes
• Spreading change involves taking a successful implementation from a pilot unit/population and replicating it in other parts of the organisation or in other organisations, i.e. change is now adopted at multiple locations
....more on this next time!

The Sequence of Improvement

[Figure: the sequence of improvement, shown with Plan, Do, Study, Act cycles. Labels: Theory and Prediction; Developing a change; Testing a change; Test under a variety of conditions; Implementing a change; Make part of routine operations; Spreading a change to other locations]

Real Time Audit
• Usefulness of real time auditing has been repeatedly demonstrated in industry
• Provides real time process measures where changes for improvement have been introduced (e.g. next 5 patients, 5 charts per day, 5 charts per week etc as appropriate)

Traditional v Real time Process Audit
• Traditional Audit
– Long time scales reflect historical practice
– Feedback often occurs after many relevant staff have moved on
– Done for purposes of formal evaluation
• Real time Audit
– Reflects current practice
– Provides the quick timely feedback necessary for focused improvement
– Done to engage staff directly in continuous improvement efforts

Tips for success…and ultimate sustainability
• Leadership Commitment
– Regular attention to improvement work
– Remove barriers to progress
– Recognise and celebrate success
• Make it difficult to revert to old ways
• Culture of improvement and deeply engaged staff
– Measurement for improvement, not judgement
– Begin with early adopters
– Be clear about the benefits to stakeholders
• Pay attention to ongoing training and education needs
• Measurement over time (monthly)
– Display data
– Note performance against aim
• Remember sequence of improvement, i.e. test, implement, spread
• Never too early to plan for spread, but before carrying out the plan certain things should be in place, including
– Existence of successful sites that are the source of the specific ideas to be shared
– Evidence that the ideas result in the desired outcomes

Key References
• Langley, G. et al. The Improvement Guide. Jossey-Bass Publishers, San Francisco, 2009 (2nd Edition).
• Solberg, L. et al. “The Three Faces of Performance Measurement: Improvement, Accountability and Research.” Joint Commission Journal on Quality Improvement 23, no. 3 (1997): 135-147
• Institute for Healthcare Improvement: www.ihi.org
• http://www.ihi.org/IHI/Topics/Improvement/ImprovementMethods/HowToImprove/
• http://www.institute.nhs.uk/sustainability_model/general/welcome_to_sustainability.html

With thanks to Noeleen Devaney, Quality Improvement Advisor at the Health Foundation, for sharing her expertise and providing guidance for this Masterclass
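
Worked illustration: the run chart guidance in Step 3 (define each measure by numerator and denominator, report a monthly sample of about 20, and place a median only once at least 10 data points are available) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the monthly counts are invented for illustration, and the function names are hypothetical rather than taken from the masterclass.

```python
from statistics import median
from typing import List, Optional

MIN_POINTS_FOR_MEDIAN = 10  # the slides place a median only after 10 data points


def percent(numerator: int, denominator: int) -> float:
    """Express a measure as a percentage of the sample (the denominator)."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * numerator / denominator


def run_chart_median(values: List[float]) -> Optional[float]:
    """Return the median line for the chart, or None if there are too few points."""
    if len(values) < MIN_POINTS_FOR_MEDIAN:
        return None
    return median(values)


def position_vs_median(values: List[float], centre: float) -> List[str]:
    """Label each point as above, below or on the median line."""
    labels = []
    for value in values:
        if value > centre:
            labels.append("above")
        elif value < centre:
            labels.append("below")
        else:
            labels.append("on")
    return labels


# Invented data: young people (out of a monthly sample of 20) who felt listened to.
SAMPLE_SIZE = 20
listened_to = [8, 9, 7, 10, 9, 11, 12, 14, 13, 15, 16, 17]

monthly_percent = [percent(n, SAMPLE_SIZE) for n in listened_to]
centre = run_chart_median(monthly_percent)

if centre is not None:
    labels = position_vs_median(monthly_percent, centre)
    for month, (value, label) in enumerate(zip(monthly_percent, labels), start=1):
        print(f"month {month:2d}: {value:5.1f}%  ({label} median {centre:.1f}%)")
```

Returning `None` for fewer than 10 points keeps the chart honest about when a median line is meaningful. Plotting, goal lines and annotations are left out of this sketch and would be added with whatever charting tool the team already uses.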