Social-Psychological Harmonic Oscillators in the Self-Regulation of Organizations and Systems:

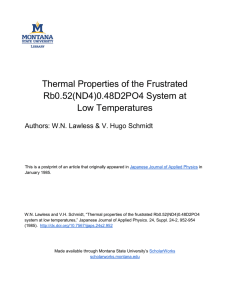

Quantum Informatics for Cognitive, Social, and Semantic Processes: Papers from the AAAI Fall Symposium (FS-10-08)

Social-Psychological Harmonic Oscillators in the Self-Regulation of Organizations and Systems: The Physics of Conservation of Information (COI)

William F. Lawless¹ and Donald A. Sofge²
¹ Paine College, 1235 15th Street, Augusta, Georgia, USA, wlawless AT paine.edu
² Naval Research Laboratory, 4555 Overlook Avenue SW, Washington, DC, donald.sofge AT nrl.navy.mil

understand the intelligent behavior of humans, animals and machines (Ying, 2010). Our prior research (Lawless et al., 2010b) has established that cooperation is required for organizational effectiveness, especially as organizations trade growth in size for reduced instability to gain market control, but the tradeoff means that increased cooperation reduces an organization's ability to innovate. However, in a system (Lawless et al., 2010a), such as the European Union, Haiti, or China, forced cooperation increases corruption and reduces effectiveness in responding to emergencies (e.g., earthquakes). From other research (Ostrom, 2009), we know that self-regulated systems are more effective than centrally controlled or privately owned systems, except when self-regulation compromises social welfare, such as by corrupting the practice of science (Lawless et al., 2005). We also know that if an algorithm exists to run an organization perfectly (Conant & Ashby, 1970), the organization emits zero information (Conant, 1976), making a perfectly run organization appear dark not only to outsiders but also to itself (Lawless et al., 2010b), which accounts for the negligible associations between managers and the performance of their firms (Bloom et al., 2007).
Inversely, the turmoil in a competitive society produces more “lightness” from additional information than darkness from its absence, a paradox involving tradeoffs underlying the physics of the conservation of information that must be resolved for systems composed of humans, machines and robots to be able to computationally regulate themselves. Presently, the conventional model of interdependence is provided by game theory, which is ineffective for several reasons that include its static nature even when used in repeated or "dynamic" games (Lawless et al., 2007); its inability to predict (Sanfey, 2007); and its unsatisfactory modeling of interdependence problems (Schweitzer et al., 2009). In our search for a better model of interdependence, we noted that Feynman (1996) postulated that conventional models of entanglement were inefficient, an observation that we had also made about existing theories of interdependence (Lawless et al., 2007).

Abstract

Using computational intelligence, our ultimate goal is to self-regulate systems composed of humans, machines and robots. Self-regulation is important for the control of mixed organizations and systems. We provide an overview of self-regulation for organizations and systems, characterized by our solution of the tradeoffs between Fourier pairs of Gaussian distributions that affect decision-making differently, together with a mathematical outline of our solution and a sketch of future plans.

Introduction

Tradeoffs invoke a differential uncertainty principle for organizations and systems among four interrelated pairs of bistable variables: the possible location of a planned event and its spatial frequency; associated energy and time expenditures; action and self-reports of that action; and plans and their execution (Lawless et al., 2010b). We have found that as certainty increases in one factor socially interdependent with another, uncertainty increases in the other.
The physics of these tradeoffs has different impacts depending on whether the focus is on an organization or on a system of organizations. In this paper we lay out a conceptual path to the solution we have found for the tradeoffs in self-regulating organizations and systems. We have previously established theoretically that computational intelligence can exist to control organizations, systems, and systems of systems (Lawless et al., 2010b). Less well developed are the mathematical proofs to support control, which we briefly sketch in this paper. It is an important problem for several reasons. Success will not only help to understand organizations and systems, but also to control organizations and systems with computational intelligence. This fits with the two goals of Artificial Intelligence: to build intelligent machines; and to

Copyright © 2010, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Further, Rieffel (2008) concluded that quantum information theory has benefited quantum and classical theories, especially entanglement and control (in the social context, we use “classical” to mean traditional). Working with Rieffel's theme, similarities exist between social interdependence and quantum entanglement. Both interdependence and entanglement are fragile, quickly breaking down when interacting with an external environment (e.g., quantum "decoherence" and social interference). Unknown states for both are impossible to copy (e.g., quantum's "no cloning theorem"; and a movie of human behavior requires a long development: the 2009 movie Avatar took 16 years to finish). Both produce powerful computational effects (e.g., entanglement reduces computations from exponential to polynomial time; and interdependence is central to social problem solving on juries, scientific journals, entertainment, etc.). Both defy classical descriptions; e.g., "intuition is dangerous in quantum mechanics." (Gershenfeld, 2000, p.
253), while classical interpretations of interdependent social states commonly produce two incommensurable or irreconcilable stories in every social situation, the touchstone of juries, trumpeted by Aeschylus in his play Oresteia; in modern jurisprudence, incommensurability in the competing views between a prosecutor and defense attorney is required for justice (Freer & Purdue, 1996). That interdependence produces incommensurable stories is not only the foundation of game theory, but also of the first mathematical solution to the Prisoner's Dilemma Game, known today after Nash (1951), its discoverer, as Nash equilibria (NE). However, Axelrod (1984) characterized NE solutions as pernicious to social welfare, recommending that with evolution, society should replace individual "self-interests" with cooperation. Simon (1990), too, construed cooperation as altruism. Simon concluded that altruism and bounded rationality were acceptable to Darwinian models of evolution. But from our perspective based on organizational and system tradeoffs, the replacement of self-interest with altruism and bounded rationality reduces the likelihood of innovation and evolution in human affairs (Lawless et al., 2010b). We believe that our approach with the conservation of information (more or less variance in the tradeoffs by managers choosing among interdependent Gaussian distributions) is high-risk research. Deriving a theory of complementarity to produce uncertainty in tradeoffs among conjugate variables, as suggested by Bohr for action and observation, was considered by Von Neumann & Morgenstern (1953, p. 148), the developers of game theory, to be "inconceivable". Nonetheless, guided by Bohr, our discovery transforms game theory (Lawless et al., 2010b). The mathematics we envision eventually will provide a system, and the organizations within it, the ability to self-regulate using computational intelligence.
But for this paper, we focus on self-regulation in the physics of the tradeoffs between cooperation and competition. To develop interdependence theory, we adapted Cohen's (1995) interpretation of the classical uncertainty principle for signal processing.

Self-Regulation

Our research project began with trying to understand the mismanagement of U.S. military nuclear wastes (Lawless, 1985). Lilienthal (1963), the first leader of the U.S. Atomic Energy Commission (AEC), recognized that AEC's self-regulation would compromise the practices of its scientists. AEC management determined the priorities for the scientists it chose to fund, independent of merit, allowing AEC's (and ERDA's and DOE's) management to manipulate its scientists, control the data that was collected, and determine the results that were published. In contrast, and with the first ever prediction with the physics of the conservation of information (Lawless et al., 2005), competition driven by independent scientific peer review has had a positive impact on the practice of science by DOE scientists by reducing the influence of DOE management on its scientists. But all organizations prefer self-regulation, including commercial ones. The U.S. Federal Reserve Board, the European Union, and the Roman Catholic Church are examples. But in these cases, self-regulation depends on each organization's own determination of the "transparency" needed to meet its objectives. Can self-regulation ever work? Not for commercial or private enterprises according to Hardin (1968), who believed that a common resource had to be regulated by a central authority with enforced cooperation or privatized to sustain the resource. However, Ostrom (2009), a Nobel laureate in economics for 2009, demonstrated that a resource commons can be successfully self-regulated by an association of users. She challenged the conventional wisdom that property common to multiple users, because its management conflicts with individual self-interests, had to be poorly managed.
In these cases failure means the likely loss of a resource (e.g., salmon in the Pacific Northwest). And yet Ostrom found that outcomes are often better than predicted by Hardin. She found that successful resource users frequently managed the resource through self-regulation. Ostrom's characterizations of successful community associations are: self-governance; clearly defined group boundaries; rules matched to local needs; individual responsibilities proportional to benefits; users participating in rule modifications; community rights respected by external authorities; and a graduated system of sanctions used by community members with low-cost conflict resolution to self-regulate appropriate resource consumption. From our perspective, the key characteristic is the establishment and maintenance of interdependence between resources and users who share the benefits and costs (Lawless et al., 2009).

scientists). Adopting a system to reduce this uncertainty was the first recommendation, leading to the eIRB system now installed at E-MDRC (an IRB is an Institutional Review Board required to conduct research on humans and animals; eIRB is an electronic or web-based IRB). But in overcoming fragmentation by enforcing cooperation across its system, the MDRCs must not adversely impact their practices of science. Specific to DOE or the military MDRCs, top-down (minority) control is desired to optimize its operations in a way that fulfills its mission in the field. However, minority control encumbers the practice of science, which depends on NE (e.g., challenges to prevailing scientific theories). In organizations, reducing the existence of internal centers of conflict (NE) is often the goal of management. But suppressing NE makes the practice of science inefficient or ineffective, creating a paradox (exceptions exist, such as IBM).
Thus, for optimal performance, scientists conducting research within an organization should be governed by its chain of command (e.g., achieving its mission), whereas to produce top-notch scientific research in a system, scientists must be governed by internal and external NE, a paradox. Since DOE and the MDRCs have the specific missions of being knowledge generators, this paradox should not be resolved. Instead, construing the paradox as a source of tension helps to conceptualize the dual role of system scientists, where individual tension can be exploited both to power mission performance and to change its vision in a way that revitalizes the organization and the system over time.

Theory

Collecting information from well-defined networks or organizations for social network analysis (SNA) is relatively straightforward. But even when the information is readily available, the signals collected from social networks have not led to valid predictions about their actions or stability (NRC, 2009; Schweitzer et al., 2009). For "Dark" social networks (DSNs), composed of illicit drug gangs or terrorists (Carley, 2006), uncovering information to compute an SNA is orders of magnitude more difficult. This failure with SNAs, game theory, and organizations in general (Pfeffer & Fong, 2005) has led to a wide request for new social theory to better understand the effects of interdependence in social networks and organizations (Jasny et al., 2009; NRC, 2009). For example, Barabási (2009) concluded that room needs to be made for a new theory "to understand the behavior of the systems … [and] the dynamics of the processes ... [to] form the foundation of a theory of complexity." (p. 413)

COI predicts that organizations with unified commands are more stable than those under dual or shared commands (i.e., fragmented; Lawless et al.,
2007); larger organizations are more stable than smaller ones; and that, unlike systems, the best run organizations have a clear mission under a central chain of command but with minimum bureaucracy. These findings buttress the theory first proposed by Conant (1976) that optimal organizational performance requires effective communication and lines of communication (channels), effective coordination (management) with minimum blocking processes (bureaucracy), and minimal generation of internal noise. In addition to COI, we have proposed that two complementary processes operate in an organization or a system. First, restorative forces act via negative feedback to meet a business model or worldview (e.g., missions, baselines, or standards such as "best business practices"). Second, inductive forces act via positive feedback to progressively change the current operational procedures (e.g., vision; business re-engineering; business transformation). Based on field results, we postulated that system fragmentation characterizes the existence of non-cohesive work cultures and practices. For an organization, fragmentation impedes the execution of an organization's business model (Lawless et al., 2007). In our case study of the military's system of Medical Department Research Centers (MDRC is an acronym for a fictitious name that represents the system in order to keep the real organizations anonymous; by extension, E-MDRC is one of the seven sites in the system), designed to teach research methods to its physicians, we found that by increasing inefficiency, fragmentation increased the difficulty of executing its mission, or even of knowing the effectiveness of the system at any point with a desired degree of confidence.
Fragmentation was reflected by an increase in the uncertainty in the knowledge about MDRC's publication rates, publication quality (scientific impacts), and scientific peer status (the comparative quality of its

Mathematical Model

We have postulated that information about an organization's market size forms a Gaussian distribution coupled to its Fourier transform as a multiplicative Fourier pair that ideally equals or exceeds a constant, producing COI (Rieffel, 2007). We have identified four sets of Fourier pairs that describe interdependence in the tradeoffs for organizations or systems. First, larger organizations are more stable (lower stock market volatility) or "darker" than smaller organizations, motivating organizations to grow in size with mergers and acquisitions (Andrade et al., 2001). Second, even for well-known organizations, the more skilled they become, the "darker" become the signals of their presence to observers and to themselves (Landers & Pirozzolo, 1990; Lawless et al., 2000), in effect, hiding their presence; in contrast, system effectiveness requires information from NE. Third, as certainty in one factor grows, uncertainty in its Fourier paired cofactor grows, creating tradeoffs between conjugate variables (i.e., an exactness in two conjugate variables cannot occur simultaneously). Illustrating this for self-reported and interview data, the meta-analysis by Baumeister and colleagues (2005) found that self-esteem, often studied in psychology, was negligibly correlated to academic and work performance. Fourth, the more focused an organization's operational center-of-gravity, the more able it is to replicate its business model or plan geospatially (Lawless et al., 2009). By studying the fluctuations an organization or system daily experiences across these four pairs of interdependent cofactors, COI suggests that it is possible to reverse engineer them from the information they produce in response to perturbations.
But to complete our theory, we need in addition a mechanism to measure the effects of an NE on social welfare across a system or between two organizations from a methodologically social perspective. For that we use Lotka-Volterra type equations to produce limit cycles (May, 1973; see Figure 1).

Conservation of Information, NEs, and SPHOs

A Hilbert Space (HS) is an abstract space defined so that vector positions and angles permit distance, reflection, rotation and geospatial measurements, or subspaces with local convergences where these measurements can occur. That would allow real-time determinations of situated, shared situational awareness in localizing the center of gravity for a target organization or system, $\sigma_{x\text{-COG}}$, to represent the standard deviation in the shared uncertainty, and $\sigma_k$ to similarly represent the standard deviation in spatial frequencies of its patterns across physical space (e.g., the mapping of social-psychological or organizational spaces to physical networks). As an NE, it establishes an "oscillation" between orthogonal socio-psycho-geospatial operators A and B such that

$$[A,B] = AB - BA = iC \neq [B,A] \qquad (1)$$

[Figure 1 chart: "L-V Notional Chart"; data series: Series1 and Series2; vertical axis: N, in population units]

This type of oscillation defines a social-psychological decision space embedded within an organization or system. It is called an "oscillator" because decision-making occurs during sequential or turn-taking sessions that "rotate" attention for the topic under discussion in the minds of listeners or deciders first in one valence direction (e.g., "endorsing" a proposition) followed by the opposite (e.g., "rejecting" a proposition) to produce an oscillation or "rocking" back and forth process for a social-psychological harmonic oscillator (SPHO), like the merger and acquisition (M&A) negotiations between a hostile predator organization and its prey target.
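The Lotka-Volterra type oscillation invoked above can be sketched numerically. The following is a minimal illustration only, assuming the classic two-population predator-prey form with arbitrary parameters (the paper does not state its equations or parameter values); the function names `lotka_volterra_step`, `invariant` and `simulate` are hypothetical. Under this assumed form the orbit is closed and "frictionless": the quantity returned by `invariant` stays constant along the trajectory, so N1 and N2 rock back and forth indefinitely.

```python
import math


def lotka_volterra_step(n1, n2, dt, a=1.0, b=0.5, c=0.5, d=1.0):
    """Advance (N1, N2) by one RK4 step of the assumed classic model:
    dN1/dt = N1 * (a - b * N2),  dN2/dt = N2 * (c * N1 - d).
    The parameters a, b, c, d are arbitrary illustrative values."""
    def f(x, y):
        return x * (a - b * y), y * (c * x - d)

    k1x, k1y = f(n1, n2)
    k2x, k2y = f(n1 + 0.5 * dt * k1x, n2 + 0.5 * dt * k1y)
    k3x, k3y = f(n1 + 0.5 * dt * k2x, n2 + 0.5 * dt * k2y)
    k4x, k4y = f(n1 + dt * k3x, n2 + dt * k3y)
    return (n1 + dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6,
            n2 + dt * (k1y + 2 * k2y + 2 * k3y + k4y) / 6)


def invariant(n1, n2, a=1.0, b=0.5, c=0.5, d=1.0):
    """Quantity conserved along exact orbits of the model above."""
    return c * n1 - d * math.log(n1) + b * n2 - a * math.log(n2)


def simulate(n1=4.0, n2=2.0, dt=0.01, steps=2000):
    """Return a list of (t, N1, N2) samples starting away from equilibrium."""
    trajectory = [(0.0, n1, n2)]
    for i in range(1, steps + 1):
        n1, n2 = lotka_volterra_step(n1, n2, dt)
        trajectory.append((i * dt, n1, n2))
    return trajectory


if __name__ == "__main__":
    # Print N1 and N2 over time, as in a plot of N against t.
    for t, n1, n2 in simulate()[::200]:
        print(f"t={t:5.2f}  N1={n1:6.3f}  N2={n2:6.3f}")
```

Plotting N1 against N2 from `simulate()` would trace the closed orbit, while plotting each against t gives the two alternating curves; adding a damping or saturation term (not shown) would break the conservation and remove the "frictionless" character.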
Considering discrete Fourier transforms, that these operations are incommensurable suggests that social knowledge is only derived from non-commutative orthogonal operators (i.e., the eigenstates of a Hermitian operator are orthogonal if their inner product is zero, as in $\langle\psi_m|\psi_n\rangle = 0$). Pure oscillations are unrealistic ("frictionless"; May, 1973) and possibly driven by illusions (endless discussions of religion, or risk perceptions common to cooperation processes). We have found that these are more likely from enforced cooperation under consensus than majority rules (i.e., the "gridlock" between DOE Hanford and its consensus citizens board, rather than accelerated cleanup at DOE SRS pushed by its majority rule board), because consensus rules, at least in DOE, specifically reject science as a determinant (Bradbury et al., 2003). The lack of an SPHO identifies decisions made by minority (consensus) or authoritarian rules (e.g., decisions common to military, authoritarian government or CEO business decisions; cf. Lawless et al., 2007). Unlike an organization's central command, a democratic space could be defined for a system as a space where decisions characterized by SPHOs are made by majority rule (e.g., jury, political, or faculty decisions). The key to building the abstract representations necessary to construct an SPHO is to locate opposing clusters of the shared interpretations of concepts geospatially across physical space or via a socio-psychological network anchored or mapped to physical network space. SPHOs should generalize to entertainment; e.g., Hasson and his colleagues (2004) found that a Clint Eastwood movie engages an audience's attention with this rocking process. This insight suggests that the reverse engineering of forcibly darkened organizations (e.g., terrorists, Mafia) is possible (Lawless et al., 2010b).

[Figure 1 chart: horizontal axis: Time, units]

Figure 1. Instead of a limit cycle (N1 versus N2; in May, 1973), the data are displayed with N over time, t. Arbitrary parameters produce "frictionless" oscillations from an NE. We interpret N1 and N2 to be in competition at time 1 (and t = 3.5, 6 and 7). The public acts at time 2 (and t = 3, 4 and 5) to produce social stability.

Figure 2. Despite the arbitrary nature of the data in Figure 1, the 2010 campaign for Senator in Illinois captures the limit cycle on the left from about the end of January 2010 to the end of April (at the far left, the Democrat is on top, but falls beneath the Republican by the end; for the contest between Obama and Clinton in 2008, see Lawless et al., 2010b).

The disturbance of a system oscillating between conjugate bistable states $|\psi\rangle$ by an operator $X_n$ produces an observable $x_n$ with probability $|a_n|^2$, causing $|\psi\rangle$ to collapse into an eigenstate $|n\rangle$ unless $|\psi\rangle$ is already in one (i.e., a classical image or interpretation). Here $a_n$ is the coefficient of $|\psi\rangle$ in an orthonormal basis, so the probabilities $|a_n|^2$ are normalized. According to Bohr (1955), complementary actors and observers and incommensurable cultures generate conjugate or bistable information couples that he and Heisenberg (1958) suggested paralleled the uncertainty principle at the atomic level. Our model tests their speculation and extends it to role conflicts (Lawless et al., 2009). Even for mundane social interactions, Carley (2002) concluded that humans become social to reduce uncertainty. Thus, the information available to any human individual, organization, or system is incomplete, producing uncertainty.
More importantly, this uncertainty has a minimum irreducibility that promotes the existence of tradeoffs between any two factors in an interaction (uncertainty in worldviews, stories or business models, $\Delta WV$, and their execution, $\Delta v$; uncertainty in centers of gravity, $\Delta x_{COG}$, and spatial frequencies, $\Delta k$; and uncertainty in energy, $\Delta E$, and time, $\Delta t$). Given $[A,B] = iC$, and the difference $\delta A = A - \langle A\rangle$, where $\langle A\rangle$ is the expectation value of A and its variance is $\langle\delta A^2\rangle$, then $[\delta A, \delta B] = iC$; further, $\langle\delta A^2\rangle\langle\delta B^2\rangle \geq \tfrac{1}{4}\langle C\rangle^2$, giving the Heisenberg uncertainty principle $\Delta A\,\Delta B \geq \tfrac{1}{2}|\langle C\rangle|$ (see Gershenfeld, 2000, p. 256). The uncertainty relation models the variance around the expectation value of two operators along with the expectation value of their commutator. The uncertainty in these oscillations can be reformulated to establish that Fourier pairs of standard deviations become:

$$\sigma_A \sigma_B \geq \tfrac{1}{2} \qquad (2)$$

pilots and book-knowledge of air combat maneuvering (Lawless et al., 2000); and captures the discrepancy between game-theory preferences and actual choices made during games (Kelley, 1992). It must allow rotation vectors as a function of the direction of rotation. It must permit measurements between vectors and rotations. And a model of interdependence must enable a mathematics of interdependent (ι) bistability where measurements disturb or collapse the ι states that occur during social-psychological interactions in physical space.

To date, we have simulated equation (2) for organizations. In the future, we plan to finalize the mathematics and simulate it for a system. Two operators A and B are community interaction matrices for an NE that locate ι objects in social space (shared conceptual space) geospatially. ι states are non-separable and non-classical; disturbances collapse ι states into classical information states.
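The operator form of the uncertainty relation, for Hermitian A and B with [A,B] = iC, can be checked numerically on a small example. The sketch below is only an illustration under stated assumptions: it uses the 2×2 Pauli matrices as stand-ins for A and B (they are not the paper's community interaction matrices), and the helper names `expectation`, `std_dev`, `commutator_observable`, `uncertainty_check` and `qubit_state` are hypothetical.

```python
import cmath
import math


def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def expectation(op, psi):
    """<psi| op |psi> for a normalized state vector psi."""
    n = len(psi)
    return sum(psi[i].conjugate() * op[i][j] * psi[j]
               for i in range(n) for j in range(n))


def std_dev(op, psi):
    """Standard deviation sqrt(<op^2> - <op>^2) of a Hermitian operator."""
    mean = expectation(op, psi).real
    mean_sq = expectation(matmul(op, op), psi).real
    return math.sqrt(max(mean_sq - mean * mean, 0.0))


def commutator_observable(a, b):
    """Return C such that [A, B] = iC, i.e. C = -i (AB - BA)."""
    ab, ba = matmul(a, b), matmul(b, a)
    n = len(a)
    return [[-1j * (ab[i][j] - ba[i][j]) for j in range(n)] for i in range(n)]


def uncertainty_check(a, b, psi):
    """Return (std(A) * std(B), 0.5 * |<C>|); the first should not be smaller."""
    c = commutator_observable(a, b)
    return std_dev(a, psi) * std_dev(b, psi), 0.5 * abs(expectation(c, psi))


# Illustrative stand-in operators (Pauli matrices), not taken from the paper.
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Y = [[0, -1j], [1j, 0]]


def qubit_state(theta, phi):
    """Normalized two-component state parameterized by two angles."""
    return [complex(math.cos(theta / 2)),
            cmath.exp(1j * phi) * math.sin(theta / 2)]


if __name__ == "__main__":
    for theta in (0.0, 0.5, 1.0, 1.5):
        lhs, rhs = uncertainty_check(SIGMA_X, SIGMA_Y, qubit_state(theta, 0.3))
        print(f"theta={theta:.1f}  product={lhs:.4f}  bound={rhs:.4f}")
```

For every state tried, the product of the two standard deviations stays at or above half the magnitude of the commutator's expectation value, which is the tradeoff the relation expresses: narrowing one spread forces the other to widen whenever the commutator term is nonzero.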
Two agents form an NE, one as Einstein and the other as Bohr, and meet in Copenhagen to discuss the implications of conjugate variables in quantum mechanics, the two exchanging incommensurable world views that profoundly disturb science and society even today (e.g., Frayn's 1998 play Copenhagen), together generating bistable social perspectives that drive competition, moderated by a neutral audience that exploits it to solve its problems. Cooperation may make an organization work better, but competition and conflict across a system of organizations produce classical interpretations of social reality that generate the unending debates that underlie technological innovation and social evolution. This conclusion, based on physics, significantly advances our understanding of human organizations and systems.

Acknowledgments

This material is based upon work supported by, or in part by, the U.S. Army Research Laboratory and the U.S. Army Research Office under grant number W911NF-10-1-0252.

References

Axelrod, R. (1984). The evolution of cooperation. New York, Basic.
Andrade, G. M.-M., Mitchell, M.L. & Stafford, E. (2001). New Evidence and Perspectives on Mergers, Harvard Business Working Paper No. 01-070. http://ssrn.com/abstract=269313.
Barabási, A.-L. (2009). "Scale-free networks: A decade and beyond." Science 325: 412-3.
Baumeister, R. F., Campbell, J.D., Krueger, J.I., & Vohs, K.D. (2005, January). "Exploding the self-esteem myth." Sci. American.
Bloom, N., Dorgan, S., Dowdy, J., & Van Reenen, J. (2007). "Mgt practice and productivity." Qtrly J. Econ 122(4): 1351-1408.
Bohr, N. (1955). Science and the unity of knowledge. The unity of knowledge. L. Leary. New York, Doubleday: 44-62.
Bradbury, J.A., Branch, K.M., & Malone, E.L. (2003). An evaluation of DOE-EM Public Participation Programs (PNNL-14200).

Equation (2), $\sigma_A \sigma_B \geq \tfrac{1}{2}$, indicates that as variance in factor A broadens, variance in factor B narrows.
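The tradeoff stated by equation (2) can be illustrated with a Gaussian and its Fourier transform, the minimum-uncertainty case in which the product of the two standard deviations equals ½. The sketch below is a hedged numerical check, not the authors' simulation: it assumes a Gaussian amplitude whose squared magnitude has standard deviation `sigma_x`, evaluates the transform by direct summation on a grid, and measures the spread of the squared magnitude on the frequency side. The function names and grid settings are hypothetical choices.

```python
import cmath
import math


def spread(points, weights):
    """Standard deviation of `points` under non-negative `weights`."""
    total = sum(weights)
    mean = sum(p * w for p, w in zip(points, weights)) / total
    var = sum((p - mean) ** 2 * w for p, w in zip(points, weights)) / total
    return math.sqrt(var)


def fourier_pair_spreads(sigma_x, n=201, span=8.0):
    """Return (sigma_x, sigma_k) measured numerically for a Gaussian amplitude.

    The amplitude is exp(-x^2 / (4 sigma_x^2)), so its squared magnitude has
    standard deviation sigma_x. The value 1 / (2 sigma_x) is used only to
    choose how wide the frequency grid should be.
    """
    half_x = span * sigma_x
    half_k = span / (2.0 * sigma_x)
    xs = [-half_x + 2.0 * half_x * i / (n - 1) for i in range(n)]
    ks = [-half_k + 2.0 * half_k * i / (n - 1) for i in range(n)]
    dx = xs[1] - xs[0]

    psi = [math.exp(-x * x / (4.0 * sigma_x ** 2)) for x in xs]
    # Direct (slow) evaluation of the Fourier integral at each frequency k.
    psi_hat = [sum(p * cmath.exp(-1j * k * x) for p, x in zip(psi, xs)) * dx
               for k in ks]

    measured_x = spread(xs, [p * p for p in psi])
    measured_k = spread(ks, [abs(f) ** 2 for f in psi_hat])
    return measured_x, measured_k


if __name__ == "__main__":
    for sigma_x in (0.5, 1.0, 2.0):
        sx, sk = fourier_pair_spreads(sigma_x)
        print(f"sigma_x={sx:.3f}  sigma_k={sk:.3f}  product={sx * sk:.3f}")
```

Widening the distribution in x narrows it in k by the same factor, so the product stays fixed for Gaussians; any non-Gaussian shape would give a larger product, consistent with the inequality.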
Summary

To summarize, a model of interdependence in the interaction of organizations must be able to test the proposition that information in organizational interactions can be modeled by the conservation of information. It can do this if it reflects conjugate perspectives; e.g., between prosecutors and defense attorneys (Busemeyer, 2009); between actions and observations or between multiple cultures (Bohr, 1955); between USAF combat fighter jet

Lawless, W. F., Rifkin, S., Sofge, D.A., Hobbs, S.H., Angjellari-Dajci, F., & Chaudron, L. (2010b). "Conservation of Information: Reverse engineering dark social systems." Structure and Dynamics: eJournal of Anthropological and Related Sciences.
Lilienthal, D. (1963). Change, hope, and the bomb. Princeton, NJ, Princeton University Press.
May, R. M. (1973/2001). Stability and complexity in model ecosystems. Princeton, NJ, Princeton University Press.
Nash, J. F., Jr. (1951). "Non-cooperative games." Annals of Mathematics Journal 54: 296-301.
NRC (2009). Applications of Social Network Analysis for building community disaster resilience, Magsino, S.L. Rapporteur, NRC for DHS, Workshop Feb 11-12, 2009, Washington, DC: National Academy Press.
Ostrom, E. (2009). "A general framework for analyzing sustainability of social-ecological systems." Science 325: 419-422.
Pfeffer, J., & Fong, C.T. (2005). "Building organization theory from first principles." Organization Science 16: 372-388.
Rand, D. G., Dreber, A., Ellingsen, T., Fudenberg, D., & Nowak, M.A. (2009). "Positive Interactions Promote Public Cooperation." Science 325(5945): 1272-1275.
Rieffel, E. G. (2007). Certainty and uncertainty in quantum information processing. Quantum Interaction, Stanford, AAAI Press.
Rieffel, E. G. (2008). Quantum computing, arXiv: 0804.2264v2 [quant-ph], 26 September 2008.
Sanfey, A. G. (2007). "Social decision-making: Insights from game theory and neuroscience." Science 318: 598-602.
Schweitzer, F., Fagiolo, G., Sornette, D., Vega-Redondo, F., Vespignani, A., & White, D.R. (2009). "Economic networks: The new challenges." Science 325: 422-5.
Simon, H. A. (1990). "A mechanism for social selection and successful altruism." Science 250(4988): 1665-1668.
Von Neumann, J., and Morgenstern, O. (1953). Theory of games and economic behavior. Princeton, Princeton Univ. Press.
Ying, M. (2010). Quantum computation, quantum theory and AI. Artificial Intelligence, 174: 162-176.
Busemeyer, J. & Trueblood, J. (2009). Comparison of quantum and Bayesian inference models, In Bruza et al., 2009, Springer.
Carley, K. M. (2002). Simulating society: The tension between transparency and veridicality. Social Agents, U Chicago, ANL.
Carley, K.M. (2006). Destabilization of covert networks, Computational & Mathematical Organizational Theory, 12: 51-66.
Cohen, L. (1995). Time-frequency analysis: theory and applications, Prentice Hall Signal Processing Series.
Conant, R. C., & Ashby, W.R. (1970). "Every good regulator of a system must be a model of that system." Int J Sys Sci, 1(2): 89-97.
Conant, R. C. (1976). "Laws of information which govern systems." IEEE Transaction on Systems, Man, and Cybernetics 6: 240-255.
Feynman, R. (1996). Feynman lectures on computation. New York, Addison-Wesley.
Freer, R.D. & Purdue, W.C. (1996). Civil procedure, Cincinnati: Anderson.
Gershenfeld, N. (2000). The physics of information technology. Cambridge, Cambridge University Press.
Hardin, G. (1968). "The Tragedy of the Commons." Science 162: 1243-1248.
Hasson, U., Nir, Y., Levy, I., Fuhrmann, G., & Malach, R. (2004). Science 303: 1634-1640.
Heisenberg, W. (1958/1999). Physics and philosophy. The revolution in modern science. New York, Prometheus Books, pp. 167-186.
Jasny, B. R., Zahn, L.M., & Marshall, E. (2009). Science 325: 405.
Kelley, H. H. (1992). "Lewin, situations, and interdependence." Journal of Social Issues 47: 211-233.
Landers, D.M., and Pirozzolo, F.J.
(1990), Panel discussion: Techniques for enhancing human performance, Ann. mtg APA, Boston.
Lawless, W. F. (1985). "Problems with military nuclear wastes." Bulletin of the Atomic Scientists 41(10): 38-42.
Lawless, W. F., Castelao, T., and Ballas, J.A. (2000). "Virtual knowledge: Bistable reality and the solution of ill-defined problems." IEEE Systems Man, and Cybernetics 30(1): 119-126.
Lawless, W. F., Bergman, M., & Feltovich, N. (2005). "Consensus-seeking versus truth-seeking." ASCE Practice Periodical of Hazardous, Toxic, and Radioactive Waste Management 9(1): 59-70.
Lawless, W. F., Bergman, M., Louçã, J., Kriegel, N.N. & Feltovich, N. (2007). "A quantum metric of organizational performance: Terrorism and counterterrorism." Computational & Mathematical Organizational Theory 13: 241-281.
Lawless, W. F., Sofge, D.A., & Goranson, H.T. (2009). Conservation of Information: A New Approach To Organizing Human-Machine-Robotic Agents Under Uncertainty. Quantum Interaction. Third International Symposium, QI-2009. P. Bruza, Sofge, D.A., Lawless, W.F., Van Rijsbergen, K., & Klusch, M. Berlin, Springer-Verlag.
Lawless, W. F., Angjellari-Dajci, F., & Sofge, D. (2010a). EU Economic Integration: Lessons of the Past, and What Does the Future Hold?, Dallas, TX, Federal Reserve Bank of Dallas.