Document 13877997

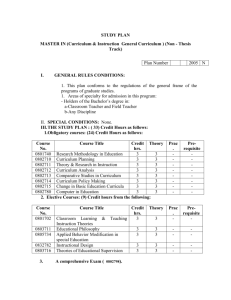

- Introducing CORE Values, Measures & Methods
- Common Challenges Implementing ROM as PBE
- Successfully Introducing ROM: A Single Case Study

Practice-based Evidence (Barkham, Stiles, Lambert & Mellor-Clark, 2010)

Model: Evidence Based Practice
- Method: Rigorous efficacy studies; meta-analytic studies and randomised controlled trials
- Product: Sets standards of guidelines for practitioners in routine settings
- Yield: Services are led to deliver evidence-based interventions

Model: Practice Based Evidence
- Method: Relevant effectiveness studies and practice research within services linked through practice research networks
- Product: Sets common and specific data goals drawn from a pool of standardised and face-valid tools
- Yield: Research is led to investigate issues important to the whole service

Activities linking the two models
- Service systems generate questions relevant for rigorous research to assess the potential for practice
- Rigorous research delivers hypotheses relevant for naturalistic investigation through practice applications

Putting quality at the heart of therapy

| RCTs | PBE Data |
| --- | --- |
| Top-down, driven by researchers | Bottom-up, driven by practitioners |
| Formal design defines process | Informal design |
| Led by researcher allegiance | Focused on service delivery |
| Stringent inclusion criteria | Naturalistic |
| Single, specific manualised treatment | All treatment as delivered in practice |
| Rich data on small N | Rich data on large N |

The CORE Outcome Measure

CORE-OM is a 34-item questionnaire designed to measure a client's global distress across 4 domains:
- Subjective well-being
- Commonly experienced problems or symptoms
- Life and social functioning
- Risk to self and others

Evans, C., Mellor-Clark, J., Margison, F., Barkham, M., Audin, K., Connell, J. & McGrath, G. (2000). CORE: Clinical Outcomes in Routine Evaluation. Journal of Mental Health, 9(3), 247-255.

Evans, C., Connell, J., Barkham, M., Margison, F., McGrath, G., Mellor-Clark, J. & Audin, K. (2002). Towards a standardised brief outcome measure: Psychometric properties and utility of the CORE-OM. British Journal of Psychiatry, 180, 51-60.

Modeling Common Mental Health Problems: Onset and Effects and Treatment

[Figure: score axis from 0 to 4 across the phases Events, Remoralise, Remediate and Rehabilitate, with curves for Subjective well-being, Problems or symptoms, Functioning and Risk.]

Barkham, M., Mellor-Clark, J., Connell, J. & Cahill, J. (2006). A CORE approach to practice-based evidence: A brief history of the origins and applications of the CORE-OM and CORE System. Counselling & Psychotherapy Research, 6, 3-15.

[Figure: CORE-OM "All items" score (axis 0 to 4) by sample: non-clinical (N = 1084) and clinical (N = 863). Source: Evans et al. (2002).]

[Figure: 'Jacobson Plot' showing clinical and reliable change for CORE-OM completed pre and post therapy (n = 1087). Horizontal axis: pre-therapy CORE total mean score (0 to 4). Vertical axis: post-therapy CORE total mean score (0 to 4). Marked regions: clinically reliable and significant deterioration; no reliable change; clinically reliable and significant improvement. The clinical cut-off is marked.]

CORE 'Quality Evaluation' Model

Mellor-Clark, J. & Barkham, M. (2006). The CORE System: Developing and delivering practice-based evidence through quality evaluation. In C. Feltham & I. Horton (eds.), Handbook of Counselling and Psychotherapy. 2nd Edition. London: Sage Publications.

Mellor-Clark, J. & Barkham, M. (2012). Using the CORE System to support service quality development. In C. Feltham & I. Horton (eds.), Handbook of Counselling and Psychotherapy. 3rd Edition. London: Sage Publications.
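The regions of the Jacobson plot above can be read as a simple classification rule on a client's pre- and post-therapy mean scores. The following is a minimal sketch of that rule, not the CORE System's own implementation. The names `ChangeCriteria` and `classify_change` are hypothetical, the cut-off and reliable-change values in the usage note are illustrative placeholders rather than published CORE-OM parameters, and the handling of scores that fall exactly on a boundary is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeCriteria:
    """Thresholds for classifying change (values are supplied by the caller)."""
    clinical_cutoff: float  # mean score at or above which a client counts as 'clinical' (assumed convention)
    reliable_change: float  # minimum pre/post difference treated as reliable (assumed convention)


def classify_change(pre: float, post: float, criteria: ChangeCriteria) -> str:
    """Classify one client's pre/post mean scores; higher scores mean more distress."""
    improvement = pre - post  # positive when distress has fallen
    if improvement >= criteria.reliable_change:
        # Reliable improvement that also crosses from the clinical to the
        # non-clinical side of the cut-off is 'clinically significant'.
        if pre >= criteria.clinical_cutoff and post < criteria.clinical_cutoff:
            return "reliable and clinically significant improvement"
        return "reliable improvement"
    if -improvement >= criteria.reliable_change:
        # Mirror image: reliable worsening that crosses into the clinical range.
        if pre < criteria.clinical_cutoff and post >= criteria.clinical_cutoff:
            return "reliable and clinically significant deterioration"
        return "reliable deterioration"
    return "no reliable change"
```

For example, with placeholder thresholds `ChangeCriteria(clinical_cutoff=1.0, reliable_change=0.5)`, a client moving from 2.0 to 0.8 falls in the improvement region, while a client moving from 2.0 to 1.8 falls in the "no reliable change" band along the diagonal.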
Using the CORE System for Service Quality Development

| Stage | Quality question |
| --- | --- |
| Referral | Do client profiles suggest equity in their representation of local populations? |
| Waiting | Are first contact sessions easy to access? |
| Assessment | Are clients' assessed problems appropriate to the therapies offered? |
| Therapy | How efficiently does the service use its resources and how acceptable are therapy experiences to clients? |
| Ending | How effective is therapy? |

Mellor-Clark, J. (2006). Developing CORE performance indicators for benchmarking in NHS primary care psychological therapy and counselling services: An editorial introduction. Counselling & Psychotherapy Research.

Mellor-Clark, J. & Barkham, M. (2006). Editorial: Using Clinical Outcomes in Routine Evaluation. European Journal of Psychotherapy and Counselling, 8, 137-140.

Learning from CORE Outcomes?

- The proportion of clients that have post-therapy outcomes using traditional T1+T2 measurement methods is typically around 25% of those attending assessments and 50% of those entering therapy. Similar proportions appear common in IAPT datasets despite the increased frequency of measurement.
- The proportion of clients measured as achieving clinical and/or reliable change is typically around 1-in-3 of those assessed. Similar proportions are found in IAPT datasets and in PBE data collected by Lambert and colleagues using the OQ Suite.
- CORE Outcome data pools provide little evidence of superiority of any of the common UK therapy models. CBT, psychodynamic therapy and humanistic counselling all have similar outcome profiles in terms of recovery and improvement for clients presenting with mild to moderate severity profiles.
- Dave Saxon and Michael Barkham (2013; in press) suggest far more differences can be found between therapists than between the models of therapy they practice.

So what's our response ...
COPYRIGHT: CORE IMS LTD

Revisiting the Personal Questionnaire (Shapiro, 1975; Elliot et al., 1999)

[Figure: OQ Total Score (axis 50 to 100) at Pre-test, Feedback and Post-test for the groups OT-Fb, OT-NFb, NOT-NFb I & II, NOT-Fb I & II, NOT-Fb+CST I & II, T/Pat Fb and NOT-Fb+CST III.]

Lambert's Results (2011)

| Group | Recovered or Improved | No Change | Deteriorated |
| --- | --- | --- | --- |
| NOT-NFb (n = 286) | 60 (21%) | 165 (58%) | 61 (21%) |
| NOT-Fb (n = 298) | 104 (35%) | 154 (52%) | 40 (13%) |
| NOT-Fb+CST (n = 239) | 121 (51%) | 102 (43%) | 16 (6%) |

What do clinicians think about using routine CORE measurement?

Routine Outcome Measurement

- PBE data suggest 50% of clients show no reliable change when treatment ends and 10% experience deterioration.
- In addition, treatment dropout rates are estimated to range from 20% (adult) up to 40% to 60% (child).
- ROM tools could be useful to supplement clinical judgement, as there is no current evidence to suggest practitioners are able to accurately detect when their clients are worsening.
- RCTs demonstrate that where ROM tools are used to supplement clinical judgement, clients in the feedback group were 3.5 times more likely to achieve reliable change.
- The sum of evidence suggests that it is in clients' best interest to formally monitor treatment responses in order to increase the potential for reliable post-treatment change.

Source: Mellor-Clark et al. (2014)

Routine Outcome Measurement

PBE data suggest 50% of clients show no reliable change when treatment ends and 10% experience deterioration.

- Surveys report that practitioners estimate 85% of their clients improve or recover by the end of their treatment, negating the potential value of ROM.
- Practitioners are overscheduled, with no time to assess ROM systems or to plan implementation, interpretation, reporting and client feedback.
- Practitioners may resist ROM because they believe that clients may find it a burden or that the process may interfere with the alliance.
- Implementing ROM needs software, training and support that are not currently funded, leaving services to finance them from existing tight budgets.
- Practitioners lack confidence that data will be managed confidentially, or interpreted reliably, leaving them feeling exposed to performance assessment.

Source: Mellor-Clark et al. (2014)

Learning from Passive ROM Implementation?

- Practitioners carry a wide range of beliefs, attitudes, feelings, and experiences into the introduction of routine outcome measurement that are rarely if ever systematically assessed by managers or researchers.
- Measurement is commonly implemented as an administrative and/or technical process rather than a clinical one, which strips clients' ROM responses of therapeutic significance.
- Where ROM data are reviewed, they are rarely explored in any depth for fear of exposing individual practitioners. This perpetuates clinical apathy, poor data quality and minimal reflectivity on service development implications.

QIF Phases and Critical Steps

Assess the Host Setting
1. Assess needs and resources.
2. Assess fit.
3. Assess capacity/readiness for change.
4. Make decisions about innovation adaptations.
5. Secure practitioner buy-in.
6. Build service capacity.
7. Staff recruitment.
8. Deliver pre-implementation training.

Create a Structure for Implementation
9. Create an implementation team.
10. Develop an implementation plan.

Deploy Post-implementation Support Strategies
11. Technical assistance/coaching/supervision.
12. Process evaluation.
13. Supportive feedback mechanisms.

Improve Future Applications
14. Learning from experience.

CORE IMS ROM Implementation Resources and Processes

Assess the Host Setting (critical steps 1 to 8)
i. Meet with the nominated service's ROM Lead to conduct a Pre-implementation Planning Meeting and undertake a Service Profile Survey to assess the fit between the service's aspirations and its readiness for organisational change.
ii. Administer the Routine Outcome Measurement Survey to all service practitioners and managers to assess individual philosophical and practical attitudes towards sessional ROM relative to traditional T1+T2 and discretional measurement.
iii. Select and/or review nominated ROM Mentors in light of survey results.

Create a Structure for Implementation (critical steps 9 and 10)
iv. Create a local Implementation Management Group (IMG) to review data from the ROM Survey, set appropriate quarterly data targets, and agree off-track actions as advance remedial steps for missed targets.
v. Write and deploy an Implementation Plan to communicate the concrete quarterly performance indicators defining successful implementation and the remedial actions for missed targets.
vi. Deliver Training and E-learning Resources that address the common ROM restraints to help build a consensus opportunity.

Deploy Post-implementation Support Strategies (critical steps 11 to 13)
vii. Deliver Data Quality Reports at Months 1, 2, 3 and 6 to profile individual practitioner engagement relative to data quality targets.
viii. Provide Mentor Support Calls to discuss the implications of data quality reports, and chair Quarterly IMG Meetings to agree reparative actions to keep the service on track to meet agreed targets.

Improve Future Applications (critical step 14)
ix. Support Mentors to teach their Mentees to Clear Flags with brief reflective case notes for all clients lacking any reliable improvement on sessional measurement scores after 3-6 sessions (duration depending on case mix).
x. Provide and manage a 'Basecamp' Resource to encourage Managers, Mentors and Mentees to chart their ROM implementation 'journey', reflecting on how challenges were overcome and iteratively sharing positive experiential and empirical yields as they occur in (near) real-time.

Introducing Routine Outcome Measurement: An Action Research Case Study

QIF Phase 1: Assess the Host Setting (critical steps 1 to 8; CORE IMS processes i to iii)

QIF Phase 2: Create a Structure for Implementation (critical steps 9 and 10; CORE IMS processes iv to vi)

Reframing routine outcome measurement

"The psychotherapist learns little or nothing from successes. They mainly confirm in him his mistakes, while his failures on the other hand, are priceless experiences in that they not only open a deeper truth, but force him to change his views & methods." Carl Jung (1875-1961)

QIF Phase 3: Deploy Post-implementation Support Strategies (critical steps 11 to 13; CORE IMS processes vii and viii)

QIF Phase 4: Improve Future Applications (critical step 14; CORE IMS processes ix and x)

CORE Net Flags: Off Track

Learning from Active ROM Implementation?

- Appropriately resourced, led and managed, ROM implementation can be a success that brings unity, curiosity and pride to services.
- Leadership appears to be a critical success factor: an effective process that is ineffectively led will fail to meet data quality and engagement targets.
- Practitioner safety is paramount, and the challenges that the ROM process places on self-efficacy assessments shouldn't be underestimated.
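The off-track flag described under QIF Phase 4 (clients lacking any reliable improvement on sessional scores after 3-6 sessions) can be sketched as a small check over a client's session scores. This is an illustration under stated assumptions, not CORE Net's actual flagging logic. The function names, the comparison of the latest score against the first-session score, and the threshold values in the usage note are all hypothetical.

```python
from typing import Dict, List, Sequence


def is_off_track(scores: Sequence[float], review_after: int, reliable_change: float) -> bool:
    """Return True when a client should be flagged for a reflective case note.

    scores          -- sessional scores in session order (higher = more distress)
    review_after    -- number of sessions before a flag can be raised
                       (the slides give 3-6, depending on case mix)
    reliable_change -- minimum drop from the first score treated as reliable
                       (assumed to be supplied by the service)
    """
    if review_after < 2:
        raise ValueError("review_after must allow at least two scores to compare")
    if len(scores) < review_after:
        return False  # too early to judge
    # Assumption: 'improvement' compares the latest score with the first one.
    improvement = scores[0] - scores[-1]
    return improvement < reliable_change


def flagged_clients(caseload: Dict[str, List[float]], review_after: int,
                    reliable_change: float) -> List[str]:
    """List the client identifiers in a caseload that are currently off track."""
    return sorted(
        client for client, scores in caseload.items()
        if is_off_track(scores, review_after, reliable_change)
    )
```

With a hypothetical caseload such as `{"A": [2.0, 1.9, 2.1, 2.0], "B": [2.0, 1.6, 1.3, 1.2], "C": [2.0, 2.1]}`, a four-session review point and a placeholder threshold of 0.5, only client "A" would be flagged: "B" has improved by more than the threshold and "C" has not yet reached the review point.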