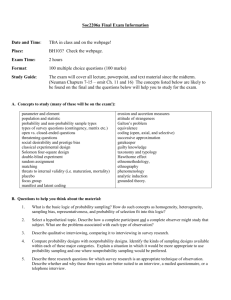

Independent Multidisciplinary Science Team (IMST)
