From: AAAI Technical Report FS-94-01. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Case-Based Plan Recognition in Dynamic Domains

Dolores Cañamero, Yves Kodratoff
CNRS-LRI
Bât. 490, Université Paris-Sud
91405 Orsay Cedex, France
{lola, yk}@lri.lri.fr

Jean-François Delannoy
Dept. of Computer Science
University of Ottawa
Ontario K1N 6N5, Canada
delannoy@csi.uottawa.ca

Michel Barès
DRET-SDR
4, rue de la Porte d'Issy
00460 Paris Armées, France

Abstract

In the context of a Command, Control, Communication and Intelligence (C3I) system we have built a CBR module for plan recognition. It is based on knowledge representation structures called XPlans, inspired in part by Schank's Explanation Patterns. The uncertainty inherent to an uncontrolled flow of input and the presence of lacunary data make the retrieval of cases difficult. This led us to develop an algorithm for partial and progressive matching of the target case onto some of the source cases. This matching amounts in practice to a credit assignment mechanism, included in the algorithm associated with each XPlan. This method has been designed to meet the requirements of a DRET¹ project to build a decision support module for a C3I system--the MATIS project. Its task is to interpret and complete the results of an intelligent "pattern-recognition-and-data-fusion" module in order to make the intentions underlying the recognized situation explicit to the decision-maker. This advice is given as a causal explanation of an agent's behavior from low-level information.

¹ DRET is a service of the French Ministère de la Défense Nationale.

Introduction

In the context of a Command, Control, Communication and Intelligence (C3I) system we have built a Case-Based Reasoning (CBR) module for plan recognition. It is based on knowledge representation structures called XPlans, inspired in part by Schank's Explanation Patterns (Schank, 1986; Schank and Kass, 1990), but which have been designed to recognize an agent's plans and motivations in dynamic domains and from low-level data. XPlans are basically prototypical cases of plans containing both the standard behavior of an agent in some given circumstances (planning knowledge), and knowledge about how to interpret the behavior of an agent in those same circumstances (recognition knowledge). Plausible plans reflecting the standard behavior in the circumstances in which the observed agent currently finds him/herself are used to interpret the actual agent's behavior. They are progressively confirmed or rejected depending on the compliance of the observed with the standard behavior.

Our plan recognition system is a module to be integrated in a C3I system. Current C3I systems are intended to help command, not to issue commands. In the context of electronic war, commanders find it more and more difficult to simply process the huge amounts of data they are made aware of. The main role of C3I is thus to perform data fusion as intelligently as possible. Nevertheless, the same data may have very different interpretations depending on the context. Even for "simple" data fusion, determining the context is equivalent to guessing what the enemy's intentions and plans are. This is why a high-level interpretation layer is necessary even for a C3I that does not pretend more than helping a commander avoid being bogged under huge amounts of information.

Our system is "real-world" as it has to deal with real data--an evolving situation described at a level which is lower than that of self-contained actions, and which consists of punctual events. Our data have been partially collected by Intelligence, and we must adapt to their knowledge representation for our input/output (our other information sources are the 3 lower levels of MATIS, see next section). These data present six undesirable features:

1. Measures are taken in a fixed way. This means that incoming data may not contain the information characterizing a situation. For example, in order to recognize that a car stopped at a traffic light, one usually assumes that the car is recorded while stopped at the light. This may not be true for our data, which may only report a car slowing down before a light, and the measurement of the car at null speed may be missing.

2. Since measurements performed at some point may take some time to reach the interpretation center, the temporal ordering of input data may not be the same as the ordering that occurred in the field. Consequently, a hypothesis must never be discarded on the grounds of missing data at a given time, since confirming data may arrive later.

3. There are different levels of granularity in the data. For example, some information may be very relevant for a combat section and not at all for a company, etc.

4. For every level of granularity, the units are performing tasks at which they normally collaborate. Each agent is intelligent in its way, but it is not free to threaten in any way the general plan (on a battlefield, "backtracking" usually implies heavy losses). Freedom is strongly limited, but never totally absent.

5. Data are lacunary and can be noisy.

6. Data contain information that represents actions which take place at specific locations and at specific points in time.
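The consequences of delayed and located measurements can be illustrated with a minimal sketch. It assumes, hypothetically, that each event carries the time at which it was measured in the field, so that events can be put back in field order once they have arrived. The names `Event`, `measured_at` and `field_order` are illustrative and do not come from the MATIS data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A punctual observation: one place, one instant, no duration (hypothetical layout)."""
    measured_at: float   # time of measurement in the field, in seconds
    position: tuple      # (x, y) location of the observed agent, in metres
    speed: float         # instantaneous speed, in m/s


def field_order(arrivals):
    """Return the events sorted by measurement time rather than by arrival order."""
    return sorted(arrivals, key=lambda e: e.measured_at)


# Events as they reach the interpretation center: the first one listed was delayed.
arrivals = [
    Event(measured_at=2.0, position=(40.0, 0.0), speed=8.0),
    Event(measured_at=1.0, position=(30.0, 0.0), speed=12.0),
    Event(measured_at=3.0, position=(46.0, 0.0), speed=4.0),
]
ordered = field_order(arrivals)
speeds = [e.speed for e in ordered]
```

In this toy input the car is seen slowing down only after reordering, and the measurement at null speed never arrives, so a hypothesis such as "stopping at the light" has to remain open rather than be discarded.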

A seventh requirement of a different nature must also be met. Since a C3I system is intended to help a commander who has heavy responsibilities, and who is held personally responsible for his/her decisions, it is not possible to make decisions on data fusion in a way which is not directly understandable to the commander. Answers have to be presented to the commander in his/her own language. This explanatory faculty is very high-level, but it is also another requirement of real life: a C3I system which does not explain its actions will at best be reluctantly accepted under heavy constraints. This seventh requirement is especially relevant for decision support systems which are to be used in critical domains.

Strong specifications such as the seven above cannot be exactly met in the present state of the art, which says little about recognition from events, or from noisy and lacunary input, although these questions are classic in statistical signal recognition and in the machine learning community (Kodratoff et al., 1990). The different approaches only provide partial answers to these problems. Plan recognition is generally considered in an ideal context, where the input is supposed to be 100% correct (violating requirement 5) and consists of actions, that is, chunks of activity representing well-defined transitions in the situation (thus violating requirement 1). Such ideally clean contexts can be described in strong-theory domains, and make possible the application of formal techniques such as abduction (Charniak and McDermott, 1985; Kautz, 1991; Allen et al., 1991; van Beek and Cohen, 1991) or syntactic parsing (Vilain, 1990). Lacunarity is addressed by (Pelavin, 1991) who refines on Kautz by incorporating partial descriptions of the world (corresponding to our lacunary input) and external events (as in our notion of an evolving situation). However, he does not consider false data. Uncertainty has been well addressed by probabilistic and spreading activation approaches, which have in common the use of a confidence value attached to the plans retained as hypotheses. (Charniak and Goldman, 1991) propose a probabilistic model of plan recognition based on a Bayesian network, where the input is low-level, and the output as well, whereas high-level output is one of our requirements. Models of spreading activation (Quast, 1993; Lange and Wharton, 1993) may be more flexible as they allow a more versatile specification of the behavior of the library nodes in the recognition process. Within the frame-based approach to plan recognition, (Schank and Riesbeck, 1991) make powerful use of commonsense knowledge and of explanation questions, but cannot interpret evolving situations. The narrative understanding system GENESIS (Mooney, 1990a&b) combines case-based recognition and explanation-based learning for automatic schema acquisition. However, this approach does not address problems of lacunarity, uncertainty, and low-level input. (Laskowski and Hofmann, 1987) have efficient belief and time-handling mechanisms, but their approach is again based on actions, not on events.

In order to cope at least partially with all these requirements, we have developed a form of knowledge representation called here XPlans. These knowledge representation structures are essentially cases or prototypes of plans which are progressively recognized as the indices of the field are gathered. They give an interpretation of low-level real data in terms of a plan applied by an agent and of the causes and motivations that explain this behavior.

The MATIS Project

Our plan recognition system is to be integrated in MATIS (Modèle d'Aide à la décision par le Traitement de l'Information Symbolique--"model of decision-aid by symbolic information processing"). The MATIS model is designed to provide support for military decision (Barès, 1989) in critical or difficult situations, within the context of C3I. However, it can obviously be of use in all civilian contexts in which decision making becomes difficult. It covers a comprehensive range of tasks, from the processing of basic raw data (generally numeric) to the handling of "knowledge objects", i.e., elements which carry some direct relevance for the decision-maker.

MATIS Architecture

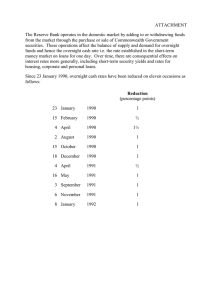

Some hypotheses are used to somehow anticipate the situation, or to enumerate possible behaviors of the enemy, by taking into account some knowledge stored in the memory of the system. This knowledge consists of facts already known about the situation. Facts are confirmed by the doctrine (see Figure 1). There is no need for these hypotheses to be exact at first, since they are mainly used to initialize the model, and they will be changed according to the situation as perceived by the upper layers. These hypotheses can be seen as a layer previous to MATIS interpretation layers (Figure 1):

• The first layer consists of a perceptual system made of a number of sensor families which track various physical parameters. It performs data fusion on basic data observed by the sensors--observations. The numeric output from the sensor families is converted to symbolic format by a first interpretation treatment, called perception in Figure 1.

• The second layer identifies elements relevant to the action, e.g., a car, the order of battle, etc. It attributes a name to each relevant element or entity.

• The third layer groups entities into significant collections of objects--association.

These three layers progressively enrich the information flowing through the reasoning system of MATIS, mainly by increasing the semantic content of each knowledge object.

Our plan recognition system can be seen as a fourth layer. It handles the semantically richest information of the third layer. The fourth layer does not bear just on objects, but rather on their behavior--using XPlans, it interprets this behavior in terms of predefined plans and goals.

Figure 1: MATIS architecture and knowledge processing. Our plan recognition system can be seen as a fourth layer. Feedback occurs only through factual knowledge. [Figure labels: field data (e.g., radar pictures, infrared pictures, etc.); perception; real world; symbolic world.]

Finally, a presentation layer displays the semantically enriched data in different strata depending on the user's needs. These strata correspond to the following levels in the objects perceived: shapes, like elements of a vehicle; objects, like vehicles; collections of objects, e.g., armored companies; relations among objects and their collections; and elementary moves, e.g., tank #32 moves forward, or company #3 moves eastwards.

In MATIS there is no feedback from the semantic levels onto the numeric data, contrary to the present tendency to require high-level understanding to help correct potential errors in the numeric data whenever they induce high-level contradictions. In fact, there is a quite strict sequential process of relevance enrichment, i.e., while compressing information, the understandability of knowledge objects is progressively improved. Similarly, there is no feedback from a higher semantic level to a lower one, and the information content must strictly increase from the lower to the higher levels. The only exception to this rule is the possibility to add new facts into the factual knowledge base. Added facts can come either from the user, or from the plan recognition module. A consequence of this "almost no-feedback" policy was a stringent requirement on the robustness of the interpretation modules, which must inherently be able to handle noisy and irregular data.

Constraints on Plan Recognition

In real conditions of operation C3I systems are unavoidably faced with massive amounts of low-level data, which are not altogether reliable, and may be lacking at crucial moments. Such a context imposes weak-theory modeling and incremental evidence assignment. This robust approach is adopted in our system. In addition to the requirements imposed by the nature of data, several characteristics follow from this robust approach:

• The input consists of an imposed sequence of events (not of actions): (a) they are snapshots of the observed activity (they have no duration), situated at whatever point of the behavior of a car; on the contrary, an action spans some amount of time; (b) they are measured at any time, not necessarily at the time of a qualitative transition. In fact, qualitative transitions have to be reconstructed from events--for example, the system must find that the car is turning left, based on the position and speed of the car at various instants.

• The system must be able to process massive input, and to smooth out the incidence of false data.

• The output must include a list of plausible plans, the confidence rate of which must be incrementally confirmed.

• The system must be able to interpret low-level data in terms of high-level concepts so that the advice provided can be easily understood by the user. This advice must also be satisfactorily justified.
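Reconstructing a qualitative transition such as "the car is turning left" from punctual events can be sketched as follows. This is an illustration under stated assumptions, not the method used in the system: positions are taken to be (x, y) coordinates with x pointing east and y pointing north, and the function names and the 20-degree threshold are hypothetical choices.

```python
import math


def heading(p, q):
    """Direction of travel from point p to point q, in radians."""
    return math.atan2(q[1] - p[1], q[0] - p[0])


def turn_direction(positions, threshold_deg=20.0):
    """Classify a sequence of (x, y) snapshots as 'left', 'right' or 'straight'.

    The signed change of heading between the first and the last segment is
    compared to an arbitrary threshold (an assumption of this sketch).
    """
    if len(positions) < 3:
        return "unknown"  # too few events to reconstruct a transition
    first = heading(positions[0], positions[1])
    last = heading(positions[-2], positions[-1])
    # Wrap the difference into (-pi, pi] so that left turns are positive.
    delta = math.atan2(math.sin(last - first), math.cos(last - first))
    if math.degrees(delta) > threshold_deg:
        return "left"
    if math.degrees(delta) < -threshold_deg:
        return "right"
    return "straight"


# Five position snapshots of a car heading east, then bending north.
snapshots = [(0.0, 0.0), (10.0, 0.0), (18.0, 3.0), (22.0, 10.0), (22.0, 20.0)]
result = turn_direction(snapshots)
```

No single snapshot says that a turn is in progress; the transition only appears when several events are compared, which is why events, unlike actions, need this reconstruction step.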

The Problem Situation

The domain chosen for the initial modeling had to present the features listed above. In a car driving domain, our system must face the following type of problems. A car--the agent--is moving. Its driver is supposed to be a rational agent with goals or motivations (e.g., saving time, saving gas, etc.) that will lead him/her to drive in a certain way rather than another in order to satisfy a particular goal in a specific situation. The observer--the recognition system with cooperation from the decision maker--cannot see the car directly. In principle, it knows nothing about the agent. The recognition situation is a case of keyhole recognition (Cohen et al., 1981), i.e., the agent is unaware of being observed, and the observer cannot attribute to the actor any intention to cooperate in the recognition process. The only information the observer has available are sets of low-level data such as speeds, positions, and distances, which arrive periodically. As an additional assumption, the observer considers this agent "at the knowledge level". That is, it considers this agent, which is embedded in a task environment, as composed of bodies of knowledge (about the world), some of which constitute its goals, and some of which constitute the knowledge needed to reach these goals--the agent processes its knowledge to determine the actions to take. Its behavioral law is the principle of rationality--"If an agent has knowledge that one of its actions will lead to one of its goals, then the agent will select that action" (Newell, 1982)--but in its more pragmatic form of the two-step rationality theory (van de Velde, 1993). In this form, the practical application of the principle of rationality is constrained by the boundaries of a model of the problem situation (Cañamero, 1994), which takes into account the specific circumstances in which the task is to be solved--'task features'. This way, the assumption is made that the behavior of the agent is both rational and practical. Based on this assumption, and from the available low-level data, the observer must interpret the car driver's behavior. In particular, it must explain: (a) what the driver seems to be doing, e.g., overtake a car; (b) how s/he does it, e.g., faster than it would be safe; and (c) why s/he acts this way rather than another--what his/her goals or motivations are (saving time, etc.).

Plan Recognition Based on XPlans

Plan recognition can be defined as the interpretation of a changing situation. It can be seen as a process of understanding inverse to that of planning (Wilensky, 1981, 1983): while planning involves the construction of a plan whose execution will bring about a desired state (goal), in plan recognition the observer has to follow the goals and plans of actors in a situation in order to make inferences. This process implies making sense out of a set of observations by relating them to previous knowledge so that the observer can integrate those observations in an overall picture of the situation. In other words, plan recognition here is considered as understanding a problem situation by relating some observations to previous knowledge about an agent's goals and plans. According to Schank (Schank, 1986) this form of understanding is closely related to two other cognitive processes:

• Explanation. The actions of others can be explained by understanding how they fit into a broader plan. "Saying that an action is a step on a coherent plan towards a goal, explains that action." (p. 70).

• Learning. Learning is usually defined as adaptation to a new situation, but for Schank it also means "finding or creating a new structure that will render a phenomenon understandable." (p. 78).

In this line, we use a set of patterns to understand and explain the behavior of an agent. Our approach is to interpret a coherent behavior in terms of schemata or frames, with a double presupposition concerning the observed agent:

• Intentionality. The agent has some purpose. This excludes inexplicable behavior.

• Rationality. The agent has the faculty of pursuing a goal by setting him/herself some subgoals. This excludes random behavior.

These assumptions allow us to explain and make predictions about the future behavior of the agent on the basis of previous knowledge. Previous knowledge consists of a library of prototypical plans indicating what a standard (ideally rational) agent should do in different situations to obtain certain goals.

Structure and Organization of XPlans

XPlans are inspired in part by Schank's Explanation Patterns. However, they present some substantial differences, since the context we are working in is entirely different from Schank's. We have to consider an evolving situation in which massive, uncertain and lacunary input corresponding to events arrives in an uncontrollable flow. Therefore, we need a more elaborate domain knowledge in order to give a logical and understandable interpretation to raw data that do not have a meaning by themselves. Additionally, we consider two points of view: (a) that of a standard agent, who is supposed to behave in a rational way, i.e., planning sequences of actions in order to attain some goals--planning knowledge; and (b) that of an observer who tries to understand what a real agent is doing by comparing the information s/he gets to his/her knowledge about what a standard agent should do in those circumstances--recognition knowledge. We thus need two types of semantically different rules: planning rules and recognition rules, which are based on the planning ones. Both types of rules are integrated in complex structures--schemata--including, among other information, the rule application context. Recognition knowledge is highly contextual, since one action can respond to different causes in various contexts, and hence must be given different interpretations. For example, excessive speed would be associated with different interpretations in the context of a plan for overtaking a car or in the context of a plan for approaching a red light. Among other information, XPlans include the following elements:

• Parameters: List and description of the variables used, including confidence value.

• Preconditions: The basic facts that must hold for the plan to be considered. When they hold, the plan is said to be active and its recognition algorithm is applied.

• Planning algorithm (PA): Description of the behavior of a standard (ideal) driver in the situation described by the XPlan. It consists of the sequence of phases that the agent should follow to accomplish the plan. Every phase has two different types of associated rules: activation rules, which call another plan (and optionally deactivate the plan) when preconditions do not hold anymore, and behavior rules, which result in the modification by the agent of the value of one or more parameters.

• Recognition rules (RR): They control the modification of the confidence value of the plan, and assign an interpretation to the observed behavior, according to behavior rules in the PA.

• Postconditions: They describe sufficient conditions--elements of the world description after completion of the plan--for deactivating the plan, both in the case the plan has succeeded and in the case it has failed².

• Motivations: They represent the goal pursued by the agent when adopting a specific strategy rather than another to accomplish a task.

² Failure conditions are considered because something external to the agent may happen that prevents it from accomplishing the plan (e.g., car2 suddenly stopped and car1 collides into car2). This is because in this domain the situation changes not only as a consequence of the agent's behavior, but also independently of it.
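The elements listed above can be pictured as the slots of a frame. The following is a minimal sketch under stated assumptions: the class layout, the field names, the initial confidence of 0.5 and the example plan with its thresholds are all hypothetical, and the planning algorithm and recognition rules are reduced to a list of phase names for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

State = Dict[str, object]  # parameter name -> current value (hypothetical encoding)


@dataclass
class XPlan:
    """One prototypical plan of the library, reduced to a few slots."""
    name: str
    preconditions: Callable[[State], bool]    # must hold for the plan to be active
    postconditions: Callable[[State], bool]   # sufficient to deactivate the plan
    motivation: str = ""                      # goal behind the chosen strategy
    phases: List[str] = field(default_factory=list)  # stand-in for the planning algorithm
    confidence: float = 0.5                   # assumed initial value
    active: bool = False

    def try_activate(self, state):
        """Activate the plan if it is inactive and its preconditions hold."""
        if not self.active and self.preconditions(state):
            self.active = True
        return self.active


# Illustrative plan; the 100 m activation distance is an invented value.
approach_red = XPlan(
    name="approach red light",
    preconditions=lambda s: s["light"] == "red" and s["distance_to_light"] < 100.0,
    postconditions=lambda s: s["distance_to_light"] <= 0.0,
    motivation="saving gas",
    phases=["slow down", "stop"],
)
state = {"light": "red", "distance_to_light": 60.0, "speed": 12.0}
activated = approach_red.try_activate(state)
```

Keeping preconditions and postconditions as predicates over the current parameter values mirrors the text: a plan becomes active when its preconditions hold and is deactivated when its postconditions are satisfied, whether it succeeded or failed.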

XPlans are organized in the form of a hierarchy (Figure 2) which constitutes a library of prototypical cases.

Figure 2: A subpart of the hierarchy of XPlans relative to driving.

There are two main types of links in this taxonomy. The first type of link (dark arrows) expresses the specialization of the context (preconditions) in which the XPlan takes place. The XPlan that this type of link gives rise to corresponds to a task that the agent must perform. The other type of link (gray arrows) expresses that the child XPlan is a consequence of a choice of the driver--a prototypical strategy chosen to perform a task according to a certain motivation.
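A hierarchy with two kinds of links can be sketched as a tree whose edges are labeled. The node names below are loosely based on the driving example, and the `Link`, `Node` and `describe` names, as well as the root node "drive", are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class Link(Enum):
    SPECIALIZATION = "specialization"  # dark arrows: narrower context, a task to perform
    STRATEGY = "strategy"              # gray arrows: a choice made by the driver


@dataclass
class Node:
    name: str
    children: List[Tuple[Link, "Node"]] = field(default_factory=list)

    def add(self, link, child):
        """Attach a child XPlan through the given kind of link and return it."""
        self.children.append((link, child))
        return child


def describe(node, depth=0, link=None):
    """Depth-first listing of the hierarchy, one line per XPlan."""
    label = f" [{link.value}]" if link else ""
    lines = ["  " * depth + node.name + label]
    for child_link, child in node.children:
        lines.extend(describe(child, depth + 1, child_link))
    return lines


root = Node("drive")  # hypothetical root node
red = root.add(Link.SPECIALIZATION, Node("approach red light"))
red.add(Link.STRATEGY, Node("slow down before red light"))
listing = describe(root)
```

Labeling the edges rather than the nodes keeps the same XPlan structure usable at both levels: a task reached by narrowing the context, and a strategy reached by a choice that a motivation explains.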

The Recognition Process

The plan recognition process consists basically of the retrieval of cases (prototypical plans) from the XPlan hierarchy in order to interpret and explain the behavior of the agent from the low-level data feeding the system. The uncertainty inherent to an uncontrolled flow of input and the presence of lacunary data make the retrieval of cases difficult. This led us to develop an algorithm for partial and progressive matching of the target case onto some of the source cases stored in the library. This matching amounts in practice to a credit assignment mechanism, included in a recognition algorithm associated with each XPlan.

In order to recognize the plan that the agent is applying, we try to follow his/her steps by producing interpretative hypotheses, as specific as data allow, and prudently confirming or disqualifying them as new data arrive. The mechanism we propose is somehow comparable to spreading activation: plans activate one another down the hierarchical structure of the plan library, or across the hierarchy. Several concurrent hypotheses can be plausible interpretations of the agent's behavior. This means that multiple plans can be simultaneously active. Plans can be activated in two ways: (a) Forward activation of the most specific set of preconditions. If a plan is active, it checks the preconditions of its subtree. If the preconditions of a plan in the subtree hold, it is activated; (b) Activation of a plan by another through activation rules. They can be deactivated in four ways: (a) If their postconditions are satisfied; (b) When calling another, a plan may deactivate itself if it becomes useless; (c) Below a certain threshold of the confidence value (0.1), a plan is automatically deactivated. A low confidence value can be reached through application of recognition rules with a negative effect on the confidence--reflecting discrepancy between the driver's course of action and the standard behavior--or when for several events no recognition rule will apply (attrition mechanism); (d) When a plan has been successfully completed, its concurrent plans are deactivated.

The general loop for the processing of an event is as follows: Initially, only the root node is active. The postconditions of active plans are examined first; the plans whose postconditions hold are deactivated, as well as their concurrent plans. Then a depth-first search on the preconditions of children plans is started. If the preconditions of a plan hold, it is activated and the parent plan is deactivated. At this point, a recognition algorithm is applied to every single active plan. If the recognition rules of a plan cannot be applied, its confidence value is slightly decreased. If the confidence value of a plan is smaller than a certain threshold (fixed to 0.1), that plan is automatically deactivated (its confidence value is forced down to 0).

The recognition algorithm applied on each plan is the following. For every new event in the input, the activation rules are checked and triggered if applicable; other plans are activated as specified in those rules, and the plan itself can be deactivated. If the plan is still active, for each applicable behavior rule, the ideal value of the parameter used as a reference is calculated and compared to the measured actual value. Then, based on their difference, the confidence value of the plan is adjusted--it is increased if the difference is small or null, decreased otherwise.

Figure 3 shows the evolution of the confidence values of three plans: at the beginning, the three of them could be plausible hypotheses to interpret the driver's behavior, but only one of them will be successfully completed and hence confirmed.

Figure 3: Evolution of the confidence values of several plans. [Plot of the confidence value against the succession of "events" (input information) over time; curve annotations: successfully completed; concurrent to successful plan; dropped under a minimum threshold: abandoned.]

When all the plans in a certain branch have been deactivated, the root node is made active again. This corresponds to the case where a sequence has come to an end, e.g., a car has driven past the traffic lights, and a new situation has to be considered.
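The confidence adjustment at the heart of the recognition process can be sketched as follows. Only the deactivation threshold of 0.1 comes from the text; the step sizes, the tolerance, the initial confidence, the plan names and the behavior rules are assumptions chosen for illustration, and the activation and postcondition stages of the loop are left out.

```python
THRESHOLD = 0.1   # deactivation threshold given in the text
STEP = 0.15       # size of one confidence adjustment (assumed)
ATTRITION = 0.02  # slight decrease when no rule applies (assumed)
TOLERANCE = 1.0   # largest difference still counted as "small", in m/s (assumed)


class Plan:
    def __init__(self, name, ideal_speed, confidence=0.5):
        self.name = name
        self.ideal_speed = ideal_speed  # behavior rule: event -> ideal value, or None
        self.confidence = confidence
        self.active = True

    def update(self, event):
        """Adjust the confidence of an active plan for one incoming event."""
        ideal = self.ideal_speed(event)
        if ideal is None:                          # no recognition rule applies
            self.confidence -= ATTRITION
        elif abs(event["speed"] - ideal) <= TOLERANCE:
            self.confidence = min(1.0, self.confidence + STEP)
        else:                                      # observed behavior deviates
            self.confidence -= STEP
        if self.confidence < THRESHOLD:            # automatic deactivation
            self.confidence = 0.0
            self.active = False


def process(plans, events):
    """Feed every event to every plan that is still active."""
    for event in events:
        for plan in plans:
            if plan.active:
                plan.update(event)
    return {p.name: round(p.confidence, 2) for p in plans}


plans = [
    Plan("stop at red light", lambda e: e["distance"] / 5.0),  # slow down linearly
    Plan("pass the light", lambda e: 14.0),                    # keep cruising speed
    Plan("overtake", lambda e: None),                          # no rule ever applies
]
events = [
    {"distance": 60.0, "speed": 12.0},
    {"distance": 40.0, "speed": 8.0},
    {"distance": 20.0, "speed": 4.0},
    {"distance": 5.0, "speed": 1.0},
]
result = process(plans, events)
```

With this input the first plan is progressively confirmed, the second falls under the threshold after three events and is abandoned, and the third slowly loses confidence through attrition, which is the qualitative picture described for the three plans above.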

Providing Advice for Decision Support

Explanations provided to the user play an essential role in our system (Cañamero et al., 1992), since it is intended to help a decision maker in critical domains. Our user has heavy responsibilities and s/he is held personally responsible for his/her decisions; therefore, explanations must be provided to him/her in his/her own language. Indeed, we can define the overall objectives of the system as: (1) relating the observed behavior described in terms of low-level data to high-level structures that describe the tactics or strategy applied by a rational agent in a given situation; (2) interpreting this behavior in terms of the agent's motivations; and (3) providing satisfactory explanations to user's questions.

Advice is given as a causal interpretation--explanation--from the observer's point of view, of low-level input data in terms of the agent's plausible plan, strategy, and motivation in a specific situation. A causal account of the agent's behavior seems to be the right level of interpretation in our case (Delannoy et al., 1992), given that we must deal with intentional systems (Dennett, 1978; Seek 1990)--agents that have desires and motivations, and act accordingly. In addition, we consider these agents "at the knowledge level". This interpretation--and more generally, the explanations provided by the system--is the result of a trade-off among three factors: (1) the constraints imposed by the robust approach to plan recognition, (2) the purpose of the recognition task--decision support in critical domains--and (3) the user's needs for information. Three main types of needs--and hence of explanations--are distinguished:

1. A reliable source of information. The interpretation provided is aimed at understanding a situation in the world by relating it to some predefined high-level concept. This explanation is dynamically constructed during the problem solving process.

2. A means to test the user's own hypotheses. They are considered and, if plausible, added to the list of possible explanations.

3. Justification of different elements of the interpretation, to ensure that the system's reasoning and/or conclusion can be trusted.

Only explanations of type 1 and 2 take part in the plan recognition problem-solving process. Explanations of type 3 are considered only upon user's request. They are constructed after plan recognition problem solving has taken place, and their generation constitutes a complex problem-solving process itself. They require additional knowledge that is not present in the recognition system, for the sake of efficiency. A separate module containing the knowledge that is not necessary for the recognition process but useful for explanation is then responsible for providing them. In this way, the performance of the recognition system is not affected.

Conclusion

This paper describes a way to perform plan recognition in a dynamic domain from low-level data. Plausible plans reflecting the standard behavior of a rational agent in a given situation are used to interpret the actual agent's behavior. Monitoring the difference between both behaviors makes it possible to progressively confirm or reject the different plausible hypotheses.

We address the problem of plan recognition by tackling several real-world characteristics that are not well accommodated by current techniques, such as low-level, noisy, and lacunary data, and a dynamic environment where changes occur not only as a consequence of the agent's behavior. From input consisting of parameter values, which give a partial description of the current situation, the system must produce high-level output.

The recognition system we propose is based on a library of prototypical cases or plans structured as frames. It uses a mechanism comparable to spreading activation through which plans activate one another down the hierarchical structure of the plan library, or across the hierarchy. In other words, we try to follow in the steps of the agent by producing interpretative hypotheses, as specific as data allow, and prudently confirming or disqualifying them.

A CLOS prototype has been developed. Its graphical interface allows the users to select the input and the analysis mode (automatic or stepwise), to display a bar chart of current confidence values of active plans and a historic curve of the confidence values, and to select several types of explanations.

In addition to improving explanations, several interesting problems can be the object of future work. Among others, these include a better treatment of temporal relations, and a deeper study of the correspondence between the planning algorithm (domain and strategic knowledge) and the recognition rules. In this sense, a knowledge-level model of the plan recognition task has been proposed (Cañamero, 1994), which aims at achieving a better understanding of the rationale behind this problem-solving behavior, and offers a guide for the acquisition of plan recognition knowledge.

Acknowledgments. This research is partially funded by
DRET under contract 91-358.

References

Allen, J.F., Kautz, H.A., Pelavin, R.N., and Tenenberg,
J.D. 1991. Reasoning about Plans, Morgan Kaufmann.

Barès, M. 1989. Aide à la décision dans les systèmes
informatisés de commandement: rôle de l’information
symbolique. DRET Report, July 1989, Paris.

Cañamero, D. 1994. Modeling Plan Recognition for
Decision Support. In Proceedings of the 8th European
Workshop on Knowledge Acquisition (EKAW’94),
Hoegaarden, Belgium, September 26-29. Springer-Verlag,
LNAI series. Forthcoming.

Cañamero, D., Delannoy, J.-F., and Kodratoff, Y. 1992.
Building Explanations in a Plan Recognition System for
Decision Support. Proceedings of the ECAI Workshop
on Improving the Use of Knowledge-Based Systems
with Explanations, Vienna, Austria, August 1992;
Technical Report LAFORIA 92/21, Université Paris VI,
France, pp. 35-45.

Charniak, E. and Goldman, R.P. 1991. A Probabilistic
Model of Plan Recognition. Proc. Ninth AAAI, pp. 160-165.

Charniak, E. and McDermott, D. 1985. Introduction to
Artificial Intelligence, Addison-Wesley.

Cohen, P.R., Perrault, C.R., and Allen, J.F. 1981. Beyond
question answering. In W. Lehnert and M. Ringle (eds.),
Strategies for Natural Language Understanding,
Lawrence Erlbaum, pp. 245-274.

Delannoy, J.-F., Cañamero, D., and Kodratoff, Y. 1992.
Causal Interpretation from Events in a Robust Plan
Recognition System for Decision Support. Proc. Fifth
International Symposium on Knowledge Engineering,
pp. 179-189, Sevilla, Spain, October 5-9.

Dennett, D.C. 1978. Brainstorms: Philosophical Essays on
Mind and Psychology, Bradford Books.

Kautz, H.A. 1991. A Formal Theory of Plan Recognition
and its Implementation. In Reasoning about Plans, eds.
J.F. Allen, H.A. Kautz, and J.D. Tenenberg, pp. 69-125,
Morgan Kaufmann.

Kodratoff, Y., Rouveirol, C., Tecuci, G., and Duval, B.
1990. Symbolic approaches to uncertainty. In Intelligent
Systems: State of the Art and Future Directions, eds.
Z.W. Ras and M. Zemankova, pp. 226-255, Ellis
Horwood Ltd.

Lange, T.E. and Wharton, C.M. 1993. Dynamic Memories:
Analysis of an Integrated Comprehension and Episodic
Memory Retrieval Model. Proc. 13th International Joint
Conference on Artificial Intelligence (IJCAI), pp. 208-213,
Chambéry, France, August 28-September 3,
Morgan Kaufmann.

Laskowski, S.J. and Hofman, E.J. 1987. Script-based
reasoning for Situation Monitoring. Proc. AAAI-87, pp.
819-823, Morgan Kaufmann.

Mooney, R.J. 1990a. A General Explanation-Based
Learning Mechanism and its Application to Narrative
Understanding, Morgan Kaufmann.

Mooney, R.J. 1990b. Learning Plan Schemata From
Observation: Explanation-Based Learning for Plan
Recognition. Cognitive Science, 14: 483-509.

Newell, A. 1982. The Knowledge Level. Artificial
Intelligence, 18: 87-127.

Pelavin, R.N. 1991. Planning With Simultaneous Actions
and External Events. In Reasoning about Plans, eds. J.F.
Allen, H.A. Kautz, and J.D. Tenenberg, pp. 127-211,
Morgan Kaufmann.

Quast, K.J. 1993. Plan Recognition for Context-Sensitive
Help. Proc. ACM Conference on Intelligent User
Interfaces, pp. 89-96.

Schank, R.C. 1986. Explanation Patterns: Understanding
Mechanically and Creatively, Lawrence Erlbaum
Associates.

Schank, R.C. and Kass, A. 1990. Explanations, Machine
Learning, and Creativity. In Machine Learning, An
Artificial Intelligence Approach, Volume III, eds.
Y. Kodratoff, R.S. Michalski, pp. 31-48, Morgan
Kaufmann.

Schank, R.C. and Riesbeck, C. (eds.) 1991. Inside
Case-Based Reasoning, Lawrence Erlbaum Associates.

Seel, N. 1990. From Here to Agent Theory. AISBQ 72,
Spring: 15-25.

van Beek, P. and Cohen, R. 1991. Resolving plan
ambiguity for cooperative response generation.
Proc. Tenth AAAI, pp. 938-944.

van de Velde, W. 1993. Issues in Knowledge-Level
Modeling. In J.-M. David, J.-P. Krivine, and R.
Simmons (eds.), Second Generation Expert Systems.
Springer-Verlag, pp. 211-231.

Vilain, M. 1990. Getting serious about parsing plans: A
grammatical analysis of plan recognition. Proc. Ninth
AAAI, pp. 190-197, Boston, MA.

Wilensky, R.W. 1981. Meta-Planning: Representing and
Using Knowledge about Planning in Problem-Solving
and Natural Language Understanding. Cognitive
Science, 5: 197-233.

Wilensky, R.W. 1983. Planning and Understanding: A
Computational Approach to Human Reasoning,
Addison-Wesley.