From: AAAI Technical Report FS-93-04. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved.

Extracting a Domain Theory from Natural Language to

Construct a Knowledge Base for Visual Recognition

LawrenceChachereand Thierry Pun

ComputerScience Center, University of Geneva,

24 rue General Dufour, CH-1211Geneva4, Switzerland

e-mail: chachere@cui.unige.ch

Introduction

Ascomputervision research shifts fromsmaller applications to more general object recognition problems

whichinvolveverylarge sets of object classes, there is

an increasing amountof interest in feature indexing

schemes.Feature indexing raises the important issue

of howto construct a knowledgebase of associations

betweenkey discriminative features and their respective object classes. It is clear that someamountof automationis necessaryin order to build such knowledge

bases for verylarge recognitionsystems.

Visual recognition is a very difficult domainfor machine learning. Concept formation has two components: 1. finding appropriate attributes whichgive a

well-behavedclass membership

function, and 2. given

attribute vectors, finding the class membership

function. Althoughmost learning has been concernedwith

the latter problem,the first is at least as importantfor

manydomains,and probably more difficult [Rendell,

1990]. Whilethe idea of using inductive learning to

generate object class descriptions for a computervision systemis not new, e.g., [Shepherd1983; Lehrer

et. al, 1987; Cromwell

and Kak1991], attribute selection has tendedto be either ad hoc or application oriented, since there is no universally established set of

appropriatevisual attributes.

Anadditional difficulty whenconsideringvision problemsis that manyof the existing similarity-based inductive learning systemsare not directly applicable if

one takes into account the large numbersof training

examplesthey typically rely on. Thereexists biological ~videncethat it is possibleto formulategoodprototypes with very fewa’aining examples,e.g., [Cerella,

1979].Atopic that is currentlyreceivinga lot of attention in machinelearning is the integration of domain

theory within existing empiricaltechniques[Lebowitz,

1990]. Domainknowledge reduces the burden imposedon statistics for ensuringcorreclnessof output.

This paper describes a proposedapproachfor learning

characteristic features of objects whichis beingdeveloped for use by the GenevaVision System[Pun 1990].

The following section introduces the use of natural

languageas a heuristic knowledge

source of visual information[Cbachere,1992]. The next two sections di-

vide the concept formation problem in terms of

defining appropriateattributes (extraction of natural

languagedefinedfeatures) and inductivetraining. The

final section showssomeresults that have thus far

been obtained on an application domainof leaf images.

Natural Language as a Knowledge Acquisition Heuristic

Oursystemconsidersa feature significant if it is encodedas an adjective in natural language.Thusan attribute is deemedappropriateif it can take the value

of one or morenatural languagefeatures. There are

several advantagesof this feature selection criterion.

First, natural languageis an extremelyrich and reliable source of perceptual information.Secondly,concepts defined by natural language are easy to

understandby humans,e.g., it is mucheasier for a

person to determinethe correcmessof output fromthe

hue attribute than an ad hoc attribute suchas an ’excess red’ ratio, whichis commonly

derived from the

red/green/bluerepresentation of color images.Understandability of parametersis helpful for mostcomplex

AI problemswhichare difficult to theoretically verify.

A third advantageis that verbal feature values can

serve as heuristics during the induction process. For

example,yellow can be considereda significant numericalinterval withinthe hueattribute.

Perhaps the maininconveniencewith a natural languagecriterion is that lowlevel vision methodsfor

deriving manyof these features are not readily available. Vision methodshave generally been customized

for easily derivable numericfeatures, e.g., compactness, elongatedness,and various other aspect ratios,

withouta deepinvestigationof the utility of the various features for higher level object classification

tasks. Naturallanguagefeatures tend to be symbolic;

thus, the inevitable necessityof translating signals to

abstract higher level representations is immediately

put to question.

Extraction

of Natural Language Features

A library of symbolic features was constructed

through a systematic search for adjectives, by means

85

of standard references (computerizedWebster’s English dictionary and Roget’s thesauruses). A vocabulary of 102 common

adjectives describing shape and

color has beenobtained, for whichfeature extraction

techniquesare being developed.The shape adjectives

are either global(e.g., triangular,oval, cordate),contour-descriptive(e.g., smooth,dentate, jagged),or localized (e.g., pointed, rounded, or notched). Some

shape features can be further characterized by more

specific adjectives, e.g., dull or sharp-pointed.Color

adjectives consist of a basic set and subcolors of

members

of the basic seL Thebasic set is definedby a

recent study of 98 different languages [Berlin and

Kay,1991] whichsuggesteda linguistic universality

and evolution towards 11 basic color terms (white,

black, red, green, yellow, blue, brown,gray, orange,

purple, and pink). Textureand spatial adjectives are

also important,but are moredifficult and will be considered in future work.

The imagesignal to symboltransition is a knowledge

engineeringtask whichvaries fromfeature to feature.

Whilea few of these features have absolute definitions, the majority do not. The approachwe use for

extracting shape features is basedon contour analysis. Contourchains are created using a Sobeledgedetector. Global shapedescriptors are then derived by

template matching.To determinewhetheran object is

triangular, an ideal geometrictriangle is superimposed onto the region enclosedby the contour chain

outlining the object. If the ratio of overlapbetween

the twoareas is extremelyhigh, triangular is assigned

as the global shape. A threshold for ratio of overlap

255

white

will alwaysbe somewhat

subjective, but is systematically determined by measurementstaken on sample

triangular-shaped images. Regardinglocalized shape

descriptions,it is difficult to determinethe significant

locations on an object where local measurements

should be obtainedwithout the use of heuristics. One

possibility is to use simpleverbal heuristics. For example, our implementationwill routinely examinethe

baseandtop of an object, or to the tip of a protrusion

of an object.

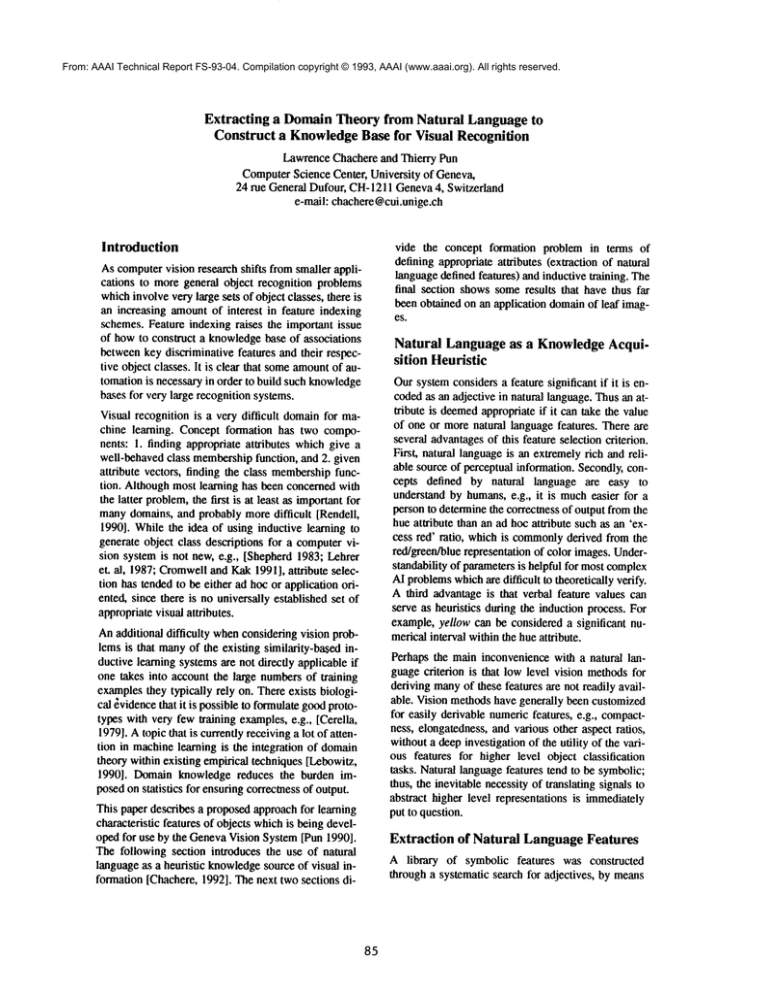

For the purpose of color characterization, a color

nameknowledgebase was created by processing imagesof labelled color samplesand computingtheir respectivehue, lightness, and saturation values fromthe

RGBimage signals. The examplesincluded a prototype sampleof each basic color, borderline samples

(e.g., borderlinereds: orangered andviolet red), and

proper sub-colors (e.g., red shades: maroon,scarlet,

etc.). It is importantto considerthat lightness greatly

influences the definitions of basic colors whichare

generallythoughtof as hues, both linguistically [Bettin and Kay, 1991] and biologically (the BezoldBruckeEffect) [Wyszeckiand Stiles, 1982]. For example,the conceptof yellow implies a high lightness

value; a ’yellow’hue with a low lightness value will

be perceived either as brownor green. Since it is

probablynot possible to define perfect regular functions, in particular within the hue-lightnessplane for

chromaticcolors in basic set (Figure 1), assignment

a basic color to an unlabeledregion is accomplished

using a nearest neighborschemein the 3-dimensional

HLSspace.

pink

:

¯...........

e’::i

.............

oralagq.¯

o e.

..’¯¯

’"

....

¯.......

"%L-o/o/ /

W. ¯" -: //

red..’-....’/

..e

"?:r.-

~’"

b2...... grn~---.

....

ce ..........

" .....

¯ .........

~ blue

*

1.0

’o

purpl~

....

¯ ’ ........,O"

Ill

=

0

Y

O. ~ ~ .-

o

,.

0

black

..O ",’,

/ Ogl

2.0

3.0

4.0

5.0

6.0

hue H

2x(=0)

Figure 1: distribution of color sampleson hue-lightnessplane;gl, g2 are twosub-colors of green,

respectevelyParis green and olive green) g2 is an extrememember

of the green class.

86

Given HLScoordinates of a fairly homogeneously

coloredregion of an image,a color description is attached as follows. A nearest neighbor within the

knowledgebase of colors is determined using a

weighted Euclidean distance measurement.The regionRis assignedthe basic color of its nearest neighbor (Fig. 1, R---> gl ~ green), unless the nearest

neighboris an exa’emedata point (e.g. g2) within its

basic color class and the distance of the region’s HLS

to the class prototypeis larger than the distance betweenthat nearest neighborand the class prototype.

If such a case arises, the region is characterized as

’undefinedcolor’, indicating a gap in the knowledge

base. Sincecolor termsare definedsubjectively rather than functionally, it seemsinappropriate to have

the systemstatistically guess color labels in unchartered areas of the HLSspace.

In addition to the assignmentof a basic color, a region mayalso be modified with more detailed features.If the HLSof a region is extremelyclose to the

HLScoordinatesof its nearest neighbor,it will also

acquire the subcolor label of the neighbor. Various

adjectives whichmodifythe basic color description

(e.g., pale, dark,light, greenish)are attachedif applicable. For example,dark is appendedto a blue region

whichhas a relatively lowlightness value within the

blue class of blue colors. Standarddefinitions of subcolors help to define thresholds for these modifiers.

For example,navy and indigo ate dark shades of blue

by definition, and thus are the lightness values corresponding to these samples within the knowledge

base.

Training

Training in our systemis accomplishedusing images

of labelled objects. The label will include a basic

class name,and optionalhierarchical links to broader

classes. It is assumedthat all examplesare labelled

withbasic level categories[Rosch,1978].It is possible that two randomclass membersof a very broad

category are likely to have morevisual differences

than similarities. Nevertheless,if higher level taxonomiccategories are supplied, the systemtries to formulate conceptsin the samemanneras for the basic

categories. This use of taxonomic knowledgecan

greatly help to economizethe learning procedure,

since it allows evidenceto be shared betweensibling

classes via inheritance froma parent category.

Both numericand symbolic data are supplied from

the feature extraction routines discussedin the previous section. Symbolicvalues alwayscorrespondto a

natural languageadjective. Numericinformationcannot be ignored,since it is possiblethat certain numeric ranges within an attribute maynot mapto any

natural language symbol. Whena new example is

87

supplied for a given class, all data is gatheredfrom

previous examples.Generalizedfeatures are created

by applyingbasic generalizationoperators are to each

attribute; interval closingfor numericvalues, and tree

climbing and disjunction for symbols[Michalski,

1983].

Thenext step after generalizationis to evalutate each

generalized feature. This is accomplishedby simple

measurements

of intraclass similarity and interclass

differences:

Similarity =

fraction of class samplesequal to symbol

rank of symbolin class

symbols

Differences = (cl * numberof completeclasses discriminated by feature) + (c2 / overall frequency

feature)

It is emphasized

that if the set of attributes definedin

the previoussection is adequate,the statistical task

neednot be complicated.The similarity function decreases significantly with each symboladdedto a disjunction, but does allow for exceptions. For example,

an observation of 50 diamonds,47 white, 1 yellow, 1

pink, and 1 blue, wouldyield --=> .94/1 + .02/2 + .02/

3 + .02/4 = .962, indicating that white is generally

characteristic of diamondsdespite the variety of different exceptions.Interclass discriminationis not accomplished by an exhaustive proof that a given

combinationof features will uniquelydistinguish a

concept, but rather a by measuringthe discriminative

powerand uniquenessof individual features, cl and

c2 in the aboveequation are weightedcoetficientss,

respectively corresponding to discrimination and

uniqueness.

Additionalevaluation factors include inheritance (a

feature is not characteristic of a basic class if it is

characteristic of a parent class) and wordcommonality biases derived from wordcorpuses (higher weight

is given to features whichare morecommonly

used in

natural language). For simplicity of discussion, the

important topic of spatial relationships, such as

above(Part-A, Part-B), has been avoided. However,

we are currently investigating natural languageheuristics to help reduce someof the complexityof this

problem. Regular or common

patterns can be represented as unarypredicates, rather than binary or higher order predicates, in terms of adjectives such as

palmateor 4-legged.

Aset of characteristicfeaturesfor an objectclass is finally producedby ranking all the evaluatedfeatures.

The highest ranking features are chosenas characteristics, whilefeatures withlowevaluationsare discarded. Since the purpose of the output is for indexing

rather than a completerecognition,it is only important

that the selected features are goodkeys for recognition, rather than an exhaustiveconceptdescription of

the object class. It is importantto note that the independenceof these output characteristic features makes

themsuitable to a variety of recognition procedures

(e.g., decision trees, parallel discrimination procedures).

An Example

For the purposeof testing the learning system,a database was created consisting of 275 images of noncompoundleaves from Europeanbroadleaf trees. The

data set consists of 55 differenct classes, 5 examples

of eachclass. Thesize and distribution of this data set

was established for two purposes: 1. To demonstrate

the ability to formulate goodconcept descriptions

fromsmall sets of training examples;and 2. To dem-

onstrate the ability to deal with discriminationproblems involving large numbersof different classes.

Eachleaf imagein this databaseis attached with four

labels, a species name(the basic category), a genus

name(e.g., maples,oaks), a family name(e.g., beech

family, whichincludes oaks, beeches, chesmuts)and

the inclusivelabel "leaf".

Table1 displays initial results by based upontwoof

the feature functions, for the purposeof demonstrate

discriminationof three selected leaf classes fromthe

database(Figure 2). Theapexof each leaf waslocated

by finding the axis of symmetry

and assumingthat the

leaves were oriented with the apexat the top of the

image.Theapex angle feature is computedby measuring the angle of the two edges extending from the

apexpoint. Leaveshavetwosignificant parts, a blade

and a petiole and therefore a color wasdeterminedfor

both subregions.

to

black alder

apricot

lemon

Figure 2: exampleimagesselected from3 of the 55 leaf classes.

Leaf

Apexangle

Apex shape

description

HLSvalues of blade and

petiole

Colordescriptions

lemonI

1.2383

pointed

(2.34,126,63), (2.13,206,51)

green / yellow

lemon2

1.25

pointed

(2.31,119,63),(2.11,199,50)

green/yellow

lemon 3

1.0264

pointed

(2.38,155,69), (2.12,174,51)

yellowishgreen / yellow

apricot 1

1.5611

pointed

(1.75,88,45), (1.12,175,51)

dk brwnishgreen/darkpink

apricot 2

1.4745

pointed

(1.88,92,46), (0.98,100,59)

dark brownishgreen / red

apricot 3

1.419

pointed

(1.72,86,46), (0.99,123,58)

dark brownishgreen / red

blackalder 1

3.8281

notched

(2.32,1.05,60), (2.25,165,54)

green / yellowishgreen

black alder 2

3.4735

noshed

(2.09,97,55),(2.25,166,50)

green / yellowishgreen

black alder 3

3.5457

notched

(2.32,110,59), (2.32,163,48)

green / yellowishgreen

Table1: extracted features. Apexangle ranges from0 to 2g, values are "pointed"if < x, "notched"if >

n, "straight" if veryclose to ~. HueHranges0 to 2r¢ wherepurered---m/3,puregreen--n,pureblue---5x/3.

LightnessL ranges from0 to 255where0=blackand 255=white.Saturation S is a percentage.

88

Basedon the data in Table 1, the training procedure

makesthe followingcharacteristic features: apricot -->

dark brownishgreen blade, pointed apex, red or dark

pink petiole; black alder --> notchedapex, yellowish

green petiole; lemon~ yellow petiole, pointed apex;

leaf --~ green blade. Sincethe three examplesare from

different families, no descriptions are formulatedat

that level. Withmoreexamplesof different leaf classes, petiole color=yellowishgreen will becomea default leaf characteristics rather than a black alder

characteristic. Thesystemwouldalso eventually learn

that red/darkpinkis a veryrare petiole color and thus

it should emergewith a higher ranking within the set

of apricotleaf characteristics.

ries in the Pigeon. Journal of ExperimentalPsychology: Human

Perception and Performance,5.

Chachere, L.E (1992). ReasearchSummary.In workshopnotes, ConstrainingLearningwith Prior Knowledge, AAAI-92,78-80.

Cromwell, R.L. and Kak, A.C. (1991). Automatic

generationof object class descriptions using symbolic learning techniquesIn Proc. of the 9th National

Conf.on Artificial Intelligence, 710-717.

Lebowitz,M.(1990). The Utility of Similarity-Based

Learningin a WorldNeedingExplanation. In Michalski, R.S., and Kodratoff,Y. (eds.), MachineLearning:

AnArtificial Intelligence Approach,volume3, chapter 15, MorganKaufmann.

Lebrer, N.B., Reynolds,G. and Griffith, J. (1987).

Initial HypothesisFormationin ImageUnderstanding

Using an Automatically Generated KnowledgeBase.

DARPA,

Image Understanding Workshop,520-537.

Theresults for these examplesmatchthe classification

descriptions are close to whatone wouldfind in a typical field guideto trees. Thebladecolor of the apricot

leaves is an exception.TheHLScoordinates of those

examplesactually mapinto an undefinedregion within the color knowledge

base slightly closer to a green

data point than the next nearest neighbor, a brown

point. Furthermoreit has beendeterminedthat the numerical values are not quite correct. Thediscrepancy

wastraced to a loss of accuracyin the imageacquisition process, using a PerfectScanTM Mikrotek600Z

scanner. Higherquality scanners and camerasfor imageacquisitionare likely to producebetter results.

Lenat, D.B. and Guha, R.V. (1989). Building Large

Knowledge-Based

Systems: Representation and Inference in the CycProject. Addison-Wesley.

Michalski, R.S. (1983). A Theoryand Methodology

of InductiveLearning.In Michalski,R.S., Carbonell,

J.G., and Mitchell, T.M. (eds.), MachineLearning:

AnArtificial Intelligence Approach,chapter 4, Morgan Kaufmann.

Pun, T. (1990). The GenevaVision System:Modules,

integration and primal access. TechnicalReport no.

90.06, ComputingScience Center, University of

Geneva.

Rendell, L. (1990). Commentary

to Lebowitz, M.,

The Utility of Similarity-BasedLearningin a World

NeedingExplanation.In Michalski, R.S. and Kodratoff, Y.(eds.), op.cit.

Conclusion

Theultimategoal in this research is to formulategood

visual conceptsabout object classes in an efficient

manner,without relying uponlarge numbersof training examples.There are still manynatural language

features which remain to be developed. The task of

extracting such features mayseemarduous. However,

webelieve that there is no shortcut for difficult AI

problems;perhaps the key element is to havelots of

knowledge[Lenat and Guha, 19891. The uncertain

rate of progress towardsa general visual recognition

system indicates that such an effort maywell prove

worthwhile.

Rosch, E. (1978). Principles of Categorization.

Rosch,E. and Lloyd, B.B. (eds.) Cognitionand Categorization, 28-39, LawrenceErlbaumAssociates.

Shepherd, B.A. (1983). Anappraisal of a decision

tree approachto imageclassification. In Proceedings

of IJCAI, 473-475.

Wyszecki,G. and Stiles, W.(1982). Color Science:

Concepts and Methods,Quantitative Data and Formulae. Wiley.

Acknowledgments

This research is supported by a Swiss National Research Fund grant, 20-26475.89. The authors would

like to express their deep gratitude to Catherine de

Garrini for her major role in the implementationof

feature extraction of shapeadjectives.

References

Berlin, B. and Kay, P. (1991). Basic Color Terms:

Their Universality and Evolution.University of California Press, Berkeley,CA.

Cerella, J. (1979). Visual Classes and NaturalCatego-

89