Studying the Role of Embodiment ...

advertisement

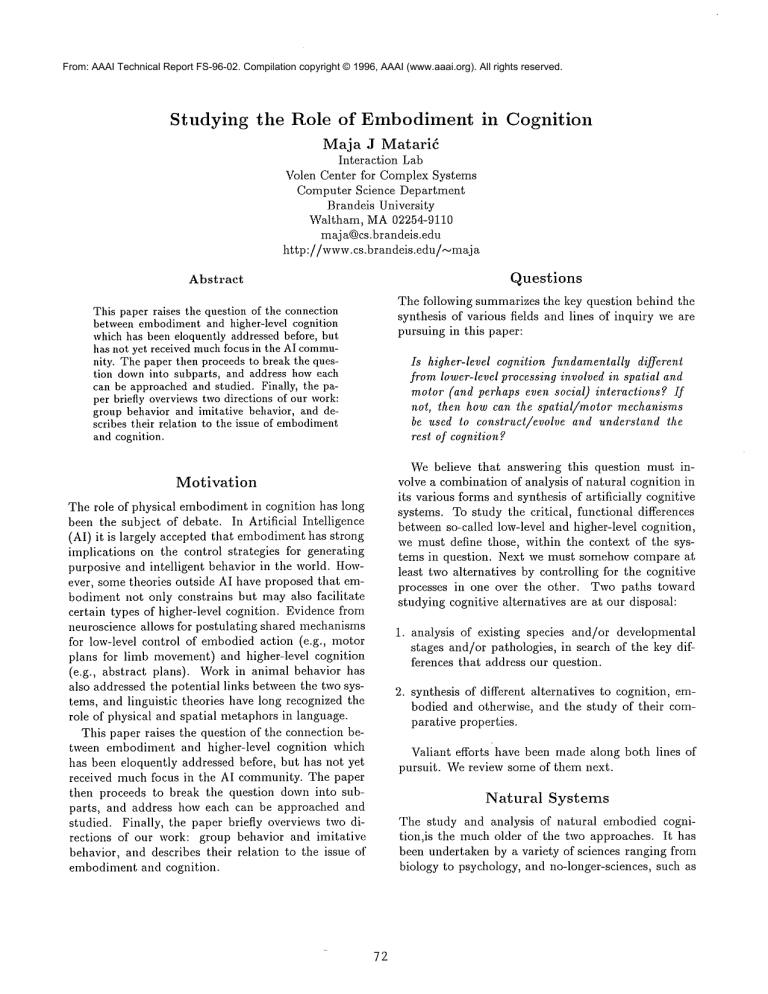

From: AAAI Technical Report FS-96-02. Compilation copyright © 1996, AAAI (www.aaai.org). All rights reserved.

Studying the Role of Embodiment in Cognition

Maja J Matarić
Interaction Lab
Volen Center for Complex Systems
Computer Science Department
Brandeis University
Waltham, MA 02254-9110
maja@cs.brandeis.edu
http://www.cs.brandeis.edu/~maja

Abstract

This paper raises the question of the connection between embodiment and higher-level cognition, which has been eloquently addressed before but has not yet received much focus in the AI community. The paper then breaks the question down into subparts and addresses how each can be approached and studied. Finally, the paper briefly overviews two directions of our work, group behavior and imitative behavior, and describes their relation to the issue of embodiment and cognition.

Motivation

The role of physical embodiment in cognition has long been the subject of debate. In Artificial Intelligence (AI) it is largely accepted that embodiment has strong implications for the control strategies that generate purposive and intelligent behavior in the world. However, some theories outside AI have proposed that embodiment not only constrains but may also facilitate certain types of higher-level cognition. Evidence from neuroscience allows for postulating shared mechanisms for low-level control of embodied action (e.g., motor plans for limb movement) and higher-level cognition (e.g., abstract plans). Work in animal behavior has also addressed the potential links between the two systems, and linguistic theories have long recognized the role of physical and spatial metaphors in language.

This paper raises the question of the connection between embodiment and higher-level cognition, which has been eloquently addressed before but has not yet received much focus in the AI community. The paper then breaks the question down into subparts and addresses how each can be approached and studied. Finally, the paper briefly overviews two directions of our work, group behavior and imitative behavior, and describes their relation to the issue of embodiment and cognition.

Questions

The following summarizes the key question behind the synthesis of various fields and lines of inquiry we are pursuing in this paper:

Is higher-level cognition fundamentally different from lower-level processing involved in spatial and motor (and perhaps even social) interactions? If not, then how can the spatial/motor mechanisms be used to construct/evolve and understand the rest of cognition?

We believe that answering this question must involve a combination of analysis of natural cognition in its various forms and synthesis of artificially cognitive systems. To study the critical, functional differences between so-called low-level and higher-level cognition, we must define both within the context of the systems in question. Next we must somehow compare at least two alternatives by controlling for the cognitive processes in one over the other. Two paths toward studying cognitive alternatives are at our disposal:

1. analysis of existing species and/or developmental stages and/or pathologies, in search of the key differences that address our question.

2. synthesis of different alternatives to cognition, embodied and otherwise, and the study of their comparative properties.

Valiant efforts have been made along both lines of pursuit. We review some of them next.

Natural Systems

The study and analysis of natural embodied cognition is the much older of the two approaches. It has been undertaken by a variety of sciences ranging from biology to psychology, and by no-longer-sciences such as phrenology (Gould 1981).
Volumes of data on animal behavior allow us to perform comparative studies across species, including comparisons of close evolutionary relatives such as humans and chimpanzees (Goodall 1971) to more distant cousins like humans and monkeys (Cheney & Seyfarth 1991, Cheney & Seyfarth 1990) to many-times-removed family members (Chase 1994, Chase & Rohwer 1987, McFarland 1987, Gould 1982). While these studies can tell us much about cognition, they cannot speak directly to the role of embodiment within a species. To address that, researchers have endeavored to remove or at least diminish the bodily capacities of a particular animal and observe the results. For obvious reasons, such studies are done on a small set of species, none of which are typically considered to be capable of so-called higher-level cognition. These studies largely address the mapping of neural functionality rather than cognitive/behavioral questions even remotely as abstract as the one we have posed.

In contrast to animal work, where structure can be ablated in a premeditated and controlled fashion but the subjects' cognitive capabilities are lacking, work on human pathology offers no such control in the choice of deficits, but provides more than sufficiently complex subjects. Much of the human data on highly cognitive deficits are, even today, anecdotal and sparse, but important strides have been made precisely in the direction of studying the mind-body connection (?).

Finally, human developmental research is an avenue that allows for comparative studies of various deficits and capacities related to the question of cognition. The gradual development and acquisition of both motor and cognitive skills in children provides perhaps the largest corpus of data for addressing our question.

Artificial Systems

As discussed above, two key problems with biological data are 1) their incompleteness, due to our inability to experiment with arbitrary systems, and 2) their abundance and disconnectedness.
The study of artificial systems allows us to deal with both of the problems at once by giving us free rein over what capabilities we include in the system and what biological properties we choose to model. Indeed, we have such unlimited freedom in what we study that we can be accused of irrelevance.

In contrast to the first approach, the study of natural systems, the second one is relatively new, and mired in all the methodological problems of a hopeful nascent science. Post-synthetic analysis, i.e., studying systems one has built, presents unlimited opportunities for bias, subjectivity, and unconscious cheating. As a "science of the artificial" (Simon 1969), the second pursuit should borrow from the first.

To focus on specific questions to be addressed with computational approaches, we can break down our initial inquiry about the role of embodiment into the following:

• Are the computational and representational structures necessary for motor control and spatial reasoning themselves sufficient for so-called higher-level (more abstract) cognition, or are fundamentally different computational capabilities necessary/present?

Those who would like to claim a positive answer to this question unfortunately have no solid evidence to support it, but neither do their opponents. Biology provides no straightforward evidence we are currently capable of understanding, although many eloquent arguments for and against the claim have been made (Dennett 1995). Until we are better able to analyze the biological evidence, a synthetic approach is the best first step in attempting to answer at least the question of how possible it is that higher-level reasoning can be a simple extension of lower-level structures. Some branches of AI have begun to address this problem, as discussed below.

• Is human higher-level cognition based on so-called lower-level metaphors and constructs including spatial, motor, and social ones? Where might human non-spatial/non-motor representations come from, and what might they look like?

The abundance of spatial and body-based metaphors in human linguistic expressions (Lakoff & Johnson 1980, Johnson 1987, Lakoff 1992) can be used as evidence for the body as the foundational system of reference for higher-level as well as lower-level reasoning. One might ask if any representations, other than the traditional symbolic ones used in AI and its spinoffs, employ encodings that are not fundamentally based on spatio-motor information (Jackendoff 1992). As above, the most productive means of addressing this question is through a synthetic approach, at least until much more is known about biological cognition.

• Do current methods for modeling embodied insect and animal behavior (including humanoid robots) automatically assume a negative answer to the questions above by separating control and cognition?

Partially due to AI's legacy of designer-imposed modularity, almost all of today's systems separate the low and high levels of reasoning, even if the latter are themselves none too high (Brooks & Stein 1994). The question of this separation has been a topic of hot dispute for the past decade in the robot/agent control community, where reactive, deliberative, hybrid, and behavior-based systems have all been proposed, demonstrated, and debated as alternatives (Matarić 1995, Matarić 1992a, Brooks 1991). Of those, behavior-based systems may present a possible alternative to this separation, but empirical demonstrations of several such systems, combining low and high-level cognition, are still lacking.

Meanwhile, the connectionist community has wholeheartedly embraced variations of neural-network models that present a monolithic alternative (McNaughton & Nadel 1990, Rumelhart & McClelland 1986). Historically not separated into modules, these systems present, to many, the most promising alternative.
However, they have yet to be scaled up to contain both low-level control and high-level cognition. Interestingly, many efforts in this direction have resulted in less monolithic, more hierarchical variations that begin to resemble more modular, AI-style systems. Thus, while parts of the connectionist community are moving from the monolithic to the hierarchical, parts of the AI community are moving from the hierarchical to the monolithic. Not surprisingly, various hybrid alternatives have been implemented, but again largely for specialized systems.

In Pursuit of Answers

Having considered different lines of pursuit toward answering the question of the role of embodiment, in this section we briefly describe the specific research directions we are focusing on.

Social Behavior and Cognition

One direction of our work pursues a research program for synthesizing and analyzing group behavior and learning in situated agents. The goal of the research is to understand the types of simple local interactions which produce complex and purposive group behaviors. We focus on a synthetic, bottom-up, behavior-based approach that can be used to structure and simplify the process of both designing and analyzing emergent group behaviors. Our approach utilizes a biologically-inspired notion of basis behaviors as a substrate for control and learning, both at the individual and the collective level, and for both the interactions within an individual agent's cognitive processes and between multiple agents in a social context.

We have tested the basis behavior concept by developing a basis behavior set for embodied, mobile agents interacting in complex domains. We also introduced methods for selecting, formally specifying, algorithmically implementing, and empirically evaluating behaviors from the basis set (Matarić 1992b, Matarić 1994a).

We have also extended the basis behavior idea to serve as a foundation for learning in the group domain. We first demonstrated that behaviors, coupled with their triggering conditions, constitute an effectively minimized learning space for multiple robots automatically discovering strategies for group foraging, through the use of a formulation of shaping in reinforcement learning (Matarić 1994c, Matarić 1996c). We then expanded the behavior set to include social behaviors, and demonstrated that the same approach to behavior representation can be successfully used to learn a non-greedy social policy that included yielding and information sharing (Matarić 1994b). Finally, we demonstrated the ideas on a tightly-coupled cooperative task in which a pair of robots had to learn to cooperate and communicate (Simsarian & Matarić 1995).

Our work with embodied agents has shown that the behaviors that served as a stable and effective basis for multi-agent interactions were firmly grounded in the immediate, local, physical interactions between the agents. The behaviors in the basis set included safe-wandering, aggregating, dispersing, following, and homing, all of which were spatial. Even the comparatively higher-level social behaviors, yielding and communicating, involved physical interaction. While it is not surprising that learning to yield involved physical interactions between the agents, it is perhaps more interesting that learning to communicate did as well. In order to learn to share information about the world, the agents needed repeated physical interactions with each other and with the objects in order for the difficult credit assignment problem to be resolved in the multi-agent domain (Matarić 1996b).

Complementary to our efforts with physically embodied multi-robot systems, we are also pursuing work with disembodied multi-agent systems consisting of abstract computational agents. For example, a 4,096-agent simulation was developed to study resource sharing between heterogeneous spatially distributed agents to determine under what conditions cooperation would emerge and when it would be the most efficient/stable strategy (Kitts 1996). More abstract information agents were also explored in a number of projects. In one, a US economic model was used as a testbed for exploring local and global multi-agent optimization methods. In another, a set of Fortune 500 companies was modeled to study the global effects of local trading patterns. The embodied and disembodied multi-agent efforts are being pursued in parallel in search of common principles underlying group dynamics. Comparisons between the two domains also allow us to study embodiment-specific questions.

Imitative Behavior and Cognition

Another active line of our research is into the mechanisms underlying imitation. As one of the most ubiquitous forms of learning in nature, imitation presents an important research problem in AI and machine learning, as well as in the behavioral and neural sciences.

As argued above, most adaptive systems involve both cognitive and subcognitive processes. The tension between what is typically referred to as low-level control (e.g., motor control, obstacle avoidance, navigation) and high-level cognition (e.g., planning) is ubiquitous in adaptive systems ranging from path-planning robots to information agents (Maes 1994). One of the key aspects of such systems is the abstraction of data/information between different modules. Fully reactive systems (Brooks 1986, Agre & Chapman 1987, Rosenschein & Kaelbling 1986), behavior-based systems (Matarić 1991, Matarić 1992c, Maes 1989) and hybrid systems (Firby 1987, Arkin 1989, Georgeff & Lansky 1987, Payton 1990, Connell 1991) have all been proposed as alternatives for solving the data abstraction problem in control. However, not much work has yet been done in scaling up such systems to problems that require complex enough control and challenging enough planning abilities to demand multiple interacting representational systems.

We are using the problem of learning by imitation to study the interaction between multiple data representations. Imitation involves the interaction of perception, memory, and motor control, subsystems which typically utilize very different representations, and which in this task must interact to produce and learn novel behavior patterns. Gaining insight into the mechanisms of imitation is compelling from the standpoint of AI and the behavioral sciences, since the propensity for it appears to be innate (McFarland 1985, McFarland 1987, Gould 1982) and the mechanism is phylogenetically old, found in some invertebrates (Fiorito & Scotto 1992), many birds (Moore 1992), aquatic mammals (Mitchell 1987), and finally primates and humans. In all cases, it is a faster and more efficient form of acquiring new behaviors than its traditional classical conditioning counterpart; it is critical during development and remains an important aspect of social interaction and adaptation throughout life. Consequently, learning by imitation presents a rich domain for studying multi-representational interaction, a crucial aspect of embodiment.

We are developing a model of learning by imitation and testing it empirically on multiple systems in three different experimental domains: on a dynamical simulation of a human body, on a group of mobile robots, and on a collection of software agents. To keep the model behaviorally relevant, we base and constrain it with data from cognitive neuroscience, psychophysics, and ethology.

For the purposes of this paper, one of the most interesting aspects of the imitation work is the role of embodiment. Psychophysical experiments have shown that people attend to only a limited part of a demonstrated behavior and are still capable of successful imitation (Matarić 1996a). For example, when shown complex arm movements, subjects fixate on the hand, but can accurately reconstruct the entire posture. The resulting imitated behavior tends to depend more on what the subjects are told (i.e., primed with) than on their observations prior to imitation. This guides us to postulate two testable theories:

1. The specification of a "task" or "behavior" to be imitated is typically much more abstract than the particular demonstrated model, with the exception of specific motor skills, and thus may involve higher-level cognitive interpretation. This would explain the surprising lack of true imitative behavior in species other than humans, chimpanzees, and dolphins (Tomasello, Kruger & Ratner 1993, Mitchell 1987, Davis 1973).

2. The performance of imitation invokes various previously learned and possibly innate capabilities that simplify, and likely guide, the observational process. For example, the human capability to accurately reconstruct posture from only a single saccade implies that complete internal kinematic and dynamic models are involved. At a more abstract level, the mistakes children make in being overly specific in imitation (i.e., imitating superfluous details) and conversely the mistakes adults make in being overly general in imitation (i.e., leaving out what they consider to be superfluous details) both point to imitation-specific computational processes that 1) develop over time, and 2) involve a transition from purely embodied and spatial to more abstract representations.

Toward the end goal of modeling imitation and studying the role of embodiment and its interaction with higher-level aspects of the imitative task, we are beginning to address the many facets of the imitation system: the perceptual component, the multi-representational transforms, the motor system, and the learning component. Just as our multi-agent work is organized around the principle of basis behaviors, our imitation work also involves the use of such behaviors, both in the perceptual and in the motor components of the system.
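To make the notion of basis behaviors more concrete, the following is a minimal sketch of three of the spatial behaviors named earlier (homing, aggregating, dispersing), each written as a function from an agent's local view to a unit heading in the plane. It is an illustration under stated assumptions, not the controllers used on the robots: the function names, the 2-D point representation, the `radius` parameter, and the weighted-sum `combine` helper are all hypothetical choices made for this example.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float]  # hypothetical 2-D position or heading


def unit(dx: float, dy: float) -> Vec:
    """Normalize (dx, dy) to unit length; the zero vector stays zero."""
    norm = math.hypot(dx, dy)
    return (0.0, 0.0) if norm == 0.0 else (dx / norm, dy / norm)


def centroid(points: Sequence[Vec]) -> Vec:
    """Mean position of a non-empty collection of points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def homing(pos: Vec, home: Vec) -> Vec:
    """Head straight toward a known home location."""
    return unit(home[0] - pos[0], home[1] - pos[1])


def aggregating(pos: Vec, neighbors: Sequence[Vec]) -> Vec:
    """Head toward the centroid of the sensed neighbors, if any."""
    if not neighbors:
        return (0.0, 0.0)
    cx, cy = centroid(neighbors)
    return unit(cx - pos[0], cy - pos[1])


def dispersing(pos: Vec, neighbors: Sequence[Vec], radius: float) -> Vec:
    """Head away from the centroid of neighbors closer than `radius`."""
    close = [p for p in neighbors
             if math.hypot(p[0] - pos[0], p[1] - pos[1]) < radius]
    if not close:
        return (0.0, 0.0)
    cx, cy = centroid(close)
    return unit(pos[0] - cx, pos[1] - cy)


def combine(weighted: Sequence[Tuple[float, Vec]]) -> Vec:
    """Assumed composition rule: weighted vector sum, then normalize."""
    dx = sum(w * v[0] for w, v in weighted)
    dy = sum(w * v[1] for w, v in weighted)
    return unit(dx, dy)


if __name__ == "__main__":
    me = (0.0, 0.0)
    others = [(1.0, 0.0), (3.0, 0.0)]
    # Head home while keeping some distance from a nearby agent.
    heading = combine([(1.0, homing(me, (0.0, 5.0))),
                       (2.0, dispersing(me, others, radius=2.0))])
    print(heading)
```

Each function depends only on positions an agent could sense locally, which mirrors the claim that the basis set is grounded in immediate physical interaction. The weighted sum is just one simple way to compose such behaviors and is used here purely for illustration.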
We are exploring the possibility that the perceptual and motor systems develop in parallel, aided through the imitative process. The motor primitives that provide the complex and growing motor repertoire affect and are affected by perceptual primitives which allow for parsing a complex continuous stream of visual and other sensory inputs. One of the projects currently under way develops methods that automatically extract perceptual primitives using biases imposed by the motor system.

We hope that our parallel efforts in the two domains, group behavior and imitative behavior, will yield results we can use for comparative studies toward the goal of studying embodiment.

Summary

This paper has explored the pursuit of the role of embodiment in cognition. We have proposed a general question, broken it into more specific subquestions, enumerated ways of pursuing research programs aimed at answering parts of the inquiry, and reviewed some work in several related disciplines. In the last part of the paper we described two directions of our research, the study of group behavior and imitative behavior, aimed at the general question relating embodiment and cognition.

Acknowledgments

The research reported here was supported in part by the National Science Foundation CAREER Grant IRI-9624237 and in part by the National Science Foundation Infrastructure Grant CDA-9512448.

References

Agre, P. E. & Chapman, D. (1987), Pengi: An Implementation of a Theory of Activity, in 'Proceedings, AAAI-87', Seattle, WA, pp. 268-272.

Arkin, R. C. (1989), Towards the Unification of Navigational Planning and Reactive Control, in 'AAAI Spring Symposium on Robot Navigation', pp. 1-5.

Bermudez, J. L., Marcel, A. & Eilan, N., eds (1995), The Body and the Self, MIT Press, Cambridge, MA.

Brooks, R. A. (1986), 'A Robust Layered Control System for a Mobile Robot', IEEE Journal of Robotics and Automation RA-2, 14-23.

Brooks, R. A. (1991), Intelligence Without Reason, in 'Proceedings, IJCAI-91'.

Brooks, R. A. & Stein, L. A. (1994), 'Building Brains for Bodies', Autonomous Robots 1, 7-25.

Chase, I. D. (1994), Generating Societies: Social Organization in Humans, Animals and Machines, Harvard University Press, Cambridge, MA.

Chase, I. D. & Rohwer, S. (1987), 'Two Methods for Quantifying the Development of Dominance Hierarchies in Large Groups with Application to Harris' Sparrows', Animal Behavior 35, 1113-1128.

Cheney, D. L. & Seyfarth, R. M. (1990), How Monkeys See the World, The University of Chicago Press, Chicago.

Cheney, D. L. & Seyfarth, R. M. (1991), Reading Minds or Reading Behaviour?, in A. Whiten, ed., 'Natural Theories of Mind', Basil Blackwell.

Connell, J. H. (1991), SSS: A Hybrid Architecture Applied to Robot Navigation, in 'IEEE International Conference on Robotics and Automation', Nice, France, pp. 2719-2724.

Damasio, A. R. (1994), Descartes' Error, Grosset/Putnam, NY.

Davis, J. M. (1973), Imitation: A Review and Critique, in Bateson & Klopfer, eds, 'Perspectives in Ethology', Vol. 1, Plenum Press.

Dennett, D. C. (1995), Darwin's Dangerous Idea, Simon & Schuster, NY.

Fiorito, G. & Scotto, P. (1992), 'Observational Learning in Octopus vulgaris', Science 256, 545-547.

Firby, R. J. (1987), An investigation into reactive planning in complex domains, in 'Proceedings, Sixth National Conference on Artificial Intelligence', Seattle, pp. 202-206.

Georgeff, M. P. & Lansky, A. L. (1987), Reactive Reasoning and Planning, in 'Proceedings, Sixth National Conference on Artificial Intelligence', Seattle, pp. 677-682.

Goodall, J. (1971), In the Shadow of Man, Houghton Mifflin, Boston.

Gould, J. L. (1982), Ethology: The Mechanisms and Evolution of Behavior, W. W. Norton & Co., NY.

Gould, S. J. (1981), The Mismeasure of Man, W. W. Norton & Co., NY.

Jackendoff, R. (1992), Languages of the Mind, MIT Press, Cambridge, MA.

Johnson, M. (1987), The Body in the Mind: The Bodily Basis of Meaning, Imagination, and Reason, The University of Chicago Press, Chicago.

Kinsbourne, M. (1995), Awareness of One's Own Body: An Attentional Theory of Its Nature, Development, and Brain Basis, in J. L. Bermudez, A. Marcel & N. Eilan, eds, 'The Body and the Self', MIT Press, Cambridge, MA.

Kitts, B. (1996), The Evolution of Multi-celled Organization, Technical Report working paper, Volen Center for Complex Systems, Brandeis University.

Lakoff, G. (1992), Women, Fire, and Dangerous Things, University of Chicago Press, Chicago.

Lakoff, G. & Johnson, M. (1980), Metaphors We Live By, University of Chicago Press, Chicago.

Maes, P. (1989), The Dynamics of Action Selection, in 'IJCAI-89', Detroit, MI, pp. 991-997.

Maes, P. (1994), 'Modeling Adaptive Autonomous Agents', Artificial Life 1(2), 135-162.

Matarić, M. J. (1991), 'Behavior Synergy Without Explicit Integration', SIGART Bulletin, the Proceedings of the AAAI Spring Symposium on Integrated Intelligent Architectures 2(4), 130-133.

Matarić, M. J. (1992a), Behavior-Based Systems: Key Properties and Implications, in 'IEEE International Conference on Robotics and Automation, Workshop on Architectures for Intelligent Control Systems', Nice, France, pp. 46-54.

Matarić, M. J. (1992b), Designing Emergent Behaviors: From Local Interactions to Collective Intelligence, in J.-A. Meyer, H. Roitblat & S. Wilson, eds, 'From Animals to Animats: International Conference on Simulation of Adaptive Behavior'.

Matarić, M. J. (1992c), 'Integration of Representation Into Goal-Driven Behavior-Based Robots', IEEE Transactions on Robotics and Automation 8(3), 304-312.

Matarić, M. J. (1994a), Interaction and Intelligent Behavior, Technical Report AI-TR-1495, MIT Artificial Intelligence Lab.

Matarić, M. J. (1994b), Learning to Behave Socially, in D. Cliff, P. Husbands, J.-A. Meyer & S. Wilson, eds, 'From Animals to Animats: International Conference on Simulation of Adaptive Behavior', pp. 453-462.

Matarić, M. J. (1994c), Reward Functions for Accelerated Learning, in W. W. Cohen & H. Hirsh, eds, 'Proceedings of the Eleventh International Conference on Machine Learning (ML-94)', Morgan Kaufmann Publishers, Inc., New Brunswick, NJ, pp. 181-189.

Matarić, M. J. (1995), 'Issues and Approaches In the Design of Collective Autonomous Agents', Robotics and Autonomous Systems 16(2-4), 321-331.

Matarić, M. J. (1996a), Analyzing Visual Behaviors in Hand Gesture Imitation, Technical Report Computer Science Technical Report CS-96-185, Brandeis University.

Matarić, M. J. (1996b), Learning in Multi-Robot Systems, in G. Weiss & S. Sen, eds, 'Adaptation and Learning in Multi-Agent Systems', Lecture Notes in Artificial Intelligence Vol. 1042, Springer-Verlag, pp. 152-163.

Matarić, M. J. (1996c), 'Reinforcement Learning in the Multi-Robot Domain', to appear in Autonomous Robots.

McFarland, D. (1985), Animal Behavior, Benjamin Cummings, Menlo Park, CA.

McFarland, D. (1987), The Oxford Companion to Animal Behavior, Oxford University Press.

McNaughton, B. L. & Nadel, L. (1990), Hebb-Marr Networks and the Neurobiological Representation of Action in Space, in M. A. Gluck & D. E. Rumelhart, eds, 'Neuroscience and Connectionist Theory', Lawrence Erlbaum Associates, Hillsdale, NJ.

Mitchell, R. W. (1987), A Comparative-Developmental Approach to Understanding Imitation, in Bateson & Klopfer, eds, 'Perspectives in Ethology', Vol. 7, Plenum Press.

Moore, B. R. (1992), 'Avian Movement Imitation and a new Form of Mimicry: Tracing the Evolution of a Complex Form of Learning', Behavior 122, 614-623.

Payton, D. (1990), Internalized Plans: a representation for action resources, in P. Maes, ed., 'Designing Autonomous Agents: Theory and Practice from Biology to Engineering and Back', MIT Press.

Rosenschein, S. J. & Kaelbling, L. P. (1986), The Synthesis of Machines with Provable Epistemic Properties, in J. Halpern, ed., 'Theoretical Aspects of Reasoning About Knowledge', Morgan Kaufmann, Los Altos, CA, pp. 83-98.

Rumelhart, D. E. & McClelland, J. L., eds (1986), Parallel Distributed Processing, MIT Press, Cambridge, MA.

Sacks, O. (1987), The Man Who Mistook His Wife for a Hat, Harper & Row, NY.

Simon, H. (1969), The Sciences of the Artificial, MIT Press.

Simsarian, K. T. & Matarić, M. J. (1995), Learning to Cooperate Using Two Six-Legged Mobile Robots, in 'Proceedings, Third European Workshop of Learning Robots', Heraklion, Crete, Greece.

Tomasello, M., Kruger, A. C. & Ratner, H. H. (1993), 'Cultural Learning', The Journal of Behavioral and Brain Sciences 16(3), 495-552.