From: AAAI Technical Report SS-94-06. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Integrating Planning and Execution in Stochastic Domains

RichardDeardenand Craig Boutilier

Department

of Computer

Science, Universityof British Columbia

Vancouver, BC, CANADA,V6T 1Z4

{dearden, cebly}@cs.ubc.ca

Abstract

a certain action is deemed

best (for a givenstate) it should

be executed and its outcomeobserved. Subsequentsearch

Weinvestigate planning in time-critical domains

for the best next action can proceedfromthe actual outcome,

representedas MarkovDecisionProcesses. To reignoringother unrealizedoutcomesof that action.

ducethe computational

cost of the algorithmweexIn general, a fixed-depthsearch will tend to be greedy,

ecuteactionsas weconstructthe plan, andsacrifice

choosingactions that provide immediaterewardat the exoptimalityby searchingto a fixed depthandusinga

penseof long-termgain. Toalleviate this problemweassume

heuristicfunctionto estimatethe valueof states. Ala heuristicfunctionthat estimatesthe valueof eachstate, acthoughthis paperconcentrateson the searchprocecountingfor future states that mightbe reachedin additionto

dure, wealso discusswaysof constructingheuristic

that state’s immediatereward.This prevents(to someextent)

functionsthat are suitablefor this approach.

the problemof globallysuboptimal

choicesdueto finite horizoneffects. Knowledge

of certain propertiesof the heuristic

function allowthe searchtree to be pruned.Wedescribe one

1 Introduction

methodof constructingheuristic functions that allowsthis

Anoptimal solution to a decision-theoretic planningprob- informationto be easily determined.This constructionalso

lem requires the formulationof a sequenceof actions that

producesdefault actionsfor eachstate, in essence,generating

maximizes

the expectedvalueof the sequenceof worldstates

a reactive policy. Oursearch procedurecan be viewedas

throughwhichthe planningagentprogressesby executingthat

usingdeliberationto refine the reactivestrategy.

plan. Deanet al. (1993b; 1993a)havesuggestedthat many

In the next section wedescribe MDPs

and a samplerepresuch problemscan be represented as Markovdecision prosentationfor this decisionmodel.Section3 describesthe basic

ceases (MDPs).This allows the use of dynamicprogramming algorithm,includingthe searchalgorithm,the interleavingof

techniquessuch as valueorpolicy iteration (Howard

1971)

search and execution, as well as possible pruningmethods.

computeoptimalpolicies or coursesof action. Indeed,such Section4 discussessomewaysof constructingthe heuristic

policies solve the moregeneral problemof determiningthe

evaluationfunctions. Section 5 examinesthe computational

best actionfor everystate. Unfortunately,

this optimalityand cost of the algorithm,anddescribessomepreliminaryexpergenerality comesat great computational

expense.

imentalresults.

Deanet al. (1993b; 1993a) have proposed a planning

methodthat relaxes these requirements.Anenvelopeor sub- 2 The Decision Model

set of states that mightbe relevant to the planningproblem

at hand(e.g., givenparticularinitial andgoalstates) is con- Let S be a finite set of world states. In manydomainsthe

strutted, andan optimalpolicy is computed

for this restricted states will be the models(or worlds)associated with some

of

spacein an anytimefashion. Clearly,optimalityis sacrificed logical language,so ISl will be exponentialin the number

since importantstates mightlie outsidethe envelope,as is

atomsgeneratingthis language.Let ,4 be a finite set of acgenerality, for the policy makesno mentionof these ignored tions availableto an agent. Anaction takes the agentfromone

states. In (Deanet al. 1993b)it is suggestedthat domain- worldto another,but the result of an actionis known

onlywith

specific heuristics will aid in initial envelopeselectionand someprobability. Anaction maythen be viewedas a mapenvelopealteration.

ping fromS into probability distributions over S. Wewrite

Weproposean alternative methodfor dealing with Markov Pr(sx,a, s2) to denotethe probabilitythat s2 is reachedgiven

decision modelsin a real-time environment.Wesuggestthat

that action a is performedin state sl (embodying

the usual

MDPs

be explicitly viewedas search problems. Real-time Markovassumption).Weassumethat an agent, onceit has

constraintscanbe incorporated

by restricting the searchhoriperformed

an action, can observethe resulting state; hencethe

zon. This is the basic idea behind,for example,Korf’srealprocessis completelyobservable.Uncertaintyin this model

results only fromthe outcomesof actions being probabilistime heuristic search algorithm.In stochastic domainsthere

is anotherimportantreasonfor interleavingexecutioninto the

tic, not fromuncertainty about the state of the world. We

planningprocess,namely,to restrict the searchspaceto the

assumea real-valued rewardfunction R, with R(s) denoting

actual outcomes

of probabilistic actions. In particular, once the (immediate)

utility of beingin state s. Forour purposes

55

Action

Move

Discrirainant

Office

-.Office

Move

Rain,-~Umb

BuyCoffee

-,Office

GetUmbrella

Office

Office

Conditions

Reward

I-IasUserCoffee,--,Wet

1.0

I-IasUserCoffee, Wet

0.8

--,HasUserCoffee, --Wet

0.2

--,HasUserCoffee, Wet

0.0

Prob.

0.9

0.1

Office

0.9

0.1

Wet

0.9

0.1

HRC

0.8

0.2

1.0

Umbrella

0.9

0.1

HUC,-~HRC 0.8

-~HRC

0.1

0.1

-~HRC

0.8

0.2

1.0

Effect

-~Office

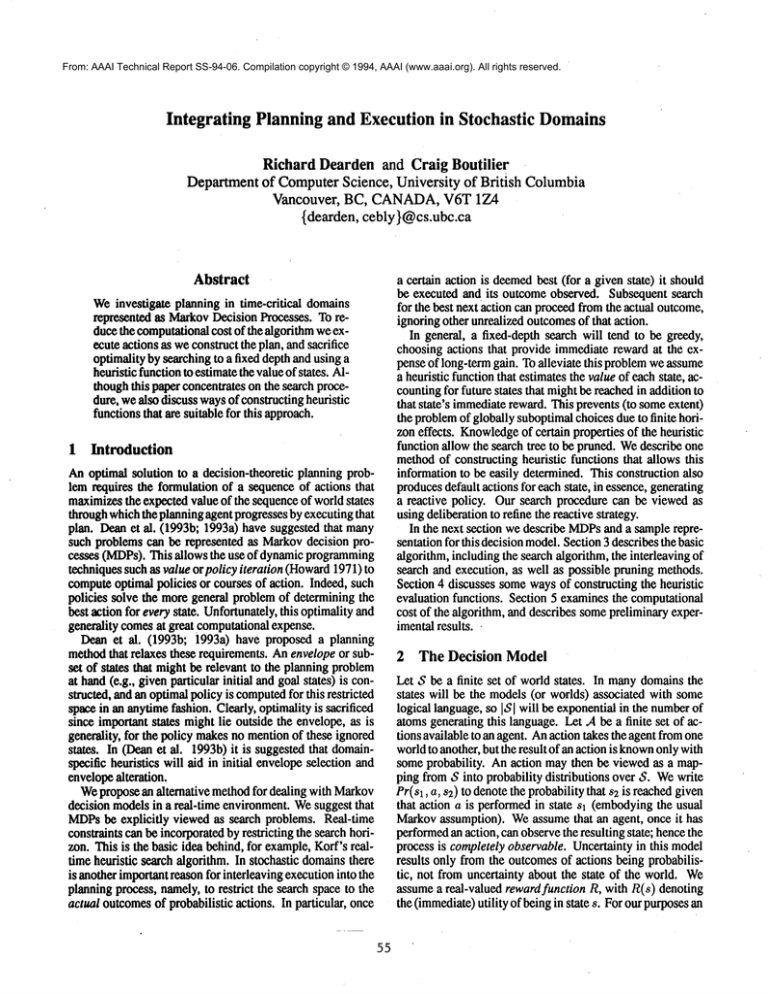

Figure 2: An example of a reward function for the coffee

delivering robot domain.

is performedin a state s satisfying Di, then a randomeffect

from ELi is applied to s. For example, in Figure 1, if the

DelCoffee

Office,HRC

agent carries out the GetUmbrellaaction in a state whereOffice is true, then with probability 0.9 Umbrellawill be true,

and every other proposition will remain unchanged,and with

~Office,HRC

probability 0.1 there will be no changeof state. For convenience, we mayalso write actions as sets of action aspects as

~HRC

illustrated for the Moveaction in Figure 1 (Boutilier and Dearden 1994). The action has two descriptions which represent

two independentsets of discrir0inants, the cross productof the

Hgure 1: An example domain presented as STRIPS-style

aspects is used to determine the actual effects. For example,

action descriptions. Note that HUCand HRCare HasUserif Rain and Office are true, and a Moveaction is performed

Coffee and HasRobotCoffeerespectively.

then with probability 0.81 -~Office, Wet will result, and so

on. This representation of domainsin terms of propositions

MDP

consists of,.q, .A, R and the set of transition distributions

also provides a natural wayof expressing rewards. Figure 2

showsa representation of rewards for this domain. Only the

{Pr(., a, .) : a E A}.

propositionsHasUserCoffeeand Wet affect the reward for any

Acontrol policy Ir is a function ~- : ,.q ~ A. If this policy

is adopted, lr(s) is the action an agent will performwhenever given state.

it finds itself in state s. Given an MDP,an agent ought to

This frameworkis flexible enoughto allow a wide variety

adopt an optimal policy that maximizesthe expected rewards

of different rewardfunctions. Oneimportantsituation is that

accumulatedas it performsthe specified actions. Weconcenin whichthere is someset S~c_ S of goal states, and the agent

trate here on discounted infinite horizon problems:the value

tries to reach a goal state in as few movesas possible) Since

of a reward is discounted by some factor/3(0 </3 < 1)

we are interleaving plan construction and plan execution, the

each step in the future; and we want to maximizethe expected

time required to plan is significant whenmeasuringsuccess;

accumulateddiscounted rewards over an infinite time period.

but as a first approximation

we can represent this type of situIntuitively, a DTPproblem can be viewed as finding a good

ation with the following rewardfunction (Deanet al. 1993b):

(or optimal) policy.

R(s) = 0 ifs E Sg and R(s) = -1 otherwise.

Theexpected value of a fixed policy ~r at any given state s

is specified by

3 The Algorithm

V,(s) = n(s) + ~Pr(s, ~(s),t).

t66

Since the factors V.(s) are mutually dependent, the value of

~r at any initial state s can be computed

by solving this system

of linear equations. Apolicy Ir is optimal

if V, (s) >_ V,~, (s)

for all s E ,q andpolicies 7r’.

Althoughwe represent actions as sets of stochastic transitions from state to state, we expect that domainsand actions will usually be specified in a moretraditional form for

planning purposes. Figure 1 showsa stochastic variation of

STRIPSrules (Kushmerick, Hanks and Weld 1993) for a domain in which the robot must deliver coffee to the user. An

effect E is a set of literals. If weapply E to somestate s, the

resulting state satisfies all the literals in E and agrees with s

for all other literals. Theprobabilistic effect of an action is

a finite set El, ...E, of effects, with associated probabilities

Pl,..., Pn where ~ Pi : 1.

Since actions mayhave different results in different contexts, we associate with each action a finite set D1,..., D. of

mutually exclusive and exhaustive sentences called discriminants, with probabilistic effects ELI, ..., EL,~. If the action

56

Our algorithm for integrating planning and execution proceeds by searching for a best action, executing that action,

observingthe result of this execution, and iterating. Theunderlying search algorithmconstructs a partial decision tree to

determinethe best action for the current state (the root of this

tree). Weassumethe existence of a heuristic function that estimates the value of each state (such heuristics are described

in Section 4). The search tree maybe prunedif certain properfies of the heuristic function are known.This search can be

terminated whenthe tree has been expandedto somespecified

depth, whenreal-time pressures are brought to bear, or when

the best action is known(e.g., due to complete pruning, or

becausethe best action has beencachedfor this state).

Oncethe search algorithmselects a best action for the current state, the action is executed and the resulting state is

observed. By observing the new state, we establish which

of the possible action outcomesactually occurred. Without

this information, the search for the best next action wouldbe

1If a "final" state stops the process, wemayuse self-absorbing

states.

Initial First First

State Action State

Second Second State Utility of 2ndact. Actionandvalue

Action

State Value given1st state

of first state

¯ x p---0.9, V=2

A~¯ y p--¯.l, v=3 } U=2.1 ~ Act.AV(t)=2.39

Cp=O

t~~¯

p---0.7,V--O

¯

/O~¯

~i

p=O.3,

V=l} u--o.3

pffiO.7,

V=O

/

p---0.3, V=4} U=I.2

p=0.9, V=O

~ Act.A

Bestaction

andvalue

\,

U=2.23

V(u)=l.58

\

p=O.1,v=2} u--t2

Act.BV(s)=2.64

p--0.9, V=2

p=0.1, V=0 } U=l.8 ~ Act.A V(v)=2.62

~v (p-=-o.5

a

1,=o.6,

v=]

~

p--0.4, V=2} U=1.4

x~

p=O.l, V=4

p=0.9, V=0} U=0.4 ~ Act.B V(w)=3.25/

/

U=2.94

p--o.5,

v=3

p=o.5,v=2

} u=2.5

R(t) = R(u)= 0.5, R(v) = R(w)=

=0, R(y)=

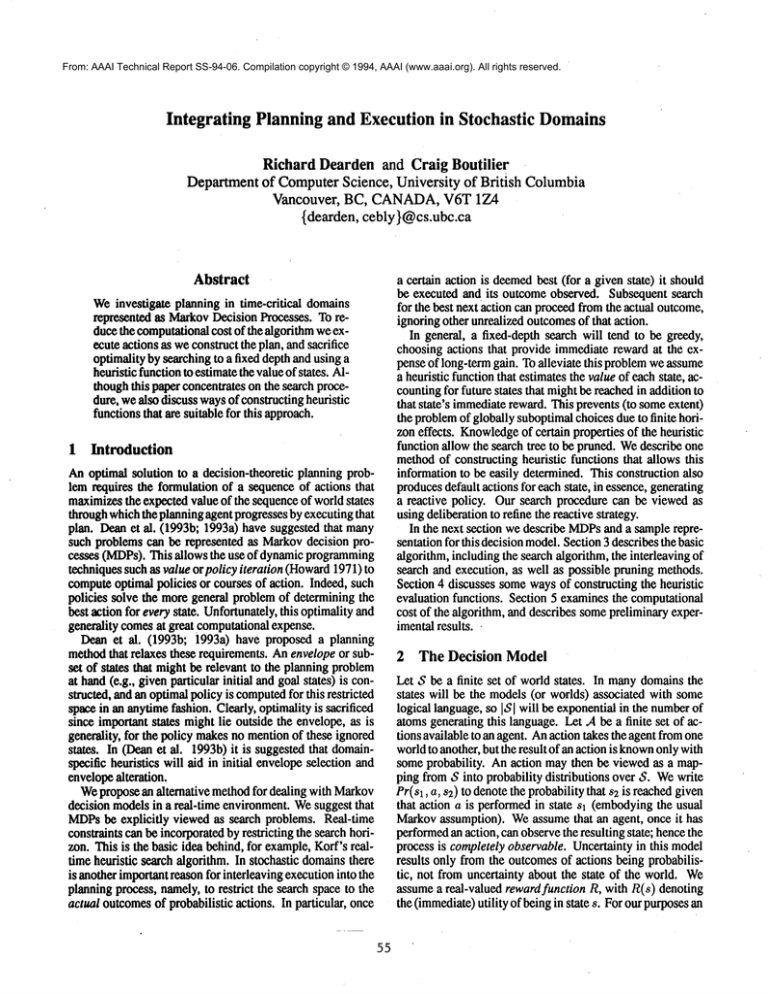

Figure3: Anexample

of a two-levelsearchfor the best action fromstate s.

forced to accountfor every possible outcomeof the previous action. Byinterleaving executionand observationwith

search,weneedonly searchfromthe actualresulting state.

In skeletal form,the algorithmis as follows.Wedenoteby

s the currentstate, andbyA*(t) 2the best actionfor state t.

1. If state s has not beenpreviouslyvisited, build a partial

decision tree of all possible actions andtheir outcomes

beginningat state s, usingsomecriteria to decidewhen

to stop expanding

the leavesof the tree. Usingthe partial

tree andthe heuristic function,calculatethe best action

A*(s)E .,4 to performin state s. (This value may

cachedin cases is revisited.)

2. ExecuteA*(s).

3. Observethe actual outcome

of A*(s). Updates to be this

observedstate (the state is known

with certainty, given

the assumptionof completeobservability).

4. Repeat.

Thepoint at whichthe algorithmstops dependson the characteristics of the domain.

Forexample,

if there are goalstates,

and the agent’s task is to reach one, planningmaycontinue

until a goalstate is reached.In process-oriented

domains,the

algorithmcontinuesindefinitely. In our experiments,wehave

typicallyrun the algorithmuntil a goalstate is reached(if one

exists) or for a constantnumber

of steps.

3.1 Action Selection

Herewediscussstep oneof the high-levelalgorithmgivenin

Section3. Toselect the bestactionfor a givenstate, the agent

needs to estimate the value of performingeach action. In

orderto dothis, it buildsa partial decisiontree of actionsand

2Initially A*(t) mightbe undefined

for all t. However,

if the

heuristicfunctionprovidesdefaultreactions(seeSection4), it

usefulto thinkof theseas the bestactionsdetermined

bya depthO

search.

57

resulting states, andusesthe tree to approximate

the expected

utility of eachaction. Thissearchtechniqueis related to the

.-minimaxalgorithmof Ballard (1983). As weshall see

Section3.2, there are similarities in the waywecan prunethe

searchtree as well. Figure3 showsa partial tree of actions

twolevels deep. Fromthe initial state s, if weperformaction

A, wereach state t with probability 0.8, and state u with

probability0.2. Theagentexpandsthese states with a second

action and reaches the set of secondstates. To determine

the actionto performin a givenstate, the agentestimatesthe

expectedutility of eachaction. If s andt are states,/~ is the

factor bywhichthe rewardfor future states is discounted,and

V(t) is the heuristicfunctionat state t, the estimatedexpected

utility of actionAiis:

U(A,I ) - A,, t)v(t)

tE8

V(s) Is):if Aj

s is

a leaf

node

V(s) = R(s) + max{U(Aj

E .,4

} otherwise

Figure3 illustrates the processwith a discountingfactor of

0.9. Theutility of performingaction Aif the worldwerein

state t is the weighted

sumof the valuesof beingin states a:

andy, whichis 2.1. Sincethe utility of action B is 0.3, we

select actionAas the best (givenour currentinformation)for

state t, andmakeV(t) R(t) + fl U ( AIt) = 2.39. Th

e utility

of actionAin state s is Pr(s, A, t ) V( t ) +Pr(s, A, u ) V

giving U(AIs) = 2.23. This is lower than U(BIs), so we

select B as the best action for state s, recordthe fact that

A*(8) is B, and execute B. Byobserving the world, the

agentnowknowswhetherstate v or wis the newstate, andcan

build on its previoustree, expanding

the appropriatebranchto

twolevels anddeterminingthe best action for the newstate.

Noticethat if (say) v results fromactionB, the tree rooted

state wcan safely be ignored--the unrealizedpossibility can

haveno further impacton updatedexpectedutility (unless

is revisitedvia somepath).

States

/~

MAXstep

(~ Val.=7

b

t~

t’~

~ Val.=7

Actions

AVERAGEstep

Val.=5

[~]

States

p_.~tT--

~.,

~stimated Val.=3

k~J

/ \

,,~."

r~_.0.5

Estimated V~’--4 6

N~stimated Ual.=2

(b)

(a)

Figure4: Twokindsof pruningwhere~(s) < 10 andis accurateto 4-1. In (a), utility pruning,the trees at UandVneednot

searebed,whilein Co), expectationpruning,the trees belowT andUare ignored,althoughthe states themselvesare evaluated.

action are combined.Twosorts of cuts can be madein the

search tree. If weknowboundson the maximum

and/or the

minimum

values of the heuristic function, utility cuts (much

like c~ and fl cuts in minimaxsearch) can be used. If the

heuristic function is reasonable, the maximum

and minimum

valuesfor anystate can be bounded

easily usingknowledge

of

the underlyingdecision process. In particular, with maximum

and minimum

immediaterewards of R+ and R-, the maximum

and minimum

expectedvalues for any state are bounded

+ and ~ ¯ R-, respectively. If we have bounds

by ~

¯

R

i --p

t --/.,

on the error associatedwiththe heuristic function,expectation

cuts maybe applied. Theseare illustrated with examples.

Utility Pruning Wecan prune the search at an AVERAGE

step if weknowthat no matter what the value of the

remainingoutcomesof this action, wecan never exceed

the utility of someother action at the precedingMAX

step. For example,

considerthe searchtree in Figure4(a).

Weassumethat the maximum

value the heuristic function can take is 10. Whenevaluating action b, since

weknowthat the value of the subtree rootedat T is 5,

and the best that the subtrees belowUand V could be

is 0.1 x 10 + 0.2 x 10 = 3, the total cannotbe larger

than 3.5 + 1 + 2 = 6.5 so neither the tree belowUnor

that belowVis worthexpanding.This type of pruning

requires that weknowin advancethe maximum

value of

the heuristic function. Theminimum

value can be used

in a morerestricted fashion.

3.2 Techniquesfor Limitingthe Search

Asit stands, the searchalgorithmperformsin a very similar ExpectationPruningFor this type of pruning, weneed to

knowthe maximum

error associated with the heuristic

wayto minimax

search. Determiningthe value of a state is

function(see (Boutilier andDe,arden1994)for a way

analogous to the MAX

step in minimax,while calculating

estimatingthis value).If weare at a maximizing

step and,

the value of an action can be thought of as an AVERAGE

even

taking

into

account

the

error

in

the

heuristic

funcstep, whichreplaces the MINstep (see also (Ballard 1983)).

tion, the action weare investigating cannotbe as good

Whenthe search tree is constructed, wecan use techniques

as someother action, then wedo not needto expandthis

similar to those of Alpha-Betasearch to prunethe tree and

action further. For example,considerFigure4(b), where

reducethe numberof states that mustbe expanded.Thereare

weassumethat 1;(S) is within +1of its true (optimal)

two applicable pruningtechniques. To makeour description

value. Wehave determinedthat U(alS) = 7, therefore

clearer, wewill treat a singleply of searchas consistingof two

any potentially better action musthavea valuegreater

steps, MAX

in whichall the possibleactions froma state are

than 6. Since p(S, a, T)V(T)+ p(S, a, U)V(U)< 4,

compared,and AVERAGE,

wherethe outcomesof a particular

If the agentfinds itself in a state visited earlier, it may

use the previouslycalculated andcachedbest action A*(s).

This allowsit to avoidrecalculatingvisited states, andwill

considerablyspeedplanningif the sameor related problems

mustbe solvedmultipletimes, or if actions naturallylead to

"cycles" of states. Eventually,A*(s) couldcontain a policy

for every reachable state in S, removingthe need for any

computation.

In Figure3, the tree is expandedto depth two. Thedepth

can obviouslyvary dependingon the available time for computation. Thedeeperthe tree is expanded,the moreaccurate

the estimatesof the utilities of eachaction tend to be, and

hence the moreconfidenceweshould have that the action

selected approachesoptimality.

If there are rn actions, andthe number

of states that could

result fromexecutingan actionis onaverageb, then a tree of

depthone will require O(mb)steps, twolevels will require

O(m262),and so on. The potentially improvedperformance

of a deepersearchhas to be weighedagainstthe time required

to perform the search (Russell and Wefald1991). Rather

than expandto a constantdepth, the agentcouldinstead keep

expanding

the tree until the probabilityof reachingthe state

being considereddrops belowa certain threshold. This approach mayworkwell in domainswherethere are extreme

probabilitiesor utilities. Pruningof the searchtree mayalso

exploit this information.

58

evenif b is as goodas possible(giventhese estimates),

it cannotachievethis threshold,so there is no needto

search further belowT and U.

7~inducesa partition of the state spaceinto sets of states,

or clusters whichagree on the truth values of propositions

in 7~. Furthermore,the actions fromthe original ’concrete’

state spaceapply directly to these clusters. This is due to

Although

wehavenot yet empiricallyinvestigatedthe effects

the fact that eachactioneither mapsall the states in a cluster

of pruningonthe size of the searchtree, utility pruningcanbe to the samenewcluster, or changesthe state, but leaves the

expectedto produceconsiderablesavings.It will beespecially cluster unchanged.Thesetwofacts allowus to performpolicy

valuable whennodesare orderedfor expansionaccordingto

iteration onthe abstractstate space.Thealgorithmis:

their probabilityof actuallyoccurringgivena specificaction.

1. Constructthe set of relevantpropositions7~. Theactions

Expectationpruningrequires a modificationof the search

are left unchanged,

but effects on propositionsnot in

algorithmto checkall outcomesof an action to see if the

are ignored.

weightedaverageof their estimatedvaluesis sufficient to justify continuednodeexpansion.This meansthat the heuristic

2. Use~ to partition the state spaceinto clusters.

value of sibling nodes mustbe checkedbefore expandinga

3. Usethe policyiteration algorithmto generatean abstract

givennode.Takinginto accountthe cost of this, andthe diffipolicy for the abstract state space. For details of this

culty of producingtight boundson V, this typeof pruningmay

algorithmsee (Howard1971;Deanet al. 1993b).

not be cost-effective in somedomains.However,the method

of generatingheuristic functions in (Boutilier andDearden

Byaltering the numberof reward-changingpropositions

1994)(see the next section for a brief discussion)produces in ~, wecan vary its size, and hencethe granularity and

just such bounds.Expectationpruningis closely related to

accuracyof the abstract policy. This allowsus to investigate

whatKoff(1990)calls alpha-pruning.Thedifferenceis that

the tradeoff betweentime spent buildingthe abstract policy

whileKorfreliesona propertyof the heuristicthat it is always andits degreeof optimality. Thepolicy iteration algorithm

increasing,werely on an estimateof the actual error in the

also computes

the valueof eachcluster in the abstract space.

heuristic.

This value can be usedas a heuristic estimate of the value

of the cluster’s constituent states. Oneadvantageof this

approachis that it allowsus to accuratelydeterminebounds

4 GeneratingHeuristic Functions

on the differencebetweenthe heuristic value for anystate,

Wehaveassumedthe existenceof a heuristic function above. andits valueaccordingto an optimalpolicy -- see (Boutilier

Wenowbriefly describe somepossible methodsfor generating and Dearden1994)for details. As shownabove,this fact is

these heuristics. Theproblem

is to build a heuristic function very usefulfor pruningthe searchtree. Asecondadvantage

of

whichestimatesthe valueof eachstate as accuratelyas pos- this methodfor generatingheuristic valuesis that it provides

sible with a minimum

of computation.In somecases such a

defaultreactionsfor eachstate.

heuristic mayalready be available. Here wewill sketch an

approach

for domainswith certain characteristics, andsuggest 4.2 Other Approaches

ideas for other domains.

Thealgorithmdescribedabovefor buildingthe heuristic function is certainly not appropriatein all domains.Certaindo4.1 Abstractionby IgnoringPropositions

mainsare morenaturally representedby other means(naviIn certain domains,actions mightbe representedas STRIPS- gation is oneexample).In someeases abstractionsof actions

like rules as in Figure10 andthe rewardfunctionspecifiedin

and states mayalready be available (Tenenberg

1991).

termsof certainpropositions.If this is the casewecanbuildan

For robot navigationtasks, an obviousmethodfor clusterabstractrepresentationof the state spaceby constructinga set

ing states is basedon geographicfeatures. Nearbylocations

7~ of relevantpropositions,andusingit to constructabstract can be clusteredtogetherinto states that representregionsof

stales eachcorresponding

to all the states whichagreeon the

the map,but providingactionsthat operateonthese regionsis

values of the propositionsin 7~. A completedescription of

morecomplex.Oneapproachis to assumesomeprobability

our approach,alongwiththeoretical andexperimentalresults,

distribution over locationsin eachregion,andbuild abstract

can be found in (Boutilier and Dearden1994). However

actions as weightedaveragesover all locationsin the region

will broadlydescribethe techniquehere.

of the corresponding

concreteaction. Thedifficulty with this

Toconstruct 7~, wefirst construct a set of immediately approachis that it is computationally

expensive,requiringthat

relevant propositions2~7~.Theseare propositionsthat have everyaction in everystate by accountedfor whenconstructing

significant effect on the rewardfunction. For example,in

the abstractactions.

Figure 2, both HasUserCoffeeand Wet have an effect on

If abstract actions (possibly macro-operators(Fikes and

the rewardfunction; but to producea small abstract state

Nilsson1971))are alreadyavailable, weneedto find clusters

space, ZT~mightinclude only HasUserCoffee,

since this is

to whichthe actions apply. In manycases this maybe easy as

the propositionwhichhas the greatest effect on the reward the abstract actionsmaytreat manystates in exactlythe same

function.

way,hencegeneratinga clustering scheme.In other domains,

7~ will includeall the propositionsin ZT~,but also any a similar weightedaverageapproachmaybe needed.

propositionsthat appearin the discriminantof an action which

allowsus to changethe truth valueof somepropositionin 7~. 5 SomePreliminaryResults

Formally,7~ is the smallestset suchthat: 1) 2~7~C 7~; and

2) if P E 7~ occursin an effect list of someaction, then all

Weare currently exploring,both theoretically andexperipropositionsin the corresponding

discriminantare in ~.

mentally,the tradeoffsinvolvedin the interleavingof planning

59

Timeper action

2

I

0

I

Search 2

Depth

3

0.015

I

Search

Depth 2

3

0.081

0.662

4

....

~

Accumulated

rewardfor 20 actions

7.5

12.9 15

I

OpL

Pol.

6.25

4

~n

5.111

12.9

(a)

(b)

Abstract

Policy

No.of Errors

Total Error.

Max.Error

AverageError

Average

non-zero

2OO

753.773

5.4076

2.956

1 Step 2 Step 3 Step 4 Step 5 Step

Search Search Search Search Search

144

212.528

3.9607

0.830

3.769 1.476

136

136

161.068 161.068

2.7372 2.7372

0.629 0.629

21

11.160

2.1045

0.044

21

8.834

2.1045

0.034

1.184

0.531

0.421

1.184

Error

(c)

Figure 5: Timing(a) and value (b) of policy for a 256 state, six action domainfor various depths of search. Table (c) shows

comparisonof the policies induced by search to various depths with the optimal policy.

and execution in this framework. Wecan measure the complexity of the algorithm as presented. Let m= [A[ be the

number of actions. Wewill assume that when constructing

the search tree for a state, we explore to depth d, and that

the branching factor for each action (the maximum

numberof

3outcomesfor the action in any given state) is at most b.

Thecost of calculating the best action for a single state is

d. The cost per state is slightly less than this since we can

mab

reuse our calculations, but the overall complexityis O(md).

The actual size of the state space has no effect on the algorithm; rather it is the numberof states visited in the execution

of the plan that affects the cost. This is clearly domaindependent, but in most domainsshould be considerably lower than

the total numberof states. Mostimportantly, the complexity

of the algorithm is constant and execution time (per action)

can be boundedfor a fixed branching and search depth. By

interleaving execution with search, the search space can be

drastically reduced. Whenplanning for a sequence of n actions the execution algorithm is linear in n (with respect to

the factor mdbd);a straightforward search without execution

for the same numberof actions is O(b").

Someexperimental tests provide a preliminary indication

that this frameworkmaybe quite valuable. To generate the

results discussed in this section, we used a domainbased on

the one described in Figures 1 and 2 but with another item

aWeignore preconditionsfor actions here, assumingthat an action can be "attempted"in any circumstance.However,preconditions mayplaya usefulrole bycapturinguser-supplied

heuristicsthat

filter out actionsin situations in whichthey oughtnot (rather than

cannot) be attempted.This will effectively reduce the branching

factor of the searchu’e~.

60

(snack) that the robot mustdeliver, and a robot that only carries one thing at a time. Weconstructed the heuristic function

using the procedure described in Section 4, with HasUserCoffee as the only immediatelyrelevant proposition, ignoring

the proposition HasUserSnack;thus, T¢ = {HasUserCoffee,

Office, HasRobotCoffee, HasRobotSnack}.

The domaincontains 256 states and six actions. All timing results were produced on a Sun SPARCstation1. Computing an optimal policy by policy iteration required 130.86

seconds, while computinga sixteen state abstract policy

(again using policy iteration) for the heuristic function required 0.22 seconds. Figure 5(a) shows the time required

for searching as a function of search depth, while (b) shows

the average accumulated reward after performing twenty actions from the state (-~HasRobotCoffee, ~HasUserCoffee,

-~HasRobotSnack, -~HasUserSnack, Rain, ~Umbrella, Ofrice, ~We0.4

As the graphs show, a look-ahead of four was necessary

to producean optimal policy from the abstract policy for this

particular starting point, but even this muchlook-aheadonly

required 5.111 seconds to plan for each action, for a total

(13 step) planning time of 66.44 seconds, about half that

computingthe optimal policy using policy iteration. While

policy iteration is clearly superiorif plans are required for all

possible starting states, by sacrificing this generality to solve

a specific problem the search methodprovides considerable

computationalsaving. Weshould point out that no pruning has

4Thisis the state

that requiresthe longestsequence

of actionsto

reacha state withmaximal

utility; i.e., the state requiringthe"longest

optimalplan."

beenperformedin this example.Furthermore,as mentioned

above,policy iteration will require moretime as the state

space grows,whereasour search methodwill not.

Figure5(c) comimres

the values of the policies that each

depth of searching producedwith the value of the optimal

policy overthe entire state space.Sincethe rangeof expected

valuesof states in this domainis 0 to 10, the avergaeerrors

produced

by the algorithmare quite small, evenwithrelatively

little search. Thetable suggeststhat searchingto depthn is

at least as goodas searchingto depth n - 1 (althoughnot

alwaysbetter), andin this domain,is considerablybetter than

followingthe abstract policy. In noneof the domainswehave

tested has searchingdeeperproduceda worsepolicy, although

this maynot be the casein general(see (Pearl1984)for a proof

of this for minimax

search).

6 Conclusions

Wehave proposeda frameworkfor planning in stochastic

domains.Further experimental workneeds to be done to

demonstrate

the utility of this model.In particular, werequire

an experimentalcomparisonof the search-executionmethod

andstraightforwardsearch, as well as further comparison

to

exactmethodslike policyiteration andheuristic methodslike

the envelopeapproachof Deanet al. (1993b). Weintend

use the framework

to explorea number

of tradeoffs (e.g., as

in (Russell and Wefald1991)). In particular, wewill look

at the advantagesof a deepersearch tree, andbalance this

withthe cost of buildingsucha tree, andat the tradeoff betweencomputationtime and improvedresults whenbuilding

the heuristic function. To illustrate these ideas weobserve

that if the depthof the searchtree is O, this corresponds

to a

reactivesystemwherethe best actionfor eachstate is obtained

fromthe abstractpolicy. If eachcluster for the abstractpolicy contains a single state, wehaveoptimalpolicy planning.

Theusefulnessof these tradeoffs will vary whenplanningin

different domains.

Some

of the characteristics of domains

that will affect our

choicesare:

¯ T/me:for time-critical domains

it maybe better to limit

timespentdeliberating(perhapsadoptinga reactivestrategy basedon the heuristic function). A moredetailed

heuristic functionand a smallersearch tree maybe appropriate.

¯ Continuity:if actionshavesimilareffects in largeclasses

of states andmostof the goalstates are fairly similar, we

can use a less detailedheuristic function(moreabstract

policy).

¯ Fan-out:if there are relatively fewactions, andeach

action has a smallnumberof outcomes,wecan afford to

increasethe depthof the searchtree.

¯ Plausiblegoals:if goalstates are hardto reach, a deeper

search tree anda moredetailed heuristic function may

be necessary.

¯ Extremeprobabilities:with extremeprobabilities it may

be worth only expandingthe tree for the mostprobable outcomesof each action. This seemsto bear some

relationship to the envelopereconstructionphaseof the

recurrentdeliberationmodelof Deanet al. (1993a).

61

In the future wehopeto continueour experimentalinvestigation of the algorithmto look at the efficacy of pruning

the search tree, as well as experimenting

with variable-depth

search, andthe possibilityof improving

the heuristic function

by recording newlycomputedvalues of states, rather than

best actions. This last idea will allowus to investigate the

tradeoff betweenspeed (whenthe agent is in a previously

visited state) and accuracywhenselecting actions. Wealso

hopeto investigate the performance

of this approachin other

types of domains,includinghigh-level robot navigation,and

schedulingproblems,andto further investigate the theoretical propertiesof the algorithm,especially throughanalysis

of the value of deeper searchingin producingbetter plans

(Pearl 1984). Ourmodelcan be extendedby relaxing some

the assumptionsincorporatedinto the decision-model.Semimarkovprocesses as well as partially observableprocesses

will require interesting modificationsof our model.Finally,

wemustinvestigate the degreeto whichthe restricted envelope approachmaybe meshedwith our model.

Acknowledgments

Discussionswith Mois6sGoldszmidthaveconsiderably influencedour viewand use of abstraction for MDPs.

References

Ballard, B. W. 1983. The *-minimax search procedure for trees

containing chance nodes. Artificial Intelligence, 21:327-350.

Boutilier, C. and Dearden,R. 1994. Using abstractions for decisiontheoretic planning with time constraints. (submitted).

Dean, T., Kaelbling, L. P., Kirman, J., and Nicholson, A. 1993a.

Deliberation scheduling for time-critical decision making. In

Proceedingsof the Ninth Conferenceon Uncertainty in Artificial Intelligence, pages 309-316, Washington,D.C.

Dean, T., Kaelbling, L. P., Kirman, J., and Nicholson, A. 1993b.

Planning with deadlines in stochastic domains.In Proceedings

of the Eleventh National Conferenceon Artificial Intelligence,

pages 574-579, Washington, D.C.

Fikes, R. E. and Nilsson, N. J. 1971. Strips: A newapproachto the

application of theorem proving to problemsolving. Artificial

Intelligence, 2:189-208.

Howard, R. A. 1971. DynamicProbabilistic Systems. Wiley, New

York.

Korf, R. E. 1990. Real-timeheuristic search. Artiftciallntelligence,

42:189-211.

Kushmerick,

N., Hanks,S., andWeld,D. 1993.Analgorithmfor

probabilisticplanning.Technical

Report93-06-04,

University

of Washington,

Seattle.

Pearl, J. 1984.Heuristics:IntelligentSearchStrategiesfor Computer ProblemSolving. Addison-Wesley,

Reading,Massachusetts.

Russell,S. J. andWefald,E. 1991.Dothe RightThing:Studiesin

LimitedRationality.M1T

Press, Cambridge.

Tenenberg,

J. D.1991.Abstractionin planning.In Allen,J. F.,

Kautz,H.A., Pelavin,R. N., andTenenberg,

J. D., editors,

ReasoningaboutPlans, pages213-280.Morgan-Kaufmann,

SanMateo.