From: FLAIRS-01 Proceedings. Copyright © 2001, AAAI (www.aaai.org). All rights reserved.

Decision Tree Rule Reduction Using Linear Classifiers in Multilayer Perceptron

DaeEun Kim

Sea Woo Kim

Division of Informatics

University of Edinburgh

5 Forrest Hill

Edinburgh, EH1 2QL, United Kingdom

daeeun@dai.ed.ac.uk

Dept. of Information and

Communication Engineering, KAIST

Cheongryang 1-dong

Seoul, 130-011, Korea

seawoo@ngis.kaist.ac.kr

Abstract

It has been shown that a neural network is better than a direct application of induction trees in modeling complex relations of input attributes in sample data. We propose that concise rules be extracted to support data with input variable relations over continuous-valued attributes. Those relations, as a set of linear classifiers, can be obtained from neural network modeling based on back-propagation. A linear classifier is derived from a linear combination of input attributes and neuron weights in the first hidden layer of neural networks. It is shown in this paper that when we use a decision tree over linear classifiers extracted from a multilayer perceptron, the number of rules can be reduced. We have tested this method over several data sets to compare it with decision tree results.

Introduction

The discovery of decision rules and recognition of patterns from data examples is one of the most challenging problems in machine learning. If data points contain numerical attributes, induction tree methods need the continuous-valued attributes to be made discrete with threshold values. Induction tree algorithms such as C4.5 build decision trees by recursively partitioning the input attribute space (Quinlan 1996). The tree traversal from the root node to each leaf leads to one conjunctive rule. Each internal node in the decision tree has a splitting criterion or threshold for continuous-valued attributes to partition some part of the input space, and each leaf represents a class related to the conditions of each internal node.

Approaches based on decision trees involve making the continuous-valued attributes discrete in input space, creating many rectangular divisions. As a result, they may be unable to detect data trends or desirable classification surfaces. Even in the case of multivariate discretization methods, which search in parallel for threshold values for more than one continuous attribute (Fayyad & Irani 1993; Kwedlo & Kretowski 1999), the decision rules may not reflect data trends, or the decision tree may build many rules with the support of a small number of examples or ignore some data points by dismissing them as noisy.

A possible process is suggested to grasp the trend of the data. It first tries to fit the relationship between data points in a given data set, using a statistical technique. It generates many data points on the response surface of the fitted curve, and then induces rules with a decision tree. This method was introduced as an alternative measure regarding the problem of direct application of the induction tree to raw data (Irani & Qian 1990; Kim 1991). However, it still has the problem of requiring many induction rules to reflect the response surface.

In this paper we use a hybrid technique to combine neural networks and decision trees for data classification (Kim & Lee 2000). It has been shown that neural networks are better than direct application of induction trees in modeling nonlinear characteristics of sample data (Dietterich, Hild, & Bakiri 1990; Quinlan 1994; Setiono & Liu 1996; Fisher & McKusick 1989; Shavlik, Mooney, & Towell 1991). Neural networks have the advantage of being able to deal with noisy, inconsistent and incomplete data. A method to extract symbolic rules from neural networks has been proposed to increase the performance of the decision process (Andrews, Diederich, & Tickle 1996; Taha & Ghosh 1999; Fu 1994; Towell & Shavlik Oct 1993; Setiono & Liu 1996).

The KT algorithm developed by Fu (Fu 1991) extracts rules from subsets of connected weights with high activation in a trained network. The M of N algorithm clusters weights of the trained network and removes insignificant clusters with low active weights. Then the rules are extracted from the weights (Towell & Shavlik Oct 1993).

A simple rule extraction algorithm that uses discrete activations over continuous hidden units is presented by Setiono and by Taha (Setiono & Liu 1996; Taha & Ghosh 1999). They used in sequence a weight-decay back-propagation over a three-layer feed-forward network, a pruning process to remove irrelevant connection weights, a clustering of hidden unit activations, and extraction of rules from discrete unit activations. They derived symbolic rules from neural networks that include oblique decision hyperplanes instead of general input attribute relations (Setiono & Liu 1997). Also, the direct conversion from neural networks to rules has an exponential complexity when using a search-based algorithm over incoming weights for each unit (Fu 1994; Towell & Shavlik Oct 1993). Most of the rule extraction algorithms are used to derive rules from neuron weights and neuron activations in the hidden layer as a search-based method. An instance-based rule extraction method is suggested to reduce computation time by escaping search-based methods (Kim & Lee 2000). After training two hidden layer neural networks, the first hidden layer weight parameters are treated as linear classifiers. These linear differentiated functions are chosen by decision tree methods to determine decision boundaries after re-organizing the training set in terms of the new linear classifier attributes.

Our approach is to train a neural network with sigmoid functions and to use decision classifiers based on weight parameters of neural networks. Then an induction tree selects the desirable input variable relations for data classification. Decision tree applications have the ability to determine proper subintervals over continuous attributes by a discretization process. This discretization process will cover oblique hyperplanes mentioned in Setiono's papers. In this paper, we have tested linear classifiers with variable thresholds and fixed thresholds. The methods are tested on various types of data and compared with the method based on the decision tree alone.

Problem Statement

Induction trees are useful for a large number of examples, and they enable us to obtain proper rules from examples rapidly (Quinlan 1996). However, they have difficulty in inferring relations between data points and cannot handle noisy data.

.... f ..............................

"._~..

° i

1

(a)

(b)

(c)

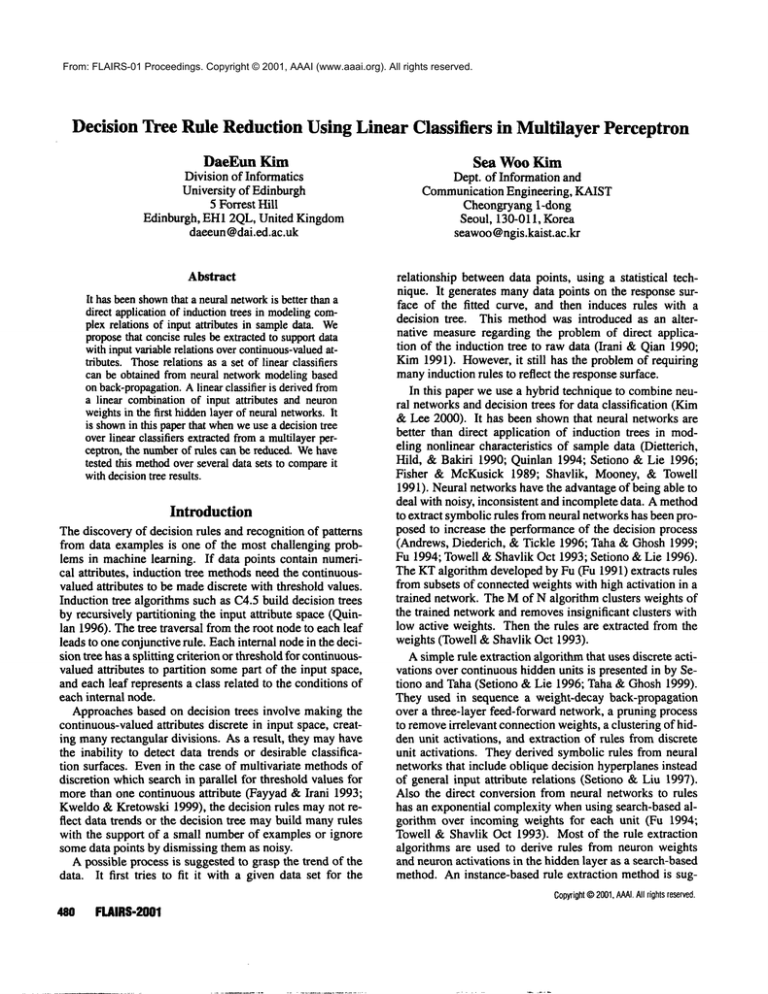

Figure 1: Example(a) data set and decision boundary(O

class 1, X : class 0) (b)-(c) neural networkfitting (d) data

with 900 points (Kim & Lee 2000)

We can see a simple example of undesirable rule extraction discovered in the induction tree application. Figure 1(a) displays a set of 29 original sample data with two classes. It appears that the set has four sections whose boundaries run from upper-left to lower-right. The set of dotted boundary lines is the result of multivariate classification by the induction tree. It has six rules to classify data points. Even in a C4.5 (Quinlan 1996) run, it has four rules with 6.9% error, making divisions with attribute y. The rules do not catch data clustering completely in this example. Figure 1(b)-(c) shows neural network fitting with the back-propagation method. In Figure 1(b)-(c) neural network nodes have slopes alpha = 1.5 and 4.0 for sigmoids, respectively. After curve fitting, 900 points were generated uniformly on the response surface for the mapping from input space to class, and the response values of the neural network were calculated as shown in Figure 1(d). The result of applying C4.5 to those 900 points followed the classification curves, but produced 55 rules. The production of many rules results from the fact that the decision tree makes piecewise rectangular divisions for each rule. This happens in spite of the fact that the response surface for data clustering has a correlation between the input variables.
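The resampling step described above, generating points uniformly over the input space and labelling them with the fitted model, can be sketched as follows. This is a minimal illustration only: `toy_model`, the input ranges and the function name `generate_grid_points` are assumptions made for the example and stand in for the trained network and the actual data range, which the paper does not list.

```python
def generate_grid_points(model, x_range, y_range, n_per_axis=30):
    """Sample a uniform n x n grid and label each point with the fitted model."""
    (x_lo, x_hi), (y_lo, y_hi) = x_range, y_range
    step_x = (x_hi - x_lo) / (n_per_axis - 1)
    step_y = (y_hi - y_lo) / (n_per_axis - 1)
    points = []
    for i in range(n_per_axis):
        for j in range(n_per_axis):
            x = x_lo + i * step_x
            y = y_lo + j * step_y
            points.append((x, y, model(x, y)))
    return points


def toy_model(x, y):
    # Hypothetical stand-in for the trained network: a single oblique boundary.
    return 1 if x + y > 5.0 else 0


# 30 x 30 = 900 labelled points; the ranges here are assumed for illustration.
samples = generate_grid_points(toy_model, (0.0, 5.0), (0.0, 5.0))
```

The labelled grid can then be handed to any induction tree learner in place of the original sparse sample.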

As shown above, the decision tree has a problem of overgeneralization for a small number of data and an overspecialization problem for a large number of data. A possible suggestion is to consider or derive relations between input variables as another attribute for rule extraction. However, it is difficult to find input variable relations for classification directly in supervised learning, while unsupervised methods can use statistical methods such as principal component analysis (Haykin 1999).

Method

The goal of our approach is to generate rules following the shape and characteristics of response surfaces. Usually induction trees cannot trace the trend of data, and they determine data clustering only in terms of input variables, unless we apply other relation factors or attributes. In order to improve classification rules from a large training data set, we allow input variable relations for multi-attributes in a set of rules.

Neural Network and Linear Classifiers

We use a two-phase method for rule extraction over continuous-valued attributes. Given a large training set of data points, the first phase, as a feature extraction phase, is to train feed-forward neural networks with back-propagation and collect the weight set over input variables in the first hidden layer. A feature useful in inferring multi-attribute relations of data is found in the first hidden layer of neural networks. The extracted rules involving network weight values will reflect features of data examples and provide good classification boundaries. Also, they may be more compact and comprehensible, compared to induction tree rules.

In the second phase, as a feature combination phase, each extracted feature for a linear classification boundary is combined together using Boolean logic gates. In this paper, we use an induction tree to combine each linear classifier.

The highly nonlinear property of neural networks makes it difficult to describe how they reach predictions. Although their predictive accuracy is satisfactory for many applications, they have long been considered a complex model in terms of analysis. By using expert rules derived from neural networks, the neural network representation can be made more understandable.

It has been shown that a particular set of functions can be obtained with arbitrary accuracy by at most two hidden layers given enough nodes per layer (Cybenko 1988). Also one hidden layer is sufficient to represent any Boolean function (Hertz, Palmer, & Krogh 1991). Our neural network structure has two hidden layers, where the first hidden layer makes a local feature selection with linear classifiers and the second layer receives Boolean logic values from the first layer and maps any Boolean function. The second hidden layer and output layer can be thought of as a sum of the product of Boolean logic gates. The n-th output of neural networks for a set of data is

$$F_n = f\left(\sum_{k}^{N_2} w^{2}_{kn}\, f\left(\sum_{j}^{N_1} w^{1}_{jk}\, f\left(\sum_{i}^{N_0} w^{0}_{ij}\, a_i\right)\right)\right)$$

After training data patterns with a neural network by back-propagation, we can have linear classifiers in the first hidden layer.
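The nested form of the network output can be sketched as a plain forward pass. This is a minimal sketch under stated assumptions: the weight layout `weights[i][j]` (input i to node j), the absence of separate bias terms, and the names `layer` and `forward` are choices made for the example, not details given in the paper.

```python
import math


def sigmoid(x, alpha=1.0):
    """Sigmoid with slope alpha, written to avoid overflow for large |alpha * x|."""
    z = alpha * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def layer(inputs, weights, alpha):
    """One layer: node j receives sum_i inputs[i] * weights[i][j]."""
    n_out = len(weights[0])
    return [
        sigmoid(sum(a * weights[i][j] for i, a in enumerate(inputs)), alpha)
        for j in range(n_out)
    ]


def forward(a, w0, w1, w2, alpha=1.0):
    """Inputs -> first hidden layer -> second hidden layer -> outputs."""
    h1 = layer(a, w0, alpha)      # linear classifiers live here
    h2 = layer(h1, w1, alpha)
    return layer(h2, w2, alpha)


# Illustrative weights only: 2 inputs, 3 and 2 hidden nodes, 1 output.
w0 = [[0.5, -1.0, 0.2], [1.5, 0.3, -0.7]]
w1 = [[1.0, -1.0], [0.5, 0.5], [-0.3, 0.8]]
w2 = [[1.2], [-0.4]]
output = forward([1.0, 2.0], w0, w1, w2, alpha=4.0)
```

A bias can be modelled in this layout by appending a constant input of 1 to each layer's inputs.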

For a node in the first hidden layer, the activation is defined as $H_j = f\left(\sum_{i}^{N_0} a_i W_{ij}\right)$ for the j-th node, where $N_0$ is the number of input attributes, $a_i$ is an input, and $f(x) = 1.0/(1.0 + e^{-\alpha x})$ is a sigmoid function. When we train neural networks with the back-propagation method, $\alpha$, the slope of the sigmoid function, is increased as iteration continues. If we have a high value of $\alpha$, the activation of each neuron is close to the property of digital logic gates, which have a binary value of 0 or 1.
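The effect of the slope on the activation can be checked numerically. The short sketch below evaluates the sigmoid at inputs just below and just above zero for several slopes; the slope values 1.5 and 4.0 are those quoted for Figure 1, while 50.0 is an assumed "high" value chosen only for illustration.

```python
import math


def sigmoid(x, alpha):
    """f(x) = 1 / (1 + exp(-alpha * x)), computed in an overflow-safe way."""
    z = alpha * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Activations on either side of the decision boundary x = 0.
for alpha in (1.5, 4.0, 50.0):
    low = sigmoid(-0.5, alpha)
    high = sigmoid(0.5, alpha)
    print(f"alpha={alpha}: f(-0.5)={low:.4f}, f(0.5)={high:.4f}")
```

As the slope grows, the two printed values move toward 0 and 1, which is what allows the later layers to be read as logic gates.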

Except for the first hidden layer, we can replace each neuron by logic gates if we assume we have a high slope for the sigmoid function. Input to each neuron in the first hidden layer is represented as a linear combination of input attributes and weights, $\sum_{i}^{N_0} a_i W_{ij}$. This forms linear classifiers for data classification as a feature extraction over data distribution.

When the Figure 1(a) data is trained, we can introduce new attributes aX + bY + c, where a, b, c are constants. We use two hidden layers with 4 nodes and 3 nodes, respectively, where every neuron node has a high sigmoid slope to guarantee desirable linear classifiers as shown in Figure 1(c). We transformed 900 data points in Figure 1(d) into four linear classifier data points, and then we added the classifier attributes {L1, L2, L3, L4} to the original attributes x, y. The induction tree algorithm used those six attributes {x, y, L1, L2, L3, L4} as its input attributes. Then we could obtain only four rules with C4.5, while a simple application of C4.5 to those data generated 55 rules. The rules are given as follows:

rule 1 : if (1.44x + 1.73y <= 5.98), then class

rule 2 : if (1.44x + 1.73y > 5.98) and (1.18x + 2.81y <= 12.37), then class

rule 3 : if (1.44x + 1.73y > 5.98) and (1.18x + 2.81y > 12.37) and (0.53x + 2.94y <= 14.11), then class

rule 4 : if (1.44x + 1.73y > 5.98) and (1.18x + 2.81y > 12.37) and (0.53x + 2.94y > 14.11), then class
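The four rules form a nested sequence of oblique tests, so they can be written as a short cascade. The sketch below returns the index of the matching rule rather than a class, because the class labels of the rules are not legible in this copy. The function name `matching_rule` is hypothetical, and the third test is treated as `<=` so that rules 3 and 4 split the remaining region without a gap, which is an assumption.

```python
def matching_rule(x, y):
    """Return the index (1 to 4) of the rule whose conditions hold for (x, y)."""
    if 1.44 * x + 1.73 * y <= 5.98:
        return 1
    # From here on the first linear classifier is known to exceed 5.98.
    if 1.18 * x + 2.81 * y <= 12.37:
        return 2
    # Both earlier classifiers exceeded their thresholds.
    if 0.53 * x + 2.94 * y <= 14.11:
        return 3
    return 4


# A few sample points, one falling under each rule.
examples = [(0.0, 0.0), (3.0, 2.0), (2.0, 4.0), (2.0, 5.0)]
rule_indices = [matching_rule(x, y) for x, y in examples]
```

Each test uses two input attributes at once, which is why three comparisons suffice where axis-parallel splits needed many more.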

These linear classifiers exactly match the boundaries shown in Figure 1(c), and they are more dominant for classification in terms of entropy minimization than the set of original input attributes itself. Even if we include input attributes, the entropy measurement leads to a rule set with boundary equations. These rules are more meaningful than those of direct C4.5 application to raw data since their divisions show the trend of data clustering and how each attribute is correlated.
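Why an entropy-based splitter prefers a linear classifier attribute over the raw inputs can be illustrated on a toy grid. The sketch below uses plain information gain over binary threshold splits; C4.5 itself uses the gain ratio, so this is a simplified stand-in. The grid, the single boundary 1.44x + 1.73y = 5.98 used for labelling, and the names `entropy` and `best_gain` are assumptions made for the example.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_gain(values, labels):
    """Largest information gain over all binary splits of the form value <= t."""
    base = entropy(labels)
    n = len(labels)
    best = 0.0
    for t in sorted(set(values))[:-1]:  # skip the maximum: it leaves one side empty
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        gain = base - len(left) / n * entropy(left) - len(right) / n * entropy(right)
        best = max(best, gain)
    return best


# Toy data: a 13 x 13 grid labelled by one oblique boundary.
points = [(0.5 * i, 0.5 * j) for i in range(13) for j in range(13)]
l_vals = [1.44 * x + 1.73 * y for x, y in points]
labels = [1 if v > 5.98 else 0 for v in l_vals]

base = entropy(labels)
gain_x = best_gain([x for x, _ in points], labels)
gain_y = best_gain([y for _, y in points], labels)
gain_l = best_gain(l_vals, labels)
```

On this data the linear attribute reaches the full entropy of the labels with one threshold, while neither x nor y alone can.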

Linear Classifiers for Decision Trees

Induction trees can split any continuous value by selecting thresholds for given attributes, while they cannot derive relations of input attributes directly. Thus, before induction trees are applied to a given training set, we put new relation attributes consisting of linear classifiers in the training set, which are generated from the weight set in a neural network.

We can represent training data as a set of attribute column vectors. When we have linear classifiers extracted from a neural network, each linear classifier can be a column vector in a training set, where the vector size is equal to the number of the original training data. Each linear classifier becomes a new attribute in the training set. If we represent original input attribute vectors and neural network linear classifiers as U-vectors and L-vectors, respectively, then U-vectors, L-vectors, and {U + L}-vectors will form different sets of training data; each set of vectors is transformed from the same data. Those three vector sets were tested with several data sets in the UCI repository (Blake, Keogh, & Merz 1998) to compare the performance (Kim & Lee 2000).
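The three training sets can be assembled column-wise from the same rows. The sketch below is illustrative only: the weight layout `weights[i][j]` (input i to first-hidden-layer node j), the function names, and the sample weights are assumptions, and the learner that would consume these sets is not shown.

```python
def linear_attributes(row, weights):
    """Net input to each first-hidden-layer node: sum_i a_i * W[i][j]."""
    n_nodes = len(weights[0])
    return [sum(a * weights[i][j] for i, a in enumerate(row)) for j in range(n_nodes)]


def build_training_sets(rows, weights):
    """Return the U, L and {U + L} attribute sets derived from the same rows."""
    u_set = [list(r) for r in rows]                           # original inputs
    l_set = [linear_attributes(r, weights) for r in rows]     # linear classifiers
    ul_set = [u + l for u, l in zip(u_set, l_set)]            # both, side by side
    return u_set, l_set, ul_set


# Hypothetical first-layer weights for two inputs and two hidden nodes.
weights = [[1.44, 1.18],
           [1.73, 2.81]]
rows = [(1.0, 2.0), (3.0, 0.5)]
u_set, l_set, ul_set = build_training_sets(rows, weights)
```

Every set has one row per original example, so class labels can be attached unchanged to any of the three.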

It is believed that a compact set of attributes to represent the data set shows a better performance. Adding original input attributes does not improve the result, but makes its performance worse in most cases. C4.5 has difficulty in properly selecting the most significant attributes for a given set of data, because it chooses attributes with local entropy measurement and the method is not a global optimization of entropy. Also, especially when only linear classifiers from the neural network, L-vectors, are used, it is quite effective in reducing the number of rules (Kim & Lee 2000).

Generally many decision tree algorithms have much difficulty in feature extraction. When we add many unrelated features (attributes) to a training set for decision trees, it has a tendency to worsen performance. This is because the induction tree is based on a locally optimal entropy search. In this paper, a compact L-linear classifier method was tested. We used L-linear classifiers with fixed thresholds and variable thresholds.

In the linear classifier method with fixed thresholds, all instances in the training data are transformed into Boolean logic values through dichotomy of node activations in the first hidden layer; then the Boolean data are applied to the induction algorithm C4.5. In the linear classifier method with variable thresholds, a set of linear classifiers are taken as continuous-valued attributes. The C4.5 application over instances of linear classifiers will try to find the best splitting thresholds for discretization over each linear classifier attribute. In this case, each linear classifier attribute may have multiple thresholds to handle marginal boundaries of linear classifiers.
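The difference between the two variants lies only in what is handed to the tree learner. In the sketch below, the fixed-threshold variant cuts each activation at 0.5, which for a sigmoid is the same as testing whether the net input is positive; the cut at 0.5, the explicit `biases` list and the function names are assumptions made for the example.

```python
def net_inputs(row, weights, biases):
    """Net input of each first-hidden-layer node, including an assumed bias term."""
    return [
        sum(a * weights[i][j] for i, a in enumerate(row)) + biases[j]
        for j in range(len(biases))
    ]


def fixed_threshold_attributes(row, weights, biases):
    """Boolean attributes: 1 where the sigmoid activation would exceed 0.5."""
    # sigmoid(z) > 0.5 exactly when z > 0, so the sigmoid need not be evaluated.
    return [1 if z > 0 else 0 for z in net_inputs(row, weights, biases)]


def variable_threshold_attributes(row, weights, biases):
    """Continuous attributes: the tree learner picks its own split points."""
    return net_inputs(row, weights, biases)


# One hypothetical node built from the first rule's boundary 1.44x + 1.73y = 5.98.
weights = [[1.44], [1.73]]
biases = [-5.98]
fixed_a = fixed_threshold_attributes((0.0, 0.0), weights, biases)
fixed_b = fixed_threshold_attributes((3.0, 2.0), weights, biases)
variable_b = variable_threshold_attributes((3.0, 2.0), weights, biases)
```

With the continuous form, a point lying close to the boundary keeps its small margin as information, whereas the Boolean form discards it.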

| data | C4.5 train (%) | C4.5 test (%) | linear classifier train (%) | linear classifier test (%) |
|---|---|---|---|---|
| wine | 1.2 ± 0.1 | 7.9 ± 1.3 | 0.0 ± 0.0 | 3.6 ± 0.9 |
| iris | 1.9 ± 0.1 | 5.4 ± 0.7 | 0.7 ± 0.2 | 4.7 ± 1.5 |
| breast-w | 1.1 ± 0.1 | 4.7 ± 0.5 | 0.9 ± 0.2 | 4.4 ± 0.3 |
| ionosphere | 1.6 ± 0.2 | 10.4 ± 1.1 | 1.2 ± 0.2 | 8.8 ± 1.5 |
| pima | 15.1 ± 0.8 | 26.4 ± 0.9 | 15.8 ± 0.4 | 27.0 ± 0.9 |
| glass | 6.7 ± 0.4 | 32.0 ± 1.5 | 6.6 ± 0.8 | 33.6 ± 3.1 |
| bupa | 12.9 ± 1.5 | 34.5 ± 2.0 | 10.2 ± 1.1 | 33.7 ± 2.1 |

Table 1: Data classification errors in C4.5 and linear classifier method with variable thresholds (error rates in linear classifier method show the best result among several neural network experiments)

(a)

| nodes | neural network train (%) | neural network test (%) | variable T {L} # rules | fixed T {L} # rules |
|---|---|---|---|---|
| 4-3-3 | 0.8 ± 0.1 | 4.3 ± 1.5 | 3.8 ± 0.3 | 3.4 ± 0.2 |
| 4-5-3 | 0.5 ± 0.1 | 4.7 ± 1.1 | 3.9 ± 0.4 | 4.0 ± 0.5 |
| 4-7-3 | 0.5 ± 0.2 | 5.2 ± 1.1 | 3.9 ± 0.2 | 4.1 ± 0.4 |
| 4-10-3 | 0.4 ± 0.2 | 5.1 ± 1.2 | 3.9 ± 0.1 | 4.4 ± 0.6 |
| 4-3-3-3 | 0.7 ± 0.1 | 4.5 ± 1.1 | 3.7 ± 0.2 | 3.8 ± 0.2 |
| 4-5-4-3 | 0.6 ± 0.1 | 4.1 ± 1.3 | 4.0 ± 0.4 | 4.1 ± 0.2 |
| 4-7-4-3 | 0.5 ± 0.1 | 4.7 ± 0.9 | 3.9 ± 0.2 | 4.0 ± 0.4 |

(b)

| nodes | variable thresholds {L} train (%) | variable thresholds {L} test (%) | fixed thresholds {L} train (%) | fixed thresholds {L} test (%) |
|---|---|---|---|---|
| 4-3-3 | 0.7 ± 0.1 | 5.1 ± 1.3 | 1.7 ± 0.3 | 4.8 ± 1.4 |
| 4-5-3 | 0.7 ± 0.2 | 6.0 ± 1.7 | 1.8 ± 0.3 | 5.1 ± 1.2 |
| 4-7-3 | 0.7 ± 0.2 | 4.6 ± 1.1 | 1.5 ± 0.3 | 5.3 ± 1.1 |
| 4-10-3 | 0.8 ± 0.1 | 5.2 ± 1.6 | 1.4 ± 0.2 | 5.2 ± 1.4 |
| 4-3-3-3 | 0.9 ± 0.1 | 5.9 ± 1.4 | 2.6 ± 0.7 | 6.1 ± 1.7 |
| 4-5-4-3 | 0.7 ± 0.2 | 4.7 ± 1.5 | 2.0 ± 0.4 | 5.3 ± 1.2 |
| 4-7-4-3 | 0.8 ± 0.2 | 4.7 ± 1.0 | 2.2 ± 0.4 | 5.1 ± 1.0 |

Table 2: iris data classification result (a) neural network error rate and the number of rules with the linear classifier method (b) error rate in linear classifier method with variable thresholds and fixed thresholds

¯ --

(a)

(b)

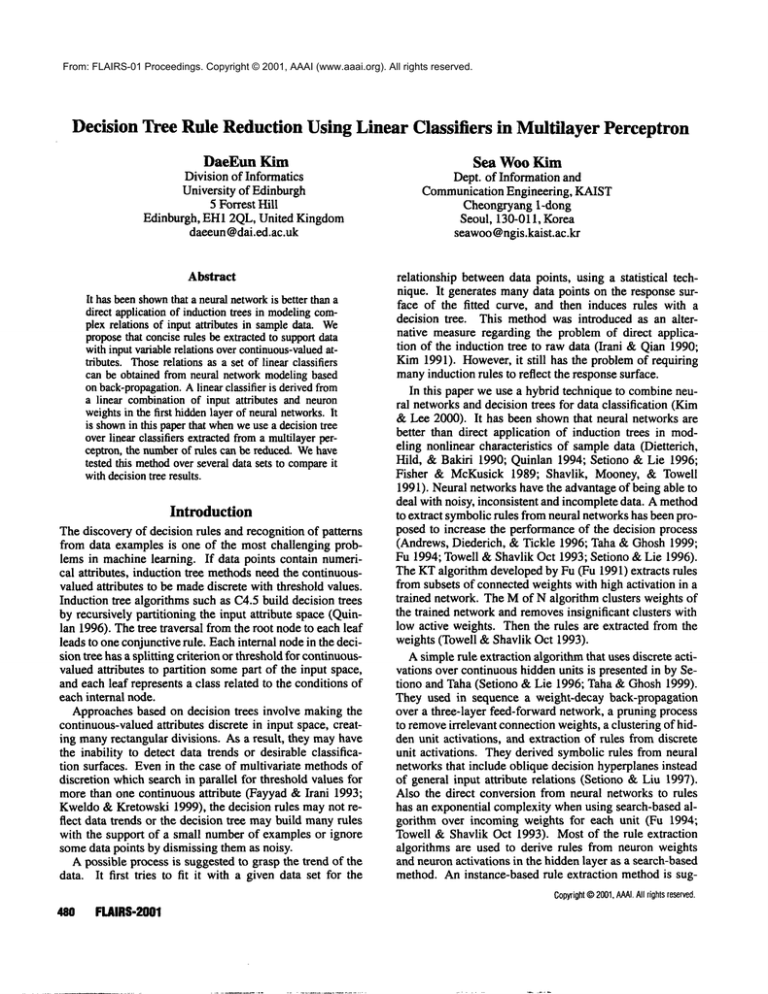

Figure 2: ComparisonbetweenC4.5 and linear classifier

method(a) averageerror rate in test data with C4.5, neural

network,andlinear classifier (b) the numberof rules with

C4.5 andlinear classifier method

Experiments

Our method has been tested on several sets of data in the UCI repository (Blake, Keogh, & Merz 1998). Figure 2(a) shows average classification error rates for C4.5, neural networks and the linear classifier method. Table 1 shows error rates to compare the pure C4.5 method and our linear classifier method. The error rates were estimated by running the complete 10-fold cross-validation ten times, and the average and the standard deviation for ten runs are given in the table. Several neural networks were tested for each data set. Tables 2 and 3 show examples of different neural networks and their linear classifier results.
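The evaluation protocol, ten repetitions of 10-fold cross-validation summarised by a mean and a standard deviation over the runs, can be sketched as below. The `evaluate` callback is hypothetical and stands for training a model on the training indices and returning its error rate on the held-out indices. Taking each run's error as the mean of its fold errors is an assumption, since the paper does not state how folds were aggregated.

```python
import random
import statistics


def k_fold_indices(n, k, rng):
    """Shuffle 0..n-1 and deal the indices into k folds of near-equal size."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]


def repeated_cv(n, evaluate, k=10, repeats=10, seed=0):
    """Return (mean, standard deviation) of the per-run error over all repeats."""
    rng = random.Random(seed)
    run_errors = []
    for _ in range(repeats):
        fold_errors = []
        for test_idx in k_fold_indices(n, k, rng):
            held_out = set(test_idx)
            train_idx = [i for i in range(n) if i not in held_out]
            fold_errors.append(evaluate(train_idx, test_idx))
        run_errors.append(statistics.mean(fold_errors))
    return statistics.mean(run_errors), statistics.stdev(run_errors)


def dummy_evaluate(train_idx, test_idx):
    # Placeholder "error rate": the held-out share of the data, in percent.
    return 100.0 * len(test_idx) / (len(train_idx) + len(test_idx))


mean_error, std_error = repeated_cv(150, dummy_evaluate)
```

In practice `dummy_evaluate` would be replaced by a function that fits the network, extracts the linear classifiers, grows the tree and scores it on the held-out fold.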

Our methods using linear classifiers are better than C4.5 in some sets and worse in other data sets such as glass and pima, which are hard to predict even with a neural network. The result supports the fact that the methods greatly depend on neural network training. If neural network fitting is not correct, then the fitting errors may mislead the result of linear classifier methods. Normally, the C4.5 application shows a very high error rate for training data, as in Table 1. The neural network can improve training performance by increasing the number of nodes in the hidden layers, as shown in Tables 2 and 3. However, this does not guarantee improved test set performance. In many cases, reducing errors in a training set tends to increase the error rate in a test set by overfitting.

The error rate difference between a neural network and the linear classifier method explains that some data points are located on marginal boundaries of classifiers. It is due to the fact that our neural network model uses sigmoid functions with high slopes instead of step functions. When activation is near 0.5, the weighted sum of activations may lead to different output classes. If the number of nodes in the first hidden layer is increased, this marginal effect becomes larger, as observed in Table 2 (see fixed thresholds).

(a)

| nodes | neural network train (%) | neural network test (%) | variable T {L} # rules | fixed T {L} # rules |
|---|---|---|---|---|
| 6-5-2 | 17.9 ± 1.1 | 31.9 ± 1.6 | 10.0 ± 1.3 | 7.2 ± 0.7 |
| 6-10-2 | 10.2 ± 1.1 | 32.8 ± 2.1 | 15.3 ± 1.7 | 16.3 ± 1.7 |
| 6-5-5-2 | 15.2 ± 0.9 | 32.8 ± 1.9 | 10.6 ± 1.3 | 9.4 ± 0.9 |
| 6-8-6-2 | 9.3 ± 0.7 | 32.1 ± 1.9 | 14.3 ± 2.1 | 19.5 ± 1.3 |
| 6-10-7-2 | 7.3 ± 1.4 | 32.7 ± 2.2 | 16.2 ± 1.7 | 24.4 ± 2.3 |

(b)

| nodes | variable thresholds {L} train (%) | variable thresholds {L} test (%) | fixed thresholds {L} train (%) | fixed thresholds {L} test (%) |
|---|---|---|---|---|
| 6-5-2 | 17.8 ± 1.5 | 32.8 ± 1.9 | 25.2 ± 1.9 | 34.1 ± 1.3 |
| 6-10-2 | 14.8 ± 1.4 | 33.7 ± 2.1 | 19.9 ± 1.5 | 36.2 ± 3.1 |
| 6-5-5-2 | 17.5 ± 1.0 | 33.6 ± 2.3 | 22.2 ± 1.1 | 34.3 ± 2.3 |
| 6-8-6-2 | 13.6 ± 1.5 | 32.5 ± 1.7 | 15.1 ± 0.6 | 34.0 ± 2.4 |
| 6-10-7-2 | 15.2 ± 1.3 | 32.7 ± 2.9 | 17.3 ± 0.7 | 32.7 ± 1.6 |

Table 3: bupa data classification result (a) neural network error rate and the number of rules with the linear classifier method (b) error rate in linear classifier method with variable thresholds and fixed thresholds

Figure 2(b) shows that the number of rules using our method is significantly smaller than that using conventional C4.5 in all the data sets. To reduce the number of rules, linear classifiers with the Boolean circuit model greatly depend on the number of nodes in the first hidden layer. The number of rules decreases when the number of nodes decreases in the first hidden layer, while the error rate performance is similar within some limit, regardless of the number of nodes. The linear classifier method with variable thresholds also depends on the number of nodes. The reason why the number of rules is proportional to the number of nodes is related to the search space of Boolean logic circuits. The linear classifier method with the Boolean circuit model often tends to generate rules that have a small number of support examples, while the variable threshold model prunes those rules by adjusting splitting thresholds in the decision tree. Two hidden layer neural networks are not significantly more effective in terms of error rates and the number of rules than one hidden layer, as shown in Tables 2 and 3. Thus, neural networks with one hidden layer may be enough for the UCI data sets.

Most of the data sets in the UCI repository have a small number of data examples relative to the number of attributes. A significant difference between a simple C4.5 application and a combination of C4.5 application and a neural network is not clearly seen in the UCI data in terms of error rate, unlike the synthetic data in Figure 1. Information about data trends or input relations can be described more definitely when many data examples are given relative to the number of attributes.

Tables 2 and 3 show that neural network classification is better than linear classifier applications. Even though linear classifier methods are good approximations to nonlinear neural network modeling in the experiments, we still need to reduce the gap between neural network training and linear classifier models. There is a trade-off between the number of rules and error rate performance. Determining the optimal number of rules for a given data set is left for future study.

Conclusions

This paper presents a hybrid method for constructing a decision tree from neural networks. Our method uses neural network modeling to find unseen data points, and then an induction tree is applied to data points for symbolic rules, using features from the neural network. The combination of neural networks and induction trees will compensate for the disadvantages of one approach alone. This method has advantages over a simple decision tree method. First, we can obtain good features for a classification boundary from neural networks by training input patterns. Second, because of feature extractions about input variable relations, we can obtain a compact set of rules to reflect input patterns.

We still have much work ahead, such as reducing the number of rules and error rate together, and finding the optimal number of linear classifiers.

References

Andrews, R.; Diederich, J.; and Tickle, A. 1996. A survey and critique of techniques for extracting rules from trained artificial neural networks. Knowledge-Based Systems 8(6).

Blake, C.; Keogh, E.; and Merz, C. 1998. UCI repository of machine learning databases. In Proceedings of the Fifth International Conference on Machine Learning.

Cybenko, G. 1988. Continuous valued neural networks with two hidden layers are sufficient. Technical report, Department of Computer Science, Tufts University, Medford, MA.

Dietterich, T.; Hild, H.; and Bakiri, G. 1990. A comparative study of ID3 and backpropagation for English text-to-speech mapping. In Proceedings of the 1990 Machine Learning Conference, 24-31. Austin, TX.

Fayyad, U., and Irani, K. 1993. Multi-interval discretization of continuous-valued attributes for classification learning. In Proceedings of IJCAI'93, 1022-1027. Morgan Kaufmann.

Fisher, D., and McKusick, K. 1989. An empirical comparison of ID3 and backpropagation. In Proceedings of 11th International Joint Conference on AI, 788-793.

Fu, L. 1991. Rule learning by searching on adaptive nets. In Proceedings of the 9th National Conference on Artificial Intelligence, 590-595.

Fu, L. 1994. Neural Networks in Computer Intelligence. New York: McGraw-Hill.

Haykin, S. 1999. Neural networks: a comprehensive foundation. Upper Saddle River, N.J.: Prentice Hall, 2nd edition.

Hertz, J.; Palmer, R.; and Krogh, A. 1991. Introduction to the Theory of Neural Computation. Redwood City, Calif.: Addison Wesley.

Irani, K., and Qian, Z. 1990. Karsm: A combined response surface / knowledge acquisition approach for deriving rules for expert system. In TECHCON'90 Conference, 209-212.

Kim, D., and Lee, J. 2000. Handling continuous-valued attributes in decision tree using neural network modeling. In European Conference on Machine Learning, Lecture Notes in Artificial Intelligence 1810, 211-219. Springer Verlag.

Kim, D. 1991. Knowledge acquisition based on neural network modeling. Technical report, Directed Study, The University of Michigan, Ann Arbor.

Kwedlo, W., and Kretowski, M. 1999. An evolutionary algorithm using multivariate discretization for decision rule induction. In Proceedings of the Third European Conference on Principles of Data Mining and Knowledge Discovery, 392-397. Springer.

Quinlan, J. 1994. Comparing connectionist and symbolic learning methods. In Computational Learning Theory and Natural Learning Systems, 445-456. MIT Press.

Quinlan, J. 1996. Improved use of continuous attributes in C4.5. Journal of Artificial Intelligence Research (4):77-90.

Setiono, R., and Liu, H. 1996. Symbolic representation of neural networks. Computer 29(3):71-77.

Setiono, R., and Liu, H. 1997. Neurolinear: A system for extracting oblique decision rules from neural networks. In European Conference on Machine Learning, 221-233. Springer Verlag.

Shavlik, J.; Mooney, R.; and Towell, G. 1991. Symbolic and neural learning algorithms: An experimental comparison. Machine Learning 6(2):111-143.

Taha, I. A., and Ghosh, J. 1999. Symbolic interpretation of artificial neural networks. IEEE Transactions on Knowledge and Data Engineering 11(3):448-463.

Towell, G., and Shavlik, J. Oct. 1993. Extracting refined rules from knowledge-based neural networks. Machine Learning 13(1):71-101.