From: FLAIRS-01 Proceedings. Copyright © 2001, AAAI (www.aaai.org). All rights reserved.

A Comparison of Noise Handling Techniques

Choh Man Teng

cmteng@ai.uwf.edu

Institute for Human and Machine Cognition

University of West Florida

40 South Alcaniz Street, Pensacola FL 32501 USA

Abstract

Imperfections in data can arise from many sources. The quality of the data is of prime concern to any task that involves data analysis. It is crucial that we have a good understanding of data imperfections and the effects of various noise handling techniques. We study here a number of noise handling approaches, namely, robust algorithms that are tolerant of some amount of noise in the data, filtering that eliminates the noisy instances from the input, and polishing which corrects the noisy instances rather than removing them. We evaluated the performance of these approaches experimentally. The results indicated that in addition to the traditional approach of avoiding overfitting, both filtering and polishing can be viable mechanisms for reducing the negative effects of noise. Polishing in particular showed significant improvement over the other two approaches in many cases, suggesting that even though noise correction adds considerable complexity to the task, it also recovers information not available with the other two approaches.

Introduction

Imperfections in data can arise from many sources, for instance, faulty measuring devices, transcription errors, and transmission irregularities. Except in the most structured and synthetic environment, it is almost inevitable that there is some noise in any data we have collected. Data quality is crucial to any task that involves data analysis, and in particular in the domains of machine learning and knowledge discovery, where we have to deal with copious amounts of data. It is thus essential that we have a good understanding of data imperfections and the effects of various noise handling techniques.

We have identified three main approaches to coping with noise, namely, robust algorithms, filtering, and correction. In this paper we study these three approaches experimentally, using a representative method from each approach for evaluation. The effectiveness of the three methods is compared in the setting of classification.

Below we will first discuss the three general approaches to noise handling, and their respective advantages and disadvantages. We will describe one of the more novel approaches, data polishing, in more detail. Then we will outline the setup for experimentation, and report the results on predictive accuracy and size of the classifiers built by the three methods in our study. Additional observations are given in the last section.

Approaches to Noise Handling

Noise in a data set can be dealt with in three broad ways. We may leave the noise in, filter it out, or correct it. In the first approach, the data set is taken as is, with the noisy instances left in place. Algorithms that make use of the data are designed to be robust; that is, they can tolerate a certain amount of noise. This is typically accomplished by avoiding overfitting, so that the resulting classifier is not overly tuned to account for the noise. This approach is taken by, for example, c4.5 (Quinlan 1987) and CN2 (Clark & Niblett 1989).

In the second approach, the data is filtered before being used. Instances that are suspected of being noisy according to certain evaluation criteria are discarded (John 1995; Brodley & Friedl 1996; Gamberger, Lavrač, & Džeroski 1996). A classifier is then built using only the retained instances in the smaller but cleaner data set. Similar ideas can be found in robust regression and outlier detection techniques in statistics (Rousseeuw & Leroy 1987).

In the third approach, the noisy instances are identified, but instead of tossing them out, they are repaired by replacing the corrupted values with more appropriate ones. The corrected instances are then reintroduced into the data set. One such method, called polishing, has been investigated in (Teng 1999; 2000).

There are pros and cons to adopting any one of these approaches. Robust algorithms do not require preprocessing of the data, but a classifier built from a noisy data set may be less predictive and its representation may be less compact than it could have been if the data were not noisy. Filtering out the noisy instances from the data involves a tradeoff between the amount of information available for building the classifier and the amount of noise retained in the data set. Data polishing, when carried out correctly, would preserve the maximal information available in the data, approximating the noise-free ideal situation. The benefits are great, but so are the associated risks, as we may inadvertently introduce undesirable features into the data when we attempt to correct it.


We will first outline the basic methodology of polishing in the next section, and then describe the experimental setup for comparing these three approaches to noise handling.

Polishing

Machine learning methods such as the naive Bayes classifier traditionally assume that different components of a data set are (conditionally) independent. It has often been pointed out that this assumption is a gross oversimplification; hence the word "naive" (Mitchell 1997, for example). In many cases there is a definite relationship within the data; otherwise any effort to mine knowledge or patterns from the data would be ill-advised.

Polishing takes advantage of this interdependency between the components of a data set to identify the noisy elements and suggest appropriate replacements. Rather than utilizing the features only to predict the target concept, we can just as well turn the process around and utilize the target together with selected features to predict the value of another feature. This provides a means for identifying noisy elements together with their correct values. Note that except for totally irrelevant elements, each feature would be at least related to some extent to the target concept, even if not to any other features.

The basic algorithm of polishing consists of two phases: prediction and adjustment. In the prediction phase, elements in the data that are suspected of being noisy are identified together with a nominated replacement value. In the adjustment phase, we selectively incorporate the nominated changes into the data set. In the first phase, the predictions are carried out by systematically swapping the target and particular features of the data set, and performing a ten-fold classification using a chosen classification algorithm for the prediction of the feature values. If the predicted value of a feature in an instance is different from the stated value in the data set, the location of the discrepancy is flagged and recorded together with the predicted value. This information is passed on to the next phase, where we institute the actual adjustments.
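The prediction phase described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the study: the learner `train_1nn` is a hypothetical stand-in (1-nearest neighbour under Hamming distance) for the chosen classification algorithm, every other feature plus the class is used to predict each feature (the text only says "selected features"), and the names `k_folds` and `prediction_phase` are invented for this example.

```python
def train_1nn(rows, labels):
    """Stand-in learner (an assumption, not c4.5): 1-NN under Hamming distance."""
    def predict(x):
        best = min(range(len(rows)),
                   key=lambda i: sum(a != b for a, b in zip(rows[i], x)))
        return labels[best]
    return predict


def k_folds(n, k=10):
    """Split the indices 0..n-1 into k interleaved folds."""
    return [list(range(f, n, k)) for f in range(k)]


def prediction_phase(data, train=train_1nn, k=10):
    """data: list of tuples with the class in the last position.

    Returns {(row_index, column): predicted_value} for every feature value
    that disagrees with its k-fold prediction.
    """
    n = len(data)
    flagged = {}
    for col in range(len(data[0]) - 1):
        # Swap roles: feature `col` becomes the target; the remaining
        # features together with the class become the inputs.
        inputs = [row[:col] + row[col + 1:] for row in data]
        outputs = [row[col] for row in data]
        for fold in k_folds(n, k):
            held_out = set(fold)
            train_idx = [i for i in range(n) if i not in held_out]
            predict = train([inputs[i] for i in train_idx],
                            [outputs[i] for i in train_idx])
            for i in fold:
                guess = predict(inputs[i])
                if guess != outputs[i]:
                    flagged[(i, col)] = guess  # discrepancy and nominated value
    return flagged
```

The returned dictionary only nominates changes. Some of the nominations can themselves be wrong, since the learner is trained on imperfect data.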

Since the polishing process itself is based on imperfect data, the predictions obtained in the first phase can contain errors as well. We should not indiscriminately incorporate all the nominated changes. Rather, in the second phase, the adjustment phase, we selectively adopt appropriate changes from those predicted in the first phase, using a number of strategies to identify the best combination of changes that would improve the fitness of a datum. We perform a ten-fold classification on the data, and the instances that are classified incorrectly are selected for adjustment. A set of changes to a datum is acceptable if it leads to a correct prediction of the target concept by all ten classifiers obtained from the ten-fold process.
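The acceptance criterion for a set of changes can be illustrated with a short sketch. The search strategy here (try smaller sets of changes first and stop at the first acceptable one) is an assumption made for illustration; the text only says that a number of strategies are used. The function name `adjust_instance` and the representation of classifiers as plain callables are likewise hypothetical.

```python
from itertools import combinations


def adjust_instance(row, candidates, classifiers):
    """Try to repair one misclassified instance.

    row: tuple with the class in the last position.
    candidates: {column: nominated_value} from the prediction phase.
    classifiers: callables mapping a feature tuple to a class, e.g. the
        ten classifiers from the ten-fold process.
    Returns the repaired row, or the original row if no set of changes
    is accepted.
    """
    features, target = row[:-1], row[-1]
    columns = sorted(candidates)
    for size in range(1, len(columns) + 1):  # fewer changes first (assumption)
        for subset in combinations(columns, size):
            trial = list(features)
            for col in subset:
                trial[col] = candidates[col]
            trial = tuple(trial)
            # Acceptable only if every classifier predicts the stated class.
            if all(clf(trial) == target for clf in classifiers):
                return trial + (target,)
    return row
```

Requiring agreement from all classifiers makes the adjustment conservative: a nominated change that only some of the classifiers support is left out.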

Further details of polishing can be found in (Teng 1999; 2000).

Experimental Setup

Below we report on an experimental study of three representative mechanisms of the noise handling approaches we have discussed, and compare their performance on a number of test data sets.

The basic learning algorithm we used is c4.5 (Quinlan 1993), the decision tree builder. Three noise handling mechanisms were evaluated in this study.

Robust: c4.5, with its built-in mechanisms for avoiding overfitting. These include, for instance, post-pruning, and stop conditions that prevent further splitting of a leaf node.

Filtering: Instances that have been misclassified by the decision tree built by c4.5 are discarded, and a new tree is built using the remaining data. This is similar to the approach taken in (John 1995).

Polishing: Instances that have been misclassified by the decision tree built by c4.5 are polished, and a new tree is built using the polished data, according to the mechanism described in the previous section.
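As an illustration of the filtering mechanism, the sketch below trains a model, discards the training instances it misclassifies, and retrains on the rest. The learner `train_stump` is a hypothetical stand-in for c4.5 (it predicts the majority class for each value of the first feature), and `filter_and_retrain` is a name invented for this example.

```python
from collections import Counter, defaultdict


def train_stump(rows, labels):
    """Stand-in learner (assumption): majority class per value of feature 0."""
    votes = defaultdict(Counter)
    for x, y in zip(rows, labels):
        votes[x[0]][y] += 1
    default = Counter(labels).most_common(1)[0][0]
    table = {v: c.most_common(1)[0][0] for v, c in votes.items()}
    return lambda x: table.get(x[0], default)


def filter_and_retrain(rows, labels, train):
    """Drop the instances misclassified by a first model, then retrain."""
    first = train(rows, labels)
    kept = [(x, y) for x, y in zip(rows, labels) if first(x) == y]
    kept_rows = [x for x, _ in kept]
    kept_labels = [y for _, y in kept]
    return train(kept_rows, kept_labels), kept_rows, kept_labels
```

A learner that memorizes its training data would misclassify nothing and filter nothing, so this scheme relies on the first model being pruned or otherwise kept from overfitting.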

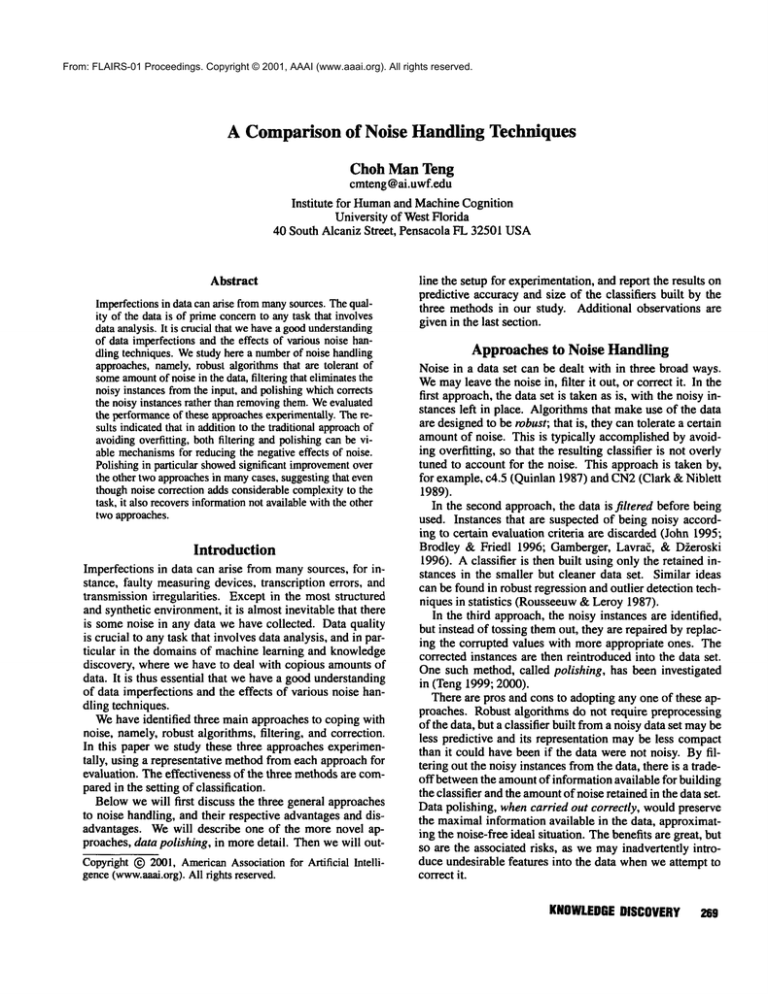

Twelve data sets from the UCI Repository of machine learning databases (Murphy & Aha 1998) were used. These are shown in Table 1. The training data was artificially corrupted by introducing random noise into both the attributes and the class. A noise level of z% means that the value of each attribute and the target class is assigned a random value z% of the time, with each alternative value being equally likely to be selected.
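The corruption procedure can be sketched as below. This is an illustrative reading of the description, assuming that the replacement is drawn uniformly from the full domain of each position, so the original value may be drawn again. The function names and the explicit `domains` argument are assumptions of this example.

```python
import random


def corrupt(instances, domains, noise_level, rng):
    """Return a noisy copy of `instances`.

    instances: list of tuples (attribute values followed by the class).
    domains: one list of possible values per position in a tuple.
    noise_level: probability in [0, 1] that a value is redrawn.
    rng: a random.Random instance, passed in for reproducibility.
    """
    noisy = []
    for row in instances:
        new_row = []
        for value, domain in zip(row, domains):
            if rng.random() < noise_level:
                # Uniform redraw over the whole domain: the original value
                # can be selected again, leaving the cell unchanged.
                value = rng.choice(domain)
            new_row.append(value)
        noisy.append(tuple(new_row))
    return noisy


def actual_noise(clean, noisy):
    """Fraction of cells whose value differs from the clean data."""
    cells = sum(len(row) for row in clean)
    changed = sum(a != b for r, s in zip(clean, noisy) for a, b in zip(r, s))
    return changed / cells


def instances_with_noise(clean, noisy):
    """Fraction of instances with at least one changed value."""
    return sum(r != s for r, s in zip(clean, noisy)) / len(clean)
```

Comparing `actual_noise` against the nominal `noise_level` on any data set shows the gap between the advertised and the realized corruption.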

The actual percentages of noise in the data sets are given in the columns under "Actual Noise" in Table 1. These values are never higher, and in almost all cases lower, than the advertised z%, since the original noise-free value could be selected as the random replacement as well. Also shown in Table 1 are the percentages of instances with at least one corrupted value. Note that even at fairly low noise levels, the majority of instances contain some amount of noise.
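A short calculation makes both observations concrete. Assuming a uniform redraw over a domain of k values, a redrawn value differs from the original with probability 1 - 1/k, so the expected actual noise at a position is z(1 - 1/k). An instance stays clean only if every one of its positions stays clean. The helper names below are hypothetical, and the calculation assumes positions are corrupted independently.

```python
def expected_actual_noise(z, domain_sizes):
    """Expected fraction of changed cells at nominal noise level z (0..1).

    domain_sizes: number of possible values for each attribute and the class.
    """
    per_position = [z * (1 - 1 / k) for k in domain_sizes]
    return sum(per_position) / len(per_position)


def expected_noisy_instances(z, domain_sizes):
    """Expected fraction of instances with at least one changed value."""
    p_clean = 1.0
    for k in domain_sizes:
        p_clean *= 1 - z * (1 - 1 / k)
    return 1 - p_clean
```

For binary positions the expected actual noise is half the nominal level, and the fraction of affected instances rises quickly with the number of attributes, which matches the pattern reported in Table 1.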

Results

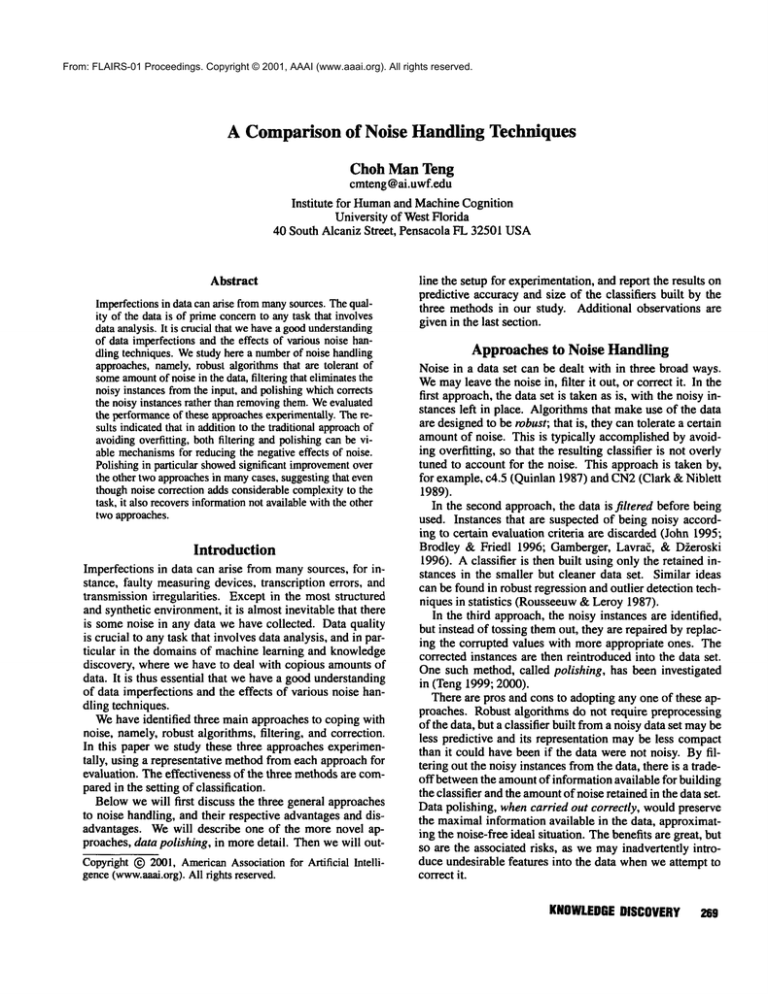

We performed a ten-fold cross validation on each data set, using the above three methods (robust, filtering, and polishing) in turn to obtain the classifiers. In each trial, nine parts of the data were used for training, and the remaining one part was held for testing. We compared the classification accuracy and size of the decision trees built. The results are summarized in Tables 2 and 3.
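The evaluation loop can be sketched as follows. This is a generic k-fold cross validation, assuming interleaved folds and at least k instances; how the folds were actually drawn is not stated in the text. The `train` argument is a hypothetical hook that takes training rows and labels and returns a prediction function, so any noise handling step (filtering or polishing) would be applied inside it, on the training part only.

```python
from statistics import mean, stdev


def cross_validate(rows, labels, train, k=10):
    """Return (mean accuracy, standard deviation) over k folds.

    Requires len(rows) >= k so that no test fold is empty.
    """
    n = len(rows)
    accuracies = []
    for f in range(k):
        test_idx = list(range(f, n, k))  # one part held out for testing
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        model = train([rows[i] for i in train_idx],
                      [labels[i] for i in train_idx])
        correct = sum(model(rows[i]) == labels[i] for i in test_idx)
        accuracies.append(correct / len(test_idx))
    return mean(accuracies), stdev(accuracies)
```

The per-fold accuracies, rather than only their mean, are what a paired comparison between two methods needs.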

Table 2 shows the classification accuracy and standard deviation of trees obtained using the three methods. We compared the methods in pairs (robust vs. filtering; robust vs. polishing; filtering vs. polishing), and differences that are significant at the 0.05 level using a one-tailed paired t-test are marked with an "*". (An "*" indicates the latter method performed better than the former in the pair being compared; a "-*" denotes that the difference is "reverse": the former method performed significantly better than the latter.)
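The marking scheme can be sketched as below. It assumes that the pairs are the per-fold accuracies of the two methods over the same ten folds, which gives 9 degrees of freedom; the text does not state the pairing explicitly. The constant 1.833 is the one-tailed critical value of Student's t at the 0.05 level for 9 degrees of freedom, and the function names are hypothetical.

```python
from math import copysign, inf, sqrt
from statistics import mean, stdev

T_CRIT_DF9 = 1.833  # one-tailed, alpha = 0.05, 9 degrees of freedom


def paired_t(former, latter):
    """Paired t statistic for the differences latter - former."""
    diffs = [b - a for a, b in zip(former, latter)]
    m, sd = mean(diffs), stdev(diffs)
    if sd == 0:
        # All differences identical: no variation to test against.
        return 0.0 if m == 0 else copysign(inf, m)
    return m / (sd / sqrt(len(diffs)))


def mark(former, latter, t_crit=T_CRIT_DF9):
    """Return "*" if the latter is significantly better, "-*" if the
    former is significantly better, and "" otherwise."""
    t = paired_t(former, latter)
    if t > t_crit:
        return "*"
    if t < -t_crit:
        return "-*"
    return ""
```

With a different number of folds the critical value changes, so `t_crit` is left as a parameter.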

Of the three methods studied, we can establish a general ordering of the resulting predictive accuracy. Except for the nursery data set at the 0% noise level and the zoo data set at the 10% noise level, in all cases where there was a significant difference, polishing gave rise to a higher classification accuracy than filtering, and filtering gave rise to a higher classification accuracy than c4.5 alone. The results suggested that both filtering and polishing can be effective methods for dealing with imperfections in the data. In addition, we observed that polishing outperformed filtering in quite a number of cases in our experiments, suggesting that correcting the noisy instances can be of higher utility than simply identifying and tossing these instances out.

| Data Set | Noise Level | Actual Noise | Instances with Noise |
|---|---|---|---|
| audiology | 0% | 0.0% | 0.0% |
| audiology | 10% | 5.2% | 96.0% |
| audiology | 20% | 10.6% | 100.0% |
| audiology | 30% | 15.5% | 100.0% |
| audiology | 40% | 21.4% | 100.0% |
| car | 0% | 0.0% | 0.0% |
| car | 10% | 6.8% | 39.4% |
| car | 20% | 14.6% | 66.8% |
| car | 30% | 21.4% | 82.9% |
| car | 40% | 28.4% | 90.5% |
| LED-24 | 0% | 0.0% | 0.0% |
| LED-24 | 10% | 5.3% | 74.8% |
| LED-24 | 20% | 10.3% | 94.2% |
| LED-24 | 30% | 15.4% | 98.3% |
| LED-24 | 40% | 20.9% | 99.9% |
| lenses | 0% | 0.0% | 0.0% |
| lenses | 10% | 5.8% | 29.2% |
| lenses | 20% | 13.3% | 50.0% |
| lenses | 30% | 15.6% | 45.8% |
| lenses | 40% | 23.3% | 75.0% |
| lung cancer | 0% | 0.0% | 0.0% |
| lung cancer | 10% | 7.3% | 100.0% |
| lung cancer | 20% | 14.8% | 100.0% |
| lung cancer | 30% | 21.9% | 100.0% |
| lung cancer | 40% | 27.5% | 100.0% |
| mushroom | 0% | 0.0% | 0.0% |
| mushroom | 10% | 7.3% | 82.5% |
| mushroom | 20% | 14.8% | 97.4% |
| mushroom | 30% | 22.1% | 99.8% |
| mushroom | 40% | 29.5% | 100.0% |
| nursery | 0% | 0.0% | 0.0% |
| nursery | 10% | 6.9% | 47.4% |
| nursery | 20% | 13.9% | 74.2% |
| nursery | 30% | 20.8% | 87.7% |
| nursery | 40% | 27.9% | 94.7% |
| promoters | 0% | 0.0% | 0.0% |
| promoters | 10% | 7.6% | 97.2% |
| promoters | 20% | 14.3% | 100.0% |
| promoters | 30% | 22.0% | 100.0% |
| promoters | 40% | 29.9% | 100.0% |
| soybean | 0% | 0.0% | 0.0% |
| soybean | 10% | 5.6% | 85.7% |
| soybean | 20% | 11.5% | 96.2% |
| soybean | 30% | 17.0% | 99.6% |
| soybean | 40% | 22.9% | 100.0% |
| splice | 0% | 0.0% | 0.0% |
| splice | 10% | 7.4% | 98.9% |
| splice | 20% | 15.0% | 100.0% |
| splice | 30% | 22.5% | 100.0% |
| splice | 40% | 30.1% | 100.0% |
| vote | 0% | 0.0% | 0.0% |
| vote | 10% | 6.4% | 66.2% |
| vote | 20% | 12.9% | 89.2% |
| vote | 30% | 19.8% | 97.7% |
| vote | 40% | 26.0% | 99.8% |
| zoo | 0% | 0.0% | 0.0% |
| zoo | 10% | 5.4% | 63.4% |
| zoo | 20% | 11.8% | 87.1% |
| zoo | 30% | 15.6% | 98.0% |
| zoo | 40% | 20.0% | 100.0% |

Table 1: Noise characteristics of data sets at various noise levels.

Now let us look at the average size of the decision trees built from data processed by the three methods. The results are shown in Table 3. There is no clear trend as to which method performed the best, but in almost all cases, the smallest trees were given by either filtering or polishing. Which of the two methods worked better in terms of reducing the tree size seemed to be data-dependent. In about half of the data sets, polishing gave the smallest trees at all noise levels, while the results were more mixed for the other half of the data sets.

To some extent it is expected that both filtering and polishing would give rise to trees of a smaller size than plain c4.5. By eliminating or correcting the noisy instances, both of these methods strive to make the data more uniform. Thus, fewer nodes are needed to represent the cleaner data. In addition, the data sets obtained from filtering are smaller in size than both the corresponding original and polished data sets, as some of the instances have been eliminated in the filtering process. However, judging from the experimental results, this did not seem to pose a significant advantage for filtering.

Remarks

We studied experimentally the behaviors of three methods of coping with noise in the data. Our evaluation suggested that in addition to the traditional approach of avoiding overfitting, both filtering and polishing can be viable mechanisms for reducing the negative effects of noise. Polishing in particular showed significant improvement over the other two approaches in many cases. Thus, it appears that even though noise correction adds considerable complexity to the task, it also recovers information not available with the other two approaches.

One might wonder why we did not use as a baseline for evaluation the "perfectly filtered" data sets, namely, those data sets with all known noisy instances removed. (It is possible in this setting, since we added the noise into the data sets ourselves.) While such a data set would be perfectly clean, it would also be very small. Table 1 shows the percentages of instances with at least one noisy element. Even at the 10% noise level, in the majority of data sets more than 50% of the instances are noisy. This percentage grows very quickly to almost 100% as the noise level increases. Thus, we would have had very little data to work with if we had opted for a perfectly filtered data set. Ironically, however, we may end up in this situation if our filtering technique becomes too effective.

| Data Set | Noise Level | Robust (%) | Filtering (%) | Polishing (%) |
|---|---|---|---|---|
| audiology | 0% | 78.0 ± 7.7 | 77.5 ± 7.8 | 80.2 ± 7.0 |
| audiology | 10% | 73.0 ± 7.9 | 73.0 ± 7.8 | 73.0 ± 5.8 |
| audiology | 20% | 67.8 ± 8.0 | 67.7 ± 5.9 | 70.9 ± 5.2 |
| audiology | 30% | 54.8 ± 11.5 | 54.5 ± 11.5 | 61.6 ± 7.8 |
| audiology | 40% | 33.6 ± 11.7 | 42.5 ± 8.0 | 48.2 ± 5.7 |
| car | 0% | 93.2 ± 1.7 | 92.8 ± 1.6 | 92.9 ± 1.6 |
| car | 10% | 86.3 ± 2.6 | 86.3 ± 2.5 | 86.7 ± 2.9 |
| car | 20% | 83.6 ± 3.3 | 83.6 ± 3.2 | 84.3 ± 2.7 |
| car | 30% | 76.5 ± 2.3 | 76.5 ± 2.0 | 80.6 ± 2.5 |
| car | 40% | 74.5 ± 2.1 | 74.4 ± 2.2 | 77.1 ± 2.0 |
| LED-24 | 0% | 100.0 ± 0.0 | 100.0 ± 0.0 | 100.0 ± 0.0 |
| LED-24 | 10% | 100.0 ± 0.0 | 100.0 ± 0.0 | 100.0 ± 0.0 |
| LED-24 | 20% | 92.3 ± 4.3 | 95.5 ± 3.8 | 97.2 ± 2.2 |
| LED-24 | 30% | 76.2 ± 4.7 | 83.2 ± 3.9 | 90.0 ± 3.3 |
| LED-24 | 40% | 49.4 ± 5.9 | 53.8 ± 6.1 | 68.1 ± 5.3 |
| lenses | 0% | 83.3 ± 30.7 | 83.3 ± 30.7 | 86.7 ± 30.5 |
| lenses | 10% | 50.0 ± 24.7 | 50.0 ± 24.7 | 78.3 ± 31.7 |
| lenses | 20% | 55.0 ± 28.9 | 55.0 ± 28.9 | 73.3 ± 32.7 |
| lenses | 30% | 48.3 ± 32.9 | 48.3 ± 32.9 | 56.7 ± 41.6 |
| lenses | 40% | 58.3 ± 38.2 | 58.3 ± 38.2 | 60.0 ± 30.9 |
| lung cancer | 0% | 50.0 ± 23.9 | 47.5 ± 22.4 | 54.2 ± 31.0 |
| lung cancer | 10% | 30.8 ± 24.2 | 43.3 ± 31.1 | 46.7 ± 23.6 |
| lung cancer | 20% | 45.8 ± 37.5 | 39.2 ± 32.9 | 54.2 ± 37.1 |
| lung cancer | 30% | 57.5 ± 26.0 | 55.0 ± 27.7 | 55.0 ± 27.7 |
| lung cancer | 40% | 50.8 ± 16.9 | 56.7 ± 20.0 | 63.3 ± 24.8 |
| mushroom | 0% | 100.0 ± 0.0 | 100.0 ± 0.0 | 100.0 ± 0.0 |
| mushroom | 10% | 99.9 ± 0.1 | 99.9 ± 0.1 | 100.0 ± 0.1 |
| mushroom | 20% | 99.7 ± 0.4 | 99.7 ± 0.4 | 100.0 ± 0.1 |
| mushroom | 30% | 98.9 ± 0.6 | 98.9 ± 0.6 | 99.3 ± 0.6 |
| mushroom | 40% | 98.6 ± 0.5 | 98.6 ± 0.5 | 98.8 ± 0.5 |
| nursery | 0% | 97.0 ± 0.4 | 96.8 ± 0.4 | 96.8 ± 0.3 |
| nursery | 10% | 94.4 ± 0.4 | 94.5 ± 0.5 | 94.5 ± 0.4 |
| nursery | 20% | 90.9 ± 0.6 | 91.0 ± 0.6 | 91.2 ± 0.8 |
| nursery | 30% | 90.0 ± 0.7 | 90.0 ± 0.7 | 90.3 ± 1.0 |
| nursery | 40% | 87.4 ± 1.1 | 87.4 ± 1.1 | 88.1 ± 0.7 |
| promoters | 0% | 75.6 ± 13.5 | 75.6 ± 13.5 | 77.5 ± 16.7 |
| promoters | 10% | 73.0 ± 12.5 | 73.0 ± 12.5 | 80.4 ± 10.4 |
| promoters | 20% | 65.9 ± 9.0 | 66.8 ± 8.2 | 78.5 ± 12.7 |
| promoters | 30% | 55.6 ± 10.3 | 59.3 ± 14.1 | 67.9 ± 15.0 |
| promoters | 40% | 55.6 ± 14.7 | 57.4 ± 14.7 | 58.5 ± 14.8 |
| soybean | 0% | 92.1 ± 2.0 | 91.8 ± 2.2 | 92.1 ± 1.8 |
| soybean | 10% | 86.2 ± 4.9 | 85.7 ± 4.7 | 88.7 ± 2.3 |
| soybean | 20% | 83.0 ± 3.3 | 82.5 ± 4.6 | 85.8 ± 3.6 |
| soybean | 30% | 72.2 ± 6.1 | 76.7 ± 3.7 | 76.7 ± 4.4 |
| soybean | 40% | 50.7 ± 8.4 | 51.2 ± 5.5 | 55.4 ± 3.7 |
| splice | 0% | 94.0 ± 1.3 | 94.1 ± 1.5 | 94.2 ± 1.4 |
| splice | 10% | 89.3 ± 1.8 | 89.6 ± 1.6 | 91.8 ± 1.5 |
| splice | 20% | 83.1 ± 2.1 | 83.5 ± 1.7 | 88.3 ± 1.5 |
| splice | 30% | 73.1 ± 4.0 | 73.1 ± 3.2 | 83.8 ± 2.6 |
| splice | 40% | 61.6 ± 2.9 | 63.9 ± 2.2 | 72.3 ± 3.0 |
| vote | 0% | 94.7 ± 2.0 | 94.7 ± 2.0 | 94.7 ± 2.5 |
| vote | 10% | 94.7 ± 2.5 | 94.7 ± 2.5 | 95.2 ± 3.0 |
| vote | 20% | 94.0 ± 2.1 | 93.6 ± 1.7 | 95.4 ± 2.3 |
| vote | 30% | 92.9 ± 3.2 | 92.7 ± 2.9 | 90.6 ± 5.5 |
| vote | 40% | 92.4 ± 3.1 | 92.4 ± 3.1 | 92.8 ± 3.9 |
| zoo | 0% | 92.2 ± 7.2 | 92.2 ± 7.2 | 93.1 ± 6.4 |
| zoo | 10% | 91.2 ± 8.1 | 88.3 ± 9.3 | 95.2 ± 7.7 |
| zoo | 20% | 83.2 ± 7.8 | 84.2 ± 6.5 | 85.2 ± 9.1 |
| zoo | 30% | 77.4 ± 11.4 | 79.3 ± 9.2 | 86.2 ± 4.7 |
| zoo | 40% | 78.3 ± 11.5 | 80.3 ± 9.8 | 87.1 ± 7.8 |

Table 2: Classification accuracy with standard deviation. An "*" indicates a significant improvement of the latter method over the former at the 0.05 level. A "-*" indicates a "reverse" significant difference: the former method performed better than the latter. The per-row markers in the Significant Difference columns (Robust/Filtering, Robust/Polishing, Filtering/Polishing) are not legible in this copy.

| Data Set | Noise Level | Robust | Filtering | Polishing |
|---|---|---|---|---|
| audiology | 0% | 50.5 | 45.4 | 47.0 |
| audiology | 10% | 66.6 | 44.3 | 56.2 |
| audiology | 20% | 90.3 | 58.8 | 90.9 |
| audiology | 30% | 125.0 | 76.8 | 121.6 |
| audiology | 40% | 143.3 | 89.3 | 130.1 |
| car | 0% | 173.4 | 169.1 | 169.1 |
| car | 10% | 108.5 | 105.1 | 102.1 |
| car | 20% | 103.7 | 104.0 | 102.7 |
| car | 30% | 88.8 | 81.7 | 104.0 |
| car | 40% | 41.6 | 41.3 | 61.2 |
| LED-24 | 0% | 19.0 | 19.0 | 19.0 |
| LED-24 | 10% | 78.2 | 69.4 | 34.8 |
| LED-24 | 20% | 193.4 | 134.6 | 85.6 |
| LED-24 | 30% | 335.8 | 231.0 | 162.4 |
| LED-24 | 40% | 490.8 | 347.2 | 317.8 |
| lenses | 0% | 6.4 | 6.4 | 5.0 |
| lenses | 10% | 4.2 | 4.0 | 6.7 |
| lenses | 20% | 3.5 | 3.5 | 3.4 |
| lenses | 30% | 3.8 | 3.8 | 7.3 |
| lenses | 40% | 3.9 | 3.9 | 4.5 |
| lung cancer | 0% | 19.0 | 15.0 | 14.2 |
| lung cancer | 10% | 20.6 | 19.0 | 23.0 |
| lung cancer | 20% | 15.8 | 15.4 | 15.4 |
| lung cancer | 30% | 12.2 | 11.0 | 11.8 |
| lung cancer | 40% | 16.6 | 15.0 | 16.2 |
| mushroom | 0% | 30.6 | 30.6 | 30.6 |
| mushroom | 10% | 214.3 | 207.2 | 109.9 |
| mushroom | 20% | 268.3 | 244.8 | 124.6 |
| mushroom | 30% | 345.0 | 325.5 | 129.3 |
| mushroom | 40% | 535.2 | 519.2 | 286.4 |
| nursery | 0% | 508.4 | 473.3 | 464.1 |
| nursery | 10% | 315.3 | 304.5 | 286.4 |
| nursery | 20% | 177.0 | 167.6 | 149.5 |
| nursery | 30% | 174.8 | 164.9 | 133.6 |
| nursery | 40% | 258.0 | 235.3 | 212.6 |
| promoters | 0% | 21.4 | 12.2 | 21.4 |
| promoters | 10% | 21.8 | 12.2 | 21.8 |
| promoters | 20% | 28.2 | 15.0 | 28.2 |
| promoters | 30% | 32.2 | 29.0 | 17.4 |
| promoters | 40% | 34.6 | 32.6 | 34.2 |
| soybean | 0% | 94.1 | 88.7 | 91.2 |
| soybean | 10% | 157.9 | 131.6 | 162.7 |
| soybean | 20% | 202.5 | 146.7 | 189.2 |
| soybean | 30% | 278.5 | 184.0 | 218.7 |
| soybean | 40% | 328.7 | 209.2 | 289.4 |
| splice | 0% | 171.8 | 168.2 | 156.2 |
| splice | 10% | 318.2 | 294.6 | 120.6 |
| splice | 20% | 537.4 | 512.2 | 233.4 |
| splice | 30% | 836.2 | 793.4 | 335.4 |
| splice | 40% | 1143.0 | 1036.6 | 680.2 |
| vote | 0% | 14.5 | 14.5 | 5.8 |
| vote | 10% | 17.8 | 17.2 | 13.6 |
| vote | 20% | 20.8 | 19.0 | 11.8 |
| vote | 30% | 43.3 | 38.5 | 24.7 |
| vote | 40% | 27.1 | 27.1 | 19.6 |
| zoo | 0% | 17.8 | 17.6 | 16.2 |
| zoo | 10% | 24.2 | 21.2 | 21.8 |
| zoo | 20% | 19.8 | 20.6 | 22.2 |
| zoo | 30% | 30.2 | 21.2 | 30.0 |
| zoo | 40% | 35.4 | 25.8 | 30.0 |

Table 3: Average size of the pruned decision trees

While we have evaluated the performance of the noise handling methods against each other, there is no reason why we cannot combine these methods in practice. The different methods address different aspects of data imperfections, and the combined mechanism may be able to tackle noise more effectively than any of the individual component mechanisms alone. However, first we need to achieve a better understanding of the behaviors of these mechanisms before we can utilize them properly.

References

Brodley, C. E., and Friedl, M. A. 1996. Identifying and eliminating mislabeled training instances. In Proceedings of the Thirteenth National Conference on Artificial Intelligence.

Clark, P., and Niblett, T. 1989. The CN2 induction algorithm. Machine Learning 3(4):261-283.

Gamberger, D.; Lavrač, N.; and Džeroski, S. 1996. Noise elimination in inductive concept learning: A case study in medical diagnosis. In Proceedings of the Seventh International Workshop on Algorithmic Learning Theory, 199-212.

John, G. H. 1995. Robust decision trees: Removing outliers from databases. In Proceedings of the First International Conference on Knowledge Discovery and Data Mining, 174-179.

Mitchell, T. M. 1997. Machine Learning. McGraw-Hill.

Murphy, P. M., and Aha, D. W. 1998. UCI repository of machine learning databases. University of California, Irvine, Department of Information and Computer Science. www.ics.uci.edu/~mlearn/MLRepository.html.

Quinlan, J. R. 1987. Simplifying decision trees. International Journal of Man-Machine Studies 27(3):221-234.

Quinlan, J. R. 1993. C4.5: Programs for Machine Learning. Morgan Kaufmann.

Rousseeuw, P. J., and Leroy, A. M. 1987. Robust Regression and Outlier Detection. John Wiley & Sons.

Teng, C. M. 1999. Correcting noisy data. In Proceedings of the Sixteenth International Conference on Machine Learning, 239-248.

Teng, C. M. 2000. Evaluating noise correction. In Lecture Notes in Artificial Intelligence: Proceedings of the Sixth Pacific Rim International Conference on Artificial Intelligence. Springer-Verlag.
