

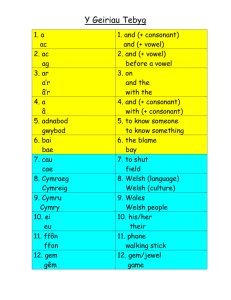

SUBSPACE BASED VOWEL-CONSONANT SEGMENTATION

R. Muralishankar¹, A. Vijaya Krishna² and A. G. Ramakrishnan¹

¹Department of Electrical Engineering, ²Department of Electrical Communication Engineering
Indian Institute of Science, Bangalore-560012, INDIA.
sripad, ramkiag@ee.iisc.ernet.in, vkrishna@protocol.ece.iisc.ernet.in

ABSTRACT

In our (knowledge-based) synthesis system [1], we use single instances of basic-units, which are polyphones such as CV, VC, VCV, VCCV and VCCCV, where C stands for consonant and V for vowel. These basic-units are recorded in isolation from a speaker, not extracted from continuous speech or carrier words. The pitch, amplitude and duration of the basic-units must be modified in our speech synthesis system [1] so that the overall characteristics of the concatenated units match the true characteristics of the target word or sentence. Duration modification is carried out on the vowel parts of the basic-unit, leaving the consonant portion intact. Thus, we need to segment these polyphones into consonant and vowel parts. When the consonant in a basic-unit is a plosive or fricative, an energy based method is good enough to separate the vowel and consonant parts. However, this method fails when there is co-articulation between the vowel and the consonant. We propose the use of oriented principal component analysis (OPCA) to segment the co-articulated units. The test feature vectors (LPC-Cepstrum and Mel-Cepstrum) are projected onto the consonant and vowel subspaces. Each of these subspaces is represented by generalized eigenvectors obtained by applying OPCA to the training feature vectors. Our approach successfully segments co-articulated basic-units.

1. INTRODUCTION

For the purpose of synthesis, speech often needs to be segmented into phonetic units. Manual segmentation is tedious, time consuming and error prone.
Because human visual and acoustic perception varies, manual segmentation results are almost impossible to reproduce. Hence manual segmentation is inherently inconsistent. Automatic segmentation is not faultless, but it is inherently consistent and its results are reproducible. Ideally, one would like an automatic segmentation method that can handle basic-units uttered by different speakers.

There are two broad categories of speech segmentation [2], namely implicit and explicit. Implicit methods split up the utterance without explicit information, such as the phonetic transcription, and are based on the definition of a segment as a spectrally stable part of a signal. In [2], a segment is defined as a number of consecutive frames whose spectra are similar. Here, the normalized correlation $C_{i,j}$ between the LPC smoothed log-amplitude spectra of the $i$th and $j$th frames in the utterance is used as the measure of similarity. If the correlation equals 1, then the $i$th and $j$th frames are identical. A heuristically chosen threshold value defines the beginning and end of the significant part of the correlation curve. This threshold defines the extent to which the correlation is allowed to drop within one segment. Explicit segmentation methods split up the utterance into segments that are defined by the phonetic transcription. In general, explicit methods have the disadvantage that the reference patterns need to be generated before the method can be used [2]. The results obtained in [2] show that the explicit scheme is less accurate than the implicit one. Finally, a combination of the two methods is proposed in [2].

0-7803-7997-7/03/$17.00 © 2003 IEEE

1.1. Motivation for OPCA based Segmentation

In our synthesis scheme, concatenation is always performed across identical vowels. Changes in duration, pitch and amplitude are obtained by processing the vowel parts only.
Thus, the segmentation of basic-units into vowel and consonant parts is needed to keep the consonant portion of the waveform intact. Plosives, affricates and fricatives share the property of low energy when compared with any of the vowels. Figure 1 shows the performance of energy based segmentation for plosive and co-articulated basic-units. As shown in Figs. 1(a) and (b), accurate segmentation is obtained for non co-articulated units, but not for co-articulated basic-units. The true consonant part /y/ in the signal /eyo/ is shown in Fig. 1(b) with the boundaries dotted.

In our implicit approach, we avoid thresholding an intermediate result to obtain the V and C boundaries. We collect an ensemble of feature vectors of length $N$ corresponding to different vowels and obtain the $N \times N$ vowel covariance matrix $C_v$. Similarly, we obtain the consonant covariance matrix $C_c$. The generalized eigenvectors (GEV) of $C_v$ and $C_c$ are arranged in decreasing order of eigenvalues. The GEV corresponding to $C_v$ and $C_c$ are used to project the feature vectors of a given basic-unit onto the vowel and consonant subspaces, and the projection norms are evaluated. This approach is effective for co-articulated basic-units.

2. FEATURE TRANSFORMATION

For an individual vowel or consonant, techniques such as LPC exist to model its statistical properties. While segmenting the vowel part of a basic-unit, we can consider the vowel information (VI) in the feature vectors as the signal and the consonant information (CI) as noise. Similarly, when segmentation of the consonant part is required, we can view CI as signal and VI as noise. We present a linear feature transformation that aims at finding a subspace of the feature space in which the signal-to-noise ratio (SNR) is maximum. Such a decomposition can be arrived at by representing VI and CI by training vectors obtained by manual segmentation of basic-units extracted from the database we collected for our Tamil synthesis system.
The directions in the feature space where the SNR is maximum can be obtained by the generalized eigenvalue decomposition of the covariance matrices of the above vectors. Consider a linear transformation matrix $W$ that maps the original feature vectors $x$ onto $y$:

$$y = W^T x \qquad (1)$$

where $x$ is an $n$-dimensional vector, $y$ is an $m$-dimensional vector, $m \le n$, and $W$ is an $n \times m$ matrix with $m$ linearly independent columns. Let $d_v$ and $d_c$ represent the training vectors containing VI and CI, respectively, in the original feature space. The covariance matrices for these training vectors can be written as

$$C_v = E\left[(d_v - \bar{d}_v)(d_v - \bar{d}_v)^T\right], \qquad C_c = E\left[(d_c - \bar{d}_c)(d_c - \bar{d}_c)^T\right] \qquad (2)$$

where $\bar{d}_v$ and $\bar{d}_c$ represent the means of $d_v$ and $d_c$, respectively. We wish to find a $W$ that maximizes the ratio of the variance of VI to that of CI after the transformation. If the density functions of $d_v$ and $d_c$ are assumed to be normally distributed, then the covariance matrices after the transformation are given by

$$\tilde{C}_v = W^T C_v W, \qquad \tilde{C}_c = W^T C_c W \qquad (3)$$

A simple measure of the variance of the "scatter" is the determinant of the covariance matrix [3]. Thus, the criterion function to be maximized is given by

[...]

Fig. 1. Energy based segmentation of (a) a plosive basic-unit and (b) the co-articulated signal /eyo/ (continuous vertical lines: segmentation using the energy based method).

[...] by $N$. In the transform domain, the convolution in Eq. 11 can be written as

$$X_k(e^{j\omega}) = X(e^{j\omega})\, H_k(e^{j\omega}) \qquad (13)$$

We know that the LPC-Cepstrum is the inverse Fourier transform of the logarithm of the all-pole LPC spectrum. Thus, when the input features $x(n)$ are the LPC-cepstral vectors, $X(e^{j\omega})$ represents the log spectrum.
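Eq. 13 involves the frequency response $H_k(e^{j\omega})$ of the $k$th filter. As an illustration only, the sketch below computes a magnitude response under the assumption that the filter taps are the entries of one column of $W$; the defining equation is not reproduced in this text, so that correspondence, the function name `filter_magnitude_response`, the FFT length and the example taps are all hypothetical.

```python
import numpy as np

def filter_magnitude_response(taps, n_fft=256):
    """Magnitude response in dB of a short FIR filter given by `taps`.

    Assumption: `taps` holds one column of the transformation matrix W,
    interpreted as filter coefficients along the feature index.
    """
    spectrum = np.fft.rfft(taps, n=n_fft)           # zero-padded one-sided DFT
    return 20.0 * np.log10(np.abs(spectrum) + 1e-12)  # small offset avoids log(0)

# Hypothetical 13-tap example: a moving average, which emphasizes low frequencies.
taps = np.full(13, 1.0 / 13.0)
response_db = filter_magnitude_response(taps)
print(response_db[:5])
```

Because only the magnitude is plotted, reversing the tap order (which depends on how the convolution is written) would not change the curve.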
Therefore, according to Eq. 13, the transformation process can be seen as a multiplication of the log spectrum by the frequency response of the filters $H_k(z)$. Since the transformation matrix is derived from the data (through generalized eigenvalue decomposition), the frequency responses of the filters corresponding to the largest eigenvalues indicate the relative importance of different frequency bands for basic-unit segmentation. The first four principal component filters for vowels and consonants are shown in Figs. 3 and 4, respectively. From Figs. 3 and 4, we see that the frequency responses of the first four principal filters indicate the relative importance of the mid-frequency region of the speech spectrum for vowels, and of the low and high frequency regions for consonants. In [5], it is demonstrated that the low and high frequency regions of the speech spectrum convey more speaker information than the mid-frequency regions. Combining our results with those of [5], we can say that the speech information in the mid-frequency region of the speech spectrum corresponds to vowel information. Similarly, the speaker information in the low and high frequency regions corresponds to consonant information. From this, we can conclude that the consonants carry more speaker information.

4. OPCA METHOD FOR V-C SEGMENTATION

The GEVVs and GEVCs are obtained by solving Eqs. 5 and 6. The test signal is divided into overlapping frames and the feature vector $x_k$ corresponding to the $k$th frame is obtained using LPCC or MCC. We evaluate the norm-contours as follows.

[...]

Fig. 3. Frequency responses of the first four principal filters for vowels.

$N_v$ and $N_c$ give the norm-contours from the V and C subspaces. Norms of the projections of the feature vectors (derived from the test basic-unit) on GEVV and GEVC give the norm-contours. One of them represents the vowel information and the other, the consonant information.
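The projection step can be sketched in a few lines. This is a minimal illustration on synthetic data, not the authors' implementation, and it rests on several assumptions: the vowel eigenvectors are taken to solve $C_v w = \lambda C_c w$ and the consonant eigenvectors $C_c w = \lambda C_v w$; each subspace keeps the `m` eigenvectors with the largest eigenvalues; eigenvectors are scaled to unit length; and the contour value is the Euclidean norm of the projection. All function and variable names are hypothetical.

```python
import numpy as np

def top_generalized_eigvecs(c_sig, c_noise, m):
    """m generalized eigenvectors of (c_sig, c_noise) with the largest eigenvalues.

    Solves c_sig w = lam * c_noise w by whitening with the Cholesky factor of c_noise.
    """
    chol = np.linalg.cholesky(c_noise)             # c_noise = chol @ chol.T
    chol_inv = np.linalg.inv(chol)
    whitened = chol_inv @ c_sig @ chol_inv.T       # ordinary symmetric eigenproblem
    lam, u = np.linalg.eigh(whitened)              # eigenvalues in ascending order
    order = np.argsort(lam)[::-1][:m]              # indices of the m largest
    w = chol_inv.T @ u[:, order]                   # map back to the original space
    return w / np.linalg.norm(w, axis=0)           # unit-length columns (assumption)

def norm_contours(frames, w_v, w_c):
    """Norm of each frame's projection on the vowel and consonant subspaces."""
    n_v = np.linalg.norm(frames @ w_v, axis=1)
    n_c = np.linalg.norm(frames @ w_c, axis=1)
    return n_v, n_c

def crossover_frames(n_v, n_c):
    """Frame indices k where n_v - n_c changes sign between k and k + 1."""
    sign = np.sign(n_v - n_c)
    return np.nonzero(sign[:-1] * sign[1:] < 0)[0]

# Synthetic stand-in for training data (not speech): "vowel" variance sits in
# dimensions 0-2 and "consonant" variance in dimensions 3-5 of 13-dim vectors.
rng = np.random.default_rng(0)
dim, m = 13, 3
scale_v = np.full(dim, 0.3); scale_v[0:3] = 3.0
scale_c = np.full(dim, 0.3); scale_c[3:6] = 3.0
c_v = np.cov(rng.normal(size=(2000, dim)) * scale_v, rowvar=False)
c_c = np.cov(rng.normal(size=(2000, dim)) * scale_c, rowvar=False)

w_v = top_generalized_eigvecs(c_v, c_c, m)         # vowel as signal, consonant as noise
w_c = top_generalized_eigvecs(c_c, c_v, m)         # consonant as signal, vowel as noise

# Toy VCV "unit": 20 vowel-like, 10 consonant-like, 20 vowel-like frames.
vowel_frame = np.zeros(dim); vowel_frame[0] = 3.0
cons_frame = np.zeros(dim); cons_frame[3] = 3.0
frames = np.vstack([np.tile(vowel_frame, (20, 1)),
                    np.tile(cons_frame, (10, 1)),
                    np.tile(vowel_frame, (20, 1))])

n_v, n_c = norm_contours(frames, w_v, w_c)
crossings = crossover_frames(n_v, n_c)
print(crossings)
```

On real speech the contours are noisy, so some smoothing or a rule for discarding spurious crossings would likely be needed; the source does not describe one, so none is shown here.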
The resulting norm-contours obtained for a test signal cross each other at the beginning and end of the consonant region of a given test basic-unit. The segmentation points are the ones where $N_v(k) = N_c(k)$. We found that optimum results were obtained when $M = 3$.

5. RESULTS AND DISCUSSION

Basic-unit segmentation experiments were conducted on a database collected from a female volunteer for our Kannada synthesis system. The database has isolated utterances of VC, CV, VCV, VCCV and VCCCV. GEVV and GEVC were obtained from our Tamil database recorded from a male volunteer. In all the Indian languages, diphthongs have distinct symbols and are grouped with, and referred to as, vowels. Our notations and analysis follow this system too. Feature vectors were obtained for each frame of a test basic-unit. The duration of each frame of speech was 30 ms, with an overlap of 20 ms between successive frames. Each frame of speech was Hamming windowed and processed to yield a 13-dimensional feature vector. For obtaining MCC, the Mel-scale was simulated using a set of 24 triangular filters and, for LPCC, a 12th order LPC analysis was performed after pre-emphasis with $a = 0.95$. We have seen in Fig. 1(b) that energy based segmentation fails to identify consonant regions in co-articulated basic-units.

Fig. 4. Frequency responses of the first four principal filters for consonants.

Fig. 5. (a) Speech signal /eyo/. (b) Its segmentation into vowel (/e/ and /o/) and consonant (/y/) regions, using both the vowel and consonant norm-contours. (c) Spectrogram of /eyo/.

Fig. 6. (a) Speech signal /aimii/. (b) Its segmentation into vowel (/ai/ and /ii/) and consonant (/m/) regions, using our algorithm. (c) Spectrogram of /aimii/.

Fig. 7. (a) Speech signal /aullo/. (b) Its segmentation into vowel (/au/ and /o/) and consonant (/ll/) regions, using vowel and consonant subspaces. (c) Spectrogram of /aullo/.

Results of our segmentation algorithm are shown in Figs. 5 to 8, along with the respective spectrograms. These test basic-units cover most of the classes of speech. For the same basic-unit shown in Fig. 1(b), the consonant region has been correctly identified (see Fig. 5) using our algorithm. Here, the consonant is a glide, /y/. In the basic-unit shown in Fig. 6, the vowel /ai/ is a diphthong and the consonant is a nasal. In Fig. 7, the consonant is a liquid, /ll/. Fig. 8 shows the segmentation of a VCCV basic-unit, where the consonants /y/ and /m/ are a glide and a nasal, respectively. The classes of speech considered here are difficult to segment because they exhibit strong co-articulation with the adjacent vowels. We found that the segmentation performance with MCC features is better than that with LPCC features. So, the results shown here have been obtained using MCC features. The figures show that the transition of the second formant frequency clearly matches the duration between norm crossovers in all the spectrograms.

In [7], it is shown that a data-driven principal component analysis (PCA) approach, though significantly easier to implement than linear discriminant analysis (LDA), gives performance comparable to LDA. So, we considered a data-driven OPCA approach for basic-unit segmentation. We applied our technique to 600 co-articulated units involving consonants such as /y/ and /m/ in combination with vowels. When tested on basic-units (distinct from the training set) from the same male speaker (Tamil mother tongue), we obtained correct segmentation of the V-C boundary in more than 85% of the cases. Further, when the same technique was applied to units from a speaker of the opposite sex, speaking a different language (Kannada), it resulted in correct segmentation in 80% of the co-articulated units tested.

6. CONCLUSION

We have proposed a segmentation algorithm that effectively handles co-articulated basic-units.
The filter bank interpretation of the feature transformation throws light on the relative significance of different frequency bands for vowels and consonants. We found that the mid-frequency region of the speech spectrum has more vowel information; the low and high frequency regions have more consonant information. The vowel norm-contour clearly shows a dip in the consonant region and is not affected by speaker variations in a test basic-unit. The consonant norm-contour is, to some extent, affected by speaker variations in a test basic-unit. This can be explained by the presence of speaker information in the low and high frequency regions of the speech spectrum, as shown in [5].

Fig. 8. (a) Speech signal /oymo/. (b) Its segmentation into vowel (/o/) and consonant (/y/ and /m/) regions, using our algorithm. (c) Spectrogram of /oymo/.

7. REFERENCES

[1] G. L. Jayavardhana Rama, A. G. Ramakrishnan, R. Muralishankar and P. Prathibha, "A complete text-to-speech synthesis system in Tamil," IEEE Workshop on Speech Synthesis, 2002.
[2] Jan P. van Hemert, "Automatic segmentation of speech," IEEE Trans. Signal Proc., vol. 39, no. 4, pp. 1008-1012, Apr. 1991.
[3] R. Duda and P. Hart, Pattern Classification and Scene Analysis, New York: Wiley, 1973.
[4] N. Malayath, H. Hermansky and A. Kain, "Towards decomposing the sources of variability in speech," in Proc. EUROSPEECH'97, Rhodes, Greece, 1997.
[5] A. Vijaya Krishna, Feature Transformation for Speaker Identification, M.Sc.(Engg.) thesis, IISc, 2002.
[6] A. Makur, "BOT's based on nonuniform filter banks," IEEE Trans. Signal Proc., vol. 44, no. 8, pp. 1971-1981, 1996.
[7] J. W. Hung, H. M. Wang and L. S. Lee, "Comparative analysis for data-driven temporal filters obtained via PCA and LDA in speech recognition," in Proc. EUROSPEECH'01, 2001.