Computing on Mio Scripts #1


Timothy H. Kaiser, Ph.D.

tkaiser@mines.edu

Director - CSM High Performance Computing

Director - Golden Energy Computing Organization

http://inside.mines.edu/mio/tutorial/

1

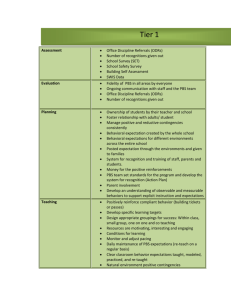

Topics

• Introduction to High Performance Computing
  ✓Purpose and methodology
  ✓Hardware
  ✓Programming
• Mio overview
  ✓History
  ✓General and Node descriptions
  ✓File systems
  ✓Scheduling


More Topics

• Running on Mio
  ✓Documentation
  • Batch scripting
  • Interactive use
  • Staging data
  • Enabling Graphics sessions
  • Useful commands


Miscellaneous Topics

• Some tricks from MPI and OpenMP
• Libraries
• Nvidia node


Mio Environment

• When you ssh to Mio you are on the head node
  • Shared resource
  • DO NOT run parallel, compute intensive, or memory intensive jobs on the head node
• You have access to basic Unix commands
  • Editors, file commands, gcc...
• Need to set up your environment to gain access to HPC compilers


Mio HPC Environment

• You want access to parallel and high performance compilers
  • Intel, AMD, mpicc, mpif90
• Commands to run and monitor parallel programs
• See: http://inside.mines.edu/mio/page3.html
  • Gives details
  • Shortcut


Shortcut Setup

http://inside.mines.edu/mio/page3.html

Add the following to your .bashrc file and log out/in:

if [ -f /usr/local/bin/setup/setup ]; then
  source /usr/local/bin/setup/setup intel ; fi

Should give you:
•Openmpi parallel MPI environment
  •MPI compilers
  •MPI run commands
•Compilers
  •Intel 11.x
  •AMD
  •Portland Group
  •Python 2.6.5 and 3.1.2
  •NAG

[tkaiser@mio ~]$ which mpicc
/opt/lib/openmpi/1.4.2/intel/11.1/bin/mpicc
[tkaiser@mio ~]$

Jobs are Run via a Batch System

Ra and Mio are shared resources
• Purpose of Batch System:
  • Give fair access to all users
  • Have control over where jobs are run
  • Set limits on times and processor counts
  • Allow exclusive access to nodes while you own them


Batch Systems

• Create a script that describes:
  • What you want to do
  • What resources (# nodes) you want
  • How long you want the resources
• Submit your script to the queuing system
• Wait for some time for your job to start
  • Resources
  • Time required
• Your job runs and you get your output
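As a minimal sketch of the three things a script describes (what to do, what resources, how long), the Python function below writes a batch script using only #PBS directives that appear in these slides. The name make_pbs_script is hypothetical and is not a Mio tool. You would still submit the result with qsub (or msub on RA).

```python
def make_pbs_script(name, nodes, ppn, walltime, commands):
    """Return the text of a PBS batch script (hypothetical helper).

    name     -- job name, written as "#PBS -N"
    nodes    -- number of nodes wanted
    ppn      -- processors per node
    walltime -- how long you want the resources, as "HH:MM:SS"
    commands -- list of shell commands: what you want to do
    """
    lines = [
        "#!/bin/bash",
        f"#PBS -l nodes={nodes}:ppn={ppn}",  # what resources you want
        f"#PBS -l walltime={walltime}",      # how long you want them
        f"#PBS -N {name}",
        "#PBS -V",                           # export the submitting shell's environment
        "cd $PBS_O_WORKDIR",                 # start in the directory qsub was run from
    ]
    lines.extend(commands)                   # what you want to do
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print a script equivalent to the simple parallel example.
    print(make_pbs_script("testIO", 1, 8, "02:00:00", ["mpiexec -np 8 ./c_ex00"]), end="")
```

Redirect the printed text to a file and pass that file to qsub.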


Moab - Top level Batch System on RA
• Product of Cluster Resources
  • http://www.clusterresources.com/pages/products/moab-cluster-suite/workloadmanager.php
• Marketing pitch from the web page:
  Moab Workload Manager is a policy-based job scheduler and event engine that enables utility-based computing for clusters. Moab Workload Manager combines intelligent scheduling of resources with advanced reservations to process jobs on the right resources at the right time. It also provides flexible policy and event engines that process workloads faster and in line with set business requirements and priorities.
• Maui is a similar top-level scheduler, used on Mio


Running a Job

• After you write a batch script you submit it to be run
• Commands
  • msub on RA
  • qsub on Mio
• We recommend that you alias msub=qsub on Mio
• qsub is on RA but you should not normally use it


Running and monitoring jobs

•Use msub or qsub to submit the job

•qstat to see its status (Q,R,C)

•qdel to kill the job if needed

[tkaiser@ra ~/mydir]$ qsub mpmd
8452
[tkaiser@ra ~/mydir]$ qstat 8452
Job id             Name           User         Time Use S Queue
------------------ -------------- ------------ -------- - -----
8452.ra            testIO         tkaiser             0 R SHORT
[tkaiser@ra ~/mydir]$
[tkaiser@ra ~/mydir]$ qstat -u tkaiser
Job id             Name           User         Time Use S Queue
------------------ -------------- ------------ -------- - -----
8452.ra            testIO         tkaiser             0 R SHORT
[tkaiser@ra ~/mydir]$
[tkaiser@ra ~/mydir]$ qdel 8452

A Simple Script for a Parallel job

#!/bin/bash
#PBS -l nodes=1:ppn=8
#PBS -l walltime=02:00:00
#PBS -N testIO
#PBS -A account
#PBS -o stdout
#PBS -e stderr
#PBS -V
#PBS -m abe
#PBS -M tkaiser@mines.edu
#---------------------
cd $PBS_O_WORKDIR
mpiexec -np 8 ./c_ex00

• Scripts contain normal shell commands and comments designated with #PBS that are interpreted by the scheduler
• On RA you must specify an account. We may have accounts on Mio in the future.
• The command mpiexec is normally used to launch MPI jobs. On Mio it can also launch "np" copies of a serial program.


A Simple Script for an MPI job

Command                      Comment
#!/bin/bash                  We will run this job using the "bash" shell
#PBS -l nodes=1:ppn=8        We want 1 node with 8 processors for a total of 8 processors
#PBS -l walltime=02:00:00    We will run for up to 2 hours
#PBS -N testIO               The name of our job is testIO
#PBS -o stdout               Standard output from our program will go to a file stdout
#PBS -e stderr               Error output from our program will go to a file stderr
#PBS -A account              An account is required on RA - from your PI
#PBS -V                      Very important! Exports all environment variables from the
                             submitting shell into the batch shell.
#PBS -m abe                  Send mail on abort, begin, end (use n to suppress)
#PBS -M tkaiser@mines.edu    Where to send email to (cell phone?)
#----------------------      Not important. Just a separator line.
cd $PBS_O_WORKDIR            Very important! Go to directory $PBS_O_WORKDIR, the directory
                             from which the script was submitted (normally where it resides)
mpiexec -np 8 ./c_ex00       Run the MPI program c_ex00 on 8 computing cores.
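To make the "#PBS comment" rule concrete, here is a minimal Python sketch of which lines the scheduler treats as directives. It assumes qsub behaves as its man page describes. A line counts as a directive when its first non-blank characters are "#PBS". Blank lines, "#!" and ordinary "#" comments (including "##PBS") are skipped. Scanning stops at the first executable line, so any #PBS line after "cd $PBS_O_WORKDIR" is ignored. The function names are hypothetical.

```python
def pbs_directives(script_text):
    """Return the option text of each #PBS directive qsub would read."""
    directives = []
    for line in script_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("#PBS"):
            directives.append(stripped[len("#PBS"):].strip())
        elif stripped == "" or stripped.startswith("#"):
            continue   # blank line, "#!" line, or a plain comment such as "##PBS -m abe"
        else:
            break      # first executable line: later #PBS lines are not directives
    return directives


def walltime_seconds(walltime):
    """Convert a walltime such as "02:00:00" (HH:MM:SS) to seconds."""
    hours, minutes, seconds = (int(part) for part in walltime.split(":"))
    return hours * 3600 + minutes * 60 + seconds
```

Keeping all #PBS lines at the top of the script, before any commands, avoids this pitfall.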

“PBS” Variables

Variable         Meaning
PBS_JOBID        unique PBS job ID
PBS_O_WORKDIR    job's submission directory
PBS_NNODES       number of nodes requested
PBS_NODEFILE     file listing the allocated nodes (one line per allocated processor)
PBS_O_HOME       home dir of submitting user
PBS_JOBNAME      user specified job name
PBS_QUEUE        job queue
PBS_MOMPORT      active port for mom daemon
PBS_O_HOST       host on which qsub was run
PBS_O_LANG       language variable for job
PBS_O_LOGNAME    name of submitting user
PBS_O_PATH       path to executables used in job script
PBS_O_SHELL      script shell
PBS_JOBCOOKIE    job cookie
PBS_TASKNUM      number of tasks requested (see pbsdsh)
PBS_NODENUM      node offset number (see pbsdsh)
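These variables are ordinary environment variables, so programs started by the batch script can read them too. The Python sketch below collects a few of them and reports None for any that are unset, which is what you would see if the same program were run by hand outside a job. pbs_context and in_batch_job are hypothetical helper names.

```python
import os

# A subset of the PBS variables listed above.
PBS_NAMES = ["PBS_JOBID", "PBS_JOBNAME", "PBS_O_WORKDIR", "PBS_NODEFILE", "PBS_QUEUE"]

def pbs_context(env=None):
    """Return {name: value or None} for the PBS variables in PBS_NAMES."""
    env = os.environ if env is None else env
    return {name: env.get(name) for name in PBS_NAMES}

def in_batch_job(env=None):
    """True if PBS_JOBID is set, i.e. we appear to be running inside a PBS job."""
    return pbs_context(env)["PBS_JOBID"] is not None
```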


Another example

#!/bin/bash

#PBS -l nodes=2:ppn=8

#PBS -l walltime=00:20:00

#PBS -N testIO

#PBS -o out.$PBS_JOBID

#PBS -e err.$PBS_JOBID

#PBS -A support

#PBS -m n

#PBS -M tkaiser@mines.edu

#PBS -V

cd $PBS_O_WORKDIR
cat $PBS_NODEFILE > fulllist
sort -u $PBS_NODEFILE > shortlist
mpiexec -np 16 $PBS_O_WORKDIR/c_ex01

[tkaiser@ra ~/mpmd]$ cat fulllist

compute-9-9.local

compute-9-9.local

compute-9-9.local

compute-9-9.local

compute-9-9.local

compute-9-9.local

compute-9-9.local

compute-9-9.local

compute-9-8.local

compute-9-8.local

compute-9-8.local

compute-9-8.local

compute-9-8.local

compute-9-8.local

compute-9-8.local

compute-9-8.local

[tkaiser@ra ~/mpmd]$ cat shortlist

compute-9-8.local

compute-9-9.local

[tkaiser@ra ~/mpmd]$ ls err* out*

err.8453.ra.mines.edu out.8453.ra.mines.edu
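The fulllist/shortlist trick above can also be done in Python, for example inside a Python job step. The minimal sketch below reads a $PBS_NODEFILE-style file, which has one host name per allocated processor. It returns the sorted unique hosts (like sort -u) and the number of processors allocated on each host. summarize_nodefile is a hypothetical name. Python's sorted() compares by character code, which can differ from a locale-aware sort for mixed-case names.

```python
from collections import Counter

def summarize_nodefile(path):
    """Return (short_list, slots) for a nodefile with one host per allocated core.

    short_list -- sorted unique host names (like `sort -u $PBS_NODEFILE`)
    slots      -- dict of host name -> number of cores allocated on that host
    """
    with open(path) as f:
        hosts = [line.strip() for line in f if line.strip()]
    slots = Counter(hosts)
    return sorted(slots), dict(slots)
```

Inside a job you would call it as summarize_nodefile(os.environ["PBS_NODEFILE"]). The sum of the slot counts is the -np value to give mpiexec.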


Where’s my Output?

• Standard Error and Standard Out don't show up until after your job is completed
• Output is temporarily stored in /tmp on your nodes
  • You can ssh to your nodes and see your output
  • We are looking into changing this behavior, or at least making it optional
• You can redirect output to a file:
  • mpiexec myjob > myjob.$PBS_JOBID


Useful Commands

Command        Description
canceljob      cancel job
checkjob       provide detailed status report for specified job
changeparam    change in-memory scheduler parameter settings
mdiag          provide diagnostic reports for resources, workload, and scheduling
mjobctl        control and modify job
releasehold    release job defers and holds
releaseres     release reservations
sethold        set job holds
showq          show queued jobs
showres        show existing reservations
showstart      show estimates of when job can/will start
showstate      show current state of resources

To see the full set of Maui commands: ls /usr/local/maui/bin


More Useful commands

Command     Description
tracejob    trace job actions and states recorded in TORQUE logs
pbsnodes    view/modify batch status of compute nodes
qalter      modify queued batch jobs
qdel        delete/cancel batch jobs
qhold       hold batch jobs
qrls        release batch job holds
qrun        start a batch job
qsig        send a signal to a batch job
qstat       view queues and jobs
qsub        submit a job

http://www.clusterresources.com/torquedocs21/a.acommands.shtml


Let’s do some examples

[tkaiser@mio tests]$ cd $DATA
[tkaiser@mio tests]$ mkdir cwp
[tkaiser@mio tests]$ cd cwp
[tkaiser@mio cwp]$ wget http://inside.mines.edu/mio/tutorial/cwp.tar
...
2011-01-11 13:31:06 (48.6 MB/s) - `cwp.tar' saved [20480/20480]
[tkaiser@mio cwp]$ tar -xf *tar

(We already did this part.)

[tkaiser@mio tests]$ cd $DATA/cwp
[tkaiser@mio cwp]$ make
...
mpicc -o c_ex00 c_ex00.c
/opt/intel/Compiler/11.1/069/lib/intel64/libimf.so: warning: warning: feupdateenv is not implemented and will always fail
mpif90 -o f_ex00 f_ex00.f
/opt/intel/Compiler/11.1/069/lib/intel64/libimf.so: warning: warning: feupdateenv is not implemented and will always fail
rm -rf fmpi.mod
icc info.c -o info_c
ifort info.f90 -o info_f
cp info.py info_p
chmod 700 info_p
...
[tkaiser@mio cwp]$ cd scripts1
[tkaiser@mio cwp]$ ls
batch1  batch2  c_ex00  c_ex00.c  cwp.tar  f_ex00  f_ex00.f  info_c  info.c
info_f  info.f90  info_p  info.py  makefile


What we have

• [c_ex00.c, c_ex00]
  • hello world in C and MPI
• [f_ex00.f, f_ex00]
  • hello world in Fortran and MPI
• [info.c, info_c] [info.f90, info_f] [info.py]
  • Serial programs in C, Fortran and Python that get the node name and process id and write them to a file whose name is built from the node name and process id
• batch1
  • Batch file to run one of the examples in parallel using mpiexec
• batch1b
  • Runs all of the examples


info.c

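#include <unistd.h>
#include <sys/types.h>
#include <stdio.h>
#include <stdlib.h>

int main(void) {
    char name[128], fname[160];   /* fname has room for name + "_" + 8 digits */
    pid_t mypid;
    FILE *f;
    /* get the process id */
    mypid = getpid();
    /* get the host name; force NUL termination in case it was truncated */
    gethostname(name, sizeof(name));
    name[sizeof(name) - 1] = '\0';
    /* make a file name based on these two */
    snprintf(fname, sizeof(fname), "%s_%8.8d", name, (int)mypid);
    /* open and write to the file */
    f = fopen(fname, "w");
    if (f == NULL) {
        perror(fname);
        return EXIT_FAILURE;
    }
    fprintf(f, "C says hello from %d on %s\n", (int)mypid, name);
    fclose(f);
    return 0;
}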


info.f90

program info
  USE IFPOSIX ! needed by PXFGETPID
  implicit none
  integer ierr,mypid
  character(len=128) :: name,fname
  ! get the process id
  CALL PXFGETPID (mypid, ierr)
  ! get the node name
  call mynode(name)
  ! make a filename based on the two
  write(fname,'(a,"_",i8.8)')trim(name),mypid
  ! open and write to the file
  open(12,file=fname)
  write(12,*)"Fortran says hello from",mypid," on ",trim(name)
  close(12)
end program

subroutine mynode(name)
  ! Intel Fortran subroutine to return
  ! the name of a node on which you are
  ! running
  USE IFPOSIX
  implicit none
  integer jhandle
  integer ierr,len
  character(len=128) :: name
  CALL PXFSTRUCTCREATE ("utsname", jhandle, ierr)
  CALL PXFUNAME (jhandle, ierr)
  call PXFSTRGET(jhandle,"nodename",name,len,ierr)
end subroutine


info.py

#!/opt/development/python/2.6.5/bin/python
import os
# get the process id
mypid=os.getpid()
# get the node name
name=os.uname()[1]
# make a filename based on the two
fname="%s_%8.8d" % (name,mypid)
# open and write to the file; "with" closes it when the block ends
with open(fname,"w") as f:
    f.write("Python says hello from %d on %s\n" %(mypid,name))


Example Output From the Serial Programs

[tkaiser@mio cwp]$ ./info_c
[tkaiser@mio cwp]$ ls -lt mio*
-rw-rw-r-- 1 tkaiser tkaiser 41 Jan 11 13:47 mio.mines.edu_00050205
[tkaiser@mio cwp]$ cat mio.mines.edu_00050205
C says hello from 50205 on mio.mines.edu
[tkaiser@mio cwp]$


C MPI example

#include <stdio.h>
#include <stdlib.h>
#include <mpi.h>
#include <math.h>
/************************************************************
This is a simple hello world program. Each processor prints out
its rank and the size of the current MPI run (Total number of
processors).
************************************************************/
int main(int argc, char *argv[])
{
    int myid, numprocs, mylen;
    char myname[MPI_MAX_PROCESSOR_NAME];
    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);
    MPI_Get_processor_name(myname, &mylen);
    /* print out my rank and this run's PE size */
    printf("Hello from %d of %d on %s\n", myid, numprocs, myname);
    MPI_Finalize();
    return 0;
}


Fortran MPI example

!*********************************************

! This is a simple hello world program. Each processor

! prints out its rank and total number of processors

! in the current MPI run.

!****************************************************************

program hello

include "mpif.h"

character (len=MPI_MAX_PROCESSOR_NAME):: myname

call MPI_INIT( ierr )

call MPI_COMM_RANK( MPI_COMM_WORLD, myid, ierr )

call MPI_COMM_SIZE( MPI_COMM_WORLD, numprocs, ierr )

call MPI_Get_processor_name(myname,mylen,ierr)

write(*,*)"Hello from ",myid," of ",numprocs," on ",trim(myname)

call MPI_FINALIZE(ierr)

stop

end


Our Batch file, batch1

#!/bin/bash
#PBS -l nodes=2:ppn=8
#PBS -l walltime=08:00:00
#PBS -N test_1
#PBS -o outx.$PBS_JOBID
#PBS -e errx.$PBS_JOBID
#PBS -r n
#PBS -V
##PBS -m abe
##PBS -M tkaiser@mines.edu
#----------------------------------------------------
cd $PBS_O_WORKDIR
# get a short and full list of all of my nodes
sort -u $PBS_NODEFILE > mynodes.$PBS_JOBID
sort $PBS_NODEFILE > allmynodes.$PBS_JOBID
# select one of these programs to run
# the rest of the lines are commented
# out
export MYPROGRAM=c_ex00
#export MYPROGRAM=f_ex00
#export MYPROGRAM=info_p
#export MYPROGRAM=info_c
#export MYPROGRAM=info_f
echo "running" $PBS_O_WORKDIR/$MYPROGRAM
mpiexec -np 16 $PBS_O_WORKDIR/$MYPROGRAM


Running the job

[tkaiser@mio cwp]$ qsub batch1
3589.mio.mines.edu
[tkaiser@mio cwp]$ ls -lt | head
total 1724
-rw------- 1 tkaiser tkaiser 466 Jan 11 14:08 outx.3589.mio.mines.edu
-rw------- 1 tkaiser tkaiser  64 Jan 11 14:08 allmynodes.3589.mio.mines.edu
-rw------- 1 tkaiser tkaiser   0 Jan 11 14:08 errx.3589.mio.mines.edu
-rw------- 1 tkaiser tkaiser   8 Jan 11 14:08 mynodes.3589.mio.mines.edu
[tkaiser@mio cwp]$ cat mynodes.3589.mio.mines.edu
n45
n46
[tkaiser@mio cwp]$ cat allmynodes.3589.mio.mines.edu
n45
n45
n45
n45
n45
n45
n45
n45
n46
n46
n46
n46
n46
n46
n46
n46


The “real” output

[tkaiser@mio cwp]$ cat outx.3589.mio.mines.edu

running /home/tkaiser/data/tests/cwp/c_ex00

Hello from 0 of 16 on n46

Hello from 6 of 16 on n46

Hello from 1 of 16 on n46

Hello from 5 of 16 on n46

Hello from 12 of 16 on n45

Hello from 2 of 16 on n46

Hello from 10 of 16 on n45

Hello from 4 of 16 on n46

Hello from 8 of 16 on n45

Hello from 9 of 16 on n45

Hello from 3 of 16 on n46

Hello from 11 of 16 on n45

Hello from 7 of 16 on n46

Hello from 13 of 16 on n45

Hello from 15 of 16 on n45

Hello from 14 of 16 on n45

[tkaiser@mio cwp]$
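The ranks print in no particular order, so checking by eye that all 16 reported is tedious. A minimal Python sketch can check the output file for you. It assumes each rank prints one line in the c_ex00 format "Hello from RANK of SIZE on HOST" and ignores every other line (such as "running ..."). check_hello_output is a hypothetical helper.

```python
import re

HELLO = re.compile(r"Hello from (\d+) of (\d+) on (\S+)")

def check_hello_output(text):
    """Verify that ranks 0..SIZE-1 each reported exactly once.

    Returns a dict mapping host name -> sorted list of ranks on that host.
    Raises ValueError if a rank is missing or duplicated.
    """
    by_host, seen, size = {}, [], None
    for m in HELLO.finditer(text):
        rank, size, host = int(m.group(1)), int(m.group(2)), m.group(3)
        seen.append(rank)
        by_host.setdefault(host, []).append(rank)
    if size is None or sorted(seen) != list(range(size)):
        raise ValueError("missing or duplicate ranks in output")
    return {host: sorted(ranks) for host, ranks in by_host.items()}
```

For the run above, this would report ranks 0-7 on n46 and 8-15 on n45.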


Running a serial program with mpiexec

export MYPROGRAM=info_p

[tkaiser@mio cwp]$ qsub batch1
3590.mio.mines.edu
[tkaiser@mio cwp]$
[tkaiser@mio cwp]$ ls -lt
total 1792
-rw------- 1 tkaiser tkaiser  0 Jan 11 14:13 errx.3590.mio.mines.edu
-rw------- 1 tkaiser tkaiser 44 Jan 11 14:13 outx.3590.mio.mines.edu
-rw------- 1 tkaiser tkaiser 64 Jan 11 14:13 allmynodes.3590.mio.mines.edu
-rw------- 1 tkaiser tkaiser  8 Jan 11 14:13 mynodes.3590.mio.mines.edu
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051792
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051793
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051794
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051795
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051796
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051797
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051798
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n46_00051799
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051784
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051785
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051786
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051787
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051788
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051789
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051790
-rw------- 1 tkaiser tkaiser 36 Jan 11 14:13 n47_00051791
[tkaiser@mio cwp]$ cat n46_00051792
Python says hello from 51792 on n46
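With many processes, the per-process files are easier to check when grouped by node. The minimal Python sketch below does that. It assumes the naming used by info_c, info_f and info_p, "<nodename>_<8-digit pid>". It skips files such as outx.* or allmynodes.* that do not match. Any unrelated file that happens to end in "_" plus eight digits would also be picked up. hello_files_by_node is a hypothetical helper.

```python
import os
import re

# info_c / info_f / info_p name their files "<nodename>_<8-digit pid>".
HELLO_FILE = re.compile(r"^(?P<node>.+)_(?P<pid>\d{8})$")

def hello_files_by_node(directory="."):
    """Return {node name: sorted list of pids} for the hello files in directory."""
    groups = {}
    for fname in os.listdir(directory):
        m = HELLO_FILE.match(fname)
        if m:
            groups.setdefault(m.group("node"), []).append(int(m.group("pid")))
    return {node: sorted(pids) for node, pids in groups.items()}
```

For the run above, this should show 8 pids on n46 and 8 on n47, one per allocated core.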

Our Batch file, batch1b

#!/bin/bash
#PBS -l nodes=2:ppn=8
#PBS -l walltime=08:00:00
#PBS -N test_1
#PBS -o outx.$PBS_JOBID
#PBS -e errx.$PBS_JOBID
#PBS -r n
#PBS -V
##PBS -m abe
##PBS -M tkaiser@mines.edu
#----------------------------------------------------
cd $PBS_O_WORKDIR
# get a short and full list of all of my nodes
sort -u $PBS_NODEFILE > mynodes.$PBS_JOBID
sort $PBS_NODEFILE > allmynodes.$PBS_JOBID
# run each program, one after the other
for P in c_ex00 f_ex00 info_p info_c info_f ; do
  export MYPROGRAM=$P
  echo "running" $PBS_O_WORKDIR/$MYPROGRAM
  mpiexec -np 16 $PBS_O_WORKDIR/$MYPROGRAM
done


Another Parallel Run Command

Mio has a second parallel run command not connected to MPI: beorun

BEORUN(1)

NAME
  beorun - Run a job on a Scyld cluster using dynamically selected nodes.

SYNOPSIS
  beorun [ -h, --help ] [ -V, --version ] command [ command-args... ] [ --map node list ]
         [ --all-cpus ] [ --all-nodes ] [ --np processes ] [ --all-local ] [ --no-local ]
         [ --exclude node list ]

DESCRIPTION
  The beorun program runs the specified program on a dynamically selected set of cluster
  nodes. It generates a Beowulf job map from the currently installed beomap scheduler, and
  starts the program on each node specified in the map.

beorun --no-local --map 21:22:24 ./info_c


beorun

• Works on the head node (bad idea)
• Can also work inside a batch script
  • Use the batch system to allocate a set of nodes
  • Create a node list for the command from your set of nodes (tricky)
  • Run the command


One way to create a list of nodes

sort $PBS_NODEFILE > allmynodes.$PBS_JOBID
export NLIST=`awk '{ sum=sum":"substr($1,2); }; END { print substr(sum,2) }' \
    allmynodes.$PBS_JOBID`

[tkaiser@mio cwp]$ cat allmynodes.3590.mio.mines.edu
n46
n46
n46
n46
n46
n46
n46
n46
n47
n47
n47
n47
n47
n47
n47
n47

Resulting value of NLIST:
46:46:46:46:46:46:46:46:47:47:47:47:47:47:47:47
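If the awk line looks cryptic, the same transformation takes a few lines of Python. This minimal sketch makes the same assumption as the awk version: every host name is "n" followed by the node number, as on Mio. It drops the first character of each name and joins the rest with ":". The function names are hypothetical.

```python
def beorun_map(hostnames):
    """Turn host names like "n46" into a beorun --map list like "46:46:47"."""
    return ":".join(name.strip()[1:] for name in hostnames if name.strip())

def beorun_map_from_file(path):
    """Same as beorun_map, reading one host name per line from a file."""
    with open(path) as f:
        return beorun_map(f)
```

Inside a job, beorun_map_from_file on $PBS_NODEFILE gives one entry per allocated core, matching the awk result above.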


beorun in a batch file

batch2

#!/bin/bash
#PBS -l nodes=2:ppn=8
#PBS -l walltime=08:00:00
#PBS -N test_2
#PBS -o outx.$PBS_JOBID
#PBS -e errx.$PBS_JOBID
#PBS -r n
#PBS -V
##PBS -m abe
##PBS -M tkaiser@mines.edu
#----------------------------------------------------
cd $PBS_O_WORKDIR
# get a short and full list of all of my nodes
sort -u $PBS_NODEFILE > mynodes.$PBS_JOBID
sort $PBS_NODEFILE > allmynodes.$PBS_JOBID
# select one of these programs to run
# the rest of the lines are commented
# out
#export MYPROGRAM=info_p
#export MYPROGRAM=info_c
export MYPROGRAM=info_f
#
# create a list of nodes that can be used by the
# Mio command beorun. This next line takes the
# names from the file and strips off the "n" to
# just give us a node number. It then puts a
# ":" between the numbers
export NLIST=`awk '{ sum=sum":"substr($1,2); }; END { print substr(sum,2) }' allmynodes.$PBS_JOBID`
echo $NLIST
echo "running" $PBS_O_WORKDIR/$MYPROGRAM
beorun --no-local --map $NLIST $PBS_O_WORKDIR/$MYPROGRAM


Results

[tkaiser@mio cwp]$ ls -lt n*_* *mio.mines.edu
-rw------- 1 tkaiser tkaiser  0 Jan 11 15:02 errx.3599.mio.mines.edu
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055776
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055777
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055778
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055779
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055780
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055781
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055782
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n44_00055783
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055784
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055785
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055786
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055787
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055788
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055789
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055790
-rw------- 1 tkaiser tkaiser 45 Jan 11 15:02 n45_00055791
-rw------- 1 tkaiser tkaiser 92 Jan 11 15:02 outx.3599.mio.mines.edu
-rw------- 1 tkaiser tkaiser 64 Jan 11 15:02 allmynodes.3599.mio.mines.edu
-rw------- 1 tkaiser tkaiser  8 Jan 11 15:02 mynodes.3599.mio.mines.edu
[tkaiser@mio cwp]$ cat n44_00055776
 Fortran says hello from 55776 on n44
[tkaiser@mio cwp]$

Selecting Particular nodes

• Replace the "#PBS -l nodes=2:ppn=8" line in your script with...
  • #PBS -l nodes=2:ppn=12
    • requests 2 nodes with 12 cores each
  • #PBS -l nodes=n4:ppn=8
    • requests node n4
• Can also be done on the qsub command line
  • qsub -l nodes="n1:ppn=8+n2:ppn=8" batch1
  • Listed nodes are separated by a "+"
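The -np you give mpiexec should match what you asked for, for example 16 for nodes=2:ppn=8. The minimal Python sketch below computes that total from a nodes= specification of the forms shown on this slide: a node count ("2:ppn=12"), a named node ("n4:ppn=8"), or several parts joined by "+". Two assumptions are built in. A part without ppn counts as 1 processor per node, and any part that is not a plain number is treated as one named node. total_processors is a hypothetical helper.

```python
def total_processors(nodes_spec):
    """Total processors requested by a PBS nodes= spec such as "n1:ppn=8+n2:ppn=8"."""
    total = 0
    for part in nodes_spec.split("+"):
        head, *props = part.split(":")
        count = int(head) if head.isdigit() else 1   # "2" -> 2 nodes, "n4" -> one named node
        ppn = 1                                      # assumed default when ppn is absent
        for prop in props:
            if prop.startswith("ppn="):
                ppn = int(prop[len("ppn="):])
        total += count * ppn
    return total
```

Node properties other than ppn are ignored, so treat the result as a sanity check, not a replacement for $PBS_NODEFILE.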


Running Interactively

Interactive Runs

• It is possible to grab a collection of nodes and run parallel jobs interactively
  • Nodes are yours until you quit
  • Might be useful during development
  • Running a number of small parallel jobs
  • Required (almost) for running parallel debuggers


Multistep Process

• Ask for the nodes using qsub
  • qsub -I -V -l nodes=1:ppn=8
  • qsub -I -V -l nodes=1:ppn=12
  • qsub -I -V -l nodes=n4:ppn=8
• You are automatically logged on to the nodes you are given
• cat $PBS_NODEFILE to see list
• Go to your working directory
• Run your job(s)
• Don't abuse it, lest you be shot.


Example

[tkaiser@ra ~/guide]$qsub -I -V -l nodes=1 -l naccesspolicy=singlejob -l walltime=00:15:00

qsub: waiting for job 1280.ra.mines.edu to start

qsub: job 1280.ra.mines.edu ready

[tkaiser@compute-9-8 ~]$cd guide

[tkaiser@compute-9-8 ~/guide]$mpiexec -n 4 ./c_ex00

Hello from 0 of 4 on compute-9-8.local

Hello from 1 of 4 on compute-9-8.local

Hello from 2 of 4 on compute-9-8.local

Hello from 3 of 4 on compute-9-8.local

[tkaiser@compute-9-8 ~/guide]$

[tkaiser@compute-9-8 ~/guide]$mpiexec -n 16 ./c_ex00

Hello from 1 of 16 on compute-9-8.local

Hello from 0 of 16 on compute-9-8.local

Hello from 3 of 16 on compute-9-8.local

Hello from 4 of 16 on compute-9-8.local

...

...

Hello from 15 of 16 on compute-9-8.local

Hello from 2 of 16 on compute-9-8.local

Hello from 6 of 16 on compute-9-8.local

[tkaiser@compute-9-8 ~/guide]$

[tkaiser@compute-9-8 ~/guide]$exit

logout

qsub: job 1280.ra.mines.edu completed

[tkaiser@ra ~/guide]$

Note: -l naccesspolicy=singlejob ensures exclusive access to the node.

Challenge

• Take the example that we have run
  • Run the MPI and serial programs interactively
  • Run the serial programs using beorun
