
“Agricola” Board Game Assist Program

EGGN 512 – Computer Vision

Derek Lang

May 7, 2012

Introduction

German-style board games are a class of board games that usually rely on strategy, deemphasize luck and conflict, and keep all players in play until the end of the game [1]. Typically, the rules are simple to keep the game accessible for players of different ages and nationalities. One notable exception is the game Agricola, which uses 360 cards and 17 different types of tokens (317 tokens in total). The game's concept is that of farm management in late 17th-century Europe. Each player's board represents their farm, and the score is largely based on which tokens are present on the farm.

The purpose of this paper is to provide a means of processing an image of a player's end-game board and computing that player's score. The goal is for this algorithm to provide a quick means of calculating the score and determining the winner of the game.

Color images were taken as JPEGs with a DSLR camera under the same lighting conditions. Seventeen images were used (see Fig. 1): one for calibrating the board detection and 16 showing varying end-game conditions. The images were then rectified using the lens correction plugin of the Bibble® software. All code was developed in Matlab as a proof of concept.

This paper first discusses the existing literature relevant to this project and then describes in detail the techniques used. The project results are then shown. Finally, a discussion of the results and recommendations for future work are presented, along with reference material. An appendix containing all the code developed for this project is included at the end of the paper.

Related Work

Markers and recognizable patterns have been used extensively to determine the pose of objects relative to a camera. One common example is the concentric contrasting circle (CCC) [2]. A CCC consists of a white circle centered in a black circle. After an intensity threshold is applied to the image, a CCC is detected wherever the centroid of a white region coincides with the centroid of the surrounding black region. However, multiple black-and-white CCCs look identical, so a non-trivial solution would be needed to determine which detected point corresponds to which part of the known geometry; colored CCCs are therefore used in this project.
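The centroid-matching idea can be sketched in Python, assuming the ring pixels and the white center pixels have already been thresholded into binary masks and split into separate blobs. The function names and the 3-pixel tolerance are illustrative assumptions, not the project's Matlab code.

```python
import math

def centroid(mask):
    """Centroid (row, col) of the True pixels in a 2-D list of booleans."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    return (sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n)

def match_markers(ring_centroids, center_centroids, tol=3.0):
    """Pair each ring centroid with the nearest white-center centroid.

    A pair counts as a CCC-style detection only if the two centroids lie
    within `tol` pixels of each other (tol is an assumed value).
    """
    matches = []
    for ring in ring_centroids:
        nearest = min(center_centroids, key=lambda c: math.dist(ring, c), default=None)
        if nearest is not None and math.dist(ring, nearest) <= tol:
            matches.append((ring, nearest))
    return matches

# A 5x5 ring (outer border only) and its 1-pixel white center share a centroid.
ring = [[r in (0, 4) or c in (0, 4) for c in range(5)] for r in range(5)]
center = [[(r, c) == (2, 2) for c in range(5)] for r in range(5)]
print(match_markers([centroid(ring)], [centroid(center), (40.0, 40.0)]))
```

Because a ring's centroid falls inside its hollow center, the two centroids coincide for a true marker, while unrelated blobs rarely line up this closely.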

Color segmentation methods are usually considered where the colors and lighting conditions may not be known in advance. One method, by Wang and Watada [3], uses the Karhunen-Loeve transform to concentrate the most information from a color image into a 2-dimensional form, and then applies an Otsu [4] threshold to segment it. Another method uses K-means clustering [5] to determine which colors to threshold around. For this project, the colors of the board are known in advance, so a simple threshold can be determined through experimentation.
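For reference, Otsu's method [4] picks the gray level that maximizes the between-class variance of the two resulting pixel classes. The sketch below works on a plain list of 8-bit values; the sample pixel values are made up.

```python
def otsu_threshold(values, levels=256):
    """Return the threshold t maximizing between-class variance.

    Pixels with value <= t form one class and pixels > t the other.
    `values` are integer gray levels in [0, levels). The constant factor
    1/total**2 of the variance is dropped because it does not move the maximum.
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_between = 0, -1.0
    w0 = 0    # pixel count of the lower class
    sum0 = 0  # sum of gray levels in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_between:
            best_between, best_t = between, t
    return best_t

pixels = [12] * 40 + [15] * 10 + [190] * 30 + [205] * 20
t = otsu_threshold(pixels)
foreground = [p > t for p in pixels]
```

With known colors, as in this project, a fixed experimental threshold avoids this computation entirely; Otsu's method is mainly useful when the threshold cannot be chosen in advance.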

The SIFT algorithm [6] is a widely used method for creating and matching feature descriptors. In SIFT, keypoints are detected in a Laplacian-of-Gaussian (LoG) scale space, approximated in practice by a difference of Gaussians, and a feature vector is created from the image gradients in each keypoint's neighborhood. Ke and Sukthankar [7] developed an optimization of the SIFT algorithm, PCA-SIFT, which alters only its last step (keypoint description). Instead of building the descriptor directly from the local image gradients, PCA-SIFT projects a keypoint's local gradient patch onto a precomputed eigenspace of gradient images, producing a feature vector that contains only the components along the top n eigenvectors.
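The projection step that PCA-SIFT substitutes for SIFT's descriptor can be sketched as follows. It assumes the mean gradient vector and the top-n orthonormal eigenvectors were computed offline from training patches (here they are tiny made-up values), and it omits patch extraction and normalization.

```python
def pca_descriptor(gradient_patch, mean, eigenvectors):
    """Project a flattened gradient patch onto precomputed top-n eigenvectors.

    gradient_patch, mean: flattened vectors of equal length.
    eigenvectors: n orthonormal basis vectors (assumed precomputed offline).
    Returns the n projection coefficients used as the feature vector.
    """
    centered = [g - m for g, m in zip(gradient_patch, mean)]
    return [sum(e_i * x_i for e_i, x_i in zip(e, centered)) for e in eigenvectors]

def l2_distance(a, b):
    """Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

# Toy 4-D "gradient patches" reduced to n = 2 coefficients.
mean = [1.0, 1.0, 1.0, 1.0]
basis = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
d1 = pca_descriptor([3.0, 4.0, 9.0, 9.0], mean, basis)
d2 = pca_descriptor([3.0, 5.0, 0.0, 0.0], mean, basis)
```

The last two components of each toy patch differ greatly but are discarded by the projection, so only the retained directions influence the match distance.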

Calonder, Lepetit, Strecha, and Fua [8] also developed a feature descriptor, which they called 'Binary Robust Independent Elementary Features' (BRIEF). The image patch surrounding a keypoint is described as a binary string of intensity comparisons between pairs of points within the patch. The descriptor is very fast to compute, and features can be matched using the Hamming distance, which is faster and more efficient to evaluate than the L2 norm used by other feature descriptors.
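A minimal BRIEF-style sketch follows. It assumes the patch has already been smoothed (the smoothing step of [8] is omitted) and uses random point pairs from a fixed seed as a stand-in for the sampling patterns studied in [8]. The descriptor is packed into a Python integer so that the Hamming distance is a single XOR plus a bit count.

```python
import random

def make_test_pairs(patch_size, n_bits, seed=0):
    """Pick n_bits random pairs of distinct pixel positions inside the patch."""
    rng = random.Random(seed)
    cells = [(r, c) for r in range(patch_size) for c in range(patch_size)]
    return [tuple(rng.sample(cells, 2)) for _ in range(n_bits)]

def brief_descriptor(patch, pairs):
    """Bit i is 1 when the first point of pair i is darker than the second."""
    bits = 0
    for i, ((r1, c1), (r2, c2)) in enumerate(pairs):
        if patch[r1][c1] < patch[r2][c2]:
            bits |= 1 << i
    return bits

def hamming(a, b):
    """Number of differing bits between two packed descriptors."""
    return bin(a ^ b).count("1")

size, n_bits = 8, 32
pairs = make_test_pairs(size, n_bits)
patch = [[r * size + c for c in range(size)] for r in range(size)]
inverted = [[255 - v for v in row] for row in patch]
d = brief_descriptor(patch, pairs)
```

The same pairs must be reused for every keypoint so that bit i always means the same comparison; inverting the toy patch flips every comparison, giving the maximum distance of 32.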

Algorithm

The main algorithm can be described in the following steps:

1. Detection of the board

2. Detection of tiles

3. Detection of player pieces:

◦ Persons

◦ Stables

◦ Fences

4. Detection of livestock pieces

5. Detection of crop pieces

6. Detection of pastures

7. Calculation of score

Although there are many different pieces to consider for this project, several factors help to simplify the task. Knowledge of the game's rules can be used to reduce the number of detections that have to be made and to check for errors. Once the detections have been made, a score can be calculated using lookup tables.
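A lookup-table scoring step might look like the following sketch. The table format (minimum count, points) and the names are hypothetical; the sheep break points shown are our reading of the printed Agricola scoring chart and should be checked against the rulebook rather than taken from this paper.

```python
import bisect

# Hypothetical table: (minimum count, points), sorted by minimum count.
# Break points are our reading of the Agricola sheep scoring row.
SHEEP_TABLE = [(0, -1), (1, 1), (4, 2), (6, 3), (8, 4)]

def lookup_points(count, table):
    """Return the points of the last table row whose minimum count <= count."""
    minimums = [m for m, _ in table]
    i = bisect.bisect_right(minimums, count) - 1
    return table[i][1]

def total_score(counts, tables):
    """Sum lookup-table points over every category present in `tables`."""
    return sum(lookup_points(counts.get(name, 0), table)
               for name, table in tables.items())
```

Keeping each category as data rather than as branching code means a wrong break point can be corrected in one place.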

Board Detection

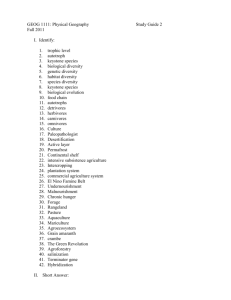

Fig. 1: Samples of the test images.

The board is detected by placing four color markers, similar to CCCs [2], at the four corners of the board. The image is converted to HSI values, and a narrow-band hue threshold is applied for each of the targets. The white marker centers are found by thresholding the intensity of the image. The markers are then located by matching the centroid of each colored circle to the centroid of a white center. The four detected markers correspond to known points in the board's geometry, and these correspondences are used to compute a transform that converts the image into an orthonormal (top-down) view. See Fig. 2 for an example of this normalization.
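A transform computed from four point correspondences is a planar homography. A self-contained sketch of the standard direct linear solution (with the bottom-right entry fixed to 1) is shown below; the pixel coordinates are made up, and in the project the destination points would come from the known board geometry.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def homography_from_points(src, dst):
    """3x3 homography H (with H[2][2] = 1) mapping 4 src points onto 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def apply_homography(H, x, y):
    """Map (x, y) through H, including the perspective division."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

# Marker centroids in the photo (a skewed quadrilateral) -> board corners.
photo = [(102.0, 87.0), (410.0, 95.0), (430.0, 380.0), (90.0, 360.0)]
board = [(0.0, 0.0), (500.0, 0.0), (500.0, 300.0), (0.0, 300.0)]
H = homography_from_points(photo, board)
```

To build the rectified image, each output pixel would be mapped back through the inverse of H and sampled from the photo. Four markers give exactly the eight equations needed, so any marker error passes straight into the transform.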

The normalized test image can be further subdivided into 54 regions, categorized as follows: 15 squares where most of the pieces are placed, 38 bordering regions where the fence pieces are placed, and a region at the top of the board where the player's 'stockpile' of extra pieces is kept. This subdivision reduces the complexity of the detection algorithms, since fewer pieces have to be detected at once and smaller images are faster to process.

Fig. 2: Test image (left) and orthonormal transformed image (right)
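One way to see where the counts of 15 squares and 38 fence regions come from is to index the 3 by 5 farmyard on a (2·3+1) by (2·5+1) grid, where cells with both coordinates odd are squares and cells with exactly one odd coordinate are fence slots. This indexing, the hypothetical pixel widths, and the helper names are our own illustration, and the sketch ignores the offset of the stockpile region at the top.

```python
def farm_regions(rows=3, cols=5):
    """Classify cells of a (2*rows+1) x (2*cols+1) grid into squares and fence slots.

    Cells with both coordinates odd are squares; cells with exactly one odd
    coordinate are fence slots; cells with both even are fence corners (unused).
    """
    squares, fences = [], []
    for r in range(2 * rows + 1):
        for c in range(2 * cols + 1):
            if r % 2 == 1 and c % 2 == 1:
                squares.append((r, c))
            elif (r + c) % 2 == 1:
                fences.append((r, c))
    return squares, fences

def axis_span(i, square_px, fence_px):
    """Pixel interval [start, end) of grid index i along one axis.

    Even indices are fence bands of width fence_px, odd indices are squares
    of width square_px (both widths are hypothetical calibration values).
    """
    start = ((i + 1) // 2) * fence_px + (i // 2) * square_px
    width = square_px if i % 2 == 1 else fence_px
    return start, start + width

squares, fences = farm_regions()
n_regions = len(squares) + len(fences) + 1  # +1 for the stockpile region
```

The fence slots split into 4 rows of 5 horizontal slots and 3 rows of 6 vertical slots, which gives 20 + 18 = 38.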

Tile Detection

The tiles that are placed on the board are of four different types: Wooden house, Clay house, Stone house, and Field. The SIFT algorithm with a coarse Hough space [9] is used to determine a match between a template and a test region. Since there are 16 different template images for each of the four tile types, up to 3,456 SIFT comparisons could be made (4 types × 16 templates × 54 regions). A few simplifications are made to increase the likelihood of matching and reduce the number of comparisons:

1. A full transformation between the matching sets of points is not calculated; only the difference between the matched descriptors is used as a rough transformation.

2. The angle of the transformation should be close to 90 degrees, so transforms outside of this

range can be discarded.

3. Any transformation that does not have a minimum number of points is discarded.

4. From the game rules, it is known that a player's house is built of only one type of material, so the checks for the other house types are skipped once one type is found.

5. If a match is found for one region, that region is not used for subsequent comparisons.
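The simplified matching rules above can be sketched as a coarse Hough vote over keypoint matches. The sketch assumes each match is given as a pair of keypoint frames (x, y, orientation in degrees) and, following simplification 1, uses only the frame differences as the rough transform. The bin sizes, the ±20° tolerance around 90° and the 4-vote minimum are illustrative values, not the project's settings.

```python
from collections import Counter

def coarse_hough_match(matches, expected_angle=90.0, angle_tol=20.0,
                       bin_px=20.0, bin_deg=30.0, min_votes=4):
    """Decide whether a template matches a region from SIFT keypoint matches.

    matches: list of ((x1, y1, a1), (x2, y2, a2)) pairs, template frame first,
    region frame second, orientations in degrees.
    """
    votes = Counter()
    for (x1, y1, a1), (x2, y2, a2) in matches:
        dtheta = (a2 - a1) % 360.0
        # Angular distance to the expected rotation, wrapped to [0, 180].
        err = abs((dtheta - expected_angle + 180.0) % 360.0 - 180.0)
        if err > angle_tol:
            continue  # simplification 2: discard implausible rotations
        # Simplification 1: frame differences act as a rough transform.
        key = (round((x2 - x1) / bin_px), round((y2 - y1) / bin_px),
               round(dtheta / bin_deg))
        votes[key] += 1
    # Simplification 3: require a minimum number of consistent matches.
    return bool(votes) and votes.most_common(1)[0][1] >= min_votes
```

Simplifications 4 and 5 would sit outside this function: the caller stops testing other house materials once one matches and drops a matched region from later template passes.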

Player Piece Detection

There are three types of pieces that are specific to a player on a board. For simplification, only one player color is considered for this project. The 'person' and 'stable' pieces are detected using the following method. A hue threshold is applied to a region to isolate the piece from the background. The binary threshold image is then morphologically closed and eroded to clean up the detection. The area and centroid of the isolated piece are then used to decide whether the piece is present. Since normalization can distort a piece so that it 'expands' into the neighboring regions, a detection is accepted only if the piece's centroid lies within a given radius of the center of the region (see Fig. 3).

Fig. 3: Player Piece detection. RGB image (left) and corresponding hue threshold (right). Note that the centroid for the fence from the neighboring region is much closer to the edge than the true detection.
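The area-and-centroid test can be sketched as follows, assuming the mask of one region has already been cleaned by closing and erosion so that it holds at most one blob. The function names, the minimum area and the radius are hypothetical.

```python
import math

def piece_present(mask, min_area, max_center_dist):
    """Accept a blob only if it is large enough and centered in the region.

    mask: 2-D list of booleans (cleaned hue threshold of one region).
    """
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if len(pts) < min_area:
        return False
    cr = sum(p[0] for p in pts) / len(pts)
    cc = sum(p[1] for p in pts) / len(pts)
    center = ((len(mask) - 1) / 2, (len(mask[0]) - 1) / 2)
    return math.dist((cr, cc), center) <= max_center_dist

def block_mask(size, rows, cols):
    """Square mask with a rectangular block of True pixels."""
    return [[r in rows and c in cols for c in range(size)] for r in range(size)]

centered = block_mask(9, range(3, 6), range(3, 6))
spill = block_mask(9, range(0, 3), range(6, 9))  # e.g. a piece from a neighbor
```

The `spill` mask has the same area as `centered` but sits in a corner, which is the situation Fig. 3 shows for a fence reaching in from the neighboring region.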

The 'fence' pieces are also isolated using a hue threshold, but instead of calculating region properties for the binary image, a simple percentage is calculated. If the fraction of blue pixels in the region is above a threshold (20%), a 'fence' piece is detected in that region.
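The percentage test is short enough to show in full. This sketch takes a region as a 2-D list of hue values in degrees; the 200° to 260° band for blue is a hypothetical value standing in for the experimentally chosen one.

```python
def hue_mask(hues, lo, hi):
    """Binary mask of hue values (degrees) inside [lo, hi]; wraps if lo > hi."""
    if lo <= hi:
        return [[lo <= h <= hi for h in row] for row in hues]
    return [[h >= lo or h <= hi for h in row] for row in hues]

def fence_present(hues, lo=200.0, hi=260.0, min_fraction=0.20):
    """Detect a fence when more than min_fraction of the region is blue."""
    mask = hue_mask(hues, lo, hi)
    on = sum(v for row in mask for v in row)  # True counts as 1
    total = sum(len(row) for row in mask)
    return on / total > min_fraction

def region(blue_rows, size=10, blue=230.0, other=40.0):
    """Toy square region whose top `blue_rows` rows are blue."""
    return [[blue if r < blue_rows else other for _ in range(size)]
            for r in range(size)]
```

The wrap-around branch matters for reds, whose hue band straddles 0°; blue does not need it.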

Livestock Piece Detection

There are three types of livestock pieces: the sheep (white), the boar (black), and the cattle (brown). Since they all share the same cube shape and occur in the same regions, they are treated the same way. Only regions without a field tile are checked, since the game rules do not allow livestock on fields. Thresholds on the HSI-converted region produce a binary detection: hue and saturation are used for the cattle, and saturation and intensity are used for the sheep and boar. Morphological operations are used to isolate a detection from noise, and the area of the resulting binary image is then compared to a threshold to confirm a detection.
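The HSI conversion and per-pixel tests could be sketched as below. The conversion uses the standard geometric HSI formulas (hue from an arccos expression, saturation from the minimum channel, intensity as the channel mean); the saturation, intensity and hue cut-offs are invented placeholders for the experimentally chosen thresholds.

```python
import math

def rgb_to_hsi(r, g, b):
    """Convert r, g, b in [0, 1] to (hue in degrees, saturation, intensity)."""
    total = r + g + b
    intensity = total / 3.0
    if total == 0:
        return 0.0, 0.0, 0.0
    saturation = 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        return 0.0, saturation, intensity  # gray pixel: hue undefined, report 0
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity

def classify_livestock_pixel(rgb):
    """Label one pixel using the hypothetical thresholds below (or None)."""
    h, s, i = rgb_to_hsi(*rgb)
    if s < 0.2 and i > 0.75:
        return "sheep"   # white: low saturation, high intensity
    if s < 0.2 and i < 0.2:
        return "boar"    # black: low saturation, low intensity
    if 10.0 <= h <= 40.0 and s > 0.3:
        return "cattle"  # brown: orange-red hue, moderate saturation
    return None
```

The resulting per-pixel labels would then be cleaned morphologically and compared against an area threshold, as described above.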

Crop Piece Detection

There are two types of crop pieces: the grain (yellow) piece and the vegetable (orange) piece. They are detected in a similar way to the player and livestock pieces: a hue threshold is applied to a region, and the region properties of the resulting 'blobs' are thresholded to confirm a detection. Knowledge of the game's rules is used to check for crop pieces only in the large top region and on top of field tiles. Since the pieces are rotationally symmetric discs, a much larger erosion element can be used to isolate each piece (see Fig. 4).

Fig. 4: Crop piece detection. The hue threshold is eroded to separate pieces that may be touching.
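The effect of a large erosion element can be reproduced in a small pure-Python sketch: two square 'pieces' joined by a one-pixel bridge form a single 4-connected blob until erosion removes the bridge. A square structuring element is used for brevity (a disk-shaped element would follow round pieces more closely), and the mask sizes are made up.

```python
def erode(mask, radius=1):
    """Binary erosion with a (2*radius+1)-wide square; outside pixels count as False."""
    h, w = len(mask), len(mask[0])

    def keep(r, c):
        for dr in range(-radius, radius + 1):
            for dc in range(-radius, radius + 1):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
                    return False
        return True

    return [[keep(r, c) for c in range(w)] for r in range(h)]

def count_blobs(mask, min_area=1):
    """Count 4-connected True regions with at least min_area pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            stack, area = [(r, c)], 0
            seen[r][c] = True
            while stack:
                cr, cc = stack.pop()
                area += 1
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if 0 <= nr < h and 0 <= nc < w and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            if area >= min_area:
                count += 1
    return count

# Two 5x5 "pieces" touching through a 1-pixel bridge at row 3, column 6.
touching = [[(1 <= r <= 5) and ((1 <= c <= 5) or (7 <= c <= 11) or (c == 6 and r == 3))
             for c in range(13)] for r in range(7)]
```

Erosion shrinks every piece as well, so the `min_area` threshold has to be set for the eroded size; this trade-off is why the large element suits the round crop pieces better than the cube-shaped livestock.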

Pasture Detection

Pastures are defined as any group of squares enclosed by fences on all sides. Since pastures can be of arbitrary shape and size, a hole-filling algorithm [10] is used. A representational 7 by 11 pixel binary image of the board is created, in which all the fence detections form the outlines of the pastures. This image is filled using Matlab's imfill function, and the filled image is XORed with the original representational image to produce an image that contains only the detected pastures. It is then a simple matter to count the pastures (see Fig. 5).

Fig. 5: Pasture detection. Representational image showing fences (left) and resulting pastures (right).
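The hole-filling step can be mimicked without Matlab by flood-filling the background from the image border: background pixels that are never reached are holes, which corresponds to filling holes and XORing with the original. The sketch assumes the 7 by 11 image puts farmyard squares at odd/odd cells and fence slots at cells with exactly one odd coordinate. It also fills a corner post whenever a neighboring fence slot is set, an assumption we add so that outlines stay closed under 4-connectivity. All names are hypothetical.

```python
from collections import deque

ROWS, COLS = 7, 11  # (2*3+1) x (2*5+1) grid for a 3x5 farmyard (assumed layout)
STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity

def build_grid(fence_cells):
    """Representational image: fence slots plus the corner posts that touch them."""
    grid = [[False] * COLS for _ in range(ROWS)]
    for r, c in fence_cells:
        grid[r][c] = True
    for r in range(0, ROWS, 2):
        for c in range(0, COLS, 2):
            if any(0 <= r + dr < ROWS and 0 <= c + dc < COLS and grid[r + dr][c + dc]
                   for dr, dc in STEPS):
                grid[r][c] = True
    return grid

def find_holes(grid):
    """Background pixels that cannot be reached from the border."""
    outside = [[False] * COLS for _ in range(ROWS)]
    queue = deque()
    for r in range(ROWS):
        for c in range(COLS):
            if (r in (0, ROWS - 1) or c in (0, COLS - 1)) and not grid[r][c]:
                outside[r][c] = True
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < ROWS and 0 <= nc < COLS and not grid[nr][nc] and not outside[nr][nc]:
                outside[nr][nc] = True
                queue.append((nr, nc))
    return [[not grid[r][c] and not outside[r][c] for c in range(COLS)] for r in range(ROWS)]

def count_pastures(fence_cells):
    """Count 4-connected hole regions, i.e. fenced pastures."""
    holes = find_holes(build_grid(fence_cells))
    seen, count = set(), 0
    for r in range(ROWS):
        for c in range(COLS):
            if not holes[r][c] or (r, c) in seen:
                continue
            count += 1
            stack = [(r, c)]
            seen.add((r, c))
            while stack:
                cr, cc = stack.pop()
                for dr, dc in STEPS:
                    nr, nc = cr + dr, cc + dc
                    if 0 <= nr < ROWS and 0 <= nc < COLS and holes[nr][nc] and (nr, nc) not in seen:
                        seen.add((nr, nc))
                        stack.append((nr, nc))
    return count

# Fence slots around square (1, 1), and around squares (1, 1) and (1, 3) together.
single = {(0, 1), (2, 1), (1, 0), (1, 2)}
double = {(0, 1), (0, 3), (2, 1), (2, 3), (1, 0), (1, 4)}
```

Without the corner-post rule, an open slot between two enclosed squares would touch an unfilled post on the board edge and leak to the outside, so no pasture would be found.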

Results

Board Detection and orthonormal image creation

The board detection algorithm took 19 seconds on average to run. Marker detection was successful on all but one image (9515.jpg), a success rate of 93.75% (15 of 16). It is believed that glare on the board from a nearby light over-saturated the image, affecting the threshold (see Fig. 6).

Fig. 6: Marker detection miss. Successfully detected marker (left) and unsuccessfully detected marker (right).

| Image | Calculated Score | Actual Score | Accuracy | Notes |
|---|---|---|---|---|
| 9512 | 10 | 11 | 90.91% | Missed sheep piece |
| 9513 | 17 | 18 | 94.44% | Missed cattle pieces |
| 9514 | 10 | 21 | 47.62% | SIFT mismatch |
| 9515 | 0 | 22 | 0.00% | Marker detect failure |
| 9516 | 32 | 33 | 96.97% | Missed cattle pieces |
| 9517 | 23 | 24 | 95.83% | Missed cattle pieces |
| 9518 | 29 | 29 | 100.00% | |
| 9519 | 25 | 26 | 96.15% | Missed stable |
| 9520 | 28 | 28 | 100.00% | |
| 9522 | 12 | 12 | 100.00% | |
| 9523 | 9 | 16 | 56.25% | Missed fence |
| 9524 | 19 | 23 | 82.61% | Missed fence |
| 9525 | 22 | 23 | 95.65% | Missed fence |
| 9526 | 24 | 25 | 96.00% | Missed fence |
| 9527 | 49 | 50 | 98.00% | Missed fence |
| 9530 | 22 | 25 | 88.00% | 2 persons stacked |

Table 1: Scoring results.

The calculated scores and the actual scores are compared in Table 1, where accuracy is the calculated score divided by the actual score. Averaged over the 16 test images, the method is 83.65% accurate. Interestingly, there were no false positives in detecting pieces, only false negatives. The only false positives were tile detections: a SIFT mismatch would count one tile as another type, and that detection would cause the checks for the other tile types to be skipped (see Fig. 7). Such a false positive would only occur if a person piece was not covering the center of the tile. It may be possible to avoid this by obscuring the center of the template images, but it is unknown whether this would affect the robustness of the detection.

Fig. 7: False positive tile detection.
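The Accuracy column of Table 1 matches the calculated score divided by the actual score, and the 83.65% figure matches the unweighted mean of that column. The short check below recomputes both from the transcribed table values; it is a verification aid, not part of the project code.

```python
# (image, calculated score, actual score) transcribed from Table 1.
RESULTS = [
    ("9512", 10, 11), ("9513", 17, 18), ("9514", 10, 21), ("9515", 0, 22),
    ("9516", 32, 33), ("9517", 23, 24), ("9518", 29, 29), ("9519", 25, 26),
    ("9520", 28, 28), ("9522", 12, 12), ("9523", 9, 16), ("9524", 19, 23),
    ("9525", 22, 23), ("9526", 24, 25), ("9527", 49, 50), ("9530", 22, 25),
]

per_image = [100.0 * calc / actual for _, calc, actual in RESULTS]
mean_accuracy = sum(per_image) / len(per_image)
print(f"mean accuracy over {len(RESULTS)} images: {mean_accuracy:.2f}%")
```

Because the mean is unweighted, the single marker failure (0% on image 9515) pulls the overall figure down by about six percentage points on its own.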

A fence piece would be missed if the piece was not completely within the region where it was checked (see Fig. 8). The regions were enlarged in an attempt to mitigate this, but a miss like this could also be attributed to player error, with the piece not placed in the correct region.

Fig. 8: Fence miss. Note that the borders of the square above and below the region are visible.

Livestock pieces would be missed if the pieces were touching (see Fig. 9). The threshold detection would treat the pieces as one large 'blob', so only one piece would be counted for multiple pieces. Several remedies were attempted, such as checking the area or bounding rectangle of a detection. The problem was exacerbated by occlusion of the pieces and by the distortion introduced by the orthonormal transformation, both of which led to greater variance in the detections.

Fig. 9: Livestock miss. Touching pieces are not isolated from each other in a hue threshold.

Discussion

This method for calculating the score is largely accurate, usually with only one miss accounting for the difference in score. In 8 of the 16 cases, the score was off by only one point, and in 3 further cases it was exact.

Errors in fence piece registration could be mitigated by further enlarging the region where the detection is checked. The game also allows a player to stack two person pieces on top of each other; for simplicity, this condition was not considered in this method. The biggest obstacle to correcting the score would be accounting for the cases where livestock pieces were touching. One way to reduce the distortion introduced by normalizing the board would be to require the picture to be taken from directly above. It would then be much easier to use the area of a detection to determine whether there were multiple pieces in a single 'blob'.
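Under the top-down assumption suggested above, the number of touching pieces in a blob could be estimated from its area. In this sketch the single-piece area would have to be calibrated (the 100-pixel value in the usage is invented), and a blob whose area is far from any whole multiple is flagged rather than guessed; the function name and tolerance are hypothetical.

```python
def pieces_in_blob(area, piece_area, tolerance=0.25):
    """Estimate how many equal-sized pieces make up a blob of the given area.

    Returns None when the area is not within `tolerance` (as a fraction of
    one piece's area) of a whole number of pieces, so the caller can flag it.
    """
    estimate = round(area / piece_area)
    if estimate < 1 or abs(area - estimate * piece_area) > tolerance * piece_area:
        return None
    return estimate
```

For example, `pieces_in_blob(205, 100)` estimates two touching pieces, while an area of 150 is ambiguous and returns None. Occlusion would still shrink blob areas, so this only helps once perspective distortion has been removed.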

The time to run the algorithm varied between 100 and 200 seconds. The main contributing factors are the board marker detection and the SIFT tile detection, and both could be improved. Marker detection relies on comparing the centroids of the colored markers to those of their white centers, which can result in thousands of region property comparisons. This could be reduced by using solid markers, as in Hislop's project [11], or a single marker (similar to an AR target) that gives the pose needed to determine the transformation. The SIFT feature descriptor could be replaced by a more compact alternative, such as BRIEF [8], PCA-SIFT [7], or SURF [12].

Before the development of this algorithm, a computer spreadsheet was created to help calculate the score. Determining each player's score with the spreadsheet takes a comparable or shorter time than this algorithm. If this algorithm were optimized, made more robust, and ported to a stand-alone program, it could become the preferred means of determining the score.

References

[1] “German-style board game,” Wikipedia, http://en.wikipedia.org/wiki/German-style_board_game, accessed May 7, 2012.

[2] L. Gatrell, W. Hoff, and C. Sklair, “Robust Image Features: Concentric Contrasting Circles and Their Image Extraction,” Proc. of Cooperative Intelligent Robotics in Space, Vol. 1612, SPIE, W. Stoney (ed.), 1991.

[3] C. Wang and J. Watada, “Robust Color Image Segmentation by Karhunen-Loeve Transform based Otsu Multi-thresholding and K-means Clustering,” 2011 Fifth International Conference on Genetic and Evolutionary Computing, pp. 377-380, 2011.

[4] N. Otsu, “A threshold selection from gray level histograms,” IEEE Trans. Systems, Man and Cybernetics, vol. 9, pp. 62-66, Mar. 1979.

[5] R. Szeliski, Computer Vision: Algorithms and Applications, Springer International, pp. 289-292, 2010.

[6] R. Szeliski, Computer Vision: Algorithms and Applications, Springer International, p. 223, 2010.

[7] Y. Ke and R. Sukthankar, “PCA-SIFT: A More Distinctive Representation for Local Image Descriptors,” in IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’2004), pp. 506-513, 2004.

[8] M. Calonder, V. Lepetit, C. Strecha, and P. Fua, “BRIEF: Binary Robust Independent Elementary Features,” in Proceedings of the European Conference on Computer Vision, 2010.

[9] W. Hoff, “EGGN 512: Computer Vision,” http://egdegrees.mines.edu/course/eggn512/lectures/, Colorado School of Mines, USA, accessed May 7, 2012.

[10] P. Soille, Morphological Image Analysis: Principles and Applications, Springer-Verlag, pp. 173-174, 1999.

[11] E. Hislop, “Augmented Reality for a Board Game,” Colorado School of Mines, W. Hoff (prof.), 2011.

[12] H. Bay, A. Ess, T. Tuytelaars, and L. Van Gool, “SURF: Speeded Up Robust Features,” Computer Vision and Image Understanding, vol. 110, pp. 346-359, 2008.

Appendix A: code

function score=scoring(fname)
% score=scoring(fname)
% Agricola board game AR project
% main scoring function.
% reads fname, and hopefully computes the score corresponding to that
% board.
% please make sure that the board has markers, and that the image is
% treated properly.
p=path;
if isempty(strfind(p,'vlfeat'))  % set up VLFeat only if it is not already on the path
    run('..\vlfeat-0.9.14\toolbox\vl_setup.m') % setup vlfeat toolbox
end
clear p
%% read file
% fname=input('enter file name:\n','s');
I=imread(fname);
%% detect board markers and normalise
[Inorm]=board_norm(I);
%crop image for processing.
crops=board_crop(Inorm);
pause(5)
%% Detect tiles
template_struct  % script that defines the stone, clay, wood and field templates used below
crops=sift_test(crops,stone);
crops=sift_test(crops,clay);
crops=sift_test(crops,wood);
crops=sift_test(crops,field);
%% Detect Stables
crops=stable_test(crops);
%% Detect Fences & Pastures
crops=fence_test(crops);
crops=pasture_test(crops);
%% Detect Persons
crops=person_test(crops);
%% Detect Livestock
crops=cow_test2(crops);
crops=sheep_test(crops);
crops=boar_test(crops);
%% Detect Vegetables/Wheat (Todo)
crops=wheat_test(crops);
crops=veg_test(crops);
%% Determine numbers
run score_list
squarelist=[8:2:16 24:2:32 40:2:48];  % crops indices of the 15 farmyard squares (5 per row)
% arrays
awheat=[crops.wheat];
aveg=[crops.veg];