Lecture 24


16.322 Stochastic Estimation and Control, Fall 2004

Prof. Vander Velde


Last time:

$$\frac{d}{dt} X(t) = A(t) X(t) + X(t) A(t)^T + B(t) N(t) B(t)^T$$

If the system reaches a statistical steady state, the covariance matrix is constant. For this to happen the system must be time invariant (constant $A$, $B$ and $N$), so that

$$\frac{d}{dt} X(t) = AX + XA^T + BNB^T = 0$$

and the steady state covariance matrix can be solved for from this algebraic equation.
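As a rough illustration of solving this algebraic equation numerically, the sketch below vectorizes $AX + XA^T = -BNB^T$ with Kronecker products and solves the resulting linear system. The function name `steady_state_covariance` and the second-order example system are assumptions made for illustration only; the approach also assumes $A$ is stable so that a steady state exists.

```python
import numpy as np

def steady_state_covariance(A, B, N):
    """Solve A X + X A^T + B N B^T = 0 for X by vectorizing the equation."""
    n = A.shape[0]
    Q = B @ N @ B.T
    I = np.eye(n)
    # With row-major flattening, vec(A X) = kron(A, I) vec(X)
    # and vec(X A^T) = kron(I, A) vec(X).
    M = np.kron(A, I) + np.kron(I, A)
    X = np.linalg.solve(M, -Q.reshape(-1)).reshape(n, n)
    return 0.5 * (X + X.T)  # remove round-off asymmetry

# Hypothetical stable example: x'' + 3 x' + 2 x = n with unit noise intensity
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
B = np.array([[0.0], [1.0]])
N = np.array([[1.0]])
X_ss = steady_state_covariance(A, B, N)
residual = A @ X_ss + X_ss @ A.T + B @ N @ B.T  # should be numerically zero
```

For this example the equation can also be solved by hand, giving a position variance of 1/12 and a velocity variance of 1/6 with zero cross-covariance.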

Note that $X(t)$ is symmetric.

If $[A, B]$ is controllable and $X(t_0) > 0$, then $X(t) > 0$ for all $t$.

If, in addition, $[A, C]$ is observable, then $\mathrm{Cov}[y(t)] > 0$ for all $t$.

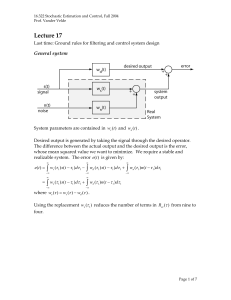

Kalman Filter

Our text treats two forms of the Kalman filter:

• Discrete time filter

Based on the model

o System: $x(k+1) = \phi_k x(k) + w_k$

o Measurements: $z_k = H_k x(k) + v_k$

• Continuous time filter

o System: $\dot{x} = Fx + Gw$

o Measurements: $z = Hx + v$

The most common practical situation is neither of these. In the aerospace area,

and many other application areas as well, we are interested in dynamic systems

that evolve continuously in time, so we must at least start with a continuous

system model. The system may be driven by a known command input and may

be subject to random disturbances. If the given disturbances cannot be well

approximated as white, then a shaping filter must be added to shape the given

disturbance spectrum from white noise.


The augmented system is modeled as

$$\dot{x} = A(t) x + G(t) u + B(t) n$$

where $n(t)$ is an unbiased white noise with correlation function

$$E[n(t) n(\tau)^T] = N(t) \delta(t - \tau)$$
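To make the augmentation concrete, here is a minimal sketch that appends a shaping filter state to a plant. It assumes, purely for illustration, that the disturbance $d$ is well modeled as a first-order process $\dot{d} = -d/\tau + n$ driven by white noise; the function name, the double-integrator plant and the value of `tau` are all hypothetical.

```python
import numpy as np

def augment_with_shaping_filter(A_p, B_d, G_p, tau):
    """Append a first-order shaping filter state d (d' = -d/tau + n)
    to a plant x_p' = A_p x_p + G_p u + B_d d.
    Returns (A, G, B) for the augmented state [x_p; d]."""
    n = A_p.shape[0]
    A = np.block([[A_p, B_d],
                  [np.zeros((1, n)), np.array([[-1.0 / tau]])]])
    G = np.vstack([G_p, np.zeros((1, G_p.shape[1]))])
    # White noise enters only through the shaping filter state
    B = np.vstack([np.zeros((n, 1)), np.array([[1.0]])])
    return A, G, B

# Hypothetical plant: double integrator with a force command
# and a time-correlated force disturbance
A_p = np.array([[0.0, 1.0], [0.0, 0.0]])
G_p = np.array([[0.0], [1.0]])
B_d = np.array([[0.0], [1.0]])
A, G, B = augment_with_shaping_filter(A_p, B_d, G_p, tau=5.0)
```

The augmented matrices then play the roles of $A$, $G$ and $B$ in the model above, with the white noise $n$ driving only the added state.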

We allow the system matrices to be time varying. The most common reason for dealing with time varying linear dynamics is that we have linearized nonlinear dynamics about some trajectory.

But although the system dynamics are continuous in time, we do not process

measurements continuously. The Kalman filter involves significant

computations, and those computations are universally executed in a digital

computer. Since a digital computer works on a cyclic basis, the measurements

are processed at discrete points in time.

The measurements are modeled as

$$z_k = H_k x(t_k) + v_k$$

where $v_k$ is an unbiased, independent noise sequence with

$$E[v_k v_k^T] = R_k$$

If the measurements have a noise component which is correlated in time, a continuous time shaping filter, designed to give the proper correlation for the noise samples, must be added to the augmented system model. We assume here that this augmentation, if needed, has been done.

Although we can allow for a correlated measurement noise component, it is

essential that there be an independent noise component as well. If there is no

independent noise component, the whole nature of the optimum filter changes.

So the physical situation of most common interest is a hybrid one:


• System dynamics are continuous in time

• Measurements are processed at discrete points in time

Where a discrete time measurement is processed, there is a discontinuity in the estimate, the estimate error, and the error covariance matrix. We use the superscript "−" to indicate values before incorporating the measurement at a measurement time and the superscript "+" to indicate values after incorporating the measurement at a measurement time.

The filter operates in a sequence of two-part steps:

1. Propagate $\hat{x}(t)$ and $P(t)$ in time, starting from $\hat{x}^+(t_{k-1})$ and $P^+(t_{k-1})$, to $\hat{x}^-(t_k)$ and $P^-(t_k)$

2. Incorporate the measurement at $t_k$ to produce $\hat{x}^+(t_k)$ and $P^+(t_k)$
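The two-part cycle can be sketched for a scalar system before working through the details. This is only an illustration under assumptions: the system is $\dot{x} = ax + n$ with no command input, the propagation uses the closed-form solution of the scalar mean and variance equations, and the update uses the gain derived at the end of these notes. All numerical values and function names are hypothetical.

```python
import math

def propagate(x_hat, P, a, N, dt):
    """Scalar time propagation for x' = a x + n (a != 0, no command input):
    closed-form solution of x_hat' = a x_hat and P' = 2 a P + N."""
    phi = math.exp(a * dt)
    x_minus = phi * x_hat
    P_minus = phi * phi * P + N * (phi * phi - 1.0) / (2.0 * a)
    return x_minus, P_minus

def update(x_minus, P_minus, z, h, R):
    """Scalar measurement update for z = h x + v with noise variance R."""
    K = P_minus * h / (h * P_minus * h + R)
    x_plus = x_minus + K * (z - h * x_minus)
    P_plus = (1.0 - K * h) ** 2 * P_minus + K * K * R
    return x_plus, P_plus

# Hypothetical numbers
a, N, h, R, dt = -0.5, 0.2, 1.0, 0.1, 1.0
x_hat, P = 0.0, 1.0
measurements = [0.9, 0.4, 0.3]
history = []
for z in measurements:
    x_minus, P_minus = propagate(x_hat, P, a, N, dt)   # step 1
    x_hat, P = update(x_minus, P_minus, z, h, R)       # step 2
    history.append((P_minus, P))
```

Each entry of `history` holds the variance just before and just after a measurement, which shows the discontinuity at each measurement time.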

Time Propagation

After processing the measurement at $t_{k-1}$ we have the estimate $\hat{x}^+(t_{k-1})$ and the estimation error covariance matrix $P^+(t_{k-1})$.

Then the filter operates open loop until the next measurement point at $t_k$. Note that we are not considering any effects of computation delays here.

In the interval from $t_{k-1}$ to $t_k$ the system dynamics are described as

$$\dot{x} = A(t) x + G(t) u + B(t) n$$

The estimate $\hat{x}^+(t_{k-1})$ is the mean of the distribution of $x$ conditioned on all the measurements processed up to that point, so the estimate error is zero mean. We want to keep the error unbiased until the next measurement, and we can achieve this by driving the estimate with the mean, or expectation, of the system dynamics.


$$\dot{\hat{x}} = A(t) \hat{x} + G(t) u$$

$$\hat{x}(t_{k-1}) = \hat{x}^+(t_{k-1})$$

The error dynamics are then

$$e(t) = x(t) - \hat{x}(t)$$

$$\dot{e} = \dot{x} - \dot{\hat{x}} = A(t) e + B(t) n$$

The mean of the error then satisfies

$$\dot{\bar{e}} = A(t) \bar{e}$$

since $\bar{n}(t) = 0$, and since the initial condition is

$$\bar{e}(t_{k-1}) = 0$$

we have $\bar{e}(t) = 0$ on the interval $(t_{k-1}^+, t_k^-)$.

The error dynamics have the form of a linear system driven by white noise, which is the form of dynamics we just analyzed. The covariance matrix for the estimation error therefore satisfies the differential equation

$$\dot{P} = AP + PA^T + BNB^T$$

with the initial condition

$$P(t_{k-1}) = P^+(t_{k-1})$$

So the time propagation step can be taken by integrating the differential equations

$$\dot{\hat{x}} = A\hat{x} + Gu, \qquad \hat{x}(t_{k-1}) = \hat{x}^+(t_{k-1})$$

$$\dot{P} = AP + PA^T + BNB^T, \qquad P(t_{k-1}) = P^+(t_{k-1})$$
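One way to carry out this integration numerically is a fixed-step fourth-order Runge-Kutta scheme applied to the estimate and covariance together. The sketch below is an assumption about implementation, not a method prescribed in these notes; the function names, the step count and the scalar check case are hypothetical, and the system matrices are passed as functions of time to allow time-varying dynamics.

```python
import numpy as np

def propagate(x_hat, P, A, G, B, N, u, t0, t1, steps=100):
    """Integrate x_hat' = A x_hat + G u and P' = A P + P A^T + B N B^T
    from t0 to t1 with fixed-step RK4. A, G, B, N, u are callables of t."""
    def deriv(t, x, P):
        At, Bt = A(t), B(t)
        dx = At @ x + G(t) @ u(t)
        dP = At @ P + P @ At.T + Bt @ N(t) @ Bt.T
        return dx, dP

    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1x, k1P = deriv(t, x_hat, P)
        k2x, k2P = deriv(t + h / 2, x_hat + h / 2 * k1x, P + h / 2 * k1P)
        k3x, k3P = deriv(t + h / 2, x_hat + h / 2 * k2x, P + h / 2 * k2P)
        k4x, k4P = deriv(t + h, x_hat + h * k3x, P + h * k3P)
        x_hat = x_hat + h / 6 * (k1x + 2 * k2x + 2 * k3x + k4x)
        P = P + h / 6 * (k1P + 2 * k2P + 2 * k3P + k4P)
        t += h
    return x_hat, 0.5 * (P + P.T)  # symmetrize against round-off

def const(value):
    """Helper: a 1x1 matrix-valued function of time that ignores t."""
    return lambda t: np.array([[value]])

# Hypothetical scalar check: x' = -x + n with N = 2 and P(0) = 0,
# for which P(t) = 1 - exp(-2 t) and x_hat(t) = exp(-t) x_hat(0)
x1, P1 = propagate(np.array([1.0]), np.zeros((1, 1)),
                   A=const(-1.0), G=const(0.0), B=const(1.0), N=const(2.0),
                   u=lambda t: np.array([0.0]), t0=0.0, t1=1.0)
```

Integrating $\hat{x}$ and $P$ in the same loop keeps both evaluated at the same times, which matters when $A$ depends on the trajectory being estimated.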

Both of these relations can be expressed in the form of discrete time transitions, but especially if $A$ and/or $B$ and/or $G$ are time-varying, this may not be convenient.

The transition matrix would be the solution to the differential equation

$$\frac{d}{dt} \Phi(t, t_{k-1}) = A(t) \Phi(t, t_{k-1})$$

with the initial condition

$$\Phi(t_{k-1}, t_{k-1}) = I$$
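As an illustration, the transition matrix can be obtained by integrating this matrix differential equation numerically. The following is a sketch using fixed-step RK4; the function name `transition_matrix`, the step count and the double-integrator test case are assumptions chosen for the example.

```python
import numpy as np

def transition_matrix(A, t0, t1, steps=200):
    """Integrate dPhi/dt = A(t) Phi with Phi(t0, t0) = I using fixed-step RK4.
    A is a callable returning the system matrix at time t."""
    Phi = np.eye(A(t0).shape[0])
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = A(t) @ Phi
        k2 = A(t + h / 2) @ (Phi + h / 2 * k1)
        k3 = A(t + h / 2) @ (Phi + h / 2 * k2)
        k4 = A(t + h) @ (Phi + h * k3)
        Phi = Phi + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return Phi

def double_integrator(t):
    """Hypothetical constant system matrix for position and velocity."""
    return np.array([[0.0, 1.0], [0.0, 0.0]])

# For the double integrator, Phi(t1, t0) = [[1, t1 - t0], [0, 1]]
Phi = transition_matrix(double_integrator, 0.0, 2.0)
```

When $A$ is constant, the same matrix is the matrix exponential of $A(t - t_{k-1})$, so the numerical integration is mainly of interest in the time-varying case.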

Then the estimate at the next measurement point would be given by


$$\hat{x}^-(t_k) = \Phi(t_k, t_{k-1}) \hat{x}^+(t_{k-1}) + \int_{t_{k-1}}^{t_k} \Phi(t_k, \tau) G(\tau) u(\tau) \, d\tau$$

Note the simplification if $u(\tau)$ is constant over this interval. The corresponding covariance matrix would be

$$P^-(t_k) = \Phi(t_k, t_{k-1}) P^+(t_{k-1}) \Phi(t_k, t_{k-1})^T + \int_{t_{k-1}}^{t_k} \Phi(t_k, \tau) B(\tau) N(\tau) B(\tau)^T \Phi(t_k, \tau)^T \, d\tau$$

Both of these expressions are much more complex to deal with than would be the

case if one were just given a discrete time model of the system dynamics – such

as is done in the text.

$$x_{k+1} = \phi_k x_k + G_k u_k + w_k$$

$$\hat{x}^-_{k+1} = \phi_k \hat{x}^+_k + G_k u_k$$

$$P^-_{k+1} = \phi_k P^+_k \phi_k^T + Q_k$$

where $Q_k = \overline{w_k w_k^T}$.

If you wanted to express continuous time dynamics in discrete time form, you could only do it if $u(\tau)$ were constant ($= u_k$) in $(t_k, t_{k+1})$. Then

$$\phi_k = \Phi(t_{k+1}, t_k)$$

$$G_k = \int_{t_k}^{t_{k+1}} \Phi(t_{k+1}, \tau) G(\tau) \, d\tau$$

$$w_k = \int_{t_k}^{t_{k+1}} \Phi(t_{k+1}, \tau) B(\tau) n(\tau) \, d\tau$$

$$Q_k = \overline{w_k w_k^T} = \int_{t_k}^{t_{k+1}} \Phi(t_{k+1}, \tau) B(\tau) N(\tau) B(\tau)^T \Phi(t_{k+1}, \tau)^T \, d\tau$$
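For the special case of constant $A$, $G$, $B$ and $N$, these integrals can be evaluated numerically. The sketch below assumes time invariance, so that $\Phi(t_{k+1}, \tau) = e^{A(t_{k+1} - \tau)}$, and uses a truncated Taylor series for the matrix exponential with Simpson quadrature for the integrals. These numerical choices, the function names and the double-integrator example are assumptions for illustration; a production code would more likely use a library matrix exponential.

```python
import numpy as np

def expm_series(M, terms=30):
    """Matrix exponential by truncated Taylor series (adequate for small ||M||)."""
    out = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for i in range(1, terms):
        term = term @ M / i
        out = out + term
    return out

def discretize(A, G, B, N, T, panels=200):
    """phi_k, G_k, Q_k for constant A, G, B, N over a step of length T.
    Integrals use composite Simpson quadrature (panels must be even)."""
    phi = expm_series(A * T)
    s = np.linspace(0.0, T, panels + 1)   # s = t_{k+1} - tau
    w = np.ones(panels + 1)
    w[1:-1:2] = 4.0                       # Simpson weights 1, 4, 2, ..., 4, 1
    w[2:-1:2] = 2.0
    w *= (T / panels) / 3.0
    Gk = np.zeros(G.shape)
    Qk = np.zeros(A.shape)
    BNBt = B @ N @ B.T
    for si, wi in zip(s, w):
        Phi_s = expm_series(A * si)
        Gk += wi * (Phi_s @ G)
        Qk += wi * (Phi_s @ BNBt @ Phi_s.T)
    return phi, Gk, Qk

# Hypothetical example: double integrator driven by unit-intensity white noise
A = np.array([[0.0, 1.0], [0.0, 0.0]])
G = np.array([[0.0], [1.0]])
B = np.array([[0.0], [1.0]])
N = np.array([[1.0]])
T = 0.1
phi, Gk, Qk = discretize(A, G, B, N, T)
```

For this example the integrals can be done by hand: $G_k = [T^2/2, \; T]^T$ and $Q_k$ has entries $T^3/3$, $T^2/2$, $T^2/2$, $T$, which gives a quick check on the quadrature.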

Measurement Update

At the next measurement point, $t_k$, we have the a priori estimate, $\hat{x}^-(t_k)$, its error covariance matrix, $P^-(t_k)$, and a new measurement of the form

$$z_k = H_k x(t_k) + v_k$$

where the noises, $v_k$, are an unbiased, independent sequence of random variables with covariance matrix, $R_k$.


$$E[v_k v_k^T] = R_k$$

We wish to incorporate the information contained in this measurement in our

estimate of the state.

To this end, postulate an updated estimate, $\hat{x}^+$, in the form of a general linear combination of the a priori estimate and the measurement. For simplicity I will suppress the time arguments.

$$\hat{x}^+ = K_1 \hat{x}^- + K_2 z$$

We wish to choose $K_1$ and $K_2$ so as to:

1. Preserve the estimation error unbiased

2. Minimize the a posteriori error variance

The a posteriori error is

$$e^+ = x - \hat{x}^+ = x - K_1 \hat{x}^- - K_2 z$$

$$e^+ = x - K_1 (x - e^-) - K_2 (Hx + v) = (I - K_1 - K_2 H) x + K_1 e^- - K_2 v$$

The mean a posteriori error is (remember that the a priori error and the measurement noise are zero-mean)

$$\bar{e}^+ = (I - K_1 - K_2 H) \bar{x}$$

We want this to be zero for all $\bar{x}$. Therefore

$$K_1 = I - K_2 H$$

With this choice of $K_1$,

$$\bar{e}^+ = 0$$

and

$$\hat{x}^+ = (I - K_2 H) \hat{x}^- + K_2 z = \hat{x}^- + K_2 (z - H \hat{x}^-)$$

So the updated estimate is the a priori estimate plus a gain times the

measurement residual. We observed this form earlier when discussing

estimation in general.

Now we wish to find the $K_2$ that minimizes the a posteriori error variance.


$$\hat{x}^+ = \hat{x}^- + K_2 (Hx + v - H \hat{x}^-) = \hat{x}^- + K_2 (H e^- + v)$$

$$e^+ = x - \hat{x}^+ = x - \hat{x}^- - K_2 (H e^- + v) = (I - K_2 H) e^- - K_2 v$$

Note that $v$ is independent of $e^-$, so the cross terms have zero expectation.

$$P^+ = E[e^+ e^{+T}]$$

$$= (I - K_2 H) \, \overline{e^- e^{-T}} \, (I - K_2 H)^T + K_2 \, \overline{v v^T} \, K_2^T$$

$$= (I - K_2 H) P^- (I - K_2 H)^T + K_2 R K_2^T$$
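Because this covariance expression holds for any gain $K_2$, not only the optimal one, it can be coded as a general update. The sketch below is illustrative only: the function name `update_with_gain` and the two-state numbers are hypothetical, and the gains used are deliberately arbitrary rather than optimal.

```python
import numpy as np

def update_with_gain(x_minus, P_minus, z, H, R, K):
    """Unbiased linear update for an arbitrary gain K (K plays the role of K_2)."""
    I = np.eye(P_minus.shape[0])
    x_plus = x_minus + K @ (z - H @ x_minus)
    IKH = I - K @ H
    P_plus = IKH @ P_minus @ IKH.T + K @ R @ K.T
    return x_plus, P_plus

# Hypothetical two-state example with a measurement of the first state
x_minus = np.array([1.0, 0.0])
P_minus = np.array([[4.0, 1.0], [1.0, 2.0]])
H = np.array([[1.0, 0.0]])
R = np.array([[1.0]])
z = np.array([2.0])
K_zero = np.zeros((2, 1))            # ignores the measurement entirely
K_guess = np.array([[0.5], [0.0]])   # an arbitrary, non-optimal gain
x0, P0 = update_with_gain(x_minus, P_minus, z, H, R, K_zero)
x1, P1 = update_with_gain(x_minus, P_minus, z, H, R, K_guess)
```

With a zero gain the estimate and covariance are unchanged, as expected. The arbitrary gain still gives a valid symmetric covariance, just not the smallest one.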

Our optimization criterion will be taken to be the sum of the variances of the a posteriori estimation errors. The sum of the error variances is the trace of $P^+$.

$$\min_{K_2} J = \mathrm{tr}(P^+) = \mathrm{tr}\left[ (I - K_2 H) P^- (I - K_2 H)^T \right] + \mathrm{tr}\left[ K_2 R K_2^T \right]$$

We use the derivative form

$$\frac{\partial}{\partial A} \left[ \mathrm{tr}(A B A^T) \right] = 2AB$$

if $B$ is symmetric.
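This identity is easy to check numerically with finite differences. The sketch below compares a central-difference gradient of $\mathrm{tr}(ABA^T)$ with $2AB$ for a randomly generated $A$ and symmetric $B$; the helper names, matrix sizes and random seed are arbitrary choices for illustration.

```python
import numpy as np

def trace_quadratic(A, B):
    """Scalar function tr(A B A^T)."""
    return np.trace(A @ B @ A.T)

def numerical_gradient(f, A, eps=1e-6):
    """Central-difference gradient of a scalar function f
    with respect to each entry of the matrix A."""
    grad = np.zeros_like(A)
    for i in range(A.shape[0]):
        for j in range(A.shape[1]):
            dA = np.zeros_like(A)
            dA[i, j] = eps
            grad[i, j] = (f(A + dA) - f(A - dA)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3))
S = rng.standard_normal((3, 3))
B = S + S.T                                   # symmetric by construction
grad_numeric = numerical_gradient(lambda M: trace_quadratic(M, B), A)
grad_formula = 2 * A @ B
```

Since the function is quadratic in $A$, the central difference agrees with the formula up to round-off.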

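Applying this form to each term of $J$, with the chain rule contributing the factor $-H^T$ from $(I - K_2 H)$ in the first term:

$$\frac{\partial J}{\partial K_2} = 2 \left\{ (I - K_2 H) P^- (-H^T) + K_2 R \right\}$$

$$= 2 \left\{ -P^- H^T + K_2 (H P^- H^T + R) \right\} = 0$$

$$K_2 = P^- H^T (H P^- H^T + R)^{-1}$$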

This is known as the Kalman gain.
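As a numerical sanity check on the optimality claim, the sketch below computes the gain for a small example and confirms that randomly perturbed gains give a larger trace of the a posteriori covariance. The function names, the two-state numbers and the perturbation size are hypothetical. The last line also evaluates $(I - K_2 H) P^-$, a standard simplification of the covariance that holds only at the optimal gain and is not derived in these notes.

```python
import numpy as np

def kalman_gain(P_minus, H, R):
    """K = P^- H^T (H P^- H^T + R)^{-1}, computed by solving K S = P^- H^T."""
    S = H @ P_minus @ H.T + R
    return np.linalg.solve(S.T, (P_minus @ H.T).T).T

def posterior_covariance(P_minus, H, R, K):
    """A posteriori covariance for any gain K."""
    IKH = np.eye(P_minus.shape[0]) - K @ H
    return IKH @ P_minus @ IKH.T + K @ R @ K.T

# Hypothetical two-state example with a measurement of the first state
P_minus = np.array([[4.0, 1.0], [1.0, 2.0]])
H = np.array([[1.0, 0.0]])
R = np.array([[1.0]])

K = kalman_gain(P_minus, H, R)
P_plus = posterior_covariance(P_minus, H, R, K)
J_opt = np.trace(P_plus)

# Perturb the gain in random directions; each perturbed gain should do worse
rng = np.random.default_rng(1)
J_perturbed = [
    np.trace(posterior_covariance(P_minus, H, R,
                                  K + 0.1 * rng.standard_normal(K.shape)))
    for _ in range(20)
]

P_plus_short = (np.eye(2) - K @ H) @ P_minus  # valid only for the optimal gain
```

Solving the linear system instead of forming the inverse is a common numerical preference; for a scalar measurement as here, the two are equivalent.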
