( ) Lecture 13

advertisement

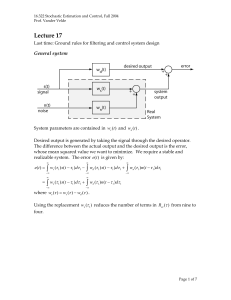

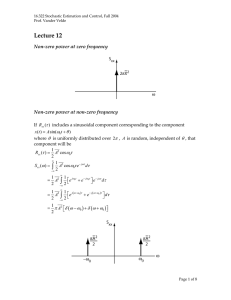

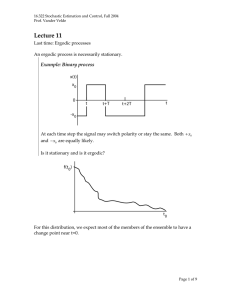

16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde Lecture 13 Last time: ⎧ 2 ⎛ τ ⎞ 2 ⎪a + ⎜ 1 − ⎟ σ a , Rxx (τ ) = ⎨ ⎝ T⎠ ⎪a 2, ⎩ S xx (ω ) = ∞ ∫a 2 − jωτ e dτ + −∞ T ∫σ 2 a −T = 2π a δ (ω ) + 2σ 2 T 2 a ⎛ (τ ≤T) (τ >T) τ ⎞ − jωτ ⎛ ⎜ 1 − ⎟ e dτ ⎝ T⎠ τ⎞ ∫ ⎜⎝1 − T ⎟⎠ cos ωτ dτ 0 = 2π a 2δ (ω ) + 2σ (1 − cos T ω ) Tω 2 a 2 ⎛ ⎛ Tω ⎞ ⎞ ⎜ sin ⎜ 2 ⎟ ⎟ 2 2 ⎠⎟ = 2π a δ (ω ) + T σ a ⎜ ⎝ T ω ⎛ ⎞ ⎜ ⎟ ⎜ ⎜ 2 ⎟ ⎟ ⎠ ⎠ ⎝ ⎝ 2 Amplitude of S xx falls off, but not very rapidly. Page 1 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde Use error between early and late indicator to lock onto signal. Error is a linear function of shift, within the range ( −T , T ) . Return to the 1st example process and take the case where the change points are Poisson distributed. 2λσ a2 ω2 + λ 2 Take the limit of this as σ a2 and λ become large in a particular relation: to establish the desired relation, replace S xx (ω ) = σ a2 → kσ a2 λ → kλ 2k λ kσ a2 ω 2 + (k λ )2 and take the limit as k → ∞ . S xx (ω ) = lim S xx (ω ) = lim k →∞ 2k 2λσ a2 k →∞ = ( kλ ) 2 2σ a2 λ Note this is independent of frequency. Page 2 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde This is defined to be a “white noise” by analogy with white light, which is supposed to have equal participation by all wavelengths. Can shape x(t ) to the correct spectrum so that it can be analyzed in this manner, by adding a shaping filter in the state-space formulation. Definition of a white noise process White means constant spectral density. S xx (ω ) = S0 , constant Rxx (τ ) = 1 2π ∞ ∫Se 0 jτω dω −∞ = S0δ (τ ) White noise processes only have a defined power density. The variance of a white noise process is not defined. If you start with almost any process x (t ) , lim a x ( at ) ⇒ is a white noise a →∞ Page 3 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde So far we have looked only at the Rxx and S xx of processes. We shall find that if we wish to determine only the R yy or S yy (thus the y 2 ) of outputs of linear systems, all we need to know about the inputs are their Rxx or S xx . But what if we wanted to know the probability that the error in a dynamic system would exceed some bound? For this we need the first probability density function of the system error – an output. Very difficult in general. The pdf of the output y (t ) satisfies the Fokker-Planck partial differential equation – also called the Kolmagorov forward equation. Applies to a continuous dynamic system driven by a white noise process. One case is easy: Gaussian process into a linear system, output is Gaussian. Gaussian processes are defined by the property that probability density functions of all order are normal functions. f n ( x1 , t1; x2 , t2 ;... xn , tn ) = n-dimensional normal fn ( x) = 1 (2π ) n 2 e 1 − x T M −1 x 2 M M is the covariance matrix for ⎡ x (t1 ) ⎤ ⎢ x (t ) ⎥ x=⎢ 2 ⎥ ⎢ M ⎥ ⎢ ⎥ ⎣ x ( tn ) ⎦ Thus, f n ( x ) for all n is determined by M - the covariance matrix for x . Page 4 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde M ij = x (ti ) x (t j ) = Rxx (ti , t j ) Thus for a Gaussian process, the autocorrelation function completely defines all the statistical properties of the process since it defines the probability density functions of all order. This means: ( ) If R yx (ti , t j ) = Rxx t j − ti , the process is stationary. If two processes x (t ), y (t ) are jointly Gaussian, and are uncorrelated (R xy (ti , t j ) = 0 ) , they are independent processes. Most important: Gaussian input Æ linear system Æ Gaussian output. In this case all the statistical properties of the output are determined by the correlation function of the output – for which we shall require only the correlation functions for the inputs. Upcoming lectures will not cover several sections that deal with: Narrow band Gaussian processes Fast Fourier Transform Pseudorandom binary coded signals These are important topics for your general knowledge. Characteristics of Linear Systems Definition of linear system: If u1 (t ) → y1 (t ) u2 (t ) → y2 (t ) Then au1 (t ) + bu2 (t ) → ay1 (t ) + by2 (t ) Page 5 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde By characterizing the response to a standard input, the response to any input can be constructed by superposing responses using the system’s linearity. w(t ,τ ) is the weighting function. y (t ) = lim ∆τ i →0 ∑ w(t,τ i )u(τ i )∆τ i → i t ∫ w(t,τ )u(τ )dτ −∞ The central limit theorem says y (t ) → normal if u(t ) is white noise. Stable if every bounded input gives rise to a bounded output. ∞ ∫ w(t ,τ dτ = const. < ∞ ⇒ bounded for all t −∞ Realizable if causal. w(t ,τ ) = 0, ( t < τ ) State Space – An alternate characterization for a linear differential system Page 6 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde If the input and output are related by an nth order linear differential equation, once can also relate input to output by a set of n linear first order differential equations. x& (t ) = A(t ) x (t ) + B(t ) u (t ) y (t ) = C (t ) x (t ) The solution form is: y (t ) = C (t ) x (t ) t x (t ) = Φ (t , t0 ) x (t0 ) + ∫ Φ (t ,τ ) B(τ ) u (τ )dτ t0 where Φ (t ,τ ) satisfies: d Φ (t ,τ ) = A(t )Φ (t ,τ ), Φ (τ ,τ ) = I dt Note that any system which can be cast in this form is not only mathematically realizable but practically realizable as well. Must add a gain times u to y to get as many zeroes as poles. For comparison with the weighting function description, take u and y to be scalars, and take t0 = −∞ . For stable systems, the transition from −∞ to any finite time is zero. Specialize the state space model to single-input, single-output (SISO) and t0 → −∞ : Φ (t , −∞) = 0 y ( t ) = C ( t )T x ( t ) t x (t ) = ∫ Φ(t,τ )b(τ )u(τ )dτ −∞ t y (t ) = ∫ C (t ) T Φ (t ,τ ) b (τ )u(τ )dτ −∞ w(t ,τ ) = C (t )T Φ (t ,τ ) b (τ ) which we recognize by comparison with the earlier expression for y (t ) . Page 7 of 8 16.322 Stochastic Estimation and Control, Fall 2004 Prof. Vander Velde For an invariant system, the shape of w(t ,τ ) is independent of the time the input was applied; the output depends only on the elapsed time since the application of the input. w(t ,τ ) → w(t − τ ) = w(t ), τ = 0 by convention Stability: ∞ ∫ w(t − τ ) dτ = −∞ ∞ ∫ w(t ) dt = const. < ∞ −∞ Note this implies w(t ) is Fourier transformable. Realizability: w(t − τ ) = 0, (t < τ ) w(t ) = 0, (t < 0) Page 8 of 8