EFFECTS OF INCORPORATING CLASSROOM PERFORMANCE SYSTEMS WITH

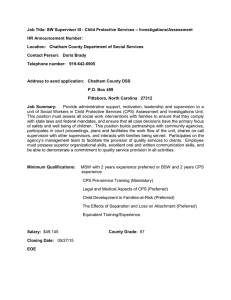
